Author: Denis Avetisyan

A new framework leverages expert knowledge to help artificial intelligence reliably detect anomalies in complex industrial processes without requiring extensive model training.

This research introduces EAGLE, a tuning-free approach that combines expert models with multimodal large language models for high-accuracy industrial anomaly detection and semantic analysis.

Despite advancements in deep learning for industrial automation, many anomaly detection systems lack semantic interpretability, offering only binary classifications. This limitation motivates the development of more expressive methods and is addressed in the paper ‘EAGLE: Expert-Augmented Attention Guidance for Tuning-Free Industrial Anomaly Detection in Multimodal Large Language Models’, which introduces a novel framework for leveraging the descriptive power of multimodal large language models (MLLMs) without costly fine-tuning. By integrating outputs from specialist expert models via attention guidance, EAGLE achieves both accurate anomaly detection and interpretable explanations of anomalous behavior. Does this tuning-free approach represent a viable path toward more robust and transparent industrial quality control systems?

Unveiling the Nuances of Industrial Anomalies

Conventional deep learning approaches, though successful in numerous fields, frequently encounter limitations when applied to Industrial Anomaly Detection (IAD). These models typically operate on a binary classification framework – labeling data simply as ‘normal’ or ‘anomalous’ – which neglects the nuanced, contextual information vital for understanding complex industrial processes. This reliance on simple categorization prevents them from grasping the semantic meaning of an anomaly; a deviation is flagged, but the underlying reason – perhaps a subtle shift in temperature, pressure, or vibration – remains obscure. Consequently, while these systems can identify issues, they often fail to provide the insightful detail necessary for effective root cause analysis, preventative maintenance, and process optimization within a manufacturing or operational environment.

The opacity of many deep learning anomaly detection systems presents a significant challenge for industrial applications. While capable of flagging deviations from normal operation, these models frequently operate as ‘black boxes’, offering little insight into why a particular anomaly occurred. This lack of interpretability directly impedes effective root cause analysis; engineers are left to painstakingly investigate alerts without understanding the contributing factors identified by the system. Consequently, maintenance becomes reactive and inefficient, as addressing the underlying issues – be they subtle equipment degradation or process drift – is delayed. This limitation hinders proactive intervention, increasing the risk of costly downtime and quality control failures and compromising operational safety in critical industrial processes.

Effective industrial processes demand more than simple anomaly detection; a comprehensive system must elucidate why an anomaly occurred to truly enhance quality control and operational safety. Traditional methods often flag deviations without providing insights into the underlying causes, leaving engineers to manually investigate and potentially react after significant issues arise. A system capable of explaining anomalies (identifying contributing factors, pinpointing affected components, and predicting potential consequences) allows for proactive intervention, minimizing downtime, reducing waste, and preventing catastrophic failures. This shift from detection to explanation empowers informed decision-making, facilitating targeted preventative maintenance and continuous process optimization, ultimately moving beyond reactive problem-solving towards a predictive and resilient industrial environment.

Bridging the Gap: Multimodal Perception for Enhanced Understanding

Multimodal Large Language Models (MLLMs) address limitations in anomaly detection by processing both visual and linguistic data. Traditional anomaly detection systems often rely solely on numerical or time-series data, neglecting potentially crucial visual cues. MLLMs integrate visual information, derived from images or video, with the reasoning capabilities of large language models. This allows the system not only to identify anomalies based on deviations from expected patterns in the visual input but also to articulate the reasoning behind the detection in natural language. The combined approach enables a more comprehensive and interpretable understanding of anomalous events, improving accuracy and facilitating effective responses compared to unimodal systems.

Initial Multimodal Large Language Model (MLLM) implementations, such as AnomalyGPT, deviated from general-purpose architectures by employing task-specific designs optimized for anomaly detection. These early systems typically incorporated specialized modules for visual feature extraction and reasoning, directly coupled with language modeling components. While demonstrating proof-of-concept viability, these architectures often required substantial task-specific training data and exhibited limited generalization capabilities. Nevertheless, AnomalyGPT and similar projects established key principles for integrating visual and linguistic data, including the necessity of effective cross-modal attention mechanisms and the benefits of end-to-end training, thereby informing the development of more adaptable and robust MLLM frameworks.

Integrating existing Industrial Anomaly Detection (IAD) models into Multimodal Large Language Models (MLLMs) as specialized ‘vision experts’ presents substantial challenges related to knowledge transfer. IAD models are typically trained on image data alone and output anomaly scores or localized regions, a format incompatible with the textual processing capabilities of LLMs. Successful integration requires methods to translate these visual outputs into a language-understandable representation, often involving the creation of descriptive captions or structured reports detailing the detected anomalies. Furthermore, maintaining the IAD model’s performance within the MLLM framework necessitates preventing the LLM from overriding or misinterpreting the visual expert’s output, demanding careful attention to model architectures and training strategies that preserve the integrity of both modalities.
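
To make the translation step concrete, here is a minimal sketch of how an expert's anomaly map could be turned into a short textual report for an LLM. It is only an illustration: the function name `describe_anomaly_map`, the 0.5 threshold, and the three-by-three position grid are assumptions made for this example and are not taken from the EAGLE paper.

```python
def describe_anomaly_map(anomaly_map, threshold=0.5):
    """Summarize a 2D anomaly map (rows of scores in [0, 1]) as one sentence.

    Hypothetical helper: the threshold and the coarse 3x3 position grid
    are illustrative choices, not part of any published method.
    """
    rows, cols = len(anomaly_map), len(anomaly_map[0])
    # Collect coordinates of every cell whose score reaches the threshold.
    hits = [(r, c) for r in range(rows) for c in range(cols)
            if anomaly_map[r][c] >= threshold]
    if not hits:
        return "No region exceeds the anomaly threshold."

    # Centroid of the flagged cells decides the coarse position label.
    cr = sum(r for r, _ in hits) / len(hits)
    cc = sum(c for _, c in hits) / len(hits)
    vertical = "top" if cr < rows / 3 else "bottom" if cr >= 2 * rows / 3 else "middle"
    horizontal = "left" if cc < cols / 3 else "right" if cc >= 2 * cols / 3 else "center"

    area = 100.0 * len(hits) / (rows * cols)
    peak = max(anomaly_map[r][c] for r, c in hits)
    return (f"Suspected defect in the {vertical}-{horizontal} region, "
            f"covering {area:.1f}% of the image (peak score {peak:.2f}).")


example_map = [
    [0.1, 0.1, 0.9],
    [0.1, 0.2, 0.1],
    [0.0, 0.1, 0.1],
]
print(describe_anomaly_map(example_map))
# Suspected defect in the top-right region, covering 11.1% of the image (peak score 0.90).
```

A sentence like this can be appended to the text prompt, giving the language model the expert's finding in a form it can read directly.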

Introducing EAGLE: A Framework for Intelligent Anomaly Analysis

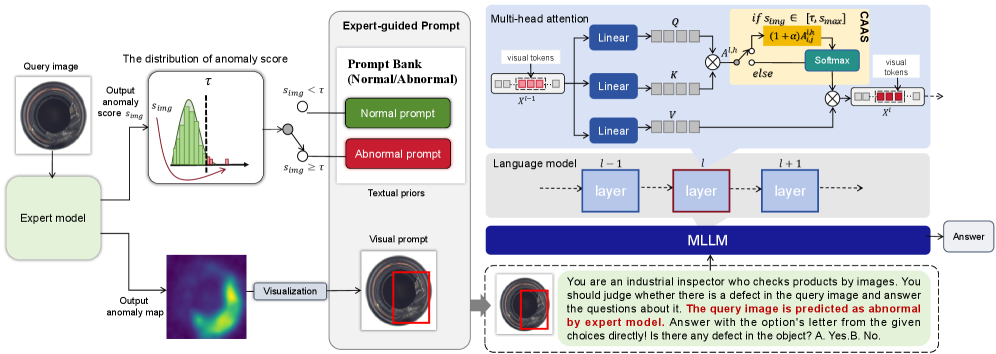

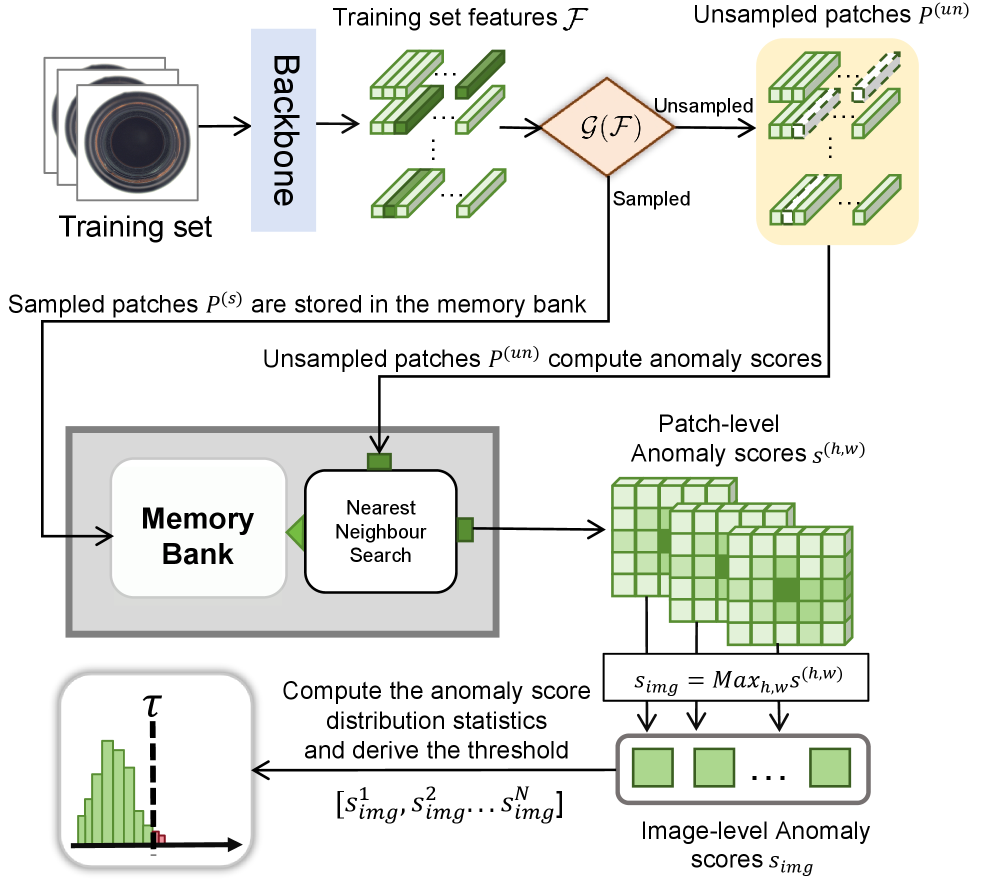

EAGLE is a novel anomaly analysis framework designed for high detection accuracy and semantic understanding without fine-tuning the underlying MLLM. It achieves this by integrating specialized expert models, such as PatchCore – which excels at identifying anomalous regions – with multimodal large language models (MLLMs). This approach leverages the strengths of both model types: expert models provide precise anomaly localization, while MLLMs offer contextual reasoning and the ability to generate human-interpretable explanations of the detected anomalies. Because the MLLM's parameters are left unchanged, the framework avoids task-specific fine-tuning, simplifying deployment and reducing the computational cost of adapting to new inspection scenarios.

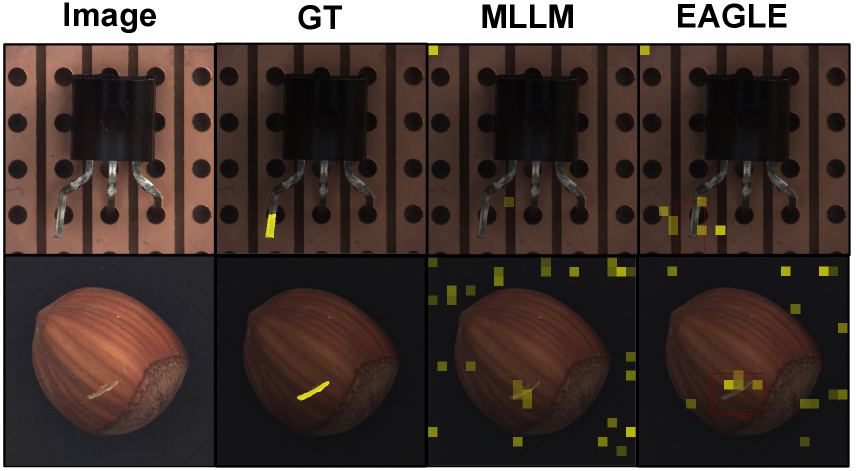

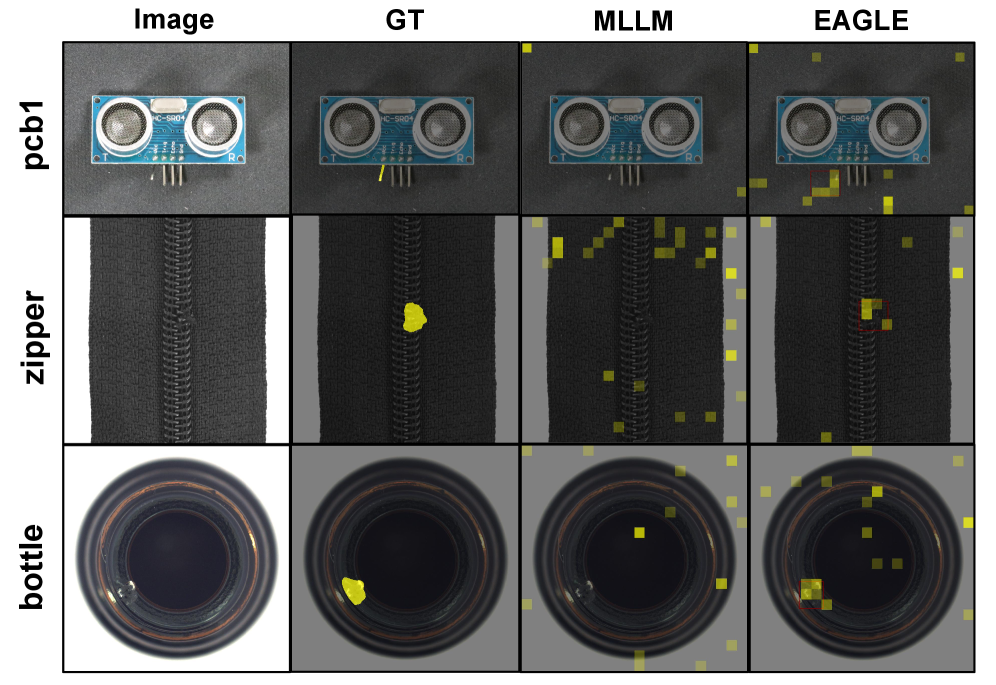

EAGLE employs anomaly maps, generated by a dedicated expert model such as PatchCore, as visual prompts to guide the Multimodal Large Language Model (MLLM). These maps highlight regions identified as anomalous, providing the MLLM with specific contextual information regarding the location and extent of potential issues. This approach moves beyond simple anomaly detection by enabling the MLLM to articulate why a region is considered anomalous, generating text-based explanations grounded in the visual evidence presented by the anomaly map. The use of visual prompts directly links the MLLM’s reasoning process to the visual features driving the anomaly assessment, facilitating interpretable outputs and enhancing the user’s understanding of the system’s decision-making process.
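
One simple way to picture a visual prompt is as a highlight blended into the input image wherever the expert's anomaly score is high. The sketch below does this with plain Python lists. The function name `overlay_anomaly_map`, the red highlight, the 0.5 threshold, and the blending factor are assumptions for illustration; the paper's actual prompt rendering may differ.

```python
def overlay_anomaly_map(image, anomaly_map, threshold=0.5, alpha=0.6):
    """Blend a red highlight into pixels whose anomaly score >= threshold.

    image: rows of (r, g, b) tuples with integer channels in 0-255.
    anomaly_map: rows of floats in [0, 1], same shape as image.
    Hypothetical helper; colour, threshold and alpha are illustrative.
    """
    red = (255, 0, 0)
    out = []
    for img_row, map_row in zip(image, anomaly_map):
        new_row = []
        for pixel, score in zip(img_row, map_row):
            if score >= threshold:
                # Alpha-blend the highlight colour over the original pixel.
                pixel = tuple(round((1 - alpha) * p + alpha * h)
                              for p, h in zip(pixel, red))
            new_row.append(pixel)
        out.append(new_row)
    return out


gray = (100, 100, 100)
prompt_image = overlay_anomaly_map([[gray, gray]], [[0.1, 0.9]])
print(prompt_image)  # only the second pixel is tinted red
```

The highlighted image, rather than the raw one, would then be passed to the MLLM so that its description is anchored to the region the expert flagged.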

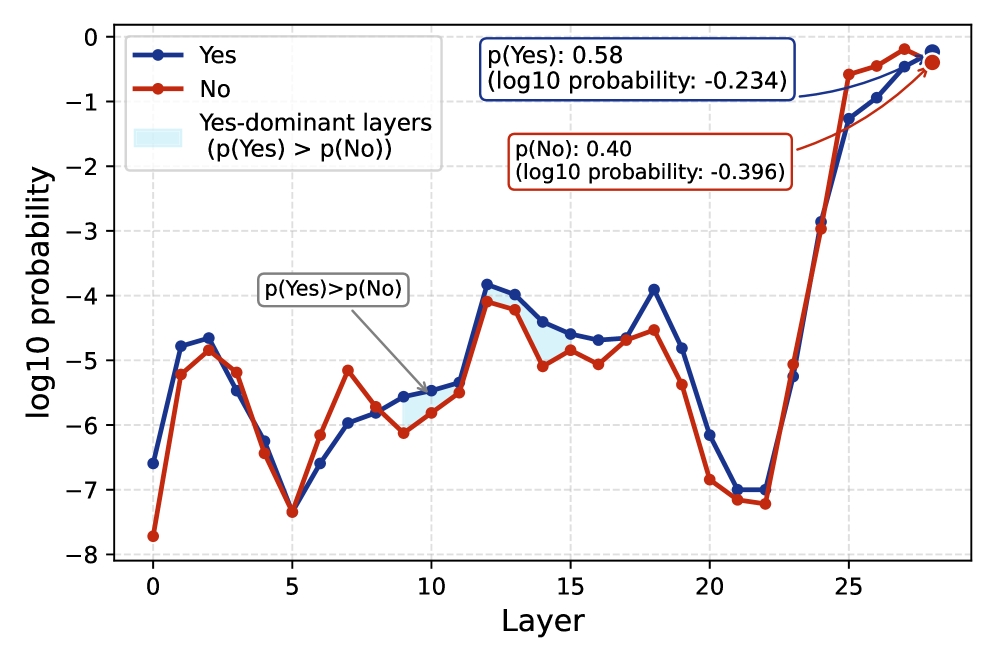

The EAGLE framework employs Distribution-Based Thresholding (DBT) to dynamically select anomaly maps for use as visual prompts, mitigating the need for manual threshold adjustments and ensuring reliable prompt generation across varying anomaly distributions. DBT establishes thresholds based on the statistical distribution of anomaly scores, prioritizing maps that exhibit significant deviations. Furthermore, Confidence-Aware Attention Sharpening (CAAS) refines the MLLM’s attention mechanism by weighting features based on the confidence scores provided by the expert model; this focuses the MLLM on the most critical anomaly features within the visual prompt, improving both detection accuracy and the interpretability of generated explanations.
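
The idea of weighting attention by expert confidence can be illustrated with a toy re-weighting over visual tokens. This is a simplified stand-in, not the CAAS formulation from the paper: the function name `sharpen_attention`, the linear gain schedule, and the `max_gain` parameter are all assumptions chosen to show the general shape of the mechanism.

```python
def sharpen_attention(attn, expert_mask, confidence, max_gain=2.0):
    """Shift attention mass toward expert-flagged tokens.

    attn: attention weights over visual tokens, summing to 1.
    expert_mask: 1 for tokens inside the expert's anomalous region, else 0.
    confidence: expert confidence in [0, 1]; 0 leaves attention unchanged.
    Hypothetical sketch: the linear gain is an illustrative assumption.
    """
    # Gain grows linearly from 1.0 (no effect) to max_gain (full confidence).
    gain = 1.0 + (max_gain - 1.0) * confidence
    boosted = [a * (gain if m else 1.0) for a, m in zip(attn, expert_mask)]
    total = sum(boosted)
    # Renormalize so the result is again a probability distribution.
    return [b / total for b in boosted]


uniform = [0.25, 0.25, 0.25, 0.25]
mask = [0, 1, 0, 0]
print(sharpen_attention(uniform, mask, confidence=1.0))
print(sharpen_attention(uniform, mask, confidence=0.0))
```

With full confidence the flagged token receives twice the weight of each other token; with zero confidence the original attention is returned untouched, so an unsure expert cannot distort the model's focus.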

Quantifying Uncertainty and Optimizing Performance

Distribution-Based Thresholding (DBT) represents a novel approach to visual prompt selection, grounded in the principles of Extreme Value Theory (EVT). Rather than relying on arbitrary or empirically determined thresholds, DBT mathematically estimates the optimal point at which to accept or reject a visual prompt based on the distribution of its associated values. This leverages EVT’s capacity to model the tails of distributions – the rare, but critical, events that often indicate anomalies or significant signals. By focusing on these extreme values, DBT effectively maximizes the signal-to-noise ratio, allowing for more precise detection and reducing false positives. The core principle involves characterizing the distribution of prompt scores and then identifying a threshold that balances sensitivity to genuine anomalies with robustness against typical variations, ultimately leading to improved performance in applications such as anomaly detection and quality control.
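
A common way to apply Extreme Value Theory to threshold selection is the peaks-over-threshold method: fit a Generalized Pareto Distribution (GPD) to the scores that exceed a high initial quantile, then extrapolate to a threshold with a chosen exceedance probability. The sketch below follows that recipe with a method-of-moments fit. It is an assumption that DBT resembles this; the function name `pot_threshold`, the 0.9 initial quantile, and the `risk` parameter are illustrative and not drawn from the paper.

```python
import math
import random
import statistics


def pot_threshold(scores, init_quantile=0.9, risk=1e-3):
    """Peaks-over-threshold estimate of a score exceeded with probability ~risk.

    Fits a GPD to the excesses over an initial high quantile using the
    method of moments. Hypothetical sketch, not the paper's DBT procedure.
    """
    ordered = sorted(scores)
    n = len(ordered)
    u = ordered[int(init_quantile * (n - 1))]      # initial high threshold
    excesses = [s - u for s in ordered if s > u]   # tail samples above u
    if len(excesses) < 2:
        raise ValueError("not enough tail samples to fit a distribution")

    m = statistics.fmean(excesses)
    v = statistics.variance(excesses)
    if v == 0:
        raise ValueError("tail samples have zero variance")

    # Method-of-moments GPD estimates: mean = sigma / (1 - xi),
    # variance = sigma^2 / ((1 - xi)^2 * (1 - 2 * xi)).
    ratio = m * m / v
    xi = 0.5 * (1.0 - ratio)         # shape
    sigma = 0.5 * m * (1.0 + ratio)  # scale

    # Extrapolate to the quantile whose overall exceedance probability is risk.
    tail = risk * n / len(excesses)
    if abs(xi) < 1e-9:               # exponential-tail limit of the GPD
        return u - sigma * math.log(tail)
    return u + (sigma / xi) * (tail ** (-xi) - 1.0)


random.seed(0)
normal_scores = [random.expovariate(1.0) for _ in range(5000)]
print(pot_threshold(normal_scores))
```

Because the threshold is derived from the tail of the score distribution itself, it adapts when the expert's scores are rescaled or come from a different product category, which is the practical appeal of a distribution-based rule over a hand-picked constant.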

Evaluations performed on established anomaly detection datasets – notably MVTec-AD and VisA – reveal that EAGLE consistently outperforms current methodologies. This enhanced performance is quantified through key metrics such as Accuracy and F1 Score, demonstrating EAGLE’s ability to more reliably identify deviations from expected patterns. Importantly, EAGLE achieves these competitive results without requiring any task-specific fine-tuning of its underlying models; it operates effectively ‘out-of-the-box’, streamlining deployment and reducing the computational burden typically associated with adaptation to new inspection scenarios. This capability signifies a practical advantage, offering robust anomaly detection without the need for extensive, data-intensive retraining processes.
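
For reference, Accuracy and F1 Score for a binary normal/anomalous decision can be computed as in the short sketch below. The function name and the toy labels are made up for illustration and do not reproduce any result from the paper.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, f1) for binary labels where 1 means anomalous."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # F1 is the harmonic mean of precision and recall; defined as 0 when
    # there are no true positives.
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return accuracy, f1


# Toy labels: 2 true positives, 1 false positive, 1 false negative, 1 true negative.
acc, f1 = accuracy_and_f1([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(f"accuracy={acc:.2f}, f1={f1:.2f}")
# accuracy=0.60, f1=0.67
```

Accuracy counts every correct decision equally, while F1 ignores true negatives, which makes it the more informative of the two when anomalous samples are rare.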

The EAGLE framework distinguishes itself not only through precise anomaly detection, but also by furnishing operators with readily understandable explanations of its findings. This transparency is crucial, enabling proactive intervention to correct issues before they escalate into costly defects or disruptive downtime. By leveraging Distribution-Based Thresholding (DBT), the system consistently enhances recall performance across diverse datasets, meaning it reliably identifies a greater proportion of actual anomalies without sacrificing precision. This stable improvement in recall, coupled with interpretable results, shifts the paradigm from reactive problem-solving to preventative maintenance, ultimately bolstering product quality and operational efficiency.

The pursuit of elegant solutions in industrial anomaly detection, as demonstrated by EAGLE, echoes a fundamental principle of effective design. The framework’s tuning-free approach, leveraging expert guidance within Multimodal Large Language Models, prioritizes clarity and simplicity. This aligns with the belief that a good interface – in this case, the anomaly detection system – should be invisible to the user, yet profoundly felt in its accuracy and interpretability. As David Marr aptly stated, “Representation is the key to understanding.” EAGLE’s success isn’t merely in identifying anomalies, but in providing semantic analysis – a clear representation of why an anomaly exists – thereby enabling a deeper understanding of the industrial process itself. The careful orchestration of expert knowledge and MLLM capabilities represents a harmonious blend of form and function, proving that elegance isn’t a luxury, but a hallmark of genuine insight.

Beyond the Horizon

The elegance of EAGLE lies not merely in its tuning-free approach, a necessary concession in a field often drowning in parameter bloat, but in the implicit acknowledgment that even the most expansive language models require direction. The framework sidesteps the brute force of scale with a subtle hand, leveraging expert knowledge to sculpt attention. Yet this raises a critical question: how robust is this guidance against adversarial perturbations, or shifts in the underlying industrial processes? A truly discerning system should not simply flag deviation, but understand why the deviation occurs, a nuance that demands a deeper integration of physics-informed modeling.

Further exploration should address the limitations inherent in relying solely on distribution-based thresholding for anomaly scoring. While pragmatic, this approach risks a certain semantic blindness, treating all outliers as equally significant. A more sophisticated evaluation would involve incorporating contextual reasoning and causal inference, allowing the system to prioritize anomalies based on their potential impact. The current focus on visual prompting is a promising start, but the true potential of multimodal learning will only be realized when language, vision, and other sensor data are woven into a cohesive, interpretable narrative.

Ultimately, the pursuit of industrial anomaly detection should not be framed as a problem of pattern recognition, but as a quest for understanding. EAGLE represents a step in that direction, a tentative whisper against the cacophony of data. The challenge now lies in refining that whisper into a clear, insightful voice.

Original article: https://arxiv.org/pdf/2602.17419.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 22:46