Sam Altman is often credited with contributing to today’s overhyped AI market, thanks to the remarkable success of OpenAI and ChatGPT. However, Altman has also pointed out problems stemming from artificial intelligence. He previously expressed concern about overreliance on AI-generated content, and more recently he has raised the alarm about the growing number of bots on social media platforms.

OpenAI’s CEO draws attention to comment bots

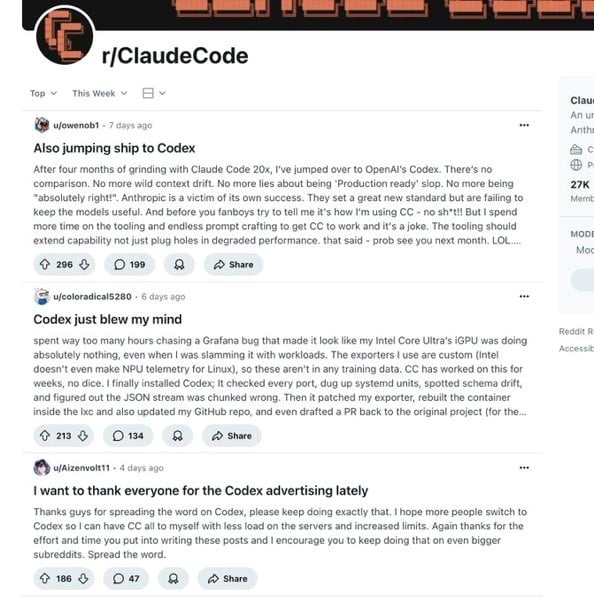

Across social media sites, it is increasingly common to come across automated accounts (bots) leaving comments on posts. These bots can generate artificial engagement, push fraudulent schemes, or serve as marketing tools. Sam Altman pointed to the subreddit r/ClaudeCode as a possible hotspot for bot-generated comments, although he also acknowledged other explanations.

In his view, several factors appear to be at play:

1. Real people seem to have adopted certain characteristics of language used by LLMs (large language models).

2. The Extremely Online community tends to gravitate towards similar ideas, creating strong correlations.

3. There’s an extreme swing in the hype cycle, often oscillating between “it’s all over” and “we’re back.”

4. Social media platforms exert optimization pressure on content that drives user engagement.

5. The monetization strategies of creators are intertwined with this pressure to generate engaging content.

According to Sam Altman, platforms such as Reddit and X now feel more automated and less like places of genuine human interaction. This view echoes the “dead internet” theory, which holds that machines predominantly engage with each other online, largely excluding humans from meaningful interaction.

In the comments below Altman’s post, a moderator of the subreddit stated that none of the posts Altman referred to had triggered the systems meant to filter or delete spam. Still, some things remain hard to determine with confidence, for instance whether a long-standing Reddit account has been resold.

The future of the internet may involve more and more people talking like chatbots, making it ever harder to tell a genuine human statement from one produced by a bot.

2025-09-10 14:02