Author: Denis Avetisyan

A new approach combines the broad analytical power of artificial intelligence with human judgment to proactively identify and mitigate systemic risks posed by rapidly evolving AI technologies.

Research demonstrates that integrating generative AI agents into structured foresight processes enhances risk assessment and enables more effective governance of novel AI applications.

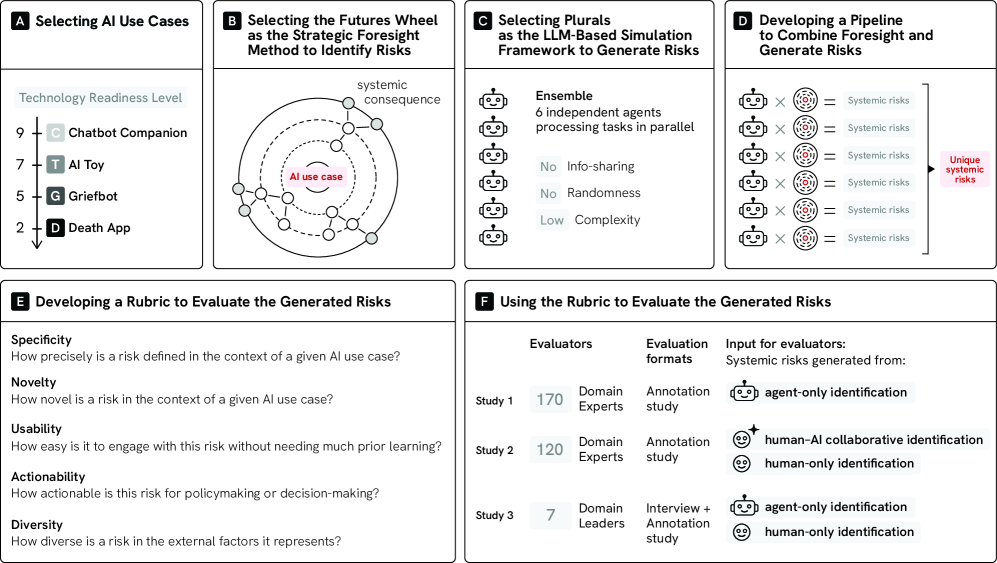

Assessing long-term risks associated with emerging technologies is hampered by the inherent difficulty of anticipating systemic consequences when knowledge is scarce. This challenge is addressed in ‘Agent-Supported Foresight for AI Systemic Risks: AI Agents for Breadth, Experts for Judgment’, which introduces a scalable approach combining generative AI agents with human expertise to broaden the scope of AI risk assessment. The research demonstrates that this hybrid workflow, which uses agents for breadth of systemic coverage and humans for contextual grounding, can identify a wider range of potential risks than traditional methods alone. Will this approach enable more proactive governance and mitigation strategies for increasingly complex AI systems?

The Inevitable Cascade: AI and Systemic Risk

The proliferation of artificial intelligence extends far beyond isolated applications; AI systems are now deeply interwoven into critical societal infrastructure. This integration, while offering numerous benefits, generates unforeseen systemic risks stemming from the complex interactions between AI agents and the human systems they support. Consider financial markets, where AI-powered algorithmic trading can amplify market volatility, or healthcare, where AI-driven diagnostic tools improve accuracy but introduce new dependencies and potential failure points. These are not simply isolated incidents; they represent a shift toward interconnected vulnerabilities in which a failure in one AI system can cascade through multiple layers of infrastructure, with widespread and potentially severe consequences. Because these risks are emergent, non-linear, and difficult to predict, they demand a new approach to risk assessment that moves beyond traditional, component-based analysis toward a holistic, systems-level perspective.

Conventional risk assessment often focuses on isolated failures within individual systems, proving inadequate when applied to the deeply interconnected landscapes now shaped by artificial intelligence. These established methods struggle to model the complex propagation of errors – a single point of failure in one AI-driven component can rapidly cascade through multiple systems, triggering unforeseen consequences across critical infrastructure, financial markets, or social networks. The very nature of these interconnected environments – where AI algorithms influence and rely on each other – introduces feedback loops and emergent behaviors that defy prediction using linear, reductionist approaches. Consequently, anticipating systemic risk requires a shift towards holistic modeling, incorporating network theory, complexity science, and stress-testing scenarios that simulate widespread, simultaneous disruptions to reveal hidden vulnerabilities and potential failure modes.
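The stress-testing idea above can be illustrated with a small Python sketch. It is not the study's method: the dependency map is hypothetical, and it assumes a "redundant supply" rule in which a system fails only when all of its suppliers have failed. Under that assumption no single outage spreads far, yet a pair of simultaneous disruptions can take down everything, which is exactly the kind of hidden vulnerability component-by-component testing misses.

```python
from itertools import combinations

# Hypothetical dependency map (illustrative, not from the study):
# each system lists the suppliers it can draw on.
DEPENDS_ON = {
    "model_provider_a": [],
    "model_provider_b": [],
    "chatbot_platform": ["model_provider_a", "model_provider_b"],  # redundant suppliers
    "triage_assistant": ["chatbot_platform"],
    "support_hotline": ["chatbot_platform"],
}


def cascade(initial_failures, depends_on):
    """Propagate failures until nothing changes.

    Assumption: a system fails only when ALL of its suppliers have failed
    (redundancy); systems with no suppliers fail only if disrupted directly.
    """
    failed = set(initial_failures)
    changed = True
    while changed:
        changed = False
        for system, suppliers in depends_on.items():
            if system in failed or not suppliers:
                continue
            if all(s in failed for s in suppliers):
                failed.add(system)
                changed = True
    return failed


def worst_simultaneous_pair(depends_on):
    """Stress test: try every pair of simultaneous disruptions and return
    the pair whose cascade takes down the most systems."""
    pairs = combinations(sorted(depends_on), 2)
    return max(pairs, key=lambda pair: len(cascade(pair, depends_on)))


# No single disruption takes down more than three systems...
worst_single = max(len(cascade({s}, DEPENDS_ON)) for s in DEPENDS_ON)
# ...but losing both model providers at once takes down all five.
print(worst_single, worst_simultaneous_pair(DEPENDS_ON))
```

Exhaustive pair enumeration is only feasible for small maps; a realistic stress test would sample scenarios instead.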

The spread of artificial intelligence into roles traditionally filled by human connection (companionship, emotional support, and even end-of-life care) creates potential systemic impact on a new scale. As AI-driven chatbots become commonplace in daily interactions and more sophisticated applications assist with grief counseling or palliative care, reliance on these systems grows. A failure, bias, or large-scale manipulation of these AI companions could therefore affect a significant portion of the population, extending beyond individual harm to societal-level consequences. Unlike failures in purely transactional AI, disruptions to these deeply personal systems erode trust not only in the technology itself but in the broader fabric of social support, potentially deepening isolation and vulnerability across entire communities. This dependency calls for careful ethical safeguards and robust resilience measures to limit the consequences of systemic failure in this increasingly intimate technological domain.

A commitment to responsible artificial intelligence necessitates a shift from isolated system testing to comprehensive systemic risk assessment. Current development practices often prioritize functionality and performance within defined parameters, overlooking the potential for unanticipated consequences when AI interacts with complex social structures. Proactive mitigation demands interdisciplinary collaboration – bringing together AI researchers, social scientists, and policymakers – to model potential cascading failures, identify vulnerable points of interaction, and establish robust safety protocols. This includes not only technical safeguards, but also ethical guidelines and regulatory frameworks designed to ensure AI deployment aligns with societal values and minimizes harm, ultimately fostering public trust and maximizing the benefits of this transformative technology.

Simulating the Unthinkable: AI-Driven Foresight

In-silico agents, autonomous entities operating within a simulated environment, provide a computational method for modeling complex systemic interactions. These agents are programmed with defined behaviors and interact according to specified rules, allowing researchers to observe emergent phenomena and identify potential risk cascades. Unlike modeling approaches that rely on aggregate simplifying assumptions, in-silico agents can accommodate a high degree of granularity and heterogeneity, capturing nuanced relationships between components. This capability is particularly valuable for identifying hidden systemic risks, those not readily apparent through conventional analysis, because it reveals how localized events can propagate through the system and trigger unforeseen consequences. Running many interacting agents also makes it possible to explore a wider solution space than is practical with manual methods and to surface vulnerabilities before they manifest in real-world scenarios.
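To make "localized events propagating through heterogeneous agents" concrete, here is a toy threshold-cascade model in Python. It is a generic agent-based stand-in chosen for illustration, not the generative-AI agents used in the study; the agents, their neighbours, and their tolerance thresholds are all hypothetical.

```python
# Hypothetical agents: each has the neighbours it interacts with and its own
# tolerance threshold, i.e. the fraction of failed neighbours that tips it over.
AGENTS = {
    "a": {"neighbours": ["b"], "threshold": 0.5},
    "b": {"neighbours": ["a", "c"], "threshold": 0.5},
    "c": {"neighbours": ["b", "d"], "threshold": 0.5},
    "d": {"neighbours": ["c", "e"], "threshold": 0.9},  # a robust agent
    "e": {"neighbours": ["d"], "threshold": 0.1},       # a fragile agent
}


def simulate(agents, seeds, max_steps=100):
    """Synchronous threshold cascade. Returns the set of failed agents after
    each step, starting with the seeds."""
    failed = set(seeds)
    history = [set(failed)]
    for _ in range(max_steps):
        # Every agent decides based on the failures at the start of the step.
        newly_failed = {
            name
            for name, agent in agents.items()
            if name not in failed
            and agent["neighbours"]
            and sum(n in failed for n in agent["neighbours"]) / len(agent["neighbours"])
            >= agent["threshold"]
        }
        if not newly_failed:
            break
        failed |= newly_failed
        history.append(set(failed))
    return history


# The same local shock spreads very differently depending on where it lands.
print(simulate(AGENTS, {"a"})[-1])  # stopped by robust agent d
print(simulate(AGENTS, {"d"})[-1])  # reaches every agent
```

Heterogeneous thresholds are what make the outcome depend on the starting point: a shock at `a` stalls at the robust agent `d`, while a shock at `d` reaches every agent.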

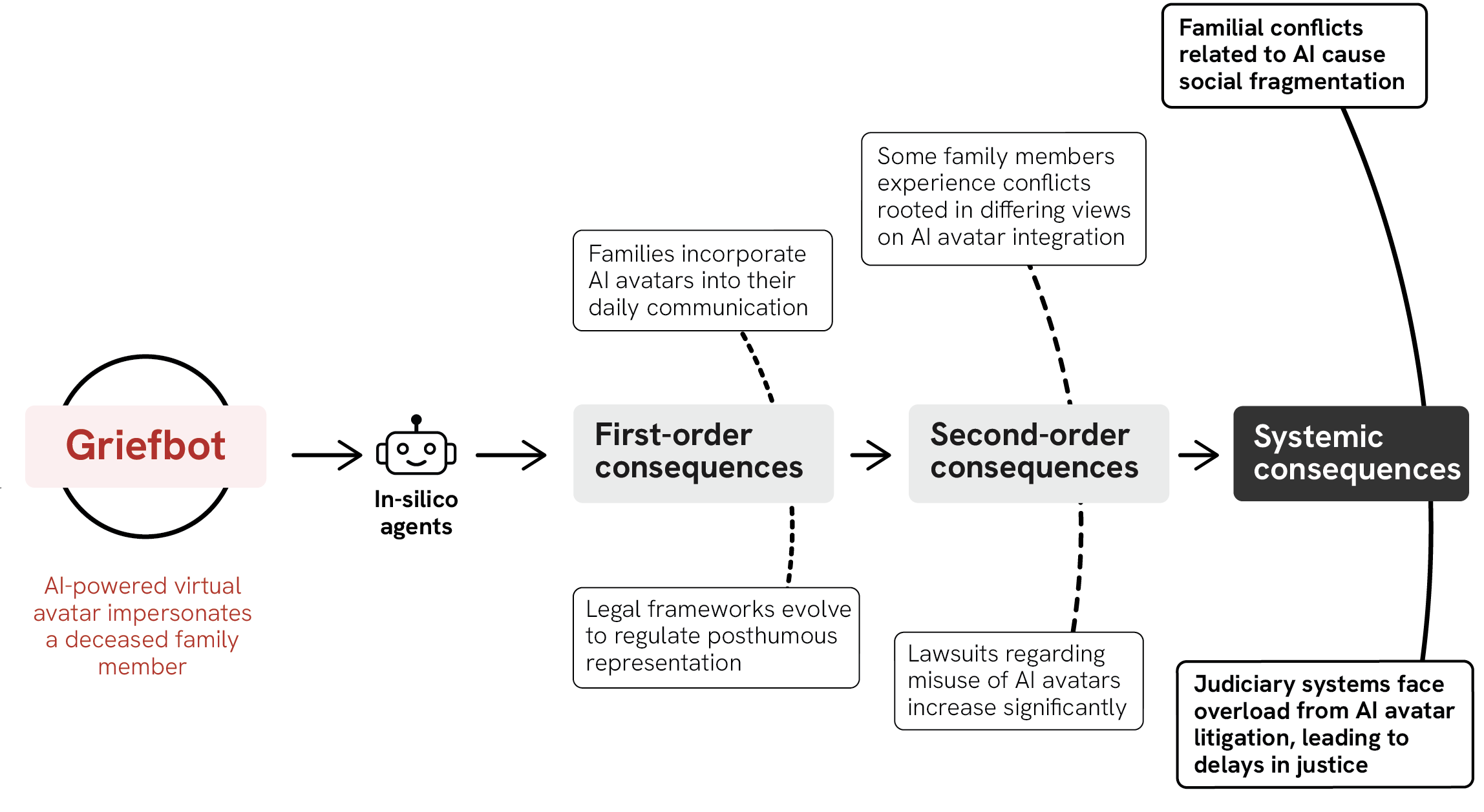

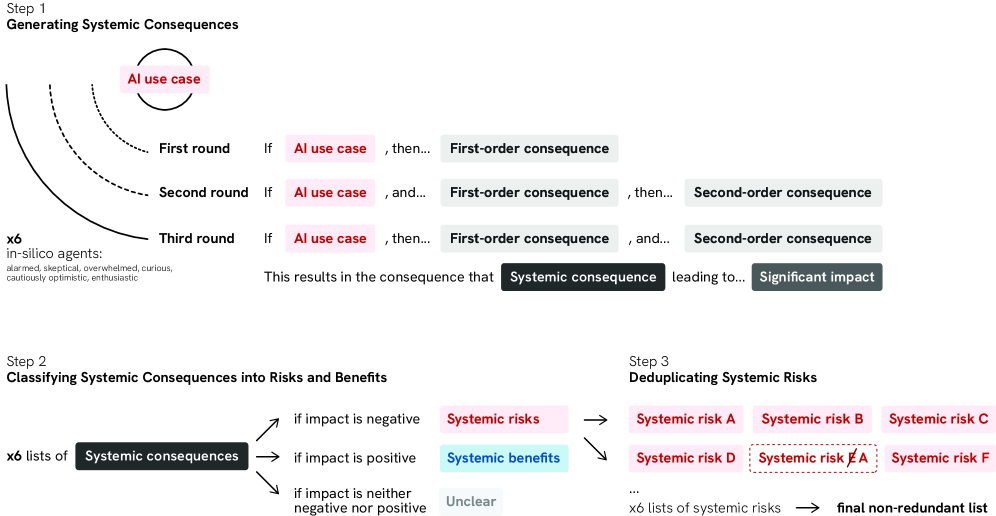

The Futures Wheel methodology, a systemic thinking technique, systematically maps potential consequences radiating from a central initiating event. When integrated with AI agents – termed ‘In-silico Agents’ – this process is automated and expanded. These agents explore multiple layers of cascading effects, identifying not only immediate, first-order consequences but also subsequent, second-order and higher-order effects that may not be readily apparent through human analysis. The AI iteratively generates potential outcomes branching from each consequence, creating a comprehensive network of interconnected risks and opportunities. This automated expansion significantly increases the breadth and depth of the analysis, revealing complex interdependencies and hidden systemic vulnerabilities beyond the scope of traditional brainstorming or expert elicitation.
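The ring-by-ring expansion of a Futures Wheel can be sketched as a breadth-first traversal. This is a minimal illustration under stated assumptions: `generate` is a hypothetical stand-in for a prompt to a generative AI agent, and the canned consequences below are invented so the example runs offline.

```python
from typing import Callable, Dict, List

# A consequence generator maps one event to its direct consequences.
# In an agent-supported workflow this would be a prompt to a generative AI
# agent; here it is a hypothetical stub so the example runs offline.
ConsequenceFn = Callable[[str], List[str]]


def futures_wheel(event: str, generate: ConsequenceFn, depth: int) -> Dict[str, int]:
    """Expand consequences ring by ring and record each one's order
    (1 = first-order, 2 = second-order, ...)."""
    orders: Dict[str, int] = {}
    frontier = [event]
    for order in range(1, depth + 1):
        next_frontier = []
        for node in frontier:
            for consequence in generate(node):
                # Keep only the first (lowest) order at which a consequence appears.
                if consequence != event and consequence not in orders:
                    orders[consequence] = order
                    next_frontier.append(consequence)
        frontier = next_frontier
    return orders


# Hypothetical canned consequences standing in for agent output.
CANNED = {
    "AI companions become widespread": ["fewer human confidants", "emotional data collected at scale"],
    "fewer human confidants": ["weaker community support networks"],
    "emotional data collected at scale": ["targeted manipulation", "privacy breaches"],
    "weaker community support networks": ["higher isolation during outages"],
}

wheel = futures_wheel("AI companions become widespread", lambda e: CANNED.get(e, []), depth=3)
for consequence, order in wheel.items():
    print(order, consequence)
```

De-duplicating consequences is a design choice: an effect reachable by two paths is counted once, at its lowest order, which keeps the wheel from inflating its own size.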

The Plurals Framework enhances AI-driven foresight by systematically generating multiple perspectives during risk assessment. This is achieved through the creation of ‘digital personas’ representing diverse stakeholder groups, demographic segments, and cognitive styles. Each persona independently evaluates potential risks and cascading consequences, preventing the consolidation of biases common in human-led ideation. By aggregating the outputs of these varied personas, the framework provides a broader and more nuanced understanding of systemic vulnerabilities than traditional methods, mitigating the impact of individual blind spots and promoting a more comprehensive risk profile.
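Persona-based ideation can be sketched as querying each persona independently and then recording which personas raised each risk. This sketch does not reproduce the Plurals Framework's own interface: the `Persona` class, the prompt wording, and the `ask_model` callable are hypothetical, and `fake_model` is an offline stub in place of a real language model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Persona:
    name: str
    description: str


def build_prompt(persona: Persona, event: str) -> str:
    # Hypothetical prompt wording; each persona is queried independently.
    return f"You are {persona.description}. List systemic risks that could follow from: {event}"


def ideate(personas: List[Persona], event: str,
           ask_model: Callable[[str], List[str]]) -> Dict[str, Set[str]]:
    """Collect risks from every persona and record which personas raised each one."""
    raised_by: Dict[str, Set[str]] = {}
    for persona in personas:
        for risk in ask_model(build_prompt(persona, event)):
            key = risk.strip().lower()  # naive normalisation for de-duplication
            raised_by.setdefault(key, set()).add(persona.name)
    return raised_by


def fake_model(prompt: str) -> List[str]:
    """Offline stub standing in for an LLM call."""
    if "nurse" in prompt:
        return ["Delayed escalation of emergencies", "Staff deskilling"]
    if "teenager" in prompt:
        return ["Social withdrawal", "delayed escalation of emergencies "]
    return []


personas = [
    Persona("clinician", "a palliative-care nurse"),
    Persona("youth", "a teenager who talks to chatbots daily"),
]
risks = ideate(personas, "AI companions replace peer support", fake_model)
```

Keeping the set of personas behind each risk, rather than a single merged list, preserves the information about which perspective surfaced what.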

Current risk assessment methodologies are often limited to post-event analysis and shaped by inherent human biases. AI-driven simulation, specifically in-silico agents and frameworks like the Plurals Framework, enables a shift toward proactive risk identification. In the comparative analyses, this approach generated between 27 and 47 unique systemic risks, compared with between 7 and 20 from traditional human ideation exercises. This broader coverage supports a more comprehensive understanding of potential future scenarios and the development of more robust mitigation strategies.
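Counts of "unique" risks depend on how duplicates are merged. The Python sketch below shows set-based counting and overlap between an agent run and a human run. It assumes risks match only when their normalised text is identical, which is a simplification (paraphrases would need clustering or expert coding), and the risk lists are hypothetical, not data from the study.

```python
def coverage_report(agent_risks, human_risks):
    """Compare two ideation runs by their sets of unique risks.

    Assumption: risks match only if their normalised text is identical;
    real comparisons would need to merge paraphrases (e.g. by expert coding)."""
    agent = {r.strip().lower() for r in agent_risks}
    human = {r.strip().lower() for r in human_risks}
    return {
        "agent_unique": len(agent),
        "human_unique": len(human),
        "shared": len(agent & human),
        "agent_only": len(agent - human),
        "human_only": len(human - agent),  # what the agents missed
        "combined": len(agent | human),
    }


# Hypothetical outputs, not data from the study.
report = coverage_report(
    ["Emotional dependency", "Data leakage", "Market herding", "emotional dependency"],
    ["Data leakage", "Loss of clinical skills"],
)
print(report)
```

The `human_only` figure matters as much as the headline counts: it measures what breadth alone still misses.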

Beyond the Checklist: Contextualizing Risk in a Complex World

Systemic risk assessment requires a broad analytical approach extending beyond traditional financial modeling. PESTEL analysis, which examines Political, Economic, Social, Technological, Environmental, and Legal factors, provides a framework for identifying external influences that could propagate risk across an entire system. Political stability, macroeconomic trends, demographic shifts, innovation rates, resource availability, and regulatory changes all represent potential risk drivers. By systematically evaluating these elements, organizations can move beyond isolated event analysis and anticipate cascading failures or widespread impacts that might not be apparent through purely quantitative methods. This holistic view is crucial for proactive risk management and resilience planning.
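One practical use of PESTEL in a foresight exercise is a coverage check: tag each identified risk with the categories it touches, then list the categories nobody has considered. The keyword lists below are hypothetical and deliberately crude; in practice analysts, or an agent, would assign categories.

```python
# Hypothetical keyword lists; in practice analysts (or an agent) assign categories.
PESTEL_KEYWORDS = {
    "Political": ["election", "government", "geopolitic"],
    "Economic": ["market", "labor", "price"],
    "Social": ["trust", "isolation", "community"],
    "Technological": ["outage", "cyber", "model"],
    "Environmental": ["energy", "water", "emission"],
    "Legal": ["liability", "regulation", "privacy law"],
}


def tag_pestel(risk):
    """Return the PESTEL categories whose keywords appear in a risk description."""
    text = risk.lower()
    return {cat for cat, words in PESTEL_KEYWORDS.items() if any(w in text for w in words)}


def uncovered_categories(risks):
    """List PESTEL categories that no identified risk touches, in PESTEL order."""
    covered = set().union(*(tag_pestel(r) for r in risks))
    return [cat for cat in PESTEL_KEYWORDS if cat not in covered]


risks = [
    "Chatbot outage erodes public trust",
    "Data-centre energy demand strains the grid",
    "Liability gaps for AI grief counselling",
]
print(uncovered_categories(risks))
```

Here the gaps are Political and Economic, which would prompt a further round of ideation focused on those factors.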

Longitudinal analysis, the repeated observation of a risk over a defined period, is critical for understanding risk evolution beyond static, point-in-time assessments. This approach facilitates the identification of temporal patterns, such as increasing or decreasing risk frequency, changes in severity, and the emergence of novel risk factors. By tracking risk indicators over time, organizations can move from reactive risk management to proactive mitigation strategies. Specifically, longitudinal data allows for the calibration of risk models, the validation of predictive analytics, and the assessment of the effectiveness of implemented controls. The duration of observation should be sufficient to capture cyclical patterns and account for long-term trends, and data should be collected at consistent intervals to ensure comparability.
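A simple way to detect whether a tracked risk is rising or falling is the least-squares slope of its indicator over equally spaced observations, as sketched below. The quarterly incident counts are hypothetical, and a straight-line trend is only one choice; it will not capture the cyclical patterns mentioned above.

```python
from statistics import mean


def trend_slope(values):
    """Ordinary least-squares slope of a risk indicator observed at equal
    intervals (change per interval). Positive means the indicator is rising."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    xs = range(len(values))
    x_bar, y_bar = mean(xs), mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


# Hypothetical quarterly counts of reported incidents for one risk.
incidents = [2, 3, 5, 6]
print(trend_slope(incidents))  # about 1.4 more incidents per quarter
```

The formula assumes consistent intervals, which is one reason the text stresses collecting data at regular spacing.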

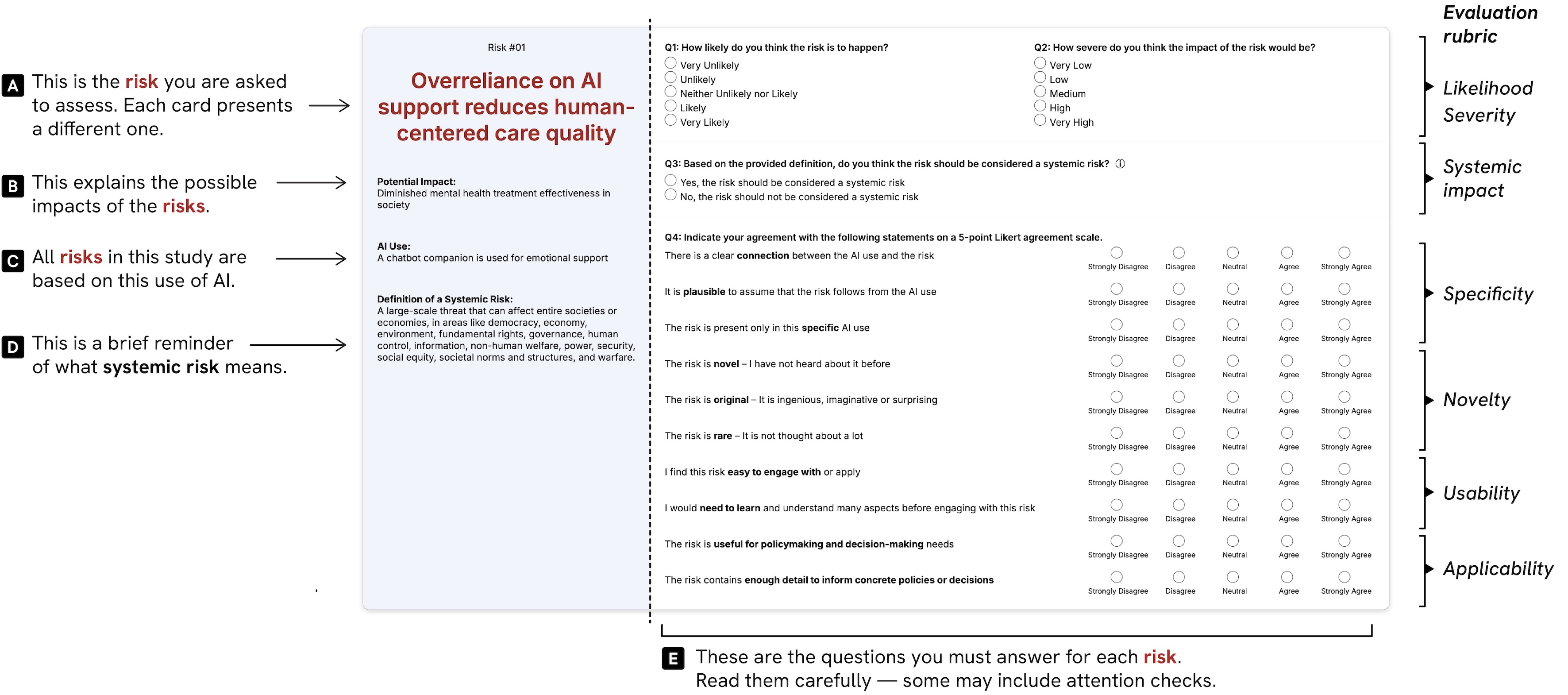

The Risk Evaluation Rubric provides a standardized assessment of identified risks across three key dimensions: likelihood, severity, and systemic impact. Likelihood rates the probability of occurrence, severity quantifies the potential magnitude of negative consequences, and systemic impact assesses the potential for the risk to cascade through interconnected systems, amplifying its overall effect. The rubric uses a defined scoring system, in this case a 5-point Likert scale, to provide a consistent, quantifiable evaluation that enables comparison and prioritization of risks by their combined scores. This structured approach makes assessments more consistent and reduces, though it cannot eliminate, subjective bias in risk management.
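The rubric can be sketched as a small data structure that validates the 1 to 5 range and ranks risks by a combined score. How the study combines the three dimensions is not stated here, so the unweighted mean below is an assumption; a product or a weighted sum would be equally plausible and could reorder the ranking. The example risks and ratings are hypothetical.

```python
from dataclasses import dataclass

DIMENSIONS = ("likelihood", "severity", "systemic_impact")


@dataclass
class RiskRating:
    risk: str
    likelihood: int       # 1-5 Likert
    severity: int         # 1-5 Likert
    systemic_impact: int  # 1-5 Likert

    def __post_init__(self):
        for name in DIMENSIONS:
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be on the 1-5 scale, got {value}")

    @property
    def combined(self) -> float:
        # Assumption: an unweighted mean of the three dimensions.
        return sum(getattr(self, name) for name in DIMENSIONS) / len(DIMENSIONS)


def prioritise(ratings):
    """Highest combined score first."""
    return sorted(ratings, key=lambda r: r.combined, reverse=True)


ratings = [
    RiskRating("Minor interface confusion", 4, 1, 1),
    RiskRating("Companion service outage", 3, 4, 5),
    RiskRating("Biased triage advice", 4, 4, 3),
]
for r in prioritise(ratings):
    print(f"{r.combined:.2f}  {r.risk}")
```

Note how a likely but low-impact risk sinks to the bottom once severity and systemic impact are weighed alongside likelihood.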

Domain experts judged most of the agent-generated risks to be systemic: 75% of the identified risks were classified as such. These risks received average scores between 3.57 and 3.84 on a 5-point Likert scale, above the scale midpoint, reflecting both a notable likelihood of occurrence and a considerable potential severity. The scores suggest that experts found the agent-generated risks plausible and relevant, regarding them as credible threats rather than purely theoretical ones.
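Figures like "share classified as systemic" and "average Likert score" come from aggregating individual expert ratings. The sketch below shows one way to do that. The ratings are invented for illustration, and the strict-majority rule for calling a risk systemic is an assumption, since the aggregation rule is not described here.

```python
from statistics import mean

# Hypothetical ratings: each expert marks whether a risk is systemic and
# gives it a 1-5 Likert score. Illustrative numbers only.
RATINGS = {
    "risk_1": [(True, 4), (True, 3)],
    "risk_2": [(True, 4), (False, 3)],
    "risk_3": [(False, 2), (False, 3)],
}


def summarise(ratings):
    """Return (share of risks classified systemic, mean Likert score).

    Assumption: a risk counts as systemic only if a strict majority of
    experts flag it, so a tie does not count."""
    systemic = [
        sum(flag for flag, _ in votes) > len(votes) / 2 for votes in ratings.values()
    ]
    share = sum(systemic) / len(systemic)
    avg = mean(score for votes in ratings.values() for _, score in votes)
    return share, avg


share, avg = summarise(RATINGS)
print(f"{share:.0%} systemic, mean score {avg:.2f}")
```

The tie-handling rule visibly changes the result: with a "at least half" rule, `risk_2` would also count as systemic.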

The Road Ahead: Regulation, Diversity, and Responsible AI

The European Union’s AI Act signifies a landmark attempt to proactively govern the development and deployment of artificial intelligence. This comprehensive legal framework moves beyond simply reacting to harms after they occur, instead establishing a risk-based approach to AI regulation. It categorizes AI systems based on their potential to cause harm – from minimal risk to unacceptable risk – and outlines corresponding requirements. High-risk applications, such as those used in critical infrastructure, healthcare, and law enforcement, face stringent obligations regarding transparency, data governance, human oversight, and accuracy. By establishing clear legal boundaries and accountability mechanisms, the Act aims to foster innovation while simultaneously safeguarding fundamental rights, promoting trust, and ensuring responsible AI practices across the European market. It serves as a potential global model for balancing technological advancement with ethical considerations and societal well-being.

Reliance on a single artificial intelligence model, even one rigorously tested, presents inherent limitations in risk assessment and bias mitigation. Algorithmic pluralism, the strategic deployment of diverse AI models, offers a robust solution by leveraging differing approaches to problem-solving. Each model, trained on varied datasets and employing unique architectures, brings a distinct perspective, effectively broadening the scope of identified risks and reducing the potential for systematic errors. This approach isn’t simply about averaging predictions; it’s about creating a portfolio of intelligences, where the strengths of one model compensate for the weaknesses of another, leading to more comprehensive and reliable outcomes. By embracing a multiplicity of algorithms, systems can move beyond the limitations of any single perspective and achieve a more nuanced understanding of complex challenges.
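The "portfolio rather than average" idea can be sketched by pooling the risks raised by several models and counting agreement, so that a risk raised by only one model is kept rather than averaged away. The model names and outputs below are hypothetical.

```python
from collections import Counter

# Hypothetical outputs from three different models for the same scenario.
OUTPUTS = {
    "model_a": ["Feedback loops in pricing", "Privacy leakage"],
    "model_b": ["Privacy leakage", "Loss of human oversight"],
    "model_c": ["privacy leakage"],
}


def pool(outputs):
    """Pool risks across models instead of averaging them.

    Returns (agreement, missed): how many models raised each risk, and for
    each model the pooled risks it did not raise on its own."""
    per_model = {m: {r.strip().lower() for r in risks} for m, risks in outputs.items()}
    agreement = Counter(r for risks in per_model.values() for r in risks)
    missed = {m: set(agreement) - risks for m, risks in per_model.items()}
    return agreement, missed


agreement, missed = pool(OUTPUTS)
print(agreement.most_common())
print(missed["model_c"])  # risks model_c alone would have overlooked
```

The agreement count signals consensus, while the `missed` sets show concretely how each model's blind spots are covered by the others.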

A robust approach to identifying and mitigating the risks of artificial intelligence calls for a ‘Hybrid Foresight Workflow’ that integrates the strengths of both AI and human intelligence. Rather than relying solely on automated systems, this workflow combines AI-generated risk assessments with the judgment and contextual understanding of human experts. The AI component rapidly explores a large space of potential vulnerabilities, while human experts validate these findings, identify blind spots, and incorporate qualitative factors that algorithms may overlook. The combination aims to improve both the accuracy and the completeness of risk identification, producing a more reliable framework for anticipating challenges, implementing safeguards proactively, fostering trust, and realizing the benefits of artificial intelligence.
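The division of labour in a hybrid workflow can be sketched as two stages: agents propose candidates, experts score them, and experts add what the agents missed. The threshold of 3.0 and the use of a mean expert score are assumptions made for illustration, and the risks and scores are hypothetical.

```python
from statistics import mean


def hybrid_foresight(candidates, expert_scores, keep_threshold=3.0, expert_additions=()):
    """Agents supply breadth, experts supply judgment.

    candidates: agent-generated risks (may contain duplicates).
    expert_scores: callable returning experts' 1-5 scores for one risk.
    Returns (validated, blind_spots): candidates whose mean expert score meets
    the threshold, best first, plus risks experts added that agents missed."""
    validated = []
    for risk in dict.fromkeys(candidates):  # de-duplicate, keep first-seen order
        score = mean(expert_scores(risk))
        if score >= keep_threshold:
            validated.append((risk, score))
    validated.sort(key=lambda pair: pair[1], reverse=True)
    seen = set(candidates)
    blind_spots = [r for r in expert_additions if r not in seen]
    return validated, blind_spots


# Hypothetical agent output and expert scores.
SCORES = {
    "Companion outage isolates users": [4, 5, 4],
    "Chatbots replace all doctors": [1, 2, 1],
    "Biased grief-support advice": [3, 3, 4],
}
validated, blind_spots = hybrid_foresight(
    list(SCORES),
    SCORES.__getitem__,
    expert_additions=["Insurance exclusions for AI-advised care", "Biased grief-support advice"],
)
```

The implausible candidate is filtered out by expert judgment, while the expert-added risk is reported separately as a blind spot instead of being silently merged.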

Evaluations in the study also suggest that AI-driven risk assessment can surface threats beyond those typically recognized by human analysts. Agent-generated risks scored between 2.99 and 3.24 on a 5-point novelty scale, around the scale midpoint, indicating that they are not simply restating known concerns. This suggests that artificial intelligence can help uncover previously unanticipated vulnerabilities, a useful advantage in fields that demand comprehensive foresight. The ability to identify such risks is a step toward more robust and adaptable systems, and it highlights the potential of AI to complement human capabilities in proactive risk management, particularly in breadth of coverage.

The pursuit of identifying systemic risks with AI, as outlined in this research, feels predictably fragile. The study posits a method – combining AI agents for breadth with human judgment – to anticipate future AI-related issues. It’s a beautifully constructed attempt to tame chaos, yet one inherently destined for iterative refinement. As Andrey Kolmogorov observed, “The most important discoveries are often the most simple.” This simplicity, however, belies the complexity of production environments. Every abstraction, even one built on human-AI collaboration for foresight, will eventually encounter an unforeseen edge case, a flaw in the model, or simply the unpredictable nature of real-world application. The research offers a structured approach, but it’s a structure built on shifting sands, acknowledging that the landscape of AI risk is ever-evolving and demands constant adaptation.

So, What Breaks Next?

This exercise in algorithmic foresight – pairing large language models with the dwindling supply of actual experts – feels less like innovation and more like a sophisticated game of ‘spot the impending disaster’ played at scale. The research suggests a broader, if not deeper, scan for systemic risks. Naturally, that breadth will quickly become a deluge. The signal-to-noise ratio will plummet, and someone, somewhere, will inevitably declare the system ‘working as intended’ while ignoring the flashing red lights. It’s the way of things.

The real challenge isn’t generating a list of potential failures – any sufficiently complex system will fail – but in prioritizing them. And that prioritization, predictably, will be a political process, informed by budgets, deadlines, and a fundamental misunderstanding of exponential curves. The current framework treats human judgment as a filter. A more cynical – and probably accurate – view is that it’s a delay mechanism, postponing unpleasant truths until after someone has committed to a quarterly earnings report.

Ultimately, this is just another iteration of a very old problem: predicting the future is hard. Especially when that future involves technologies we barely understand. Everything new is old again, just renamed and still broken. The question isn’t whether the system will identify risks, but which risk will be the first to prove that even the most elegant model is merely a useful fiction.

Original article: https://arxiv.org/pdf/2602.08565.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-10 11:48