Author: Denis Avetisyan

As artificial intelligence moves beyond conventional deep learning, current regulatory frameworks struggle to address the unique challenges posed by brain-inspired computing architectures.

This review argues that governing NeuroAI and neuromorphic systems requires a shift towards adaptive, event-driven governance frameworks.

Current artificial intelligence governance frameworks, designed for static neural networks on conventional hardware, face increasing limitations as computational paradigms shift. This is the central challenge explored in ‘Governance at the Edge of Architecture: Regulating NeuroAI and Neuromorphic Systems’, which examines the inadequacy of existing regulatory metrics, which focus on benchmarks like FLOPs, when applied to the adaptive, event-driven dynamics of NeuroAI systems implemented on neuromorphic hardware. The paper argues that technically grounded assurance requires a co-evolution of governance methods aligned with the unique physics and learning processes of brain-inspired computation. Can we develop governance frameworks that effectively navigate the complexities of these novel architectures and unlock their full potential while ensuring responsible innovation?

Beyond Computation: Embracing Efficiency in Artificial Intelligence

Contemporary artificial intelligence, despite its impressive capabilities, often struggles with energy consumption and a rigidity that limits its response to novel situations. This inefficiency stems, surprisingly, from the foundational architecture underpinning most digital computers – the von Neumann architecture. This design, conceived decades ago, fundamentally separates processing units from memory, requiring data to constantly travel between the two. This ‘von Neumann bottleneck’ consumes vast amounts of energy and hinders the system’s ability to learn and adapt in real time the way a human brain does. Current AI systems, therefore, often require immense datasets and processing power to achieve even basic cognitive tasks, highlighting a critical need to move beyond this traditional computing model and explore more biologically inspired approaches.

NeuroAI represents a significant departure from traditional artificial intelligence, shifting the focus from raw computational power to the principles of biological efficiency. Inspired by the human brain’s remarkable ability to process information with minimal energy consumption, this emerging field seeks to emulate neural structures and functions in AI systems. Rather than relying on the sequential processing of the von Neumann architecture, NeuroAI explores parallel, distributed computation, mimicking the interconnected network of neurons. This biologically-inspired approach utilizes spiking neural networks, memristors, and other novel technologies to create AI that learns and adapts more like the brain, offering the potential for drastically reduced energy consumption and enhanced adaptability in complex environments. The goal is not simply to build faster computers, but to fundamentally rethink how AI processes information, paving the way for truly intelligent and sustainable systems.

Conventional artificial intelligence development has largely focused on escalating computational capacity (faster processors, more memory), yet this approach is reaching its limits. NeuroAI departs from that trajectory by asking how information is processed rather than how many calculations are performed per second. In practice, this means exploring spiking neural networks, memristive computing, and neuromorphic architectures that prioritize parallel processing, energy conservation, and adaptive learning, with the aim of building systems that are not only powerful but also efficient and adaptable in the way biological intelligence is.

Neuromorphic Architectures: Emulating the Brain’s Design

Neuromorphic computing diverges from the von Neumann architecture that underpins most modern computers by directly implementing principles observed in biological neural networks. Conventional systems separate processing and memory, leading to data transfer bottlenecks; neuromorphic designs integrate these functions, mirroring the synaptic connections and distributed processing of the brain. This is achieved through the use of artificial neurons and synapses, often implemented in analog or mixed-signal circuits, allowing for parallel and event-driven computation. Unlike digital computers that operate on discrete time steps, neuromorphic systems can process information continuously and asynchronously, potentially enabling lower latency and greater efficiency for tasks like sensory processing and pattern recognition.

Spiking Neural Networks (SNNs) represent a significant shift from traditional Artificial Neural Networks by more closely mimicking biological neurons. Instead of transmitting information via continuous values, SNNs communicate through discrete “spikes” – brief pulses of electrical activity. This event-driven processing means computations only occur when a neuron receives sufficient input to reach a threshold and “fire,” resulting in sparse and efficient computation. Furthermore, SNNs inherently incorporate the timing of these spikes, allowing information to be encoded not just in the rate of firing, but also in the precise temporal relationships between spikes, enabling the modeling of complex temporal dynamics and potentially greater computational power with lower energy consumption.
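The threshold-and-fire behavior described above can be illustrated with a leaky integrate-and-fire neuron, a common textbook model. This is a minimal sketch, not the model used in the paper: the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions chosen for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron (illustrative only).

    Returns the time steps at which the neuron emitted a spike.
    All parameter values are hypothetical.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # emit a discrete spike event
            potential = reset      # reset the membrane potential after firing
    return spike_times


# Sparse input: the neuron stays silent until enough input accumulates.
inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.0, 0.9, 0.5]
spikes = simulate_lif(inputs)
print(spikes)
```

The output is a short list of spike times rather than a value per time step, which is the sense in which the computation is sparse and in which timing itself carries information.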

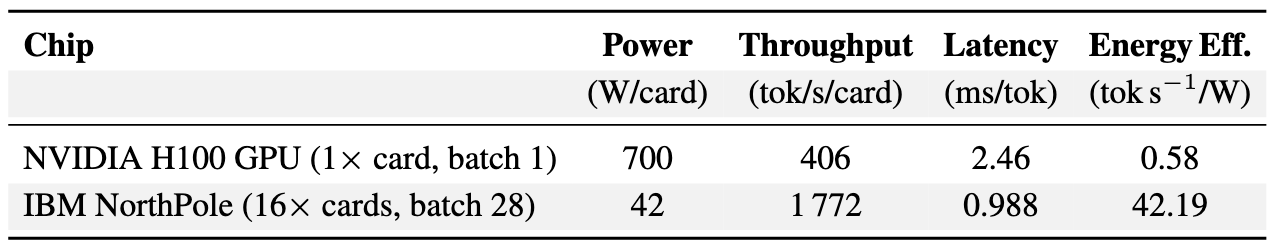

Neuromorphic computing reduces energy consumption relative to conventional digital architectures by keeping memory close to compute and, in many designs, by computing in the analog domain. For example, the NorthPole neuromorphic processor has reportedly demonstrated approximately 72.7 times greater energy efficiency than an NVIDIA H100 GPU while maintaining comparable accuracy on benchmark tasks. This efficiency gain stems largely from in-memory computation, which minimizes data movement and the associated energy costs of the frequent memory accesses characteristic of conventional systems. Analog designs go a step further by representing neural network parameters and operations directly as physical quantities, resulting in significant power savings and enabling deployment in resource-constrained environments.

Neuromorphic systems, unlike traditional von Neumann architectures requiring retraining for new data, are designed for continuous learning and adaptation. This capability stems from their ability to modify synaptic weights based on incoming spike patterns, mirroring biological plasticity. This inherent adaptability is crucial for real-world applications involving non-stationary data – environments where inputs change over time – such as autonomous robotics, real-time object recognition in dynamic scenes, and adaptive sensor processing. The ability to learn incrementally without catastrophic forgetting, a common issue in artificial neural networks, enables these systems to operate effectively in unpredictable and evolving environments, reducing the need for periodic model updates and associated downtime.
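One widely studied rule for modifying synaptic weights from spike patterns is pair-based spike-timing-dependent plasticity (STDP). The article does not name a specific learning rule, so the sketch below is an assumption: the function names `stdp_delta` and `apply_stdp`, the amplitudes `a_plus` and `a_minus`, and the time constant `tau` are all hypothetical.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (pair-based STDP).

    dt = t_post - t_pre in milliseconds. Constants are illustrative.
    """
    if dt > 0:
        # Pre-synaptic spike preceded the post-synaptic spike: strengthen.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post-synaptic spike came first: weaken.
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Accumulate STDP updates over all spike pairs, then clip the weight."""
    for t_pre in pre_times:
        for t_post in post_times:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))


strengthened = apply_stdp(0.5, pre_times=[10.0], post_times=[15.0])
weakened = apply_stdp(0.5, pre_times=[15.0], post_times=[10.0])
print(strengthened, weakened)
```

Because each update depends only on local spike times, the weight can change while the system is running, which is what makes continuous adaptation possible and also what makes it harder to audit.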

Standardizing Assessment: Metrics for Neuromorphic Performance

NeuroBench is a comprehensive benchmarking suite designed to address the lack of standardized evaluation procedures for neuromorphic computing systems. It provides a unified platform for assessing the performance of neuromorphic algorithms, models, and hardware across a range of tasks, including image recognition, event classification, and signal processing. The framework includes a defined set of datasets, performance metrics, and evaluation protocols, facilitating reproducible research and fair comparisons between different neuromorphic implementations. Specifically, NeuroBench supports both functional and resource-based metrics, enabling analysis of accuracy, latency, energy efficiency, and other critical performance characteristics. Its modular design allows researchers to easily integrate new datasets, algorithms, and hardware platforms, ensuring its continued relevance as the field of neuromorphic computing evolves.

The Weight-Change Ratio (WCR) is a critical metric for assessing the operational characteristics and security of neuromorphic systems. It quantifies the degree to which a model’s synaptic weights are modified during inference, reflecting the system’s plasticity and adaptation to input data. A high WCR may indicate successful learning or adaptation, but also raises concerns about potential adversarial manipulation or unintended consequences of continuous learning. Conversely, a consistently low WCR could suggest a static model or a system operating within expected parameters. Monitoring WCR provides a mechanism to detect anomalies, identify potential tampering attempts, and ensure the integrity and reliability of neuromorphic computations, particularly in security-sensitive applications.
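The article describes WCR only qualitatively, so the following is a sketch under a stated assumption: WCR is taken here to be the total absolute weight change divided by the total absolute magnitude of the baseline weights. The function names and the `threshold` value are hypothetical and are not taken from the paper.

```python
def weight_change_ratio(before, after, eps=1e-12):
    """Assumed WCR: sum(|after - before|) / sum(|before|).

    `before` and `after` are flat sequences of synaptic weights
    captured at two points in time.
    """
    if len(before) != len(after):
        raise ValueError("weight snapshots must have the same length")
    change = sum(abs(a - b) for a, b in zip(after, before))
    scale = sum(abs(b) for b in before)
    return change / (scale + eps)  # eps avoids division by zero


def exceeds_threshold(before, after, threshold=0.05):
    """Flag a snapshot pair whose WCR is above an illustrative limit."""
    return weight_change_ratio(before, after) > threshold


baseline = [0.5, -0.25, 1.0, 0.25]
drifted = [0.6, -0.25, 0.9, 0.25]
wcr = weight_change_ratio(baseline, drifted)
print(wcr, exceeds_threshold(baseline, drifted))
```

A monitor of this kind would compare periodic weight snapshots and raise an alert when the ratio leaves an expected band. What counts as "expected" depends on the deployment and is not something this sketch can specify.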

Neuromorphic systems, when integrated with event-driven computing paradigms, present significant advantages for edge computing applications. Performance benchmarks indicate these systems achieve a 2.5x reduction in latency compared to the NVIDIA H100 GPU when processing equivalent workloads. This improvement stems from the event-driven approach, which processes only actively changing data, reducing computational overhead and power consumption. The combination allows for faster response times and more efficient resource utilization in scenarios requiring real-time processing at the network edge, such as autonomous vehicles or sensor networks.

Neuromorphic hardware demonstrates significant energy efficiency gains when operating on resource-constrained devices. Specifically, inference on the MNIST dataset requires only 0.0025 mJ of energy using neuromorphic systems. This contrasts sharply with conventional GPU-based inference, which consumes between 10 and 30 mJ for the same task. Taken at face value, these figures represent a reduction of more than three orders of magnitude (roughly 4,000 to 12,000 times less energy), making neuromorphic computing particularly well-suited for deployment in edge computing applications and battery-powered devices where energy conservation is paramount.
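As a quick check on the arithmetic, the quoted energy figures can be turned into a reduction factor. The numbers below are the ones stated in the text. The helper name `reduction_factor` is introduced here for illustration.

```python
import math

NEUROMORPHIC_MJ = 0.0025             # quoted MNIST inference energy
GPU_MJ_LOW, GPU_MJ_HIGH = 10.0, 30.0  # quoted GPU inference energy range


def reduction_factor(baseline_mj, candidate_mj):
    """How many times less energy the candidate uses than the baseline."""
    return baseline_mj / candidate_mj


low = reduction_factor(GPU_MJ_LOW, NEUROMORPHIC_MJ)
high = reduction_factor(GPU_MJ_HIGH, NEUROMORPHIC_MJ)

print(f"reduction: {low:.0f}x to {high:.0f}x")
print(f"orders of magnitude: {math.log10(low):.1f} to {math.log10(high):.1f}")
```

The ratio works out to roughly 4,000x at the low end and 12,000x at the high end, which is between three and four orders of magnitude.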

Responsible Innovation: Governing the Future of AI

Global efforts to establish frameworks for trustworthy artificial intelligence are rapidly coalescing, most notably through the European Union’s AI Act and the U.S. National Institute of Standards and Technology (NIST) AI Risk Management Framework. These initiatives represent a shift from self-regulation to a more formalized approach, aiming to mitigate potential harms associated with AI systems while fostering innovation. The EU AI Act establishes a tiered, risk-based system that categorizes AI applications and imposes stringent requirements on high-risk systems, meaning those that affect fundamental rights or safety. Simultaneously, the NIST framework offers flexible, voluntary guidance designed to help organizations manage risks throughout the lifecycle of an AI product, from design and development to deployment and monitoring. Though differing in their legal weight and implementation, both frameworks emphasize principles like transparency, accountability, and robustness, signaling a growing international consensus on the necessity of responsible AI development and deployment.

Artificial Neural Networks, while powerful, operate as complex ‘black boxes’, making understanding their decision-making processes challenging. Consequently, detailed dataset documentation and rigorous weight inspection are becoming indispensable for establishing trust and accountability in these systems. Dataset documentation involves meticulously recording the origin, characteristics, and potential biases within the training data – crucial for identifying and mitigating unfair or discriminatory outcomes. Simultaneously, weight inspection, though computationally intensive, allows researchers to analyze the learned parameters within the network, offering insights into which features the model prioritizes and how it arrives at specific conclusions. This dual approach – understanding the data and the model itself – isn’t simply about debugging; it’s about fostering transparency, enabling reproducibility, and ultimately, ensuring that these increasingly pervasive technologies align with ethical principles and societal values.

Recent developments in China’s approach to artificial intelligence safety demonstrate a noteworthy alignment with international efforts towards responsible innovation. The newly established AI Safety Governance Framework, while rooted in China’s unique regulatory landscape, echoes principles already gaining traction in frameworks like the EU AI Act and the U.S. NIST AI Risk Management Framework. This convergence centers on crucial aspects such as algorithmic transparency, data security, and the mitigation of potential societal harms. The framework emphasizes pre-market safety assessments and ongoing monitoring of AI systems, particularly those with potential for widespread impact. This shared focus signals a growing global consensus: that proactive governance is not simply a constraint on AI development, but rather an essential ingredient for fostering public trust and realizing the technology’s transformative potential in a safe and ethical manner.

While Floating Point Operations Per Second (FLOPs) have historically served as a primary metric for gauging computational power, a singular focus on this measure is increasingly inadequate for evaluating modern artificial intelligence systems. Contemporary research demonstrates that true computational capability isn’t solely about raw speed, but also about how efficiently that speed is achieved and the system’s capacity to respond to changing conditions. Energy consumption during computation is a critical factor, as the environmental impact and operational costs of large-scale AI deployments become increasingly significant. Furthermore, adaptability, the ability of a model to generalize to new, unseen data or tasks with minimal retraining, represents a crucial dimension of intelligence that FLOPs fail to capture. Consequently, the field is actively developing complementary metrics, including energy-per-FLOP, benchmark performance on diverse datasets, and measures of transfer learning efficiency, to provide a more holistic and nuanced understanding of AI system capabilities and to drive innovation towards sustainable and resilient artificial intelligence.

The pursuit of governance for NeuroAI demands a recalibration of established paradigms. Current frameworks, predicated on assessing computational load via metrics like FLOPs, prove inadequate when confronted with the asynchronous, event-driven nature of neuromorphic systems. This necessitates a shift toward regulating adaptive behavior itself, rather than simply quantifying computational throughput. A line attributed to Claude Shannon makes the point: “The most important thing in communication is to convey the meaning, not merely the signal.” The principle applies directly here; focusing solely on the ‘signal’ – the raw computational activity – misses the crucial ‘meaning’ embodied in the system’s adaptive responses and emergent intelligence. Effective governance must prioritize understanding how these systems respond, not just how much they compute.

Beyond Counting Steps

The pursuit of governance for NeuroAI and neuromorphic systems reveals a fundamental discomfort: the tendency to regulate by enumeration. Current frameworks cling to metrics (FLOPs, data throughput) as if complexity can be contained by counting. This paper demonstrates the inadequacy of such approaches. The adaptive, event-driven nature of these systems resists quantification in conventional terms. It is not a matter of refining the count, but of abandoning it.

The central, unresolved problem remains the evaluation of ‘intelligence’ divorced from demonstrable task completion. Neuromorphic systems may exhibit behaviors that defy simple categorization, learning and adapting in ways opaque to traditional testing. The field must confront the possibility that some forms of intelligence are not readily ‘knowable’ through external observation. A shift in focus, from what a system does to how it changes, is essential, though not easily achieved.

Future work will likely circle the question of ‘trust.’ But trust, in this context, is not a feature to be engineered, but a consequence of understandable limitations. The goal should not be to build ‘safe’ AI, but to build AI whose boundaries are clearly defined and whose failures are predictable, even if those failures are novel. The simplicity of a known constraint is far preferable to the illusion of comprehensive control.

Original article: https://arxiv.org/pdf/2602.01503.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-04 02:14