Author: Denis Avetisyan

A new framework leverages artificial intelligence to enhance the stability and optimization of power grids facing growing threats and disturbances.

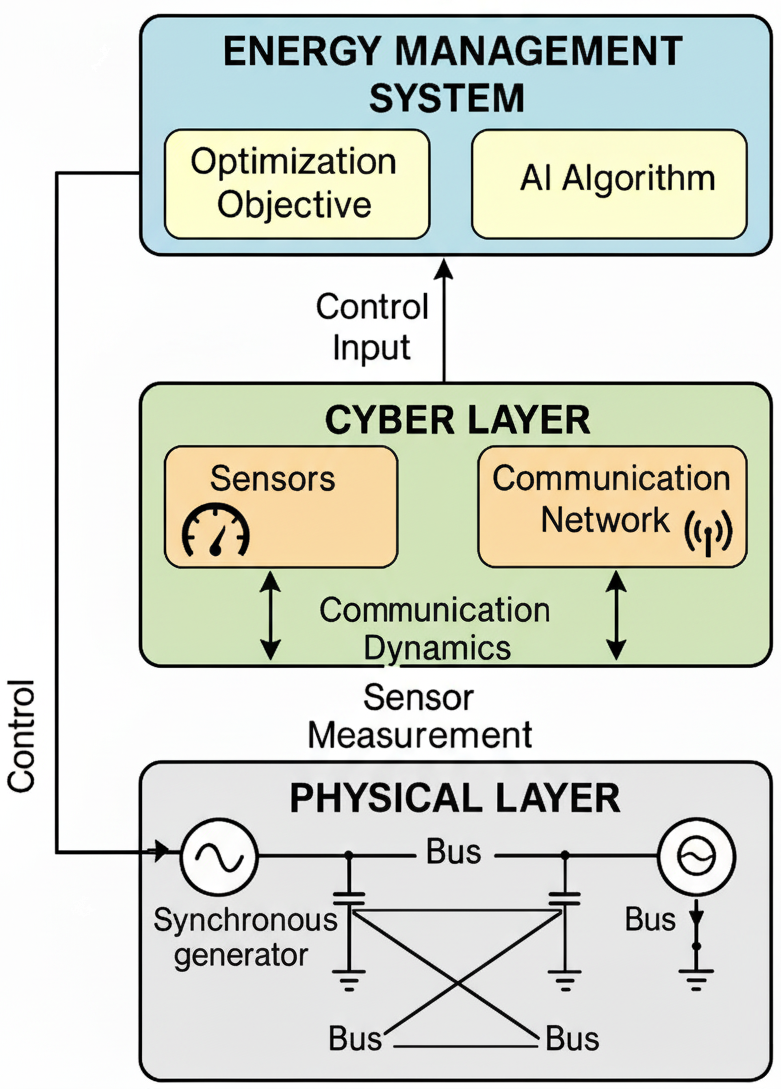

This review details a hybrid cyber-physical system integrating adaptive dynamic programming, reinforcement learning, and deep neural networks for robust smart grid control.

While modern smart grids promise increased efficiency and flexibility, their inherent cyber-physical nature introduces vulnerabilities that threaten stability and reliability. This paper introduces ‘An AI-Enabled Hybrid Cyber-Physical Framework for Adaptive Control in Smart Grids’, presenting a cloud-based, machine learning-driven forensic system designed for real-time anomaly detection, event reconstruction, and intrusion analysis. Through the integration of supervised and unsupervised learning algorithms, the framework demonstrates high accuracy and scalability in mitigating threats like data tampering and false-data injection. Could this approach represent a paradigm shift toward proactive, intelligent incident response for critical energy infrastructure?

The Evolving Grid: Complexity and the Pursuit of Stability

Contemporary power grids are rapidly evolving into intricate cyber-physical systems, driven by the imperative to integrate renewable energy sources and accommodate increasingly volatile load demands. Historically designed for unidirectional power flow from centralized generators, these grids now grapple with bidirectional energy exchange and the intermittent nature of solar and wind power. This shift introduces significant complexity, as grid operators must continuously balance supply and demand while managing fluctuating inputs. Furthermore, real-time monitoring and control become paramount, necessitating advanced communication networks and computational infrastructure to process the vast amounts of data generated by distributed energy resources and dynamic consumer behavior. The resulting interconnectedness, while enabling greater efficiency and sustainability, also presents novel challenges for maintaining grid stability and resilience in the face of unforeseen disturbances and growing energy consumption – a challenge that requires a fundamental rethinking of grid architecture and control strategies.

The increasing sophistication of modern power grids, while enabling greater efficiency and renewable energy integration, simultaneously introduces a spectrum of vulnerabilities to system disturbances. These disturbances extend beyond simple component failures – a transformer overheating, for example – to encompass deliberate and insidious attacks. Of particular concern is the False Data Injection Attack, where malicious actors compromise grid sensors and manipulate reported values, potentially causing cascading failures or even blackouts. Such attacks exploit the grid’s reliance on accurate data for control and protection, and their subtlety makes detection exceedingly difficult. The interconnected nature of these cyber-physical systems means a localized disturbance, whether accidental or malicious, can propagate rapidly, demanding robust security measures and advanced anomaly detection algorithms to maintain grid resilience and prevent widespread disruption.
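Why a False Data Injection Attack is hard to detect can be illustrated with a toy least-squares state estimator. The sketch below is not the framework's detector: it assumes a single scalar state, a hypothetical four-sensor measurement vector `h`, and made-up noise values. It compares a naive attack on a single sensor with an attack that shifts every reading consistently with the measurement model.

```python
import math

def estimate(h, z):
    """Least-squares estimate of a scalar state x from measurements z ≈ h * x."""
    return sum(hi * zi for hi, zi in zip(h, z)) / sum(hi * hi for hi in h)

def residual_norm(h, z):
    """Euclidean norm of the measurement residual z - h * x_hat."""
    x_hat = estimate(h, z)
    return math.sqrt(sum((zi - hi * x_hat) ** 2 for hi, zi in zip(h, z)))

# Hypothetical measurement model: four sensors observing one state.
h = [1.0, 2.0, -1.0, 0.5]
x_true = 1.02
noise = [0.01, -0.02, 0.015, -0.005]
z = [hi * x_true + ni for hi, ni in zip(h, noise)]

# Naive attack: tamper with a single sensor reading.
z_naive = list(z)
z_naive[1] += 0.5

# Stealthy attack: shift every reading consistently with the model (a = h * c).
c = 0.1
z_stealth = [zi + hi * c for hi, zi in zip(h, z)]

r_clean = residual_norm(h, z)
r_naive = residual_norm(h, z_naive)
r_stealth = residual_norm(h, z_stealth)
x_shift = estimate(h, z_stealth) - estimate(h, z)

print(f"residual clean={r_clean:.4f} naive={r_naive:.4f} stealth={r_stealth:.4f}")
print(f"state estimate shifted by {x_shift:.4f} under the stealthy attack")
```

In this toy setting the naive attack inflates the residual and would trip a threshold-based bad-data check, while the model-consistent attack leaves the residual unchanged and still biases the state estimate by `c`. That gap is one reason residual checks alone are usually considered insufficient.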

The reliable operation of modern power grids hinges on sophisticated control strategies capable of responding to ever-shifting conditions. Voltage stability, a critical aspect of grid health, is particularly susceptible to disturbances caused by fluctuating renewable energy sources and unpredictable load demands. Traditional control methods often struggle with this dynamism, necessitating the implementation of intelligent systems that proactively anticipate and mitigate potential issues. These advanced controls utilize real-time data analysis and predictive modeling, sometimes employing techniques like Model Predictive Control, to dynamically adjust grid parameters, reroute power flow, and maintain voltage within acceptable limits. Such adaptability is not merely about reacting to failures; it’s about proactively optimizing performance and bolstering resilience against a wide spectrum of potential disruptions, ensuring a consistently stable and dependable power supply.

Adaptive Control Through Reinforcement Learning: A Paradigm Shift

Reinforcement Learning (RL) provides a framework for creating adaptive control policies within smart grid environments by enabling an agent to learn optimal actions through interaction with a simulated or real-world system. Unlike traditional control methods that rely on predefined models, model-free RL algorithms do not require explicit system identification; instead, they learn directly from experience, guided by a reward signal that quantifies the performance of each control action. This is particularly valuable in smart grids because of their inherent complexity, the stochasticity of renewable energy sources, and dynamic load profiles. The agent iteratively refines its policy (a mapping from states to actions) to maximize cumulative reward, adapting to changing grid conditions and optimizing performance metrics such as energy efficiency, operating cost, and grid stability.
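The agent, reward, and policy loop described above can be sketched with tabular Q-learning on a toy voltage-regulation task. Everything in the environment is an assumption made for illustration: the five discretised deviation levels, the three actions, the disturbance probabilities, and the hyperparameters. None of it comes from the paper.

```python
import random

DEVIATIONS = [-2, -1, 0, 1, 2]   # discretised voltage deviation (hypothetical units)
ACTIONS = [-1, 0, 1]             # lower, hold, or raise the regulating device

def step(dev, action, rng):
    """Toy grid model: the action shifts the deviation, then a random disturbance hits."""
    disturbance = rng.choices([-1, 0, 1], weights=[0.1, 0.8, 0.1])[0]
    next_dev = max(-2, min(2, dev + action + disturbance))
    reward = -abs(next_dev)       # reward is highest when the deviation is zero
    return next_dev, reward

def train(episodes=2000, horizon=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration and random episode starts."""
    rng = random.Random(seed)
    q = {(d, a): 0.0 for d in DEVIATIONS for a in ACTIONS}
    for _ in range(episodes):
        dev = rng.choice(DEVIATIONS)
        for _ in range(horizon):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                        # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(dev, a)])    # exploit
            next_dev, reward = step(dev, action, rng)
            best_next = max(q[(next_dev, a)] for a in ACTIONS)
            # Temporal-difference update toward reward + discounted best next value.
            q[(dev, action)] += alpha * (reward + gamma * best_next - q[(dev, action)])
            dev = next_dev
    return q

q_table = train()
policy = {d: max(ACTIONS, key=lambda a: q_table[(d, a)]) for d in DEVIATIONS}
print(policy)
```

The agent never sees the transition rule inside `step`; it only observes states and rewards. The learned greedy policy should push the deviation back toward zero. A real grid has far too many states for a table, which is where function approximation comes in.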

Adaptive Dynamic Programming (ADP) and Proximal Policy Optimization (PPO) are presented here as model-free reinforcement learning methods that allow agents to learn control policies through iterative interaction with an environment. Both techniques utilize Value Function Approximation (VFA) to estimate the long-term reward associated with different states and actions, enabling generalization to unseen states. ADP methods typically solve the Bellman equation iteratively, while PPO updates the policy with a clipped surrogate objective, a simpler stand-in for an explicit trust-region constraint that keeps learning stable by limiting how far the policy can move in each iteration. The core principle involves an agent receiving rewards for actions and adjusting its policy to maximize cumulative reward, learning a strategy through trial and error without requiring an explicit system model. These methods approximate the optimal value function $V^*(s)$ or the optimal policy $\pi^*(s)$ to manage the complexity of large state spaces.
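For reference, the two objects this paragraph relies on can be written out in their standard textbook forms. The notation is an assumption, since the article does not give the paper's own formulation: $r(s,a)$ is the reward, $\gamma$ the discount factor, $\hat{A}_t$ an advantage estimate, and $\epsilon$ the clipping range. The Bellman optimality equation that ADP-style methods solve iteratively is

$$
V^*(s) = \max_{a} \, \mathbb{E}\left[ r(s,a) + \gamma V^*(s') \mid s, a \right]
$$

and the clipped surrogate objective that PPO maximizes is

$$
L^{\mathrm{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[ \min\left( \rho_t(\theta)\hat{A}_t,\ \operatorname{clip}\big(\rho_t(\theta), 1-\epsilon, 1+\epsilon\big)\hat{A}_t \right) \right], \qquad \rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)}
$$

The clip removes any incentive to move the probability ratio $\rho_t$ outside $[1-\epsilon, 1+\epsilon]$, which is how PPO limits the size of each policy update.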

Reinforcement learning algorithms exhibit scalability for implementation across different hierarchical levels within smart grid control systems. Specifically, Deep Q-Networks (DQN) are suitable for edge control applications, enabling localized decision-making based on immediate sensor data and acting directly on grid devices. Conversely, Proximal Policy Optimization (PPO) is better suited for cloud-level optimization due to its capacity to handle larger state and action spaces and to coordinate control actions across a wider geographical area. This tiered approach allows for a combination of rapid, localized responses from edge-based DQNs and global, optimized strategies implemented via cloud-based PPO, maximizing overall grid efficiency and stability.

Validation on the IEEE 33-Bus System: A Benchmark for Performance

The IEEE 33-Bus System is a widely adopted test case within the power systems engineering community for the development and validation of smart grid control algorithms. This benchmark consists of a 33-node radial distribution network with specific load and line characteristics, allowing for standardized performance comparisons between different control strategies. Its established parameters and publicly available data facilitate reproducible research and objective evaluation of techniques aimed at improving grid efficiency, reliability, and resilience. The system’s relatively small size allows for computationally efficient simulations while still representing the complexities of a real-world distribution network, making it ideal for testing and refining advanced control methods before deployment in larger, more complex systems.

Implementation of Adaptive Dynamic Programming, Proximal Policy Optimization, and Deep Q-Network reinforcement learning algorithms on the IEEE 33-bus system yielded measurable improvements in both performance and resilience characteristics. Specifically, these algorithms exhibited the capacity to effectively respond to system disturbances and maintain operational stability under varying load conditions. Performance was evaluated by assessing the algorithms’ ability to minimize total control cost, regulate voltage levels, and maintain frequency stability, thereby demonstrating their potential for real-world smart grid applications. The algorithms were tested against a range of simulated contingencies and load profiles to quantify their robustness and adaptability.

Implementation of the tested reinforcement learning algorithms – Adaptive Dynamic Programming, Proximal Policy Optimization, and Deep Q-Network – resulted in effective management of fluctuations within the IEEE 33-bus system, consistently maintaining a Total Control Cost between 18 and 24 units. This performance range indicates a significant capacity for mitigating system disturbances and sustaining stable operation under varying load conditions. The ability to consistently achieve this cost level suggests a viable pathway towards improving overall grid reliability and resilience by providing a quantifiable metric for evaluating control strategy effectiveness and minimizing operational expenses.

Bolstering Resilience: Mitigating Communication Disruptions and Variability

Modern smart grids, while offering increased efficiency and reliability, are inherently vulnerable to the realities of data transmission. Communication delays and packet loss – common occurrences in any network – can significantly degrade the performance of critical control actions. These disruptions arise from various sources, including network congestion, hardware failures, and even the sheer distance data must travel. Consequently, a control signal intended to stabilize the grid might arrive too late, or with incomplete information, leading to instability or even cascading failures. The timing and completeness of data are paramount; even minor communication issues can compromise the grid’s ability to respond effectively to dynamic changes in energy supply and demand, necessitating robust strategies for maintaining operational integrity amidst these inevitable communication challenges.
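The effect of late or missing control packets can be made concrete with a small simulation. This is a sketch under stated assumptions, not the paper's communication model: the scalar plant, the gain `K`, the loss probability, and the rule that the actuator idles when no command arrives are all hypothetical choices.

```python
import random

def simulate(steps=50, loss_prob=0.0, delay=0, seed=0):
    """Quadratic cost of regulating x[k+1] = A*x[k] + B*u[k] over a lossy, delayed link."""
    A, B, K = 1.1, 1.0, 0.6        # hypothetical open-loop-unstable plant and feedback gain
    rng = random.Random(seed)
    history = [1.0]                # x[0]: initial deviation from the setpoint
    cost = 0.0
    for k in range(steps):
        x = history[-1]
        cost += x * x              # accumulate squared deviation
        delivered = rng.random() >= loss_prob
        if delivered and k - delay >= 0:
            u = -K * history[k - delay]   # command computed from a stale measurement
        else:
            u = 0.0                       # dropped or not-yet-arrived command: actuator idles
        history.append(A * x + B * u)
    return cost

nominal = simulate()
lossy = simulate(loss_prob=0.3)
delayed = simulate(delay=1)
print(f"nominal={nominal:.3f} lossy={lossy:.3f} delayed={delayed:.3f}")
```

With an ideal link the deviation halves at every step. Each dropped command lets it grow by 10% instead, and a one-step delay makes the response oscillate before settling. Both raise the accumulated cost relative to the nominal run.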

The integration of Battery Energy Storage Systems (BESS) with sophisticated Reinforcement Learning (RL) strategies represents a powerful approach to bolstering grid resilience against the inherent unpredictability of renewable energy sources. Renewable generation, such as solar and wind, introduces fluctuations that can destabilize power delivery; however, a BESS acts as a crucial buffer, absorbing excess energy during periods of high production and releasing it when generation dips. This smoothing effect, intelligently managed by advanced RL algorithms, not only maintains grid frequency and voltage stability but also provides vital backup power during communication disruptions or unexpected outages. The RL component dynamically optimizes BESS charging and discharging cycles, maximizing its effectiveness in mitigating renewable intermittency and ensuring a consistently reliable power supply, effectively transforming the grid from reactive to proactive in the face of variability.
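The buffering role of a BESS can be sketched with a simple greedy dispatch rule. This rule is a stand-in for illustration only, not the RL policy described above. The capacity, power limit, initial state of charge, and surplus profile are hypothetical, and conversion losses are ignored.

```python
def dispatch(net_surplus, capacity=10.0, power_limit=3.0, soc=5.0):
    """Greedy BESS dispatch: charge on surplus, discharge on deficit, within limits.

    net_surplus[t] is renewable generation minus load for one-hour interval t
    (hypothetical values in MW; energy in MWh; losses ignored).
    Returns the residual the grid must still absorb and the state-of-charge trace.
    """
    residual, soc_trace = [], []
    for surplus in net_surplus:
        if surplus >= 0:
            power = min(surplus, power_limit, capacity - soc)   # charging power
        else:
            power = -min(-surplus, power_limit, soc)            # discharging power
        soc += power
        residual.append(surplus - power)
        soc_trace.append(soc)
    return residual, soc_trace

surplus = [2.0, 4.0, 1.0, -3.0, -5.0, -1.0, 2.0, -2.0]
residual, soc_trace = dispatch(surplus)
print(residual)    # [0.0, 1.0, 1.0, 0.0, -2.0, 0.0, 0.0, 0.0]
print(soc_trace)   # [7.0, 10.0, 10.0, 7.0, 4.0, 3.0, 5.0, 3.0]
```

The residual is nonzero only when the battery hits its power limit or is full. A learned policy could in principle do better than this myopic rule, for example by holding back capacity ahead of a forecast deficit.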

The newly developed framework exhibits remarkable stability in the face of adversity, consistently achieving a Resilience Index ranging from 0.95 to 1.0 during rigorous testing. Simulations incorporating both cyber-physical attacks and the inherent unpredictability of renewable energy sources – such as solar and wind intermittency – failed to significantly degrade performance. This sustained high index indicates the system’s robust ability to not only withstand disruptions but also to rapidly recover and maintain essential functions, effectively demonstrating strong self-healing capabilities and bolstering confidence in its real-world applicability. The consistent performance suggests a proactive design effectively mitigating the impacts of common grid vulnerabilities.

The presented framework meticulously pursues a harmonious balance between computational efficiency and provable correctness, echoing Robert Tarjan’s sentiment: “Programmers often talk about elegance, but that’s just a subjective thing. What I really care about is correctness.” This pursuit of correctness aligns directly with the article’s emphasis on resilience within the smart grid. The integration of Adaptive Dynamic Programming, Proximal Policy Optimization, and Deep Q-Networks isn’t merely about achieving operational efficiency; it’s about establishing a control system whose behavior can be rigorously analyzed and validated, ensuring predictable and reliable performance even under duress. The system’s adaptability isn’t simply a feature, but a necessity for provable stability in a complex, dynamic environment.

What’s Next?

The presented framework, while demonstrating a confluence of optimization and learning techniques, merely addresses the symptoms of complexity inherent in cyber-physical systems. The true challenge lies not in mitigating disturbances post-hoc, but in formulating a control architecture fundamentally resistant to unforeseen states. The reliance on reinforcement learning, however elegantly implemented, introduces a stochastic element – a probabilistic approximation of optimality. This is, at best, a practical concession, not a theoretical ideal. A truly robust system demands provable stability guarantees, not empirically observed resilience.

Future work must move beyond algorithmic refinement and grapple with the underlying mathematical limitations. The current approach treats the smart grid as a ‘black box’ subject to external stimuli. A more rigorous path involves developing formal models capable of capturing the system’s internal dynamics with absolute fidelity. Such models would allow for the verification of control strategies, not simply their validation through simulation. The persistent ambiguity surrounding the true nature of cyber threats further complicates matters; a provably secure system requires a complete and accurate threat model – an aspiration that remains, at present, elusive.

Ultimately, the field must acknowledge that ‘adaptability’ is often a euphemism for ‘lack of foresight’. While the presented hybrid approach represents a step towards more intelligent grid management, it is a step taken within the confines of existing approximations. The pursuit of genuine resilience necessitates a return to first principles – a relentless focus on mathematical rigor and a willingness to abandon empirical ‘solutions’ in favor of provable truths.

Original article: https://arxiv.org/pdf/2511.21590.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 14:00