We’ve all seen it – a promising online discussion quickly ruined by negativity, spam, and personal attacks. The difference between a healthy, engaging community and a toxic one isn’t chance; it’s effective comment moderation. This isn’t about silencing opinions or creating a space where everyone agrees. It’s about making a place where people can have lively discussions – like debating the best strategies in a game – without things turning hostile. This guide will show you how to build that kind of community, from dealing with criticism to creating a fair system that encourages genuine connection.

Key Takeaways

- Curate Conversations, Don’t Just Censor Them: Your goal isn’t to create a sterile echo chamber. It’s to remove genuine toxicity—like hate speech and spam—so that productive discussions and even healthy disagreements have room to thrive.

- Build Trust with Clear and Consistent Rules: A fair moderation system is predictable. When everyone knows the guidelines and sees them applied equally to all members, they feel safe to participate honestly and trust the process.

- Treat Constructive Criticism as a Gift: Learn to distinguish between trolls who just want to start fires and community members offering valuable feedback. Engaging with thoughtful criticism shows you’re listening and helps you improve, while ignoring it can cost you valuable insights.

What is Comment Moderation, Really?

Online gaming communities rely on moderators, the often-overlooked people who keep things running smoothly. Whether you’re discussing game strategies or reacting to new announcements, good moderation matters. At its core, comment moderation means reviewing what users post to make sure it follows the community’s rules. It’s not about silencing people, but about fostering a positive environment where conversations can flourish without being disrupted by unwanted content like spam or hateful comments. This work prevents communities from becoming toxic and allows for real connections and fun experiences, like the inside jokes and memes that develop around games like Honkai: Star Rail. A thriving community is an active one, and effective moderation is what makes it possible.

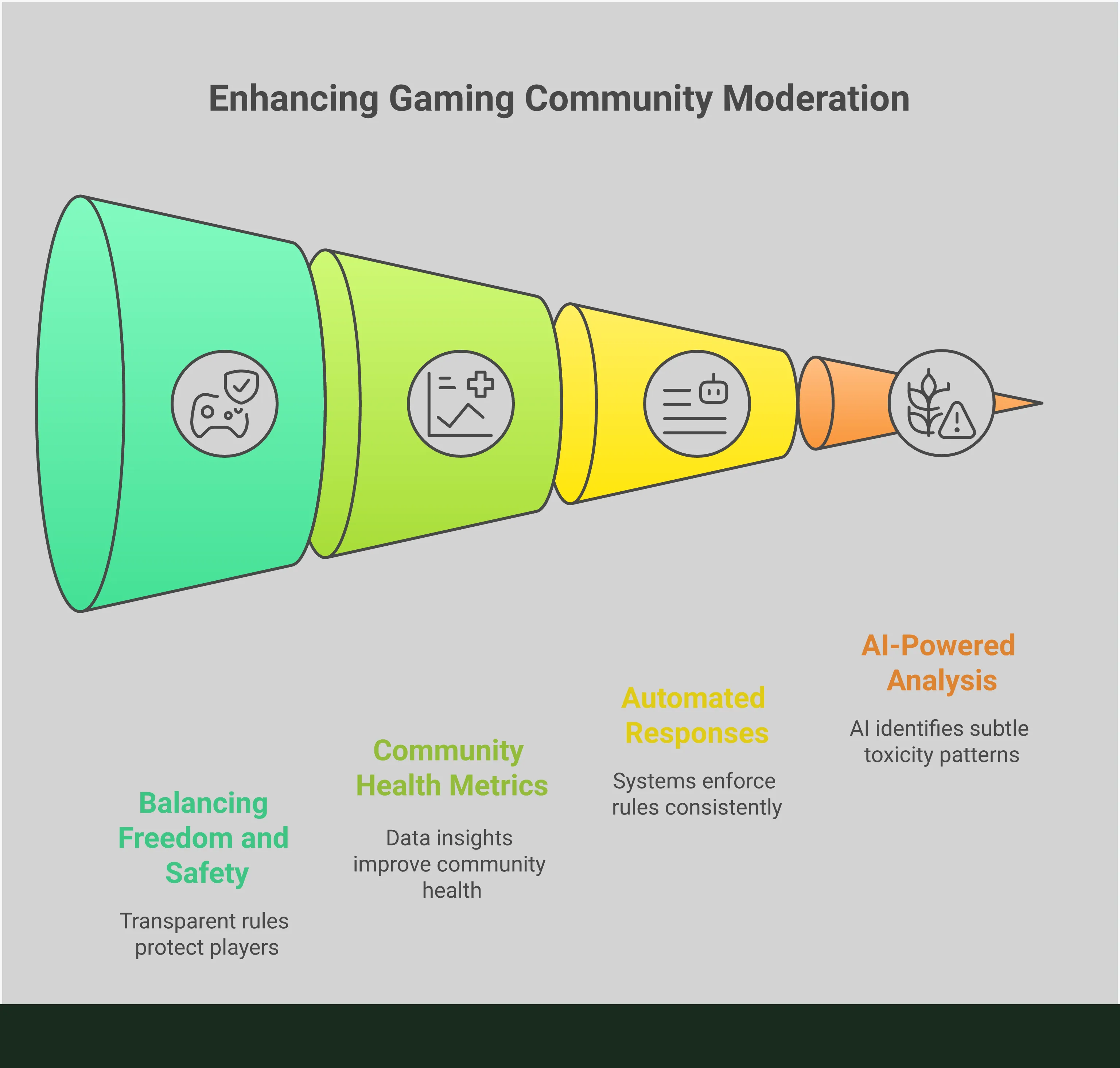

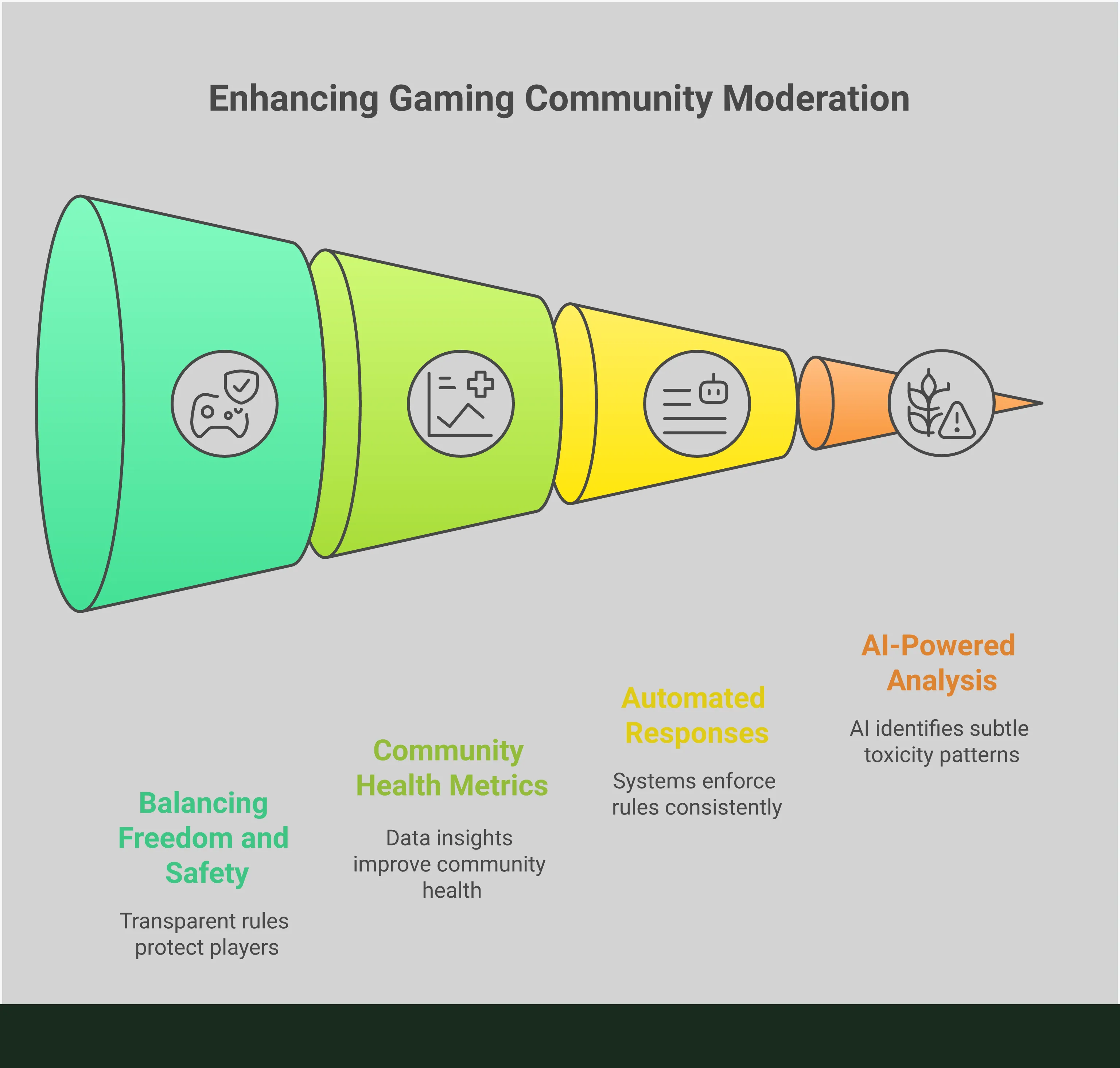

How Moderation Systems Work

Ever wonder how online platforms manage comments? It’s rarely just one person deleting things. Instead, most use a mix of automated tools and real people. The automated tools quickly catch obvious violations like spam and hate speech. But because these tools don’t understand nuance, human moderators step in to review flagged content and make more complex decisions. This combination is essential for keeping content appropriate while respecting the specific rules of each community.
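To make the split between automated tools and human reviewers concrete, here is a minimal sketch of a first-pass triage step. Everything in it is an assumption for illustration: the `Comment` and `Decision` types, the `triage` function, and the two word lists are hypothetical and not taken from any particular platform.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"


# Hypothetical rule lists; a real community would maintain its own.
OBVIOUS_SPAM_MARKERS = ("free gift cards", "click here to win")
AMBIGUOUS_TERMS = ("trash", "noob")


@dataclass
class Comment:
    author: str
    text: str


def triage(comment: Comment) -> Decision:
    """Automated first pass: remove the obvious, escalate the unclear."""
    lowered = comment.text.lower()
    # Obvious violations are handled automatically.
    if any(marker in lowered for marker in OBVIOUS_SPAM_MARKERS):
        return Decision.REMOVE
    # Words that could be banter or an insult go to a person.
    if any(term in lowered.split() for term in AMBIGUOUS_TERMS):
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE
```

The design choice worth noticing is the third outcome: anything the rules cannot judge with confidence is routed to a moderator rather than silently removed.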

Why Clear Guidelines are Your Best Friend

When you join a new Discord server, one of the first things you often look for is the rules channel. This is because clear guidelines are essential for a thriving community. Setting these rules lets everyone know what’s expected of them, preventing confusion and disagreements. A simple, easy-to-understand set of rules creates a respectful and safe space where people feel comfortable participating, knowing there are standards to keep conversations positive.

The Human Touch: The Role of Moderators

While helpful, automated tools aren’t a substitute for human moderators. People are essential because they can understand the context of conversations – things like sarcasm, jokes, and subtle meanings that algorithms miss. For example, a bot might mistakenly flag a comment with a certain word, but a human would recognize it was part of a normal discussion. This kind of judgment is what keeps online communities genuine. Moderators protect the community’s unique atmosphere by interacting with members and resolving disagreements. Ultimately, their involvement makes people feel valued and respected, rather than simply controlled by automated systems.

How to Handle Critical Feedback

Receiving harsh criticism can be really painful, like a sudden blow. But before you immediately get defensive, remember that not all feedback is the same. Being able to separate helpful advice from unhelpful negativity is a valuable skill for anyone creating things or managing online communities. It allows you to improve and shield yourself from unnecessary negativity. Let’s explore how to identify the difference and how to respond to the feedback you get.

The Different Flavors of Criticism

Let’s start by changing how we think about criticism. It’s common to feel attacked by negative comments, but often that’s not the intention. Instead, consider feedback as varied – some of it will be unhelpful, but some can be incredibly valuable for improving your content or community. Not all criticism is bad; it can actually be a hidden opportunity. Helpful feedback can reveal areas you hadn’t noticed, providing important insights to make your streams, videos, or community rules even better. The important thing is to learn how to identify the helpful feedback.

Constructive vs. Destructive: Know the Difference

Figuring out which feedback is actually helpful can be tough. Good feedback focuses on the work itself, not on you personally. Someone might say, “The sound was a little quiet at the beginning of the video,” which is something you can actually fix. “You’re a bad streamer,” on the other hand, is just mean and gives you nothing to work with. Destructive comments are usually personal attacks, hopelessly vague, or meant only to stir up trouble. Many creators simply delete anything intentionally offensive or rude. It’s far better to ignore trolls and look for the useful advice; there’s no point in arguing with people who are only trying to cause drama.

How Feedback Shapes Your Community

How you respond to feedback impacts everyone in your community. A negative and uncontrolled comment section can discourage new people from joining and exhaust your existing fans. Unaddressed criticism can create a harmful environment where people are afraid to participate. However, by responding to helpful feedback and removing negativity, you demonstrate that you value a positive and respectful space, which encourages better discussions and builds a community people want to be involved in.

The Ripple Effects of Deleting Comments

Deleting negative comments online might seem like a fast way to improve the atmosphere, but it can actually cause more trouble in the long run. While it cleans things up temporarily, it can damage trust with your audience and discourage them from participating. It’s important to consider the bigger picture and build a community that’s strong, genuine, and able to handle different viewpoints – not just one that appears perfectly positive.

Building (or Breaking) Community Trust

Strong communities are built on trust, but that trust is easily broken. If people notice critical comments being removed without a clear reason, they’ll naturally suspect you’re being dishonest. It creates the impression that you only want to showcase positive feedback. Studies show that hiding negative comments online can actually damage how people view your brand. Essentially, if your community feels like they aren’t getting the whole truth, they’ll stop believing in what you share. Being open and honest, even when it’s difficult, is the best way to build long-term loyalty.

How Removal Impacts User Engagement

It’s great to want a lively and engaging community, but removing comments can discourage open discussion. When people see that differing opinions aren’t welcome, they’re less likely to share what they truly think. This can make your community feel artificial and prevent meaningful interactions. Why would anyone bother offering constructive feedback if it’s just going to be deleted? Ultimately, this can lead to fewer people participating, as those seeking real conversation will go elsewhere. A strong community needs a variety of viewpoints, even critical ones, to flourish.

Are You Creating an Echo Chamber?

An echo chamber happens when a group only hears one perspective, and it can be really damaging. When you constantly block out criticism, you create a false sense of perfection. While avoiding negativity might seem good, it actually stops you from improving and learning. Declining to engage with the occasional negative comment can protect your brand, but shutting criticism down entirely isn’t sustainable. You lose out on important feedback that could make your work or community better, and you risk losing touch with what your audience really thinks.

Preparing for the Community’s Response

Deleting comments, particularly those with legitimate concerns, can cause upset. People notice when their opinions are removed, and it might look like you’re trying to control the conversation. It’s best to address serious criticism openly rather than ignore it. Even one ignored complaint can escalate into a larger problem if users feel they’ve been treated unfairly. Removing comments is fine when they clearly break the rules, for example by containing hate speech or spam. But generally, it’s better to leave criticism visible and respond to it with careful consideration.

How to Build a Fair Moderation System

A strong moderation system is essential for any thriving online community. Being fair doesn’t mean being too easy on people; it means applying rules consistently, openly, and in a way that everyone can understand. When users know what’s expected of them and believe those rules apply to everyone equally—whether they’re new or long-time members—they feel secure. This safety encourages participation, open discussion, and the building of relationships. Without fairness, moderation can seem random and biased, causing frustration and potentially losing valuable members who simply want to connect and share ideas.

Creating a genuinely fair system means moving beyond just what feels right. It needs a solid plan based on four important principles. First, your rules should be perfectly clear and easy to understand. Second, people should have a simple way to challenge decisions they disagree with. Third, your moderators need thorough training to deal with difficult situations fairly and consistently. And finally, you must actively work to eliminate personal biases. If you get these four things right, your online community – whether it’s about games like Diablo or Warzone, for example – will be a place where lively discussions can flourish, rather than descend into arguments.

Create Policies That Are Crystal Clear

Unclear rules make it impossible to moderate fairly. When your guidelines are open to different understandings, it creates conflict for both your moderators and your community. While rules like “be nice” or “no trolling” are a good starting point, they lack the detail needed to be truly effective. What exactly does “nice” mean, and how do you distinguish a passionate discussion from actual trolling? Your rules should clearly explain what behavior is acceptable and what isn’t, with specific examples.

It’s crucial to be open about how you uphold your rules. Don’t dismiss or conceal valid criticism without a good reason based on your policies, or people will likely accuse you of censorship. Aim to create community guidelines that are simple to locate, read, and comprehend.

Establish a Fair Appeal Process

Moderators are people, and like anyone, they sometimes make errors. A user could be mistakenly flagged, or a moderator might misunderstand something. That’s why a good appeals process is crucial. It lets users explain their side and demonstrates your commitment to fairness. A clear way to address concerns is vital for keeping the community’s trust, even when removing content to ensure everyone’s safety.

You don’t need a complex system for handling appeals. A simple form or email address where users can explain their concerns is often enough. Taking this small step demonstrates respect for your community and shows you’re committed to being accountable.

Train Your Moderators for Success

Being a great moderator is a real skill, not a role to hand to just anyone. Putting someone in charge of a community without the right training almost always ends badly. A good moderator doesn’t just know the rules; they understand how to talk to people, especially when things get heated. They’re more like leaders who guide the community than people who only point out what you can’t do.

Your team’s training should include both your community’s specific rules and generally accepted best practices. Giving them strong communication skills will help them navigate challenging conversations calmly and professionally. Regular, consistent training is essential to ensure all moderators handle situations similarly, creating a sense of fairness and predictability for everyone.

Keep Bias Out of the Equation

Hidden biases can slowly damage an online community. Moderators, like everyone else, have their own preferences when it comes to games, characters, or how to play. However, those personal feelings shouldn’t affect their decisions. When moderation feels based on personal opinion, people lose trust. It’s important to consider: is a comment being removed because it breaks a rule, or simply because it disagrees with an admin’s preferred way to play a game like Warzone?

To be fair, moderation decisions should always follow your established written rules, not personal opinions. A great way to reduce bias is to create a moderation team with diverse backgrounds and viewpoints. Regularly checking moderation actions can also help spot and fix any hidden biases before they become significant issues.

Your Game Plan for Managing Feedback

A well-defined plan for managing feedback is essential for a successful online community. It’s not about silencing people, but about directing conversations productively. Knowing how to sort through comments, how quickly to reply, and what kind of response to give allows you to confidently lead your community. This keeps the space positive and helpful, even during disagreements. Consider it a roadmap for creating a community where people enjoy participating. A clear plan also helps your moderation team respond consistently and fairly, fostering trust and encouraging more thoughtful discussions.

Smart Ways to Filter Comments

Managing comments isn’t about silencing different opinions; it’s about keeping the conversation healthy. We’ve all noticed when comments suddenly disappear, which can seem strange. However, ignoring problematic comments isn’t the solution either. Allowing hateful or irrelevant posts to stay up can ruin discussions and discourage participation. The key is to strike a balance. Actively removing comments that break the rules – such as those containing hate speech or personal insults – helps create a safe and welcoming environment. This isn’t about restricting free speech; it’s about ensuring the discussion remains valuable and respectful, showing your community that you prioritize quality interactions.

Set Standards for Response Times

It’s crucial to address inappropriate comments quickly. Even one negative remark can disrupt a discussion and harm your community’s image. If ignored, negativity can spread rapidly, making people feel unwelcome. That’s why it’s important to have guidelines for how fast you respond to issues. You don’t need to react instantly, but aiming to review flagged comments within a few hours helps prevent problems from escalating. A quick response demonstrates that you’re actively monitoring the community and dedicated to maintaining a positive environment.
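A response-time standard is easier to keep when something checks it for you. The sketch below assumes a four-hour review target and a simple `Flag` record; both are illustrative choices, not values taken from this guide beyond the “few hours” goal.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed target; adjust to whatever your community commits to.
REVIEW_TARGET = timedelta(hours=4)


@dataclass
class Flag:
    comment_id: int
    flagged_at: datetime


def overdue_flags(flags, now, target=REVIEW_TARGET):
    """Return flags that have waited longer than the target, oldest first."""
    late = [f for f in flags if now - f.flagged_at > target]
    return sorted(late, key=lambda f: f.flagged_at)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    queue = [
        Flag(1, now - timedelta(hours=1)),
        Flag(2, now - timedelta(hours=6)),
    ]
    for flag in overdue_flags(queue, now):
        print(f"Comment {flag.comment_id} is past the review target")
```

Running a check like this on a schedule gives moderators a short list of what needs attention first, instead of asking them to watch every thread constantly.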

Your Guide to Communicating with Users

The way you talk to your users matters just as much as what actions you take to moderate content. Before deleting a critical comment, pause and consider it. Does it offer any useful feedback, even if it’s presented negatively? Responding to thoughtful criticism, even when it’s not what you want to hear, can give you valuable insights and help your community improve. Ignoring or hiding legitimate concerns, however, can easily create problems and lead to accusations of censorship. Being open and honest is key. If you remove a comment, be ready to explain why it broke the rules, either publicly or in a private message. This demonstrates fairness and shows you’re making decisions based on clear principles, not just trying to suppress opposing views.

How to Keep Your Moderation Consistent

Fair moderation relies on consistency. If users believe rules are enforced randomly or unfairly, it damages the trust you’ve built with your community. Inconsistent moderation can make your platform seem unreliable and weaken how people view it. Keep in mind that all comments, both positive and negative, contribute to your community’s overall perception. To ensure fairness, your moderation guidelines should be easy to understand, and all moderators need to apply them in the same way. This creates a predictable environment where users know what’s expected and trust that everyone is treated equally.

The Moderator’s Toolkit

What makes a truly excellent moderator isn’t just having the right tools, but knowing how to use them. Your toolkit isn’t limited to software—it’s a combination of automation, your own good judgment, help from AI, and using data effectively. It’s similar to creating the perfect setup in a game like Warzone—each component has a specific job, and they’re most effective when used together. A prepared moderator can confidently manage everything from annoying spam bots to lively, and sometimes intense, community discussions.

The goal is a moderation system that works well and is also fair to everyone. Using automation to handle simple issues frees human moderators to concentrate on the more complex situations that really affect the community. Combining technology and human judgment lets you protect your members and encourage positive, open conversations. Let’s explore the key tools a modern moderator needs.

Using Automated Systems Wisely

Automated systems are a great first step in keeping your online spaces safe. They work around the clock to block spam, filter out offensive language, and identify content that breaks your rules. Using tools like keyword filters and auto-moderation bots can quickly remove unwanted comments, which is vital for building a positive and welcoming community. Letting negative comments sit unchecked can discourage people from participating and create a harmful environment.

Automation isn’t a cure-all. These systems don’t understand context and can make errors in both directions, flagging harmless conversations or missing harmful behavior. Over-reliance on automation can make interactions feel cold and frustrating. The best approach is to use it for obvious violations, allowing human moderators to focus on more nuanced and complex issues that require careful consideration.
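One common source of false positives is a keyword filter that matches inside longer, harmless words. The sketch below compares a naive substring check with a whole-word check built on Python’s standard `re` module. The blocked-word list is a placeholder, and the function names are made up for this example.

```python
import re

# Placeholder list; a real filter would use the community's own terms.
BLOCKED_WORDS = ["spam", "scam"]

# \b anchors the match to word boundaries, so "spamming" no longer
# triggers the filter for the blocked word "spam".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(word) for word in BLOCKED_WORDS) + r")\b",
    re.IGNORECASE,
)


def naive_match(text: str) -> bool:
    """Substring check: fast, but flags harmless words."""
    lowered = text.lower()
    return any(word in lowered for word in BLOCKED_WORDS)


def whole_word_match(text: str) -> bool:
    """Whole-word check: fewer false positives, still blind to context."""
    return _PATTERN.search(text) is not None
```

With these definitions, “Stop spamming grenades in Warzone” trips the naive check but not the whole-word one. Neither version understands intent, which is why unclear cases still belong with a human.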

When to Use a Manual Review

Automation is fast, but it can’t replace human understanding, especially when things aren’t clear-cut. Whenever a situation requires careful consideration – like dealing with strong criticism, resolving user conflicts, or understanding comments that aren’t obviously right or wrong – a human review is essential. For example, it’s hard for a computer to distinguish between constructive feedback about a new game update, like the recent Diablo 4 patch, and a personal attack on the developers. A person can easily make that distinction.

Dismissing or simply deleting honest criticism quickly erodes trust within your community and can lead to claims of censorship. Taking the time to review feedback manually shows your community you’re listening and appreciate their input, even if it’s negative. This is how moderators prove their value – by making careful, considered decisions that uphold community standards and demonstrate a commitment to fairness.

Integrating AI to Help Out

AI can be a powerful helper for your moderation team. Instead of simply searching for specific words, AI tools can weigh the overall tone, the surrounding conversation, and how users typically behave to identify potentially harmful content. This allows moderators to focus on the most concerning comments first. For instance, a well-tuned AI tool is often better than a basic filter at telling playful sarcasm from real aggression, although it will still get some cases wrong.

The key is to set up these AI systems just right. If an AI is too strict, it might block too much content, making your platform seem out of touch and harming your community. Instead of letting the AI decide everything on its own, think of it as a strong filter to help your human moderators. Combining AI with human oversight creates a faster, more reliable system that catches harmful content while still allowing important discussions to happen.
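One way to keep an AI tool in the “strong filter” role is to act on its score only at the extremes and send everything in between to a person. The sketch below assumes some classifier has already produced a score between 0 and 1 for each comment. The thresholds, the function names, and the score itself are hypothetical and would need tuning for a real community.

```python
def route_by_score(score: float, remove_at: float = 0.95,
                   review_at: float = 0.60) -> str:
    """Map a harm score in [0, 1] to an action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return "auto_remove"   # only near-certain violations
    if score >= review_at:
        return "human_review"  # the uncertain middle goes to a person
    return "publish"


def review_queue(scored_comments, remove_at=0.95, review_at=0.60):
    """Return (comment_id, score) pairs needing review, worst first."""
    pending = [
        (comment_id, score)
        for comment_id, score in scored_comments
        if route_by_score(score, remove_at, review_at) == "human_review"
    ]
    return sorted(pending, key=lambda pair: pair[1], reverse=True)
```

Lowering `remove_at` makes the system stricter and increases the risk of blocking too much, which is the failure described above. Raising it sends more work to moderators but keeps more decisions in human hands.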

Use Data to Make Better Decisions

The moderation work you do creates valuable information, so be sure to use it! By tracking things like how many comments are flagged, what rules people break most often, and the results of user appeals, you can learn a lot about how your community is doing. For example, if you see a sudden increase in flagged comments after a new game update, it might mean you need to focus more attention on those conversations.

Instead of just reacting to problems as they happen, this method lets you actively improve your community. By understanding the root causes and locations of conflicts, you can improve your rules, better train your moderators, and create content that prevents issues. This analysis helps you make smart choices that build a stronger, more welcoming community for gamers.
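The metrics mentioned above take very little code to compute. The sketch below assumes each moderation action is logged as a small record with the rule that was broken and the outcome of any appeal. The `ModAction` type and its field names are invented for this example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ModAction:
    rule: str                 # which rule the comment broke
    appealed: bool = False    # did the user appeal?
    overturned: bool = False  # was the decision reversed on appeal?


def most_broken_rules(actions, top=3):
    """Count actions per rule and return the most frequent ones."""
    return Counter(action.rule for action in actions).most_common(top)


def appeal_overturn_rate(actions):
    """Share of appealed decisions that were reversed (0.0 if none)."""
    appealed = [action for action in actions if action.appealed]
    if not appealed:
        return 0.0
    return sum(action.overturned for action in appealed) / len(appealed)
```

A rule that dominates the first list may need clearer wording, and a high overturn rate suggests that moderators need more training or that a guideline is being applied inconsistently.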

How to Create a Healthy Discussion Space

The key to a strong gaming community is creating a safe space where players can honestly share their opinions, both positive and negative. It’s not about eliminating criticism, but about managing conversations to keep them constructive and respectful. A good community allows disagreements without personal attacks, welcomes feedback without censorship, and makes everyone feel heard. This kind of environment doesn’t just happen on its own – it requires careful planning to balance open communication with clear rules, transforming potentially toxic comment sections into a place where people can truly connect.

Encourage Constructive Conversations

It’s important to remember that criticism isn’t always meant to be hurtful. Often, it’s a chance to learn and improve. Research shows that thoughtful feedback can actually help you grow and develop. The trick is to recognize constructive criticism and welcome it. When someone offers critical but well-considered comments, respond to them. Ask for more details, and thank them for sharing their thoughts. This shows others that you value honest opinions, not just compliments. By acknowledging helpful criticism, you encourage a community where feedback – even when it’s difficult – is seen as a valuable tool for everyone’s progress.

Build Lasting Community Trust

Your community is intelligent and will notice if you’re being dishonest. Simply deleting negative comments doesn’t create a genuinely positive environment – it creates one that’s easily broken. In fact, studies show that suppressing criticism actually damages trust. It’s better to be open about problems and address concerns directly, as this demonstrates confidence and respect for your audience. When you make an error, admit it. If someone points out a legitimate issue with a game update or content, acknowledge it. This kind of honesty builds strong trust, which is far more valuable than a flawless but artificial online appearance. It shows you value your community as collaborators, not just statistics.

Protect Your Users’ Privacy

The most important thing for a thriving online community is safety. As a moderator, your primary role is to shield your members from harassment, the sharing of private information, and other harmful actions. Unchecked negativity can quickly damage a brand’s reputation and have lasting consequences. Therefore, it’s crucial to have a strict policy against sharing personal details, making threats, or targeting individuals for harassment. Remove inappropriate content and ban offenders promptly. Your community needs to feel secure and know you’ll protect them, allowing them to freely share their love of gaming without fear for their safety.

Maintain High-Quality Content

The point isn’t to avoid all criticism, but to keep conversations productive and worthwhile. Don’t ignore or remove legitimate concerns – that can look like censorship. Instead, address valid points openly and honestly. Of course, you also need to remove disruptive content like spam, irrelevant posts, and hateful comments. Think of it like gardening: you remove the weeds, but you also nurture the healthy plants so they can thrive. This allows for meaningful discussions, like the reactions to the new Diablo 4 trailer, to really develop.

What’s Next for Comment Moderation?

Online communities are constantly evolving, so how we handle conversations needs to evolve too. Simply deleting comments or banning people isn’t working as well anymore. As communities become more complex, we need more sophisticated ways to encourage healthy and productive discussions. Instead of focusing on strict rules and punishments, we should focus on creating better spaces for people to connect. This requires exploring new technologies, redefining roles within communities, and developing flexible strategies that can adapt to changing needs. Let’s take a look at what the future holds for managing online conversations.

The Tech on the Horizon

Artificial intelligence and other new technologies are about to significantly change how online content is managed. However, it’s not enough to simply remove unwanted posts more quickly. The biggest challenge is building tools that can understand the meaning behind online conversations, including subtleties and context. Studies have shown that blocking negative reviews can actually harm a brand’s reputation. Instead of just filtering out negativity, future technology should encourage more honest communication. Think of AI that can recognize helpful feedback even within an angry message, or suggest ways to calm down a difficult discussion. The focus is moving away from simply censoring content and toward creating tools that promote real and meaningful interactions.

Can Communities Moderate Themselves?

Ideally, communities would police themselves effectively. While many do a good job establishing their own standards, relying solely on users for moderation can be difficult. When feedback becomes personal, people often react with emotion, which can escalate conflict instead of solving it. Research shows that feeling attacked can make people withdraw, hindering the community’s ability to improve. Self-moderation isn’t necessarily a bad idea, but it often benefits from some oversight. A balanced approach—where community members can report problems and trained moderators handle more sensitive issues—usually works best.

Creating Smarter, Adaptive Strategies

A strong community isn’t about everyone having the same opinions; it’s about people being able to disagree with each other politely. Because of this, your approach to managing online discussions needs to be thoughtful and adaptable. Helpful criticism can offer valuable ideas for improvement, but unchecked negativity can hurt your reputation. Effective moderation in the future will involve systems that can distinguish between disruptive troublemakers and passionate users offering constructive feedback. This requires moving beyond simply allowing or deleting comments and creating a system that fosters productive conversations while shielding the community from harmful behavior.

Frequently Asked Questions

Why shouldn’t I just delete negative comments?

Deleting negative comments might seem like a simple way to maintain a positive atmosphere, but it can actually be harmful. When people notice that only positive feedback is visible, they start to distrust you and the community. It also stops people from sharing helpful criticism that could lead to improvements. A truly healthy online space isn’t about avoiding disagreement; it’s about creating an environment where people feel comfortable having open and honest conversations.

How can I tell constructive criticism from trolling?

It can be tough to know if a comment is helpful advice or just someone trying to cause trouble. Useful criticism usually points out specific things about your content or community, for example, “The audio was difficult to understand in that part.” Harmful comments, or trolling, are often general insults that don’t offer any real suggestions, like “This is boring.” Pay attention to feedback that gives you something you can actually do to improve, and don’t hesitate to delete comments that are simply meant to be hurtful.

What’s the first moderation step for a new community?

If you’re launching a new community, the most important first step for moderation is to create very clear guidelines. Avoid general rules like “be nice” because they can be confusing. Instead, give specific examples of acceptable and unacceptable behavior. This ensures everyone understands the rules from the start, making moderation easier and fairer.

What should happen when a moderator makes a mistake?

It’s natural for moderators to occasionally make errors. When that happens, the best approach is to have a straightforward appeal process. This allows users to request a review if they disagree with a moderation decision. Having a system for appeals demonstrates to your community that you prioritize fairness and accountability, which helps build trust.

Can I just let bots handle all the moderation?

Bots are great for catching blatant spam and blocked words, but they’re not a complete solution. They often miss the point: they don’t get sarcasm, context, or the moment when a conversation gets complicated. It’s best to use bots for the easy stuff, like filtering out obvious trouble, and let human moderators handle the tricky situations that need judgment and understanding. The two work best together.

2025-10-29 16:10