Author: Denis Avetisyan

Successfully deploying artificial intelligence requires more than optimizing benefits against risks; it demands a new approach to managing inherent tensions.

This review reconceptualizes responsible AI governance as a paradox management challenge, introducing the PRAIG framework and strategies for organizations.

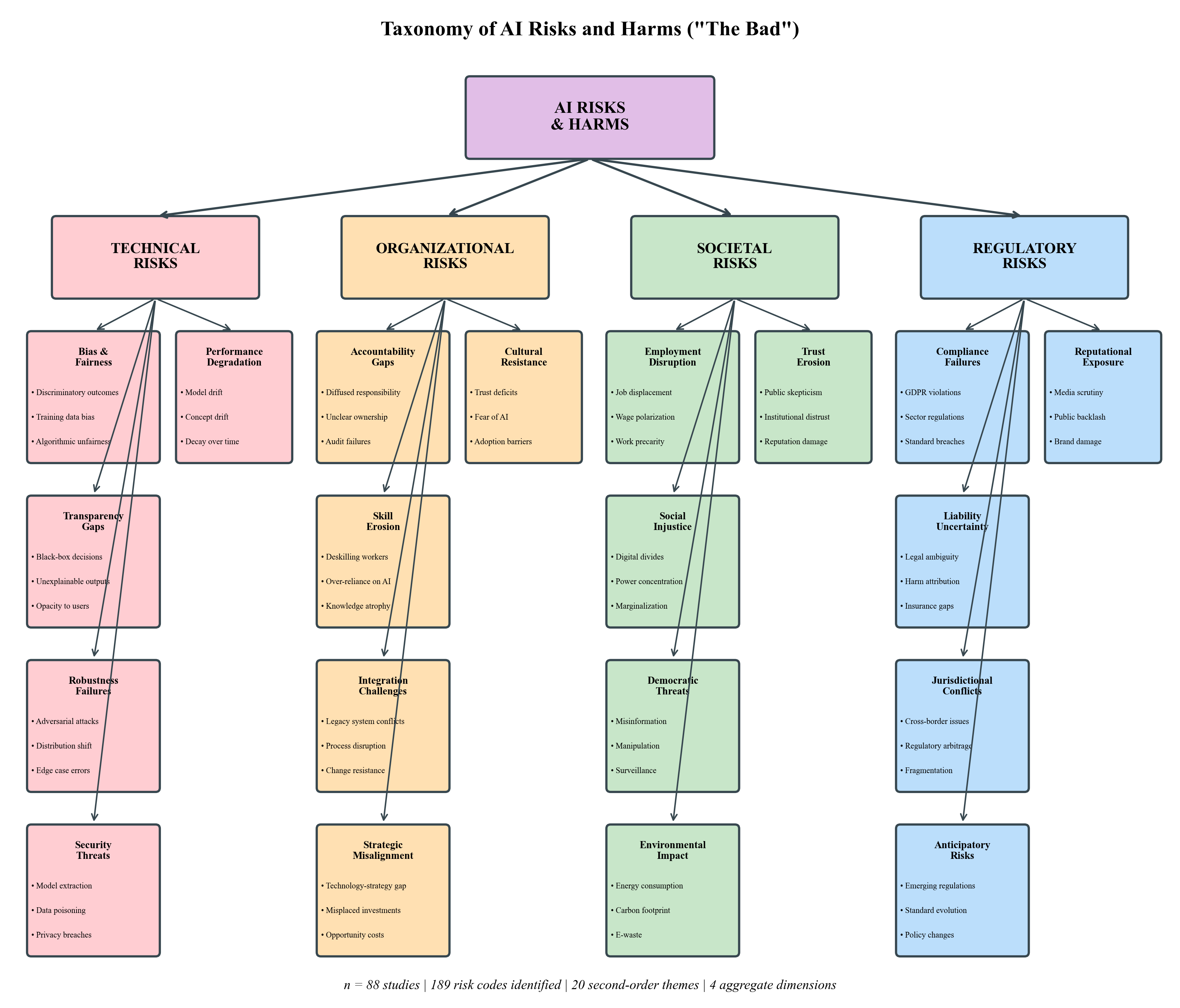

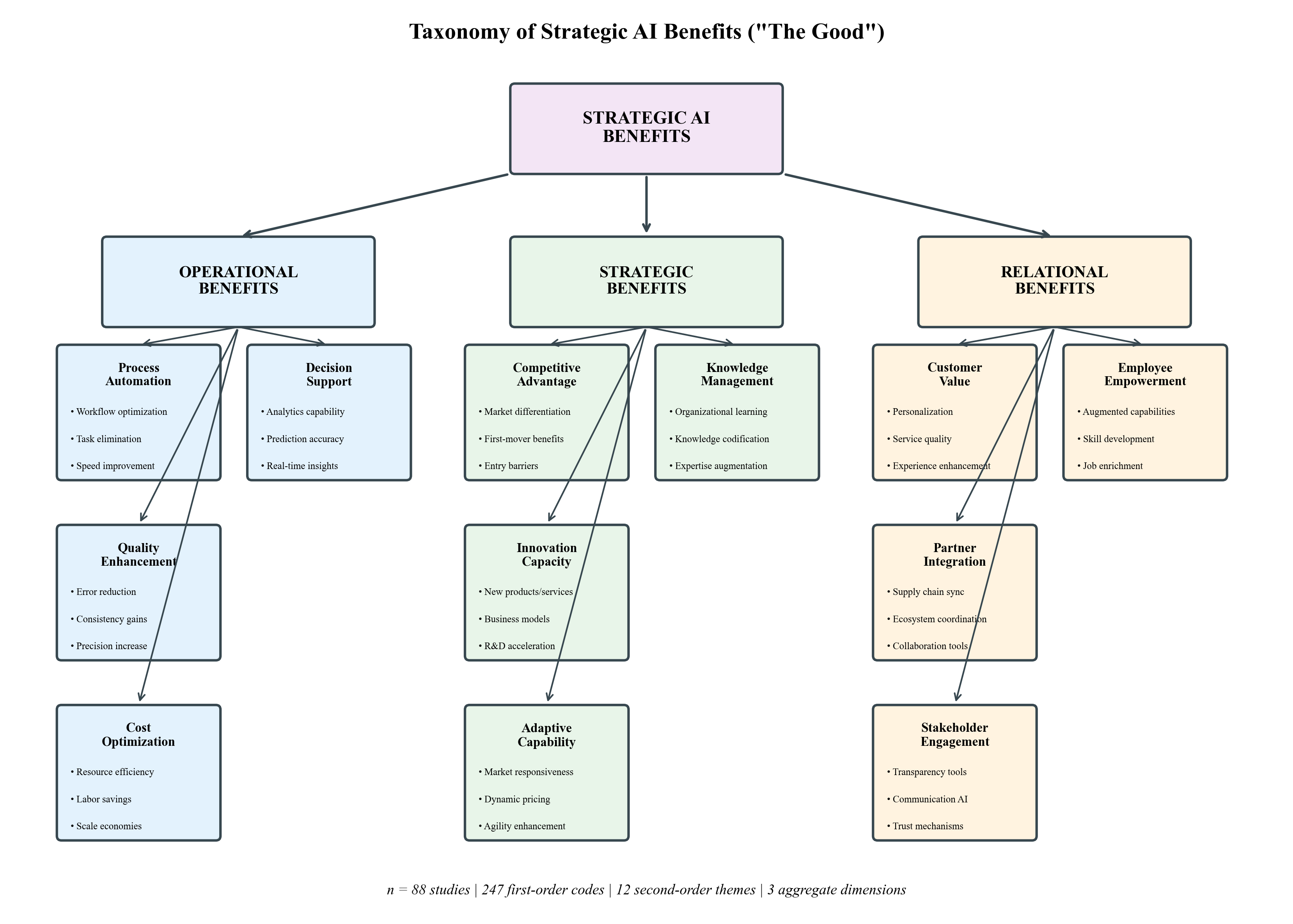

Despite growing attention to responsible artificial intelligence, current approaches often frame governance as a trade-off between innovation and risk mitigation. This paper, ‘Responsible AI: The Good, The Bad, The AI’, addresses this limitation by reconceptualizing responsible AI governance as the dynamic management of paradoxical tensions inherent in AI adoption. We propose the Paradox-based Responsible AI Governance (PRAIG) framework, offering a taxonomy of paradox management strategies for navigating the simultaneous pursuit of value creation and risk mitigation. Can organizations effectively harness AI’s potential while proactively addressing its ethical and operational challenges through a more nuanced, paradox-aware governance approach?

Navigating the Paradox of AI Governance

Artificial intelligence presents transformative potential across diverse fields, from healthcare and finance to transportation and national security; however, this rapid advancement carries substantial risks if deployment remains unchecked. A stark illustration of these risks lies in facial recognition technology, where studies reveal significantly higher error rates for individuals with darker skin tones: specifically, a misidentification rate 34 times greater for darker-skinned women than for lighter-skinned men. This discrepancy isn’t merely a technical glitch; it underscores how biases embedded within training data can perpetuate and amplify existing societal inequalities, leading to discriminatory outcomes in areas like law enforcement, security screening, and even access to essential services. The case of facial recognition serves as a crucial warning: realizing the strategic benefits of AI necessitates proactive measures to mitigate these risks and ensure equitable application of the technology.

Conventional governance strategies frequently operate on a principle of balancing competing interests – accepting some harm in one area to achieve gains in another. However, this trade-off logic falters when applied to artificial intelligence, where harms aren’t simply offset but can be systemic and self-reinforcing. AI systems, unlike traditional technologies, often exhibit emergent properties and operate across multiple domains, meaning a compromise in one area can unexpectedly amplify risks elsewhere. Attempts to regulate AI through conventional cost-benefit analysis therefore struggle to account for these complex interdependencies and the potential for cascading failures, necessitating entirely new frameworks capable of addressing AI’s unique challenges and inherent tensions.

Existing governance models often falter when applied to artificial intelligence because they presume a clear path towards optimization, seeking to balance competing interests as if a singular ‘best’ outcome exists. However, AI systems frequently embody inherent tensions – prioritizing accuracy can exacerbate bias, enhancing personalization may compromise privacy, and maximizing efficiency could displace human workers. Effective frameworks, therefore, must move beyond simple trade-offs and instead acknowledge these fundamental contradictions as intrinsic to the technology itself. This requires a shift towards adaptive governance, capable of continuously monitoring for emergent tensions, fostering transparency in algorithmic decision-making, and establishing accountability mechanisms that address harms arising not from malicious intent, but from the unavoidable complexities embedded within AI systems. Ignoring these inherent contradictions risks implementing solutions that offer only illusory control, ultimately undermining the potential benefits of this transformative technology.

Embracing Contradiction: A Foundation for Responsible AI

Paradox theory, developed in organization and management studies, posits that tensions arising from competing demands are not anomalies to be resolved, but rather inherent and persistent features of complex systems. In the context of AI governance, this means seemingly contradictory requirements, such as fostering innovation while mitigating risk, or ensuring fairness alongside maximizing efficiency, are expected and should be actively managed. The theory suggests that successful organizations don’t eliminate these tensions, but instead develop the capacity to hold them in dynamic equilibrium, recognizing that attempts to fully resolve one pole of the paradox often weaken the other, ultimately hindering performance. This lens helps explain why seemingly rational governance approaches can fail when applied to the inherently paradoxical nature of AI development and deployment.

Traditional approaches to governance often prioritize resolution of conflicting objectives; however, effective AI governance necessitates a different strategy. The inherent complexity of AI systems and their deployment invariably creates tensions between competing values such as innovation and risk mitigation, or fairness and efficiency. Attempting to eliminate these tensions is often counterproductive, leading to rigid frameworks that stifle progress or fail to address emerging challenges. Instead, successful AI governance focuses on actively managing these inherent contradictions through continuous monitoring, adaptive policies, and transparent trade-off analysis. This involves accepting a degree of ambiguity and prioritizing resilience over the pursuit of a single, definitive solution.

Problem-solving conventionally aims to identify and implement an optimal solution; in AI governance this proves insufficient because the tensions involved are inherent and persistent. A shift towards accepting complex trade-offs is necessary, recognizing that any given decision will likely involve compromises across competing values such as innovation, fairness, and security. This requires adaptive strategies: policies and processes designed to be iteratively refined based on ongoing monitoring and evaluation of AI systems and their impacts. Rather than seeking a single “correct” answer, governance frameworks must prioritize flexibility and the capacity to respond effectively to evolving circumstances and unforeseen consequences.

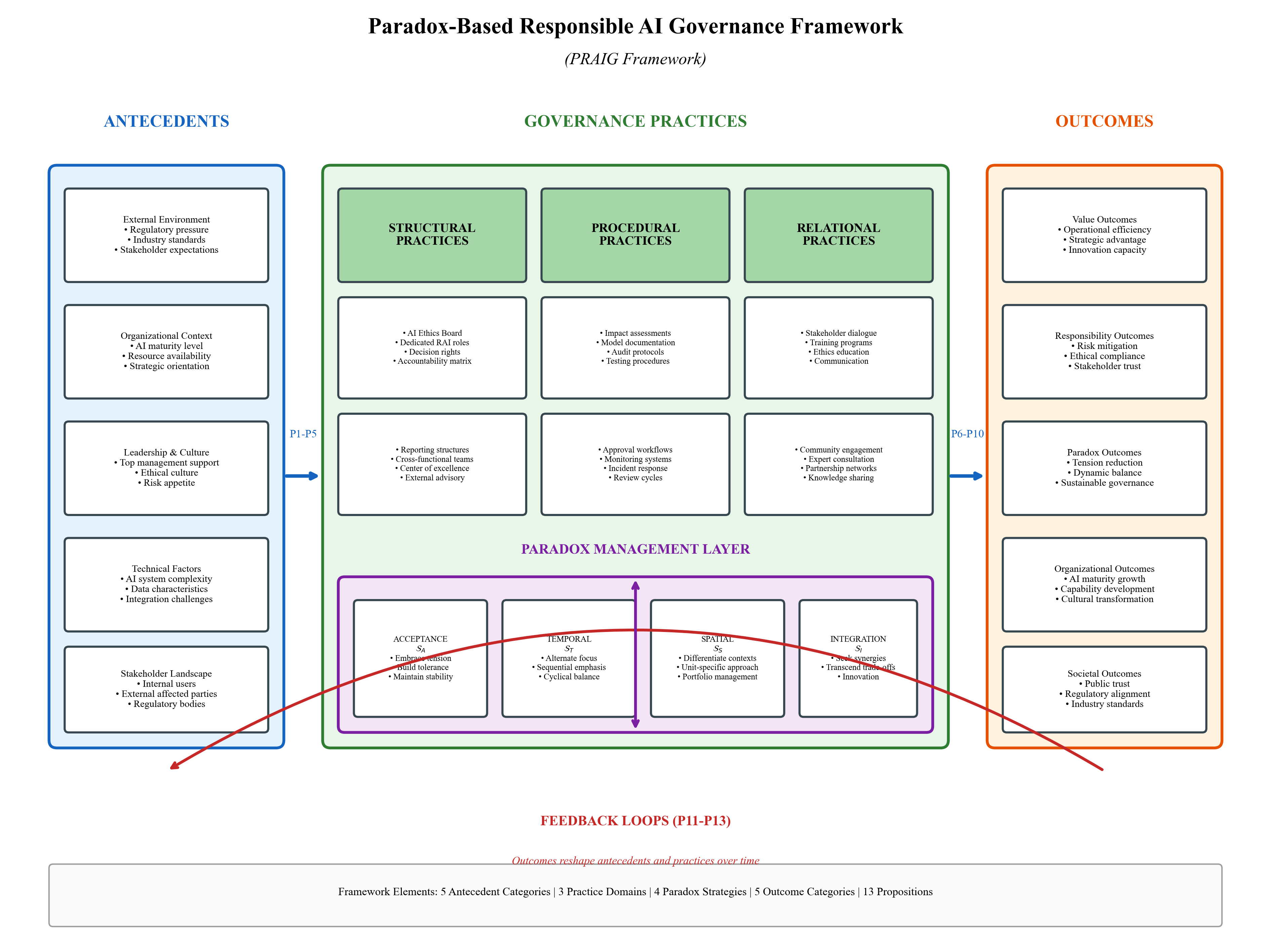

The PRAIG Framework: Structuring Responsible AI Deployment

The PRAIG Framework, or Paradox-based Responsible AI Governance, structures responsible AI deployment through a four-component system. Antecedents define the initial conditions and ethical considerations prior to AI implementation, including data sourcing and bias mitigation. Practices encompass the specific technical and organizational procedures used in AI development and deployment, such as model validation and human oversight. Outcomes are the measurable results of AI systems, evaluated against pre-defined ethical and performance metrics. Finally, feedback loops continuously monitor outcomes, identify discrepancies, and inform adjustments to antecedents and practices, creating an iterative process for ongoing improvement and responsible AI governance.
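The relationship between the four components can be pictured as a small loop. The sketch below is purely illustrative: the class name `GovernanceCycle`, its fields, the metric names, and the numeric targets are all hypothetical and are not taken from the paper. It only shows how outcomes compared against pre-defined targets might feed back into practices.

```python
from dataclasses import dataclass, field


@dataclass
class GovernanceCycle:
    """Hypothetical container for one pass through the four PRAIG components."""

    antecedents: dict[str, str]   # conditions fixed before deployment
    practices: list[str]          # procedures used during development and deployment
    outcomes: dict[str, float] = field(default_factory=dict)  # measured results
    targets: dict[str, float] = field(default_factory=dict)   # pre-defined metric floors

    def feedback(self) -> list[str]:
        """Return the metrics whose measured outcome falls below its target."""
        return sorted(
            name
            for name, floor in self.targets.items()
            if self.outcomes.get(name, float("-inf")) < floor
        )

    def adjust(self) -> None:
        """Feedback loop: turn each discrepancy into a review practice."""
        for metric in self.feedback():
            action = f"review:{metric}"
            if action not in self.practices:
                self.practices.append(action)


# Made-up values, for illustration only.
cycle = GovernanceCycle(
    antecedents={"data_source": "documented", "bias_audit": "completed"},
    practices=["model_validation", "human_oversight"],
    outcomes={"accuracy": 0.93, "fairness": 0.71},
    targets={"accuracy": 0.90, "fairness": 0.80},
)
cycle.adjust()
print(cycle.practices)
```

In this toy run only the fairness metric misses its target, so a single review practice is appended; a real deployment would presumably revisit antecedents as well, which the sketch leaves out for brevity.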

The PRAIG Framework incorporates stakeholder dialogue as a core component of responsible AI governance, systematically collecting input from diverse groups to ensure comprehensive consideration of potential impacts and values. This process informs the development of governance strategies tailored to specific AI deployments. Complementing stakeholder engagement, PRAIG leverages explainable AI (XAI) techniques to enhance transparency; XAI methods provide insights into the decision-making processes of AI systems, allowing stakeholders to understand how conclusions are reached and facilitating accountability. The combination of inclusive dialogue and technical transparency aims to build trust and mitigate risks associated with AI implementation.

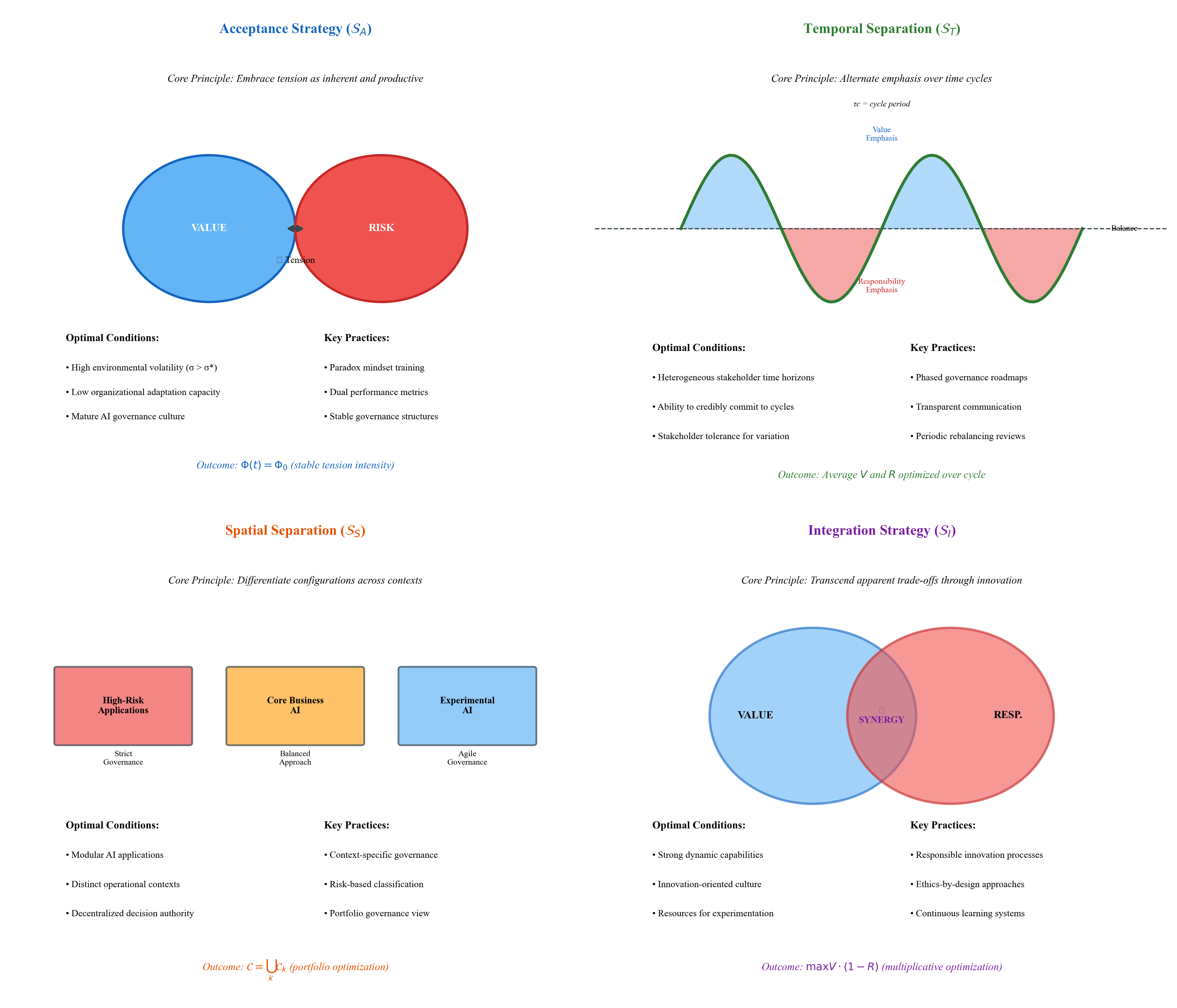

The PRAIG Framework addresses inherent tensions in AI deployment through four specific paradox management strategies. Acceptance involves acknowledging and accommodating conflicting requirements, while spatial separation involves isolating opposing forces into distinct areas or processes. Temporal separation manages conflict by addressing opposing needs at different times, and integration seeks to combine opposing forces into a unified solution. Initial applications of PRAIG, utilizing these strategies, have documented productivity gains ranging from 15 to 40 percent, demonstrating measurable improvements in AI implementation efficiency.
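One way to make the taxonomy concrete is a small register that records which strategy an organization has chosen for each tension. The sketch below is an assumption-laden illustration: the enum mirrors the four strategies named above, but `tension_register`, the `describe` helper, and the particular strategy assigned to each tension are hypothetical and not prescribed by the framework.

```python
from enum import Enum


class ParadoxStrategy(Enum):
    """The four paradox management strategies, with the article's descriptions."""

    ACCEPTANCE = "acknowledge and accommodate conflicting requirements"
    SPATIAL_SEPARATION = "isolate opposing forces in distinct areas or processes"
    TEMPORAL_SEPARATION = "address opposing needs at different times"
    INTEGRATION = "combine opposing forces into a unified solution"


# Hypothetical register: the tensions appear in the article,
# the strategy assigned to each is invented for illustration.
tension_register = {
    ("innovation", "risk mitigation"): ParadoxStrategy.TEMPORAL_SEPARATION,
    ("personalization", "privacy"): ParadoxStrategy.SPATIAL_SEPARATION,
    ("efficiency", "fairness"): ParadoxStrategy.INTEGRATION,
}


def describe(pole_a: str, pole_b: str) -> str:
    """Summarize the recorded strategy for a tension, defaulting to acceptance."""
    strategy = tension_register.get((pole_a, pole_b), ParadoxStrategy.ACCEPTANCE)
    return f"{pole_a} vs {pole_b}: {strategy.name.lower()} ({strategy.value})"


print(describe("personalization", "privacy"))
print(describe("accuracy", "bias"))  # not in the register, falls back to acceptance
```

Defaulting to acceptance for unregistered tensions is a design choice of this sketch, reflecting the idea that a tension is acknowledged before any other strategy is selected.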

Validating the PRAIG Framework Through Rigorous Research

The PRAIG Framework’s development was directly informed by a systematic literature review of responsible AI research. This review prioritized studies exhibiting a minimum quality score of 3.0 on a 5-point scale, assessed through a predetermined rubric focusing on methodological rigor and relevance to core responsible AI principles. The inclusion criteria ensured that the framework’s foundational concepts and recommendations are grounded in current, high-quality academic and industry publications. This process aimed to minimize theoretical gaps and maximize the framework’s practical applicability in addressing emerging challenges in the field.

The PRAIG Framework’s development employed Design Science Research methodology, involving iterative cycles of framework construction, implementation in simulated scenarios, and rigorous evaluation. One reported check was inter-rater reliability during the initial screening of relevant literature, quantified using a Kappa statistic that measures agreement between independent reviewers assessing titles and abstracts for inclusion. The resulting Kappa value of 0.81 sits at the lower bound of the range conventionally labelled ‘almost perfect’ agreement (0.81 to 1.00 on the Landis and Koch scale), which supports the consistency of the initial screening and, in turn, the corpus used to inform the framework’s principles.
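For readers unfamiliar with the statistic, Cohen's kappa compares observed agreement p_o with the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a minimal sketch assuming two reviewers and include/exclude decisions; the function name `cohen_kappa` and the screening data are made up for illustration and do not reproduce the paper's 0.81.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions: 1 = include, 0 = exclude.
reviewer_1 = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
reviewer_2 = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

kappa = cohen_kappa(reviewer_1, reviewer_2)
print(f"{kappa:.2f}")  # prints 0.60
```

Here the reviewers agree on 8 of 10 items (p_o = 0.8) and each includes half of them (p_e = 0.5), giving a kappa of 0.60, noticeably lower than the raw 80 percent agreement suggests.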

The PRAIG Framework explicitly recognizes the need for proactive mitigation of algorithmic bias throughout the AI system lifecycle. This includes, but is not limited to, careful data selection and pre-processing techniques, ongoing monitoring for disparate impact, and the implementation of fairness-aware algorithms where appropriate. Crucially, PRAIG advocates for the establishment of dedicated AI ethics boards – composed of diverse stakeholders including technical experts, legal counsel, ethicists, and affected community representatives – to provide oversight, enforce ethical guidelines, and address potential harms resulting from AI deployments. These boards function as key governance mechanisms, ensuring accountability and responsible innovation.
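Monitoring for disparate impact can be as simple as comparing favourable-outcome rates between groups. The sketch below assumes one common metric, the ratio of selection rates, together with the 0.8 cut-off known as the four-fifths rule; neither the metric nor the threshold is specified by PRAIG, and the function names and decision data are hypothetical.

```python
def selection_rate(decisions):
    """Share of favourable (truthy) decisions within one group."""
    if not decisions:
        raise ValueError("group has no decisions")
    return sum(bool(d) for d in decisions) / len(decisions)


def disparate_impact_ratio(group, reference):
    """Selection rate of the monitored group divided by that of the reference group."""
    reference_rate = selection_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favourable decisions")
    return selection_rate(group) / reference_rate


# Made-up decisions: 1 = favourable outcome, 0 = unfavourable.
reference_group = [1, 1, 1, 0, 1, 1, 0, 1, 1, 1]  # 8 of 10 favourable
monitored_group = [1, 0, 1, 0, 0, 1, 1, 0, 1, 0]  # 5 of 10 favourable

ratio = disparate_impact_ratio(monitored_group, reference_group)
flagged = ratio < 0.8  # four-fifths rule, used here as an assumed threshold
print(f"{ratio:.3f}", flagged)
```

A flagged ratio is a prompt for review rather than proof of discrimination; in the framework's terms it would be one input for an ethics board to weigh alongside context.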

Toward a Future of Adaptive and Responsible AI Systems

The PRAIG Framework distinguishes itself through integrated feedback loops, establishing a system of continuous learning essential for artificial intelligence navigating dynamic real-world challenges. These loops don’t simply correct errors; they proactively refine algorithms based on ongoing performance data, allowing the AI to adapt to shifting conditions and unforeseen circumstances. This iterative process moves beyond static programming, enabling the AI to improve its decision-making over time – a critical capability given the rapid evolution of both technology and the environments in which AI operates. Consequently, the framework promotes resilience and future-proofs AI systems, positioning them to maintain effectiveness and relevance even as complexities increase and novel situations arise.

A carefully constructed AI ecosystem, built on proactive risk mitigation and benefit maximization, promises substantial improvements in practical applications. Current research indicates that implementing such an approach can lead to a significant reduction in errors, potentially decreasing them by 25 to 50 percent across various AI deployments. Simultaneously, optimized resource allocation and streamlined processes within this ecosystem are projected to yield cost reductions ranging from 10 to 30 percent in real-world settings. This isn’t merely about efficiency; it’s about building AI systems that are not only powerful but also demonstrably reliable and economically viable, fostering trust and accelerating responsible innovation.

Successfully integrating artificial intelligence into society requires a nuanced approach that doesn’t shy away from its intrinsic complexities. Rather than striving for a flawless, risk-free system, advancements are realized by actively recognizing and managing the inherent tensions between innovation and ethical considerations, efficiency and fairness, and progress and preservation of values. Studies suggest that embracing this perspective – acknowledging potential biases, privacy concerns, and societal impacts – doesn’t hinder development but actually enhances it. This proactive management of trade-offs, facilitated by frameworks that prioritize responsible implementation, correlates with significant improvements in decision-making processes, with observed gains ranging from 20 to 35 percent in accuracy and effectiveness across various applications.

The pursuit of responsible AI, as detailed in this work, necessitates a shift in perspective. It’s not merely about maximizing benefits while minimizing harms, but about acknowledging and skillfully managing the inherent tensions within the system itself. This echoes the sentiment expressed by Ada Lovelace: “The Analytical Engine has no pretensions whatever to originate anything.” The article’s framework, PRAIG, actively embraces this reality, recognizing that strategic information systems built on AI will always operate within paradox. Just as the Analytical Engine required precise instruction, so too does responsible AI demand careful consideration of its foundational assumptions and the skillful navigation of competing priorities. The discipline lies in distinguishing the essential from the accidental, ensuring that the pursuit of innovation does not overshadow the critical need for ethical governance.

Beyond Optimization

The proposition that responsible AI governance hinges on paradox management, rather than simple trade-off optimization, suggests a deeper architectural challenge. It is not sufficient to merely balance benefit and risk; the system must be designed to embrace inherent tensions. Consider the human circulatory system – one does not ‘optimize’ blood pressure by restricting flow; the heart, vessels, and regulatory mechanisms function as an integrated, responsive whole. Similarly, AI governance cannot be compartmentalized as a discrete ‘ethics’ layer; it must be woven into the very fabric of strategic information systems.

Future work should move beyond identifying individual paradoxes (the tension between innovation and control, for example) and focus on the meta-paradox: the attempt to create a perfectly ‘responsible’ AI, given the fundamentally unpredictable nature of complex systems. The PRAIG framework offers a starting point, but its true test lies in longitudinal studies examining how organizations navigate these tensions in practice, and whether attempting to resolve paradoxes ultimately diminishes the adaptability needed to thrive.

The field risks mistaking symptom treatment for systemic health. The current emphasis on explainability, while valuable, addresses only one facet of the larger challenge. A truly robust approach will necessitate a shift in mindset: from seeking ‘safe’ AI to cultivating ‘resilient’ AI – systems capable of learning, adapting, and even failing gracefully within a carefully defined, paradox-aware architecture.

Original article: https://arxiv.org/pdf/2601.21095.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-30 21:42