Author: Denis Avetisyan

As AI systems gain more autonomy, ensuring their consistent performance in unpredictable real-world scenarios is paramount.

This review proposes a two-layered approach to reliability monitoring, combining out-of-distribution detection with AI transparency for safer, more adaptable agentic systems.

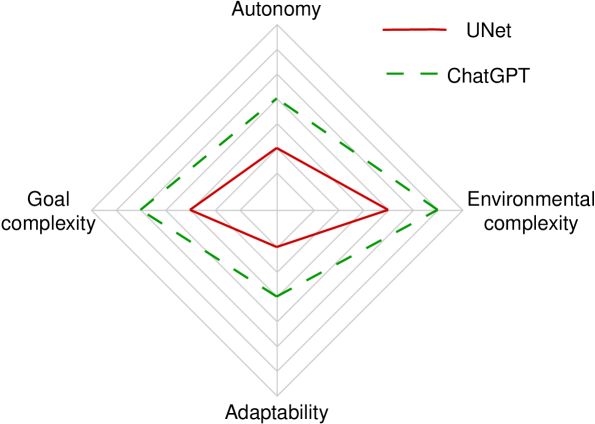

Despite the promise of increased autonomy and utility, agentic AI systems present unique reliability challenges, particularly in high-risk domains where unexpected behavior is unacceptable. This paper, ‘Perspectives on a Reliability Monitoring Framework for Agentic AI Systems’, addresses this critical need by proposing a novel two-layered monitoring approach combining out-of-distribution detection with AI transparency. This framework empowers human operators to assess system trustworthiness and intervene when necessary, providing crucial decision support for uncertain outputs. Will this approach lay a foundation for truly reliable and adaptable agentic AI, capable of operating safely in complex, real-world scenarios?

The Fragility of Operational Reliability

Both traditional and agentic AI systems face a critical challenge in real-world deployment: operational reliability. The problem surfaces after training, as performance degrades under circumstances the training data did not anticipate. The core issue is not capability but the failure to maintain consistent behavior outside curated datasets. Real-world inputs are rarely static: environments are unpredictable and routinely produce out-of-distribution (OOD) data. Agentic systems are particularly vulnerable because they must navigate these complexities autonomously. A robust system needs to capture underlying principles, not merely statistical correlations. Much as an unforeseen variable can unbalance an equation, unforeseen inputs can destabilize an intelligent system, and its reliability hinges on how it handles the unpredictable.

Proactive Monitoring: A Two-Layered Defense

The Two-Layered Reliability Monitoring Framework addresses the operational reliability challenge by combining OOD detection and AI transparency. This framework mitigates risks associated with deploying machine learning models in dynamic environments where input data may deviate from the training distribution. OOD detection serves as a first line of defense, identifying potentially problematic inputs and triggering safety mechanisms like fallback models or human intervention. However, OOD detection isn’t perfect, often generating false positives. Consequently, a secondary layer leveraging AI transparency is crucial to confirm or refute the detector’s assessment, providing a more reliable evaluation.
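To make the two layers concrete, here is a minimal Python sketch under stated assumptions. Layer 1 uses the maximum softmax probability (MSP) baseline, one common OOD score that the paper does not necessarily use. Layer 2 is represented by a hypothetical `confirms_anomaly` callback standing in for whatever transparency check a deployment provides. The names `two_layer_monitor` and `confirms_anomaly`, and the 0.5 threshold, are illustrative assumptions, not the framework's actual interface.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence

Vector = Sequence[float]


def softmax(logits: Vector) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def msp_ood_score(logits: Vector) -> float:
    """Layer 1 score: 1 - max softmax probability (higher = less familiar input)."""
    return 1.0 - max(softmax(logits))


@dataclass
class Decision:
    action: str  # "accept", or "escalate" to a fallback model / human operator
    ood_score: float
    reason: str


def two_layer_monitor(
    x: Vector,
    model: Callable[[Vector], List[float]],
    confirms_anomaly: Callable[[Vector], bool],
    threshold: float = 0.5,
) -> Decision:
    """Hypothetical two-layer monitor; names and threshold are illustrative.

    Layer 1 flags x when its OOD score exceeds `threshold`.
    Layer 2 (`confirms_anomaly`) runs only on flagged inputs and returns True
    when the model's explanation confirms the input is genuinely anomalous.
    """
    score = msp_ood_score(model(x))
    if score <= threshold:
        return Decision("accept", score, "not flagged by layer 1")
    if confirms_anomaly(x):
        return Decision("escalate", score, "layer-1 flag confirmed by layer 2")
    return Decision("accept", score, "layer-1 flag refuted by layer 2 (likely false positive)")


if __name__ == "__main__":
    def toy_model(x: Vector) -> List[float]:
        # Hypothetical classifier: confident when x[0] is large, uniform when x[0] == 0.
        return [3.0 * x[0], 0.0, 0.0]

    def always_confirm(x: Vector) -> bool:
        # Stand-in for a real transparency check (e.g. inspecting a saliency map).
        return True

    print(two_layer_monitor([2.0], toy_model, always_confirm).action)  # accept
    print(two_layer_monitor([0.0], toy_model, always_confirm).action)  # escalate
```

Routing a refuted flag back to "accept" reflects the idea that the second layer can overrule layer-1 false positives; a more conservative deployment might instead log such cases for later operator review.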

Human Oversight: Validating Automated Reliability

OOD detection methods are vital for AI reliability, yet prone to false positives. A human-in-the-loop process provides the necessary validation, letting operators review each automated alert and confirm or reject it. AI transparency is what makes this intervention effective: clear explanations of the model’s reasoning, such as saliency maps, show operators why an input was flagged, which fosters trust and reduces cognitive load. Combining human judgment with automated monitoring in this way is intended to improve overall reliability and safety in real-world applications.
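Saliency maps for neural networks are usually computed from gradients, but occlusion gives a simple, model-agnostic illustration of the same idea: measure how much a score changes when each input feature is removed. The sketch below assumes a hypothetical scalar `score_fn` (for example, the layer-1 OOD score or a class logit) and a replacement `baseline` of 0; both are illustrative choices rather than anything prescribed by the paper.

```python
from typing import Callable, List, Sequence


def occlusion_saliency(
    x: Sequence[float],
    score_fn: Callable[[Sequence[float]], float],
    baseline: float = 0.0,
) -> List[float]:
    """Per-feature attribution by occlusion (one simple saliency method; illustrative).

    saliency[i] = score_fn(x) - score_fn(x with feature i replaced by `baseline`),
    i.e. how much the score drops when feature i is "removed".
    """
    full_score = score_fn(x)
    saliency = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        saliency.append(full_score - score_fn(occluded))
    return saliency


def top_features(saliency: Sequence[float], k: int = 2) -> List[int]:
    """Indices of the k features with the largest absolute attribution."""
    ranked = sorted(range(len(saliency)), key=lambda i: abs(saliency[i]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    def linear_score(v: Sequence[float]) -> float:
        # Hypothetical score (e.g. an OOD score or class logit) from a toy linear model.
        return 0.5 * v[0] - 2.0 * v[1] + 0.1 * v[2]

    s = occlusion_saliency([1.0, 3.0, 2.0], linear_score)
    print([round(a, 3) for a in s])  # [0.5, -6.0, 0.2]
    print(top_features(s))           # [1, 0]
```

For a linear score with a zero baseline, each attribution reduces to weight times feature value (here 0.5, -6.0 and 0.2), which makes a handy sanity check. The ranked indices from `top_features` are the kind of short summary an operator could review alongside an alert.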

Towards Provably Robust and Adaptive Intelligence

Genuine AI transparency requires techniques that go beyond performance metrics. Approaches such as mechanistic interpretability and representation engineering dissect model internals and expose the computations behind a model’s outputs, allowing researchers to stop treating AI as a ‘black box’ and begin to understand how decisions are made. Understanding these internal mechanisms also offers a way to address concept drift, the change over time in the relationship between inputs and targets that degrades performance as real-world data evolves. By identifying which features have become unreliable, engineers can adapt or retrain the system to maintain accuracy. This focus on interpretability and adaptability signals a shift toward resilient architectures, and a reminder that convenience should not come at the expense of correctness.
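As one concrete way to notice that data distributions are shifting, the sketch below computes the Population Stability Index (PSI) between a reference sample of a single feature and a current sample. PSI detects a change in the input distribution (covariate shift), which is a common early-warning proxy; it does not by itself detect a change in the relationship between inputs and targets. The bin cut points, the `eps` floor, and the 0.1 / 0.25 bands are conventions assumed here, not values from the paper.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], cuts: Sequence[float]) -> List[float]:
    """Fraction of values in each of len(cuts)+1 bins defined by sorted cut points.

    Values below cuts[0] fall in bin 0; values >= cuts[-1] fall in the last bin.
    """
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect.bisect_right(cuts, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        cuts: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(bin_fractions(reference, cuts), bin_fractions(current, cuts)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_level(value: float) -> str:
    """Common rule-of-thumb bands (an assumed convention, not a standard)."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    cuts = [2.5, 5.0, 7.5]
    reference = [i / 10 for i in range(100)]      # 0.0 .. 9.9, 25% per bin
    shifted = [i / 10 + 5.0 for i in range(100)]  # 5.0 .. 14.9
    print(drift_level(psi(reference, reference, cuts)))  # stable
    print(drift_level(psi(reference, shifted, cuts)))    # significant
```

In practice a check like this would run per feature on a schedule, with a "significant" result prompting the kind of inspection and retraining the paragraph describes.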

The pursuit of robust agentic AI, as detailed in this framework, requires attention to the properties that stay invariant as environments shift. This echoes Kernighan’s observation: “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” The proposed two-layered reliability monitoring, combining out-of-distribution detection with AI transparency, is not merely about identifying failures; it aims to make the causes of those failures demonstrably clear. Like a well-defined mathematical proof, the system’s behavior, even under unexpected conditions, should be logically traceable, revealing what remains constant, and what does not, as environmental complexity grows without bound.

Where Do We Go From Here?

The pursuit of ‘reliability’ in agentic systems often feels suspiciously like attempting to predict chaos with a spreadsheet. This work rightly identifies the need to move beyond mere performance metrics and grapple with the thorny issue of distributional shift. However, the presented framework, while a step toward transparency, merely detects potential failures; it does not, of itself, resolve them. A system that signals its impending doom is still doomed, albeit with advance warning. The core challenge remains: how to imbue these agents with the capacity for genuine, provable robustness, not just sensitivity to novelty.

Future efforts must address the inherent limitations of relying solely on observed data. Out-of-distribution detection, however sophisticated, is fundamentally reactive. The ideal, though perhaps unattainable, is an agent capable of reasoning about its own limitations: a meta-cognitive awareness of its epistemic boundaries. If it feels like magic when an agent gracefully handles an unexpected input, it simply means the invariant governing its behavior has not been revealed, or perhaps does not exist.

The long-term viability of agentic AI in safety-critical applications hinges not on increasingly complex monitoring systems, but on the development of formal guarantees. A framework that provides assurances, not just alerts, will be essential. The field needs fewer ‘black boxes’ emitting warnings, and more ‘glass boxes’ exhibiting demonstrable, mathematically verifiable correctness.

Original article: https://arxiv.org/pdf/2511.09178.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-13 14:48