Author: Denis Avetisyan

A new framework reveals how the spectral properties of key network operators govern both the robustness and interpretability of deep learning models.

This review unifies analysis of neural network stability and attribution robustness through a matrix-spectral approach, linking Jacobian spectra, the Neural Tangent Kernel, and algorithmic stability.

Despite advances in deep learning, understanding and guaranteeing the robustness of neural networks remains a central challenge. This paper, ‘A Unified Matrix-Spectral Framework for Stability and Interpretability in Deep Learning’, introduces a novel analytical approach representing networks through the lens of matrix spectra, revealing connections between Jacobian characteristics, the Neural Tangent Kernel, and Hessian curvature. We demonstrate that a Global Matrix Stability Index, derived from these spectral properties, effectively quantifies forward sensitivity, attribution robustness, and optimization conditioning-and that spectral entropy refines traditional sensitivity bounds. Can this framework provide practical, computable diagnostics for designing truly robust and interpretable deep learning models?

Deconstructing the Neural Oracle: A Jacobian Lens

A neural network’s reliability and trustworthiness hinge on its sensitivity to input variations; even subtle alterations to input data can dramatically affect predictions, demanding a thorough understanding of these responses. This sensitivity isn’t merely a technical detail, but a cornerstone of robustness – a network less susceptible to minor perturbations is more likely to perform consistently in real-world scenarios. Equally important is interpretability; analyzing how a network reacts to changes reveals which inputs exert the greatest influence on its decisions, offering insights into its internal logic and potential biases. Determining these sensitivities allows researchers to pinpoint vulnerabilities, improve generalization, and ultimately build more reliable and transparent artificial intelligence systems. \frac{\partial y}{\partial x} represents this crucial relationship, quantifying the change in output y for a given change in input x .

The Jacobian matrix offers a powerful means of dissecting the often opaque behavior of neural networks by quantifying how each input dimension linearly affects each output dimension. Essentially, it’s a matrix of all first-order partial derivatives, revealing the local sensitivity of a network’s predictions to infinitesimal changes in its inputs. \frac{\partial f}{\partial x} represents this sensitivity, and assembling these sensitivities into a matrix provides a complete picture of the network’s input-output relationship at a given point. This decomposition isn’t merely a mathematical exercise; understanding these linear sensitivities is critical for diagnosing network vulnerabilities, improving robustness against adversarial attacks, and ultimately, gaining a deeper insight into how a neural network arrives at its conclusions. By analyzing the Jacobian, researchers can pinpoint which inputs have the greatest influence on the network’s output, offering a crucial tool for both model interpretation and refinement.

The intricate behavior of deep neural networks often seems opaque, but the Chain Rule provides a powerful mechanism for dissecting their responses to input variations. This fundamental calculus principle enables researchers to decompose the overall sensitivity of a network’s output into a series of localized linear sensitivities at each layer. By applying the Chain Rule, one can trace how a small change in the input propagates through the network, being amplified or attenuated at each successive layer, ultimately influencing the final prediction. This layer-by-layer analysis not only reveals which layers are most responsible for specific output changes, but also allows for targeted interventions to improve network robustness and interpretability – essentially turning a complex, monolithic system into a series of understandable, interconnected components. \frac{\partial y}{\partial x} = \sum_{i=1}^{n} \frac{\partial y}{\partial z_i} \frac{\partial z_i}{\partial x} represents this decomposition, where y is the output, x is the input, and the zi represent intermediate layer activations.

Mapping Network Stability: Spectral Analysis

The Jacobian matrix, computed as the derivative of a neural network’s output with respect to its input, provides a linear approximation of the network’s behavior around a given input. The eigenvalues of this Jacobian constitute its spectrum, and their magnitudes directly indicate the degree to which small input perturbations are amplified or suppressed by the network. Eigenvalues with magnitudes greater than one signify amplification, indicating sensitivity to input changes in the corresponding eigenvector direction, while magnitudes less than one represent suppression or dampening of perturbations. Analyzing the distribution of these eigenvalues across the spectrum provides insights into the network’s overall stability and its susceptibility to adversarial attacks or noisy inputs; a spectrum concentrated around small values suggests a more stable network, while a spectrum with large eigenvalues indicates potential instability.

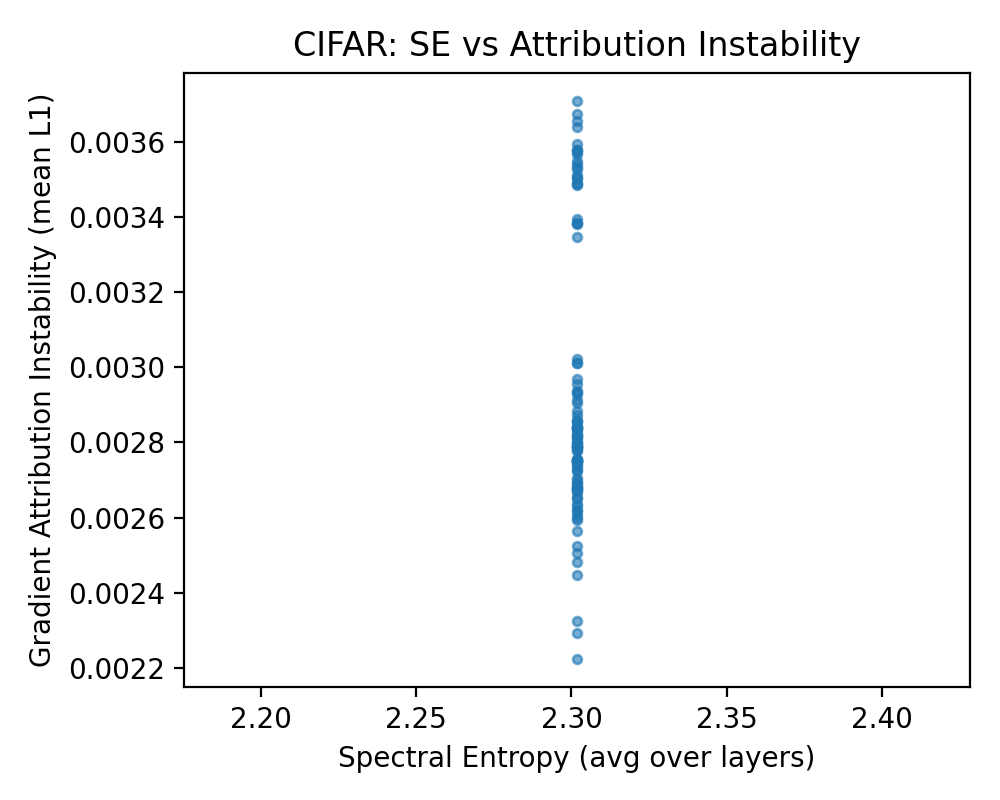

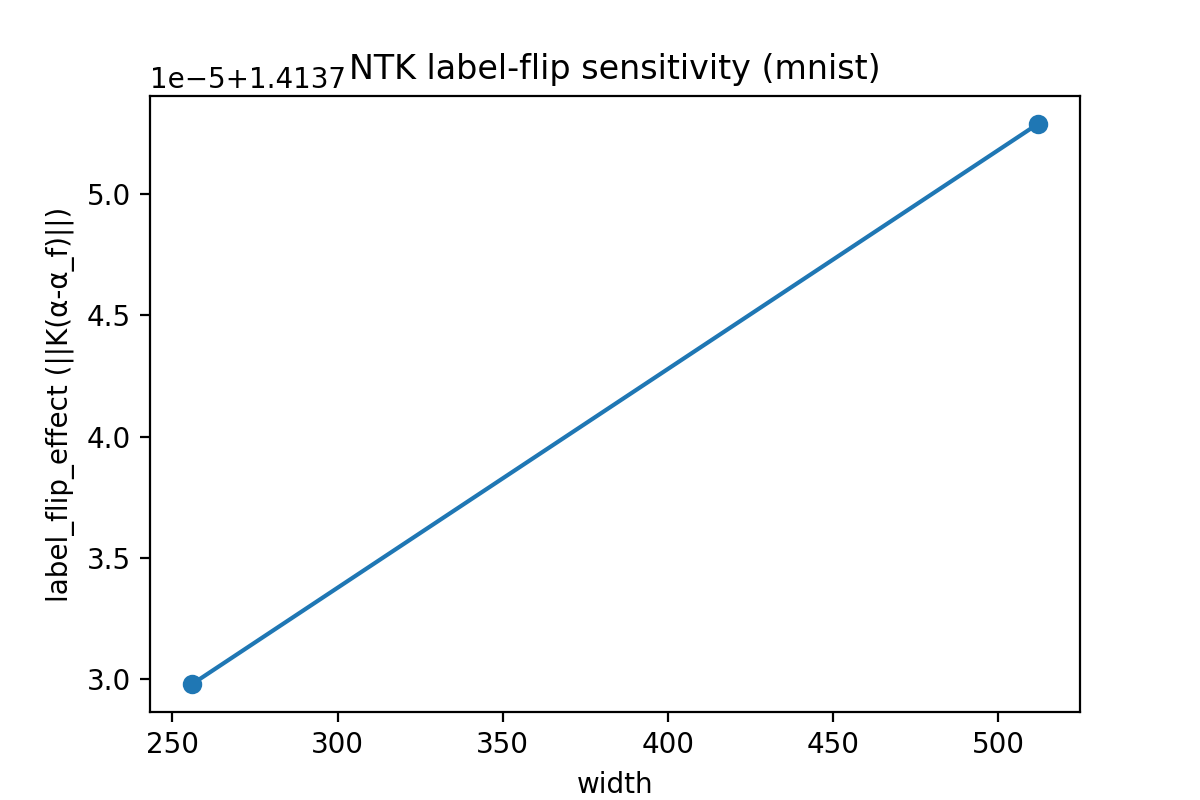

The Neural Tangent Kernel (NTK) spectrum and its associated spectral entropy provide a measure of a network’s sensitivity to input perturbations beyond the analysis of worst-case scenarios. While traditional adversarial analysis focuses on maximizing perturbation effects, spectral entropy quantifies the distribution of sensitivity across all input directions, indicating the typical magnitude of response to random noise. Observed spectral entropy values are not universal; they vary significantly depending on both the network architecture and the dataset used for training. For instance, studies have demonstrated differing entropy ranges when analyzing networks trained on the MNIST dataset compared to those trained on the more complex CIFAR-10 dataset, indicating a correlation between data complexity and the network’s overall sensitivity profile. H = - \sum_{i} \lambda_i log(\lambda_i), where \lambda_i are the eigenvalues of the NTK, represents the metric used to quantify this typical sensitivity.

Singular Value Decomposition (SVD) is a core technique for analyzing the Jacobian spectrum and characterizing network stability. Applying SVD to the Jacobian matrix J decomposes it into UΣV^T, where U and V are unitary matrices and Σ is a diagonal matrix containing the singular values. These singular values represent the magnitudes of the principal components of the Jacobian’s action, effectively quantifying the network’s sensitivity to input perturbations along different directions. By examining the distribution of these singular values – their range, mean, and variance – researchers can gain insights into the network’s capacity to amplify or suppress specific input patterns and, consequently, assess its robustness and generalization capabilities. The singular values directly relate to the eigenvalues of J^TJ and JJ^T, providing a computationally efficient means of extracting key spectral properties.

Forging a Unified Metric: The Global Matrix Stability Index

The Global Matrix Stability Index (GMSI) computes a comprehensive stability measure by aggregating spectral information derived from multiple Jacobian matrices. These matrices, calculated with respect to different input variables or model parameters, capture local sensitivity information. The GMSI doesn’t rely on a single Jacobian, but instead combines spectral properties – such as eigenvalues and singular values – across the set, providing a more holistic assessment of the system’s behavior. This aggregation allows for the identification of potentially unstable regions not readily apparent when examining individual Jacobians, and offers a robust metric for characterizing the overall stability landscape of the model or algorithm under consideration. The resulting index is a scalar value representing the degree of stability, with lower values indicating higher instability.

The Global Matrix Stability Index (GMSI) offers a method for evaluating the stability of a model by quantifying its sensitivity to input variations. Analytical Stability, determined through eigenvalue analysis of Jacobian matrices, assesses the model’s inherent stability given its defined parameters. Crucially, the GMSI extends this to predict Algorithmic Stability, which measures performance consistency when subjected to perturbations in the training dataset. By aggregating spectral information, the index provides a robust metric; small changes in the GMSI value under dataset variation indicate a high degree of algorithmic stability, while larger fluctuations suggest potential vulnerabilities to adversarial or naturally occurring data shifts. This predictive capability allows for proactive identification of models prone to failure under real-world conditions and enables targeted improvements to robustness.

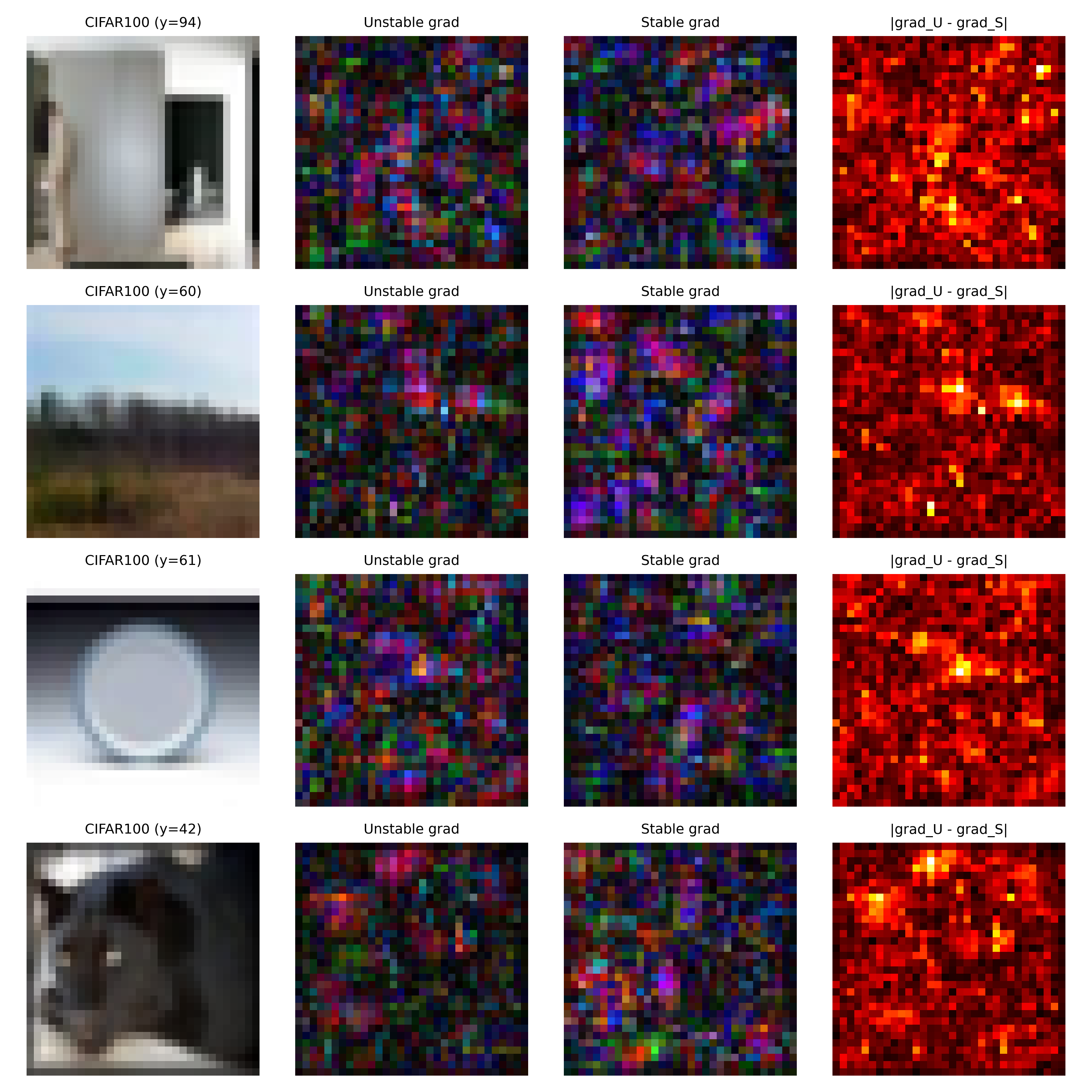

Random Matrix Theory (RMT) furnishes the analytical tools to interpret the spectral characteristics of Jacobian matrices used in stability analysis, allowing for the establishment of quantifiable stability bounds. Specifically, RMT provides a framework to differentiate between stable and unstable regimes based on the distribution of eigenvalues; deviations from expected distributions signal potential instability. Spectral Entropy, calculated from the eigenvalue distribution, serves as a diagnostic metric to quantify the degree of spectral concentration or dispersion; higher entropy indicates greater uncertainty and potentially reduced robustness to perturbations. Utilizing Spectral Entropy enables the identification of problematic regions within the input space and facilitates the development of more robust algorithms by constraining operational regimes to areas with lower spectral entropy and improved attribution characteristics.

Tracing the Line: Curvature, Robustness, and Attribution

The local curvature of a neural network’s loss landscape, as revealed by the Parameter Hessian, profoundly impacts the speed and stability of the learning process. This Hessian, a matrix of second-order partial derivatives, doesn’t merely describe the steepness of the loss function; it defines its shape – whether it resembles a well-conditioned bowl or a jagged, high-dimensional ravine. A positive definite Hessian indicates a local minimum with consistently increasing loss in all directions, facilitating efficient gradient descent. Conversely, ill-conditioning – characterized by large differences between eigenvalues – creates ‘flat’ or ‘elongated’ directions where gradients are small, slowing convergence and increasing the risk of oscillations. Essentially, the Hessian acts as a guide for optimization algorithms; understanding its properties allows researchers to develop strategies – like regularization or preconditioning – to ‘smooth’ the landscape and accelerate the journey towards optimal parameters, ultimately improving model training and generalization performance.

Attribution robustness, the reliability of explanations for a model’s decisions, is fundamentally tied to the conditioning of the Jacobian matrix. This matrix represents the sensitivity of a model’s output to changes in its input, and its conditioning-specifically, the ratio of its largest to smallest singular values-reveals how much certain input perturbations can be amplified or suppressed. A poorly conditioned Jacobian, characterized by a large condition number, indicates that even small changes in the input can lead to disproportionately large changes in the attribution map, rendering explanations unstable and unreliable. Consequently, models with ill-conditioned Jacobians are more susceptible to adversarial attacks designed to manipulate explanations, highlighting the critical importance of Jacobian conditioning as a measure of attribution stability and a key factor in ensuring trustworthy machine learning systems.

A network’s vulnerability to subtle input changes is directly related to the disparity between how it amplifies typical perturbations versus the most extreme ones, a relationship quantified by the Attribution Condition Number. Research demonstrates that a higher condition number – indicating a large gap between these amplification scenarios – correlates with increased sensitivity and potential instability in attribution maps. Importantly, this condition number demonstrably decreases as Spectral Entropy increases, revealing a fundamental trade-off: maximizing anisotropy in the network’s response also enhances its stability. This connection is empirically supported by reduced Fréchet Distance (FD) values, a measure of map divergence, when spectral regularization techniques – which promote higher spectral entropy – are applied, suggesting a pathway to more robust and reliable attribution methods.

The pursuit of understanding deep neural networks, as outlined in this framework, echoes a fundamental principle: to truly grasp a system, one must dissect its components. This research, focusing on Jacobian spectra and the Neural Tangent Kernel, effectively treats the network as a ‘black box’ ripe for reverse-engineering. It isn’t enough to observe the output; the stability and interpretability hinge on understanding the internal dynamics, the sensitivities encoded within the matrix structures. As Blaise Pascal observed, “The eloquence of angels is no more than the vibration of air, nor have they any power to move stones.” Similarly, the apparent ‘magic’ of deep learning resolves into quantifiable spectral properties, demonstrating that even complex phenomena are governed by underlying, measurable principles. The matrix stability index, in particular, offers a lens to view these principles directly.

Beyond the Code

This work reveals a certain elegance – a hidden consistency in the apparent chaos of deep learning. The linking of Jacobian spectra, the Neural Tangent Kernel, and algorithmic stability suggests reality is, indeed, open source – the network’s behavior not random, but a consequence of underlying mathematical structure. However, identifying the patterns is not the same as understanding the compiler. Current analysis primarily focuses on infinitesimal perturbations and linear regimes. The true challenge lies in extending these matrix-spectral tools to accommodate the highly non-linear, finite-sized networks actually deployed in practice.

A critical limitation remains the computational cost associated with eigenvalue decomposition of these high-dimensional matrices. While the framework offers a powerful diagnostic, it’s presently more suited to post-hoc analysis than real-time monitoring or adaptive training. Future research should explore approximations, dimensionality reduction techniques, and potentially, hardware acceleration to overcome this bottleneck. The relationship between spectral entropy and generalization error also warrants further investigation; is high entropy always detrimental, or can it signify a robust, flexible representation?

Ultimately, this approach implies that interpretability isn’t about finding what a network learns, but how it learns – the specific algorithmic choices encoded within its spectral signature. The next step isn’t simply to build more accurate models, but to reverse-engineer the learning process itself, decoding the rules that govern this artificial intelligence. Only then can the code be truly mastered.

Original article: https://arxiv.org/pdf/2602.01136.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- All Golden Ball Locations in Yakuza Kiwami 3 & Dark Ties

- NBA 2K26 Season 5 Adds College Themed Content

- Hollywood is using “bounty hunters” to track AI companies misusing IP

- What time is the Single’s Inferno Season 5 reunion on Netflix?

- EUR INR PREDICTION

- Mario Tennis Fever Review: Game, Set, Match

- Gold Rate Forecast

- Brent Oil Forecast

- Exclusive: First Look At PAW Patrol: The Dino Movie Toys

- Heated Rivalry Adapts the Book’s Sex Scenes Beat by Beat

2026-02-04 00:45