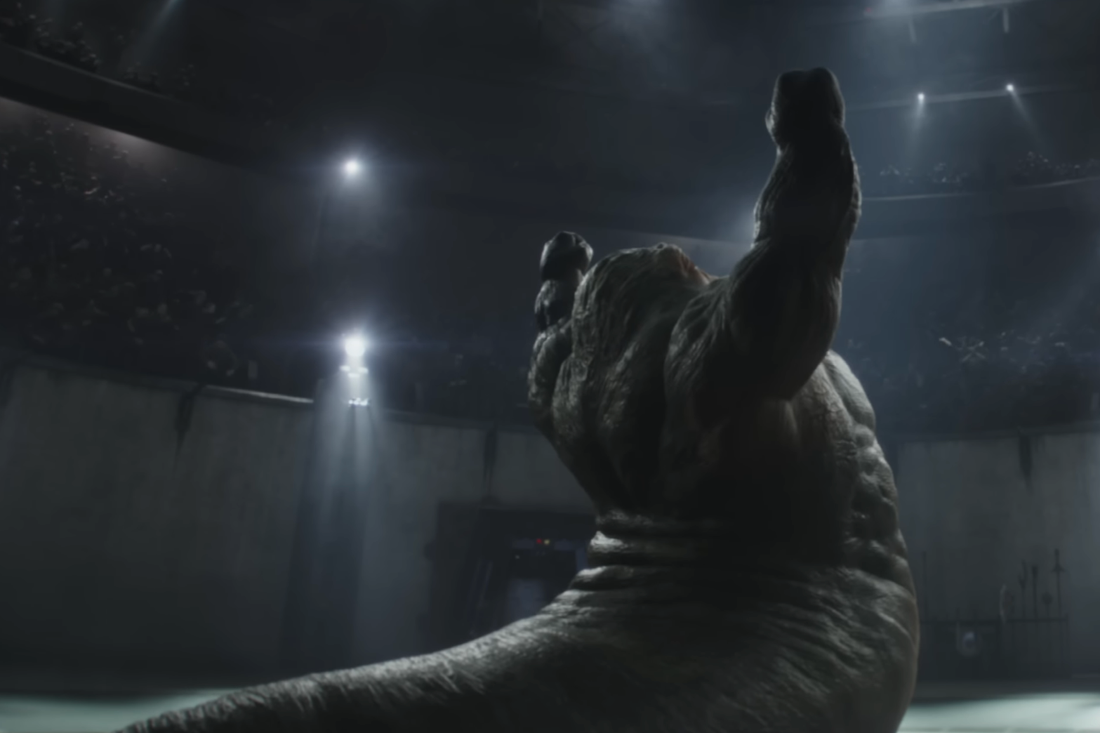

The upcoming 2026 Netflix film War Machine, starring Alan Ritchson, is generating a lot of excitement. It should not be confused with the 2017 film of the same name starring Brad Pitt. Judging by how many people are watching the trailer, a huge audience is eager to see the star of Reacher battle a Transformer-like robot that often takes the form of a Cybertruck. Much of that enthusiasm comes from the popular Amazon series Reacher, which made Ritchson a household name. The show’s third season pitted Reacher against a huge, powerful opponent, so giving him a formidable robotic enemy to fight feels like a natural next step.