Author: Denis Avetisyan

A new framework leverages the power of speech recognition and artificial intelligence to transform unstructured emergency communications into actionable data for improved UAV coordination.

This review details SIREN, an AI-driven system for converting voice data into structured semantic information to enhance situational awareness and network management in UAV-assisted emergency response scenarios.

While terrestrial communication infrastructure often fails during emergencies, reliable voice communication remains critical for first responders, yet its unstructured nature hinders automated network management. This limitation motivates the research presented in ‘Voice-Driven Semantic Perception for UAV-Assisted Emergency Networks’, which introduces SIREN, an AI framework that converts emergency voice traffic into structured, machine-readable data. By integrating Automatic Speech Recognition with Large Language Models, SIREN enables UAV-assisted networks to achieve enhanced situational awareness and adaptive resource allocation. Could this approach pave the way for truly intelligent, human-in-the-loop emergency response systems?

The Inherent Noise of Emergency Communication

Emergency services have historically depended on voice radio for immediate communication, a system that, while reliable, presents significant challenges in the digital age. This reliance creates a flood of unstructured data (spoken words lacking standardized formats or tags), making it difficult for computers to interpret and analyze critical information. Unlike structured data such as sensor readings or database entries, voice transmissions require speech-to-text conversion and natural language processing before any meaning can be extracted. Consequently, valuable insights regarding incident location, severity, and resource needs are often locked within these audio streams, inaccessible to automated systems designed to improve response efficiency and coordination. This gap between communication methods and technological capabilities hinders the development of truly intelligent emergency response platforms, limiting the potential for data-driven decision-making during critical events.

The absence of readily organized information during emergencies significantly impairs the ability to accurately assess rapidly evolving situations. Without structured data, responders struggle to build a comprehensive understanding of the event – the location, scale, and specific needs at the incident site remain unclear for longer than necessary. This informational void directly impacts decision-making, forcing reliance on incomplete or delayed reports and hindering the efficient deployment of resources. Consequently, critical minutes – and sometimes hours – are lost as personnel attempt to piece together the puzzle of the unfolding crisis, ultimately increasing risk to both victims and those providing assistance. Effective situational awareness, built upon structured data, is therefore not merely a logistical advantage, but a fundamental requirement for minimizing harm and maximizing the success of emergency interventions.

The efficient processing of emergency communications remains a significant challenge, largely due to the difficulty in converting spoken reports into usable data. Currently, vital information conveyed through radio transmissions often requires manual transcription and interpretation, creating delays that can critically impact response times. This bottleneck prevents real-time analysis of unfolding events, hindering the ability to accurately assess the scale of a crisis, identify immediate needs, and deploy resources effectively. Automated systems capable of extracting key details – such as location, type of incident, and required assistance – directly from these communications promise to revolutionize emergency management, enabling faster, more informed decisions and ultimately improving outcomes for both responders and those affected by disaster.

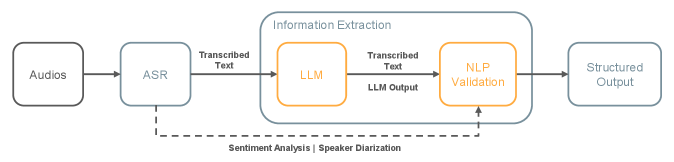

SIREN: Imposing Order on Chaos Through AI

SIREN is an artificial intelligence framework engineered to convert unstructured emergency voice communications into structured, machine-readable data. This is achieved by processing audio input and extracting key information, thereby enabling automated analysis that would otherwise require manual transcription and interpretation. The system aims to address the inherent challenges of processing spontaneous, often unclear, emergency calls by translating human speech into a standardized format suitable for integration with various applications, such as incident management systems, resource allocation platforms, and post-event analysis tools. By bridging the gap between natural language and structured data, SIREN facilitates faster, more efficient responses to critical situations and supports improved situational awareness.

SIREN utilizes schema-constrained prompting, a technique where the large language model is directed to produce outputs strictly adhering to a predefined JSON schema. This enforced structure ensures all extracted information (such as incident type, location, and caller details) is consistently formatted and tagged. Because the output format is predictable, downstream systems need no ad hoc post-processing to reorganize the data beyond standard JSON parsing, which enables direct integration with databases, dispatch systems, and analytical tools. This streamlined data delivery minimizes latency and facilitates automated workflows, improving the efficiency of emergency response operations.
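To make the idea concrete, here is a minimal Python sketch of schema-constrained prompting. It is an illustration under stated assumptions, not the paper's implementation: the schema fields (`unit_id`, `incident_type`, `location`, `urgency`), the prompt wording, and the helper names `build_prompt` and `parse_and_validate` are all hypothetical. The sketch makes no model call; it only shows how a schema can be embedded in the prompt and how a reply can be checked against it.

```python
import json

# Hypothetical schema: the field names and allowed values are illustrative
# assumptions, not the schema used in the paper.
INCIDENT_SCHEMA = {
    "type": "object",
    "properties": {
        "unit_id": {"type": "string"},
        "incident_type": {
            "type": "string",
            "enum": ["fire", "medical", "collapse", "flood", "other"],
        },
        "location": {"type": "string"},
        "urgency": {"type": "integer"},
    },
    "required": ["unit_id", "incident_type", "location", "urgency"],
}

# Maps the JSON Schema type names used above to Python types.
_PY_TYPES = {"string": str, "integer": int}


def build_prompt(transcript: str) -> str:
    """Embed the schema in the instruction so the model is told exactly what to emit."""
    return (
        "Extract the incident report from the transcript below.\n"
        "Respond with a single JSON object that conforms to this JSON Schema "
        "and contains no other text:\n"
        f"{json.dumps(INCIDENT_SCHEMA, indent=2)}\n\n"
        f"Transcript:\n{transcript}"
    )


def parse_and_validate(raw: str) -> dict:
    """Parse a model reply and check it against the small schema subset used here."""
    record = json.loads(raw)
    if not isinstance(record, dict):
        raise ValueError("expected a JSON object")
    for name in INCIDENT_SCHEMA["required"]:
        if name not in record:
            raise ValueError(f"missing required field: {name}")
    for name, value in record.items():
        spec = INCIDENT_SCHEMA["properties"].get(name)
        if spec is None:
            raise ValueError(f"unexpected field: {name}")
        expected = _PY_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {name} should be of type {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"field {name} has a value outside the allowed set")
    return record


# Stand-in for a model reply; no LLM is called in this sketch.
reply = '{"unit_id": "Alpha-2", "incident_type": "fire", "location": "Main St", "urgency": 4}'
record = parse_and_validate(reply)
```

Even when the prompt constrains the format, a cheap validation step like this is a reasonable safeguard, since a malformed reply can then be rejected or retried before it reaches a dispatch system.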

Performance evaluations show that SIREN achieves a Word Error Rate (WER) of under 15% when processing clean audio via the Assembly API, compared with 15.31% for the local Whisper model under identical testing conditions. WER is the number of word-level substitutions, deletions, and insertions needed to turn the transcript into the reference text, divided by the number of words in the reference, so lower values indicate more accurate transcription. This difference in WER reflects more accurate conversion of spoken language into text, which matters because every downstream extraction step depends on the transcript.
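For readers unfamiliar with the metric, the sketch below computes WER as the word-level edit distance (substitutions, deletions, and insertions) divided by the reference length. The function name `word_error_rate`, the lowercase-and-split normalization, and the sample sentences are illustrative assumptions; the paper's own evaluation pipeline may normalize text differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one substitution ("five" -> "nine") and one deletion ("at")
# against an 8-word reference.
wer = word_error_rate(
    "unit five requesting medical support at north bridge",
    "unit nine requesting medical support north bridge",
)
print(f"WER = {wer:.2%}")  # prints "WER = 25.00%"
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.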

SIREN utilizes speaker diarization and sentiment analysis to process emergency communications, enabling identification of individual speakers and assessment of emotional states within the audio. Performance metrics demonstrate SIREN’s robustness; when employing the Assembly API, the system maintains a Word Error Rate (WER) below 18% even with noisy audio inputs. This contrasts sharply with the local Whisper model, which experiences a substantial increase in WER to 39.80% under the same conditions, indicating a significant performance advantage for SIREN in challenging acoustic environments.

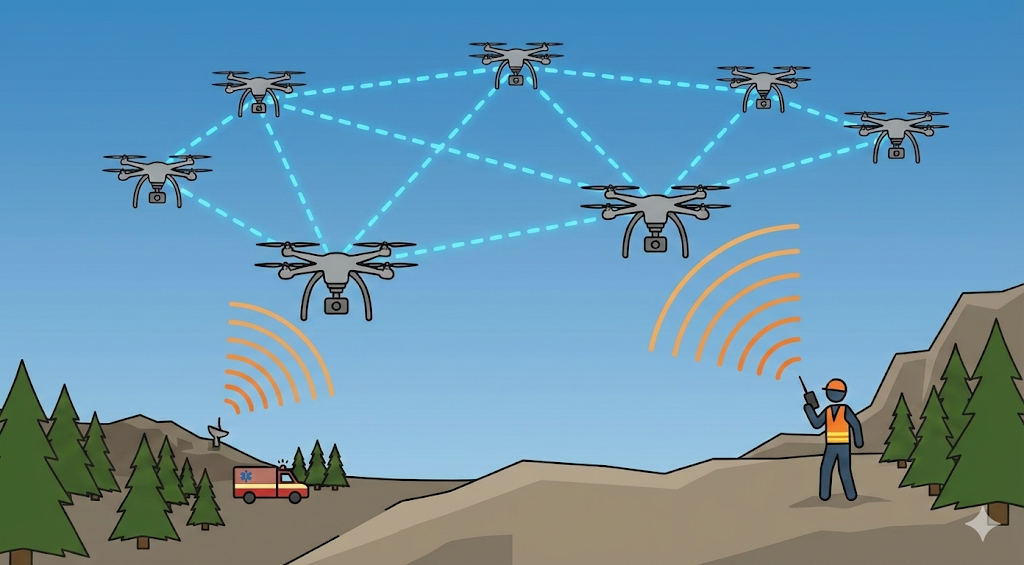

UAV Networks: Extending Reach Through Intelligent Deployment

Integrating the SIREN system with unmanned aerial vehicle (UAV)-assisted networks creates a communication infrastructure capable of rapid deployment and adaptation in emergency situations. This integration allows for the establishment of temporary, mobile communication nodes where existing infrastructure is damaged or unavailable. By leveraging UAVs as aerial platforms, SIREN extends the range and resilience of communication networks, enabling real-time data transmission and coordination between first responders and affected populations. The dynamic nature of this combined system permits on-demand network reconfiguration, optimizing coverage and capacity based on the evolving needs of the emergency response effort. This contrasts with static, pre-configured networks that lack the flexibility to address rapidly changing conditions.

The System for Intelligent Recognition and Emergency Navigation (SIREN) provides Unmanned Aerial Vehicle (UAV) positioning data crucial for optimizing network performance and resource allocation. This functionality enables the precise geolocation of UAVs within the network, allowing for dynamic adjustments to communication pathways and bandwidth distribution. Specifically, SIREN’s location data supports the deployment of UAVs to areas requiring increased connectivity or to act as mobile relay points, improving overall network coverage and resilience. The accuracy of this positioning directly impacts the efficiency of resource deployment, ensuring that communication assets are directed to the locations where they are most needed during emergency situations.

The data extracted by SIREN directly informs communication resource allocation within UAV-assisted networks. This functionality enables dynamic bandwidth distribution, prioritizing connectivity to locations identified as requiring immediate assistance. By analyzing received signals and extracting relevant positional data, the system can intelligently direct available bandwidth to specific units or areas, maximizing communication efficiency during emergency response. This targeted allocation minimizes congestion and ensures critical communications are prioritized, improving overall network performance and the speed of response operations.
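One simple way to turn extracted urgency into a bandwidth split is a guaranteed floor per unit plus a proportional share of what remains. The sketch below is an assumption for illustration only: the article does not specify the allocation rule, and the function name `allocate_bandwidth`, the urgency scale, and the floor value are hypothetical.

```python
from typing import Dict


def allocate_bandwidth(
    urgency_by_unit: Dict[str, int],
    total_mbps: float,
    floor_mbps: float = 0.5,
) -> Dict[str, float]:
    """Give every unit a guaranteed floor, then split the rest in proportion to urgency.

    Urgency values are assumed to be non-negative integers (hypothetical scale).
    """
    count = len(urgency_by_unit)
    if count == 0:
        return {}
    if floor_mbps * count > total_mbps:
        raise ValueError("total bandwidth cannot cover the per-unit floor")

    spare = total_mbps - floor_mbps * count
    weight_sum = sum(urgency_by_unit.values())
    allocation = {}
    for unit, urgency in urgency_by_unit.items():
        # Fall back to an equal split if every unit reports zero urgency.
        share = urgency / weight_sum if weight_sum else 1 / count
        allocation[unit] = floor_mbps + spare * share
    return allocation


# Made-up units and urgencies: 10 Mbps total, 1 Mbps floor each.
plan = allocate_bandwidth({"medic-1": 3, "engine-4": 1}, total_mbps=10.0, floor_mbps=1.0)
print(plan)  # prints {'medic-1': 7.0, 'engine-4': 3.0}
```

The floor keeps low-urgency units reachable, which matters because a unit's urgency can change with its next transmission.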

During Scenario 1 testing, the SIREN system successfully identified the location of and extracted data from all units with 100% accuracy. This performance was maintained consistently across both controlled, clean audio environments and those with simulated noise interference. The achievement of complete success in both conditions validates SIREN’s robustness and reliability in practical deployment scenarios where audio quality may be variable. These results establish a baseline for performance comparison against more complex operational environments.

In Scenario 1, SIREN exhibited the shortest execution time when compared to more complex operational scenarios. This baseline performance indicates the system’s efficiency under optimal conditions, providing a benchmark for evaluating performance degradation in more challenging environments. The reduced computational load in Scenario 1, characterized by simplified parameters and fewer variables, allowed for rapid processing and data analysis. This swift execution time is critical for time-sensitive applications, such as emergency response, where immediate access to location and unit identification data is paramount. The comparatively minimal processing requirements in this scenario highlight SIREN’s potential for scalability and real-time operation.

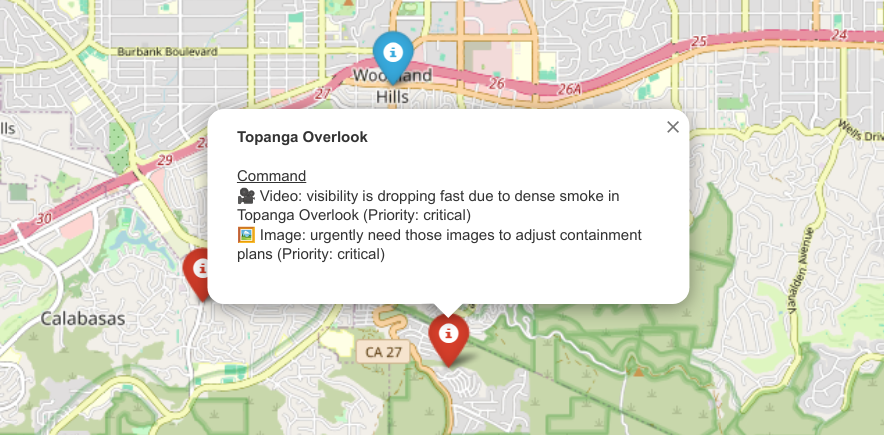

Network state visualization is achieved through integration with the Folium library, enabling the creation of interactive geographical maps displaying the status of UAV-based network nodes. Folium allows for the plotting of UAV locations, signal strength indicators, and coverage areas, providing a real-time overview of network connectivity. This visual representation facilitates informed decision-making regarding resource allocation and network optimization, allowing operators to quickly identify areas with weak or no coverage, and to deploy resources accordingly. The interactive nature of Folium maps allows for dynamic updates reflecting changes in network conditions and UAV positions, supporting responsive network management during emergency scenarios.
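As a rough illustration of this kind of map, the sketch below plots hypothetical UAV nodes with Folium. The node dictionary layout (`id`, `lat`, `lon`, `status`, `coverage_m`), the status names, and the color mapping are assumptions made for the example and are not taken from the paper. Folium is a third-party package, so it is imported only inside the function that needs it.

```python
from typing import Dict, List

# Hypothetical status-to-color mapping for map icons.
STATUS_COLORS = {"ok": "green", "degraded": "orange", "down": "red"}


def node_style(status: str) -> str:
    """Return the marker color for a node status, gray if the status is unknown."""
    return STATUS_COLORS.get(status, "gray")


def build_network_map(nodes: List[Dict], out_path: str = "uav_network.html") -> str:
    """Render UAV nodes and their coverage circles to an HTML file."""
    import folium  # third-party: pip install folium

    # Center the map on the mean position of all nodes.
    center = [
        sum(n["lat"] for n in nodes) / len(nodes),
        sum(n["lon"] for n in nodes) / len(nodes),
    ]
    fmap = folium.Map(location=center, zoom_start=14)
    for n in nodes:
        color = node_style(n["status"])
        # Coverage footprint; folium.Circle takes its radius in meters.
        folium.Circle(
            location=[n["lat"], n["lon"]],
            radius=n["coverage_m"],
            color=color,
            fill=True,
            fill_opacity=0.15,
        ).add_to(fmap)
        folium.Marker(
            location=[n["lat"], n["lon"]],
            popup=f'{n["id"]}: {n["status"]}',
            icon=folium.Icon(color=color),
        ).add_to(fmap)
    fmap.save(out_path)
    return out_path


# Made-up nodes; call build_network_map(example_nodes) to write the HTML file.
example_nodes = [
    {"id": "uav-1", "lat": 40.1800, "lon": 44.5100, "status": "ok", "coverage_m": 400},
    {"id": "uav-2", "lat": 40.1850, "lon": 44.5200, "status": "degraded", "coverage_m": 250},
]
```

Folium writes a self-contained HTML page, so keeping the view current means regenerating the map whenever node positions or statuses change.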

Augmenting Human Expertise: A Collaborative Imperative

Despite SIREN’s capabilities in automating data extraction and network management, the system is designed to function alongside, not replace, human oversight. Critical incident response often demands contextual understanding and nuanced judgment that, even with advanced algorithms, remain uniquely human strengths; ambiguities in data, unforeseen circumstances, or ethical considerations frequently necessitate intervention. The framework therefore prioritizes a collaborative approach, allowing human operators to review AI-generated insights, validate findings, and ultimately retain control over critical decisions, ensuring responsible and effective outcomes during emergencies. This human-in-the-loop methodology safeguards against potential errors and biases inherent in automated systems, enhancing both the reliability and trustworthiness of the overall response.
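A human-in-the-loop gate can be as simple as routing uncertain extractions to an operator queue. The sketch below assumes, hypothetically, that each extraction carries a confidence score between 0 and 1; the `Extraction` type, the 0.8 threshold, and the `triage` helper are illustrative and are not described in the article.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Extraction:
    unit_id: str
    location: Optional[str]  # None when no location could be extracted
    confidence: float        # hypothetical score in [0, 1]


def needs_human_review(item: Extraction, threshold: float = 0.8) -> bool:
    """Flag items that lack a location or fall below the confidence threshold."""
    return item.location is None or item.confidence < threshold


def triage(
    items: List[Extraction], threshold: float = 0.8
) -> Tuple[List[Extraction], List[Extraction]]:
    """Split extractions into (auto-accepted, queued for an operator)."""
    accepted: List[Extraction] = []
    review: List[Extraction] = []
    for item in items:
        (review if needs_human_review(item, threshold) else accepted).append(item)
    return accepted, review


# Made-up extractions for illustration.
accepted, review = triage([
    Extraction("medic-1", "North Bridge", 0.95),
    Extraction("engine-4", None, 0.90),
    Extraction("rescue-2", "Main St", 0.55),
])
print([e.unit_id for e in review])  # prints ['engine-4', 'rescue-2']
```

The operator still sees everything in the review queue, so the threshold only controls how much is surfaced for manual confirmation rather than what is discarded.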

Effective emergency response often demands more than just rapid data processing; it requires interpretation grounded in real-world understanding. The integration of artificial intelligence with human expertise addresses this need by leveraging AI’s capacity for swiftly analyzing complex datasets alongside a human’s ability to apply nuanced judgment and contextual awareness. This synergistic approach allows for a more comprehensive evaluation of situations, particularly those involving ambiguous information or unpredictable factors. Rather than replacing human decision-making, the system augments it, providing responders with AI-driven insights that inform, but do not dictate, their actions, ultimately leading to more effective and appropriate interventions.

The integration of artificial intelligence with human oversight demonstrably elevates the precision and efficacy of emergency response protocols. This synergistic approach allows for the swift processing of complex data – gleaned from sources like computer vision systems – while retaining the critical element of contextual judgment. By combining automated insights with human expertise, the framework avoids the pitfalls of relying solely on algorithmic decisions in unpredictable scenarios. Consequently, responders can more accurately assess risks, prioritize interventions, and ultimately maximize positive outcomes while minimizing potential harm to both individuals and infrastructure. This balanced methodology isn’t simply about speed; it’s about fostering a more reliable and adaptable emergency management system.

The SIREN framework leverages computer vision to achieve a deeper understanding of emergency situations, moving beyond simple object detection to semantic perception. This capability allows the system to not only identify elements like vehicles or buildings, but also to interpret their relationships and significance within the broader context. By analyzing visual data, the framework can infer critical details – such as identifying a blocked roadway, assessing the severity of structural damage, or recognizing potential hazards – thereby providing responders with a more complete and actionable situational awareness. This enhanced perception is crucial for informed decision-making, enabling a more targeted and effective response to rapidly evolving events and minimizing potential risks.

The framework detailed in this research prioritizes a demonstrable, provable transformation of chaotic input (emergency voice communications) into actionable, structured data. This aligns with Vinton Cerf’s observation: “The Internet treats everyone the same.” The SIREN system, much like the foundational principles of the internet, aims for universal interpretability. By leveraging automatic speech recognition and large language models, it strips away much of the ambiguity inherent in natural language, reducing complex scenarios to quantifiable data points. This isn’t merely about making the system work on test cases; it’s about establishing a robust, scalable method for enhancing situational awareness within UAV-assisted emergency networks, adhering to principles of mathematical purity in its data processing.

Beyond the Signal: Charting a Course for Semantic Fidelity

The pursuit of translating chaotic vocalizations into actionable intelligence, as demonstrated by this work, inevitably exposes the inherent fragility of semantic representation. While the framework successfully extracts structured data, the very notion of ‘structure’ imposed upon emergency communications is a simplification. The elegance of an algorithm lies not merely in its function, but in its acknowledgment of what it cannot know. Future iterations must confront the epistemological limits of natural language processing; an utterance’s meaning is always contingent, its truth perpetually deferred.

The current reliance on large language models, while pragmatic, invites a crucial inquiry: is apparent understanding sufficient? The system ‘performs’ well on defined metrics, but genuine situational awareness demands more than statistical correlation. The challenge resides in moving beyond pattern recognition toward a formal verification of inferred meaning – a system capable of not only reporting what is said, but proving its logical consistency within the operational context.

Ultimately, the true measure of success will not be the speed of data extraction, but the minimization of false positives and, more importantly, false negatives. A perfectly efficient system that consistently misinterprets critical information is a beautiful, yet profoundly dangerous, artifact. The next frontier lies in the development of methods for quantifying and mitigating the inherent uncertainty in translating the analog world of human speech into the digital realm of actionable data.

Original article: https://arxiv.org/pdf/2602.17394.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-21 20:23