Author: Denis Avetisyan

Researchers are harnessing the power of distributed learning and advanced neural networks to improve the accuracy of lung disease diagnosis while safeguarding patient data.

This study presents a hybrid federated learning framework integrating Convolutional Neural Networks and SWIN Transformers for enhanced lung disease detection.

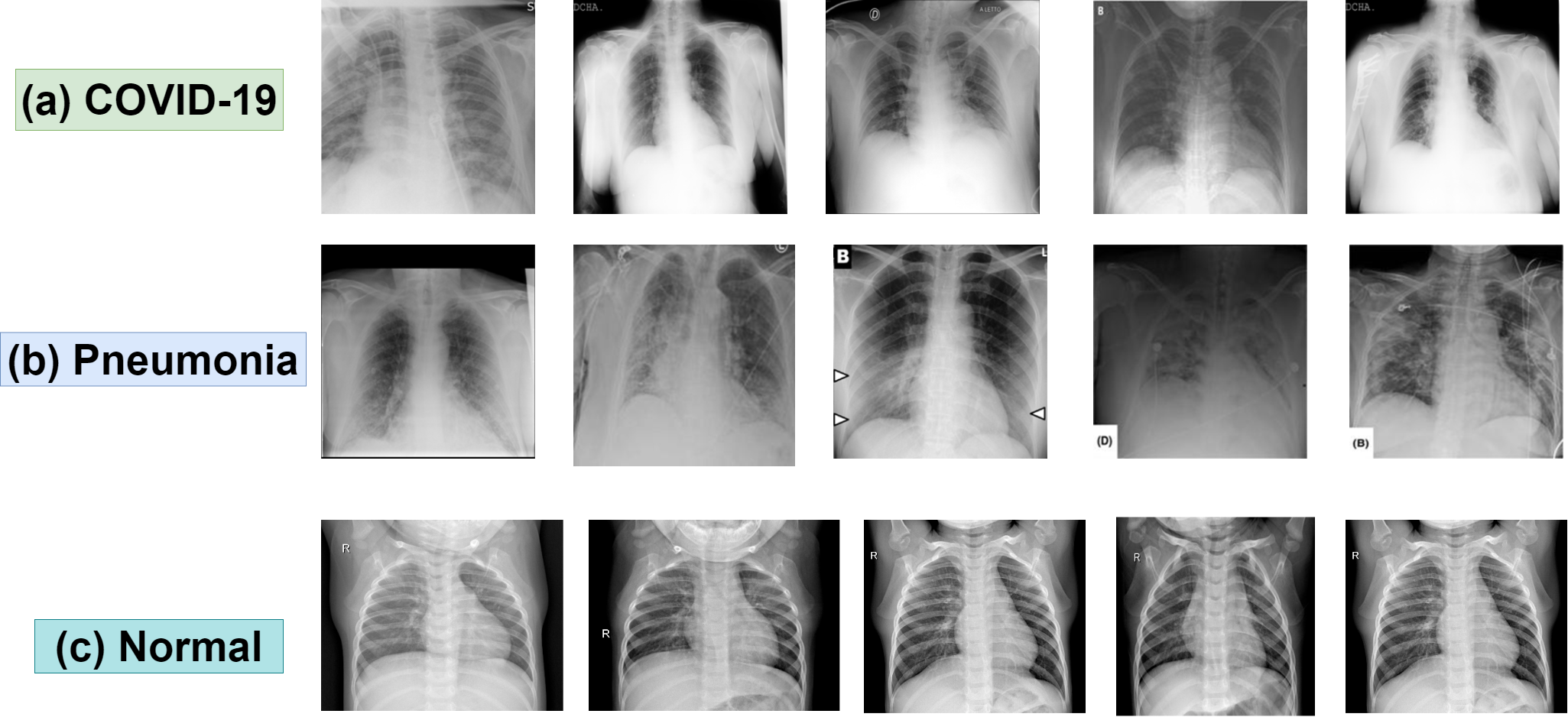

Effective lung disease diagnosis is often hindered by data scarcity and privacy concerns, limiting the generalizability of predictive models. This research introduces ‘A Hybrid Federated Learning Based Ensemble Approach for Lung Disease Diagnosis Leveraging Fusion of SWIN Transformer and CNN’, a novel framework that combines the strengths of convolutional and transformer neural networks within a federated learning architecture. The proposed approach achieves improved diagnostic accuracy while preserving data confidentiality across multiple institutions. Could this distributed learning paradigm unlock more robust and reliable AI-driven solutions for critical medical challenges?

The Imperative of Precision in Pulmonary Diagnostics

The timely and precise identification of lung diseases, including Acute Respiratory Distress Syndrome (ARDS), COVID-19, and pneumonia, significantly affects patient prognosis and survival. Current diagnostic techniques, however, often struggle with the subtle visual cues present in medical imaging. These diseases can present with remarkably similar symptoms and imaging features in their early stages, and variations in image quality, patient anatomy, and disease progression make interpretation harder still. Existing methods rely heavily on subjective assessment by radiologists, which introduces inter-observer variability and can delay diagnosis. There is therefore a clear need for analytical tools that can discern subtle patterns in lung images, improve diagnostic accuracy, and ultimately enhance patient care.

Manual interpretation by expert radiologists is also slow: each scan requires careful, detailed examination, which can delay the start of treatment. Because visual analysis is subjective, different radiologists may reach different conclusions from the same images, and such inconsistencies can lead to misdiagnosis or delayed intervention. More objective and efficient diagnostic tools would reduce these risks and improve patient care pathways.

At the same time, the volume of medical imaging keeps growing, driven by aging populations and more proactive screening programs, and this places heavy strain on healthcare infrastructure worldwide. Radiologists face ever-growing workloads, which create diagnostic bottlenecks and raise the risk of oversight. Automated diagnostic tools are therefore becoming essential for managing this volume. AI-based systems promise to accelerate image analysis and improve consistency and accuracy, augmenting rather than replacing medical professionals and helping to ensure timely intervention for patients with critical respiratory conditions. Developing and deploying such tools is an important step toward a more sustainable and responsive healthcare system.

An Ensemble Architecture for Robust Feature Extraction

The system employs an ensemble of four pretrained models, three Convolutional Neural Networks (VGG19, Inception V3, and DenseNet201) and a SWIN Transformer, to improve feature extraction from medical images. Each architecture contributes different strengths: VGG19 uses a deep, uniform stack of small convolutional filters; Inception V3 extracts features at multiple scales in parallel branches; DenseNet201 connects each layer to all subsequent layers within a dense block, promoting feature reuse; and the SWIN Transformer computes self-attention within local windows that are shifted between successive blocks, building a hierarchical representation that models context across the image. Combining the outputs of these diverse models captures a broader range of image characteristics and reduces dependence on the features learned by any single network, which should make the analysis more robust and accurate.
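To make the SWIN Transformer's windowing concrete, the sketch below shows only the partitioning step, assuming a single (H, W, C) feature map held as a NumPy array. The map is cut into non-overlapping square windows, inside which self-attention would be computed; in alternating blocks the map is first cyclically shifted by half a window, so the new windows straddle the borders of the old ones. The function names are hypothetical, and the attention computation itself, the attention mask used with shifted windows, and patch merging are all omitted. This is an illustration of the general idea, not code from the study.

```python
import numpy as np


def window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Split an (H, W, C) feature map into non-overlapping window x window tiles.

    Returns shape (num_windows, window, window, C); in a Swin block,
    self-attention would be computed independently inside each tile.
    """
    h, w, c = x.shape
    if h % window or w % window:
        raise ValueError("H and W must be divisible by the window size")
    tiles = x.reshape(h // window, window, w // window, window, c)
    # Bring the two window-grid axes together, then flatten them into one axis.
    return tiles.transpose(0, 2, 1, 3, 4).reshape(-1, window, window, c)


def shifted_window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Cyclically shift the map up and left by half a window, then partition.

    Used in alternating blocks so that the new windows straddle the borders
    of the previous ones, letting information flow between neighboring windows.
    """
    shift = window // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window)


if __name__ == "__main__":
    fmap = np.arange(64).reshape(8, 8, 1)
    print(window_partition(fmap, 4).shape)  # (4, 4, 4, 1)
    # First shifted window covers rows 2..5 and columns 2..5 of the original map.
    print(shifted_window_partition(fmap, 4)[0, :, :, 0])
```

The cyclic shift keeps the number and size of windows unchanged, which is why it is cheaper than padding the map to create offset windows.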

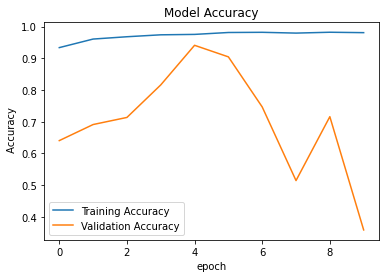

Transfer learning was applied to all four models to avoid training from scratch and to improve performance on the target medical imaging dataset. Each model (VGG19, Inception V3, DenseNet201, and SWIN Transformer) was initialized with weights pretrained on ImageNet, so that it starts from general-purpose image features, and was then fine-tuned on the medical images. According to the study, transfer learning accelerated convergence and improved generalization, which is particularly valuable when labeled medical data are limited. The reported validation accuracies were 94.4% for VGG19, 94.5% for Inception V3, 94.1% for DenseNet201, and 82.5% for the SWIN Transformer.

Images are preprocessed before they reach the deep learning models. The steps include intensity normalization to a standard range, bias field correction to compensate for intensity inhomogeneities introduced during acquisition, and noise reduction with median and Gaussian filters. Data standardization gives consistent pixel value ranges across the dataset, which improves convergence and performance. Finally, images are resized and cropped to the uniform input size expected by each model in the ensemble (VGG19, Inception V3, DenseNet201, and SWIN Transformer).
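The study does not list its exact preprocessing parameters, so the following NumPy-only sketch uses assumed values throughout: a 3×3 median filter, Gaussian smoothing with σ = 1, nearest-neighbor resizing, and placeholder mean and standard deviation for standardization. The order of the steps is also an assumption. Bias field correction is left out because it usually relies on a dedicated algorithm such as N4, and cropping is omitted for brevity. All function names and parameters are hypothetical. The `size` argument would be set per model, for example 224 for VGG19 and DenseNet201 and 299 for Inception V3 in their standard ImageNet configurations.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def min_max_normalize(img: np.ndarray) -> np.ndarray:
    """Rescale intensities to [0, 1]; a constant image maps to all zeros."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    return np.zeros_like(img) if hi == lo else (img - lo) / (hi - lo)


def median_filter3(img: np.ndarray) -> np.ndarray:
    """3x3 median filter (edge-padded) to suppress salt-and-pepper noise."""
    windows = sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))
    return np.median(windows, axis=(-2, -1))


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()  # normalized so flat regions keep their value


def gaussian_blur(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian smoothing: filter the rows, then the columns."""
    k = gaussian_kernel1d(sigma)
    padded = np.pad(img, len(k) // 2, mode="reflect")

    def conv(v):
        return np.convolve(v, k, mode="valid")

    return np.apply_along_axis(conv, 0, np.apply_along_axis(conv, 1, padded))


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor resize of a 2-D image to (out_h, out_w)."""
    h, w = img.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]


def preprocess(img, size=224, mean=0.5, std=0.25):
    """Hypothetical pipeline: normalize -> denoise -> resize -> standardize.

    mean/std are placeholders; in practice they would be computed on the
    training set. The grayscale result is repeated into 3 channels because
    ImageNet-pretrained backbones expect RGB input.
    """
    x = min_max_normalize(img)
    x = gaussian_blur(median_filter3(x), sigma=1.0)
    x = resize_nearest(x, size, size)
    x = (x - mean) / std
    return np.repeat(x[:, :, None], 3, axis=2)
```

In a real pipeline, library routines (and interpolating rather than nearest-neighbor resizing) would normally replace these hand-written helpers; they are spelled out here only to show what each step does.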

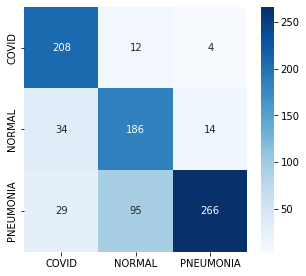

The ensemble combines the outputs of these models to make disease detection more accurate and reliable. Individually, the three CNNs reached validation accuracies between 94.1% and 94.5%, while the SWIN Transformer reached 82.5%. To the extent that the architectures make different kinds of errors, integrating them offsets the limitations of any single model and yields more robust, better-generalizing performance than any network used in isolation.
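The summary does not specify exactly how the model outputs are fused. One common option is soft voting: average the class probabilities predicted by each model, optionally with per-model weights, and choose the class with the highest fused probability. The sketch below assumes each model already produces a softmax probability matrix of shape (n_samples, n_classes); `soft_vote` and `predict` are hypothetical helpers, not the authors' fusion method.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Fuse per-model class probabilities by (weighted) averaging.

    prob_list: sequence of arrays, each (n_samples, n_classes), one per model.
    weights:   optional per-model weights; normalized to sum to 1.
    Returns the fused (n_samples, n_classes) probability matrix.
    """
    probs = np.stack([np.asarray(p, dtype=np.float64) for p in prob_list])
    w = np.ones(len(probs)) if weights is None else np.asarray(weights, dtype=np.float64)
    w = w / w.sum()
    # Contract the model axis: sum over m of w[m] * probs[m]
    return np.tensordot(w, probs, axes=1)


def predict(prob_list, weights=None):
    """Index of the class with the highest fused probability for each sample."""
    return soft_vote(prob_list, weights).argmax(axis=1)
```

Weighting each model by its validation accuracy (for example 0.944, 0.945, 0.941, and 0.825) is one hypothetical way to reduce the influence of the weaker SWIN Transformer; a learned fusion layer trained on the concatenated features is another.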

Decentralized Learning for Preserving Data Integrity

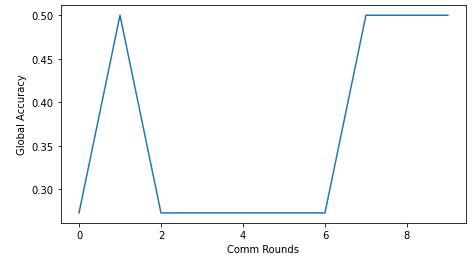

Federated Learning is utilized to construct a Global Model through collaborative training across multiple hospitals, each maintaining exclusive control over its patient data. Instead of directly exchanging datasets, each hospital trains a Local Model on its private data. Only the parameters – specifically, model weights and biases – derived from these Local Models are shared with a central server. This server aggregates these contributions to update the Global Model, which is then redistributed to the hospitals for further local training iterations. This process ensures that sensitive patient information remains within the secure environment of each hospital, while simultaneously allowing the system to leverage a significantly larger and more diverse dataset for improved model performance and generalization capabilities.

Decentralized training through Federated Learning reduces privacy risk because patient data never have to be centralized. Each participating hospital keeps control of its local dataset, and only model updates, not the raw data, are shared for global aggregation. Because the data stay within each institution's secure boundaries, the system can learn from a larger and more diverse pool of data than a single institution could provide, without the data pooling that traditional centralized training requires. This added volume and diversity should improve generalization and robustness, including for underrepresented patient groups and rarer conditions, while preserving patient confidentiality and compliance with data privacy regulations.

The global model improves through repeated rounds of updates contributed by the local models. In each round, every local model is trained on its private dataset and produces an update (gradients or updated weights) that reflects the patterns it has learned. The server aggregates these updates, often with a weighted average that reflects each client's dataset size or, in some schemes, model quality, and applies the result to the global model. Repeating this over many rounds progressively refines the global parameters and improves generalization to unseen data. Because the process is iterative, the global model can keep adapting as new data become available at the participating sites.
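The weighted-averaging loop can be sketched with Federated Averaging (FedAvg), in which each client's updated weights are averaged in proportion to its number of training samples. To keep the example small and self-contained, a logistic-regression model trained by plain gradient descent stands in for the CNN and Transformer networks, and the client data are synthetic. `local_train`, `fedavg`, and `run_rounds` are hypothetical names, and the study's exact aggregation rule may differ.

```python
import numpy as np


def local_train(w, X, y, lr=0.1, epochs=5):
    """Run a few epochs of full-batch gradient descent on the logistic loss.

    Executes at the hospital; X (with a trailing bias column) and y never leave it.
    """
    w = w.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-X @ w))  # predicted probabilities
        w -= lr * X.T @ (p - y) / len(y)  # gradient step on the mean logistic loss
    return w


def fedavg(client_weights, client_sizes):
    """Average client weight vectors, each weighted by its local sample count."""
    sizes = np.asarray(client_sizes, dtype=np.float64)
    stacked = np.stack(client_weights)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()


def run_rounds(clients, n_features, rounds=20):
    """Server loop: broadcast global weights, collect local updates, aggregate."""
    global_w = np.zeros(n_features)
    for _ in range(rounds):
        updates = [local_train(global_w, X, y) for X, y in clients]
        sizes = [len(y) for _, y in clients]  # only weights and counts are shared
        global_w = fedavg(updates, sizes)
    return global_w
```

For instance, two clients holding 1 and 3 samples with weights [1, 0] and [3, 4] aggregate to [(1 + 9) / 4, (0 + 12) / 4] = [2.5, 3.0], so the larger client pulls the global model toward its own solution.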

In this way, the federated approach obtains a robust and generalizable model from decentralized data sources without any direct data exchange. The global model is updated iteratively using only locally computed parameters (gradients or model weights) from each participating institution. Because raw patient data remain within each hospital, the risk of data breaches and privacy violations is reduced. Aggregation techniques such as federated averaging combine the local updates into a global model that generalizes better to unseen data and predicts more accurately across diverse patient populations.

Demonstrable Precision and Real-World Impact

The evaluation of the diagnostic system reports high accuracy in detecting lung diseases. The fusion system reached a final validation accuracy of 97.0%, outperforming each of the individual models (82.5% to 94.5%) and, according to the authors, conventional diagnostic techniques. This improvement points toward more precise and reliable diagnoses, which could enable earlier intervention and better patient care.

The system is designed with speed in mind, since diagnostic delays can significantly worsen patient prognosis. Low latency, meaning a short time between image input and result, is a core design goal, aimed at near real-time disease detection. Faster results would let clinicians assess patients and start appropriate treatment sooner, which matters especially in the management of acute lung conditions.

The fusion model trained stably, with consistently low losses: the training loss fell below 0.10 and the validation loss below 0.14. The small gap between the two suggests that the model generalizes from the training data to unseen examples rather than memorizing the training set, which lowers the risk of overfitting. Together, these values indicate that the model is learning patterns characteristic of lung disease and should perform consistently on data resembling its validation set.
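A quick consistency check supports reading the reported losses as unitless values rather than percentages, assuming the loss is the mean categorical cross-entropy and that it was measured on the same validation set as the 97.0% accuracy. A misclassified sample has true-class probability $p_i(y_i) \le 1/2$, because some other class scored at least as high, so it contributes at least $\ln 2$ to the loss. Writing $e$ for the error rate over $N$ validation samples:

$$
\bar{\ell} \;=\; \frac{1}{N}\sum_{i=1}^{N} -\ln p_i(y_i) \;\ge\; \frac{1}{N}\sum_{i:\,\hat{y}_i \neq y_i} -\ln p_i(y_i) \;\ge\; e \ln 2
$$

At 97.0% accuracy, $e = 0.030$, so the validation loss must be at least $0.030 \times 0.693 \approx 0.021$. A literal 0.14% (that is, 0.0014) falls below this bound, whereas a loss of 0.14 is consistent with it.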

The benefits of this system could extend beyond accuracy metrics. Earlier and more reliable detection of lung diseases would allow prompt treatment and may improve survival rates. Automated analysis could also ease economic pressure on healthcare by reducing costly, time-consuming manual reads and the unnecessary interventions that follow misdiagnoses. By reducing the potential for human error and streamlining workflows, it would lessen the demands on overburdened healthcare professionals, freeing them to concentrate on direct patient care and complex cases. Taken together, automated lung disease detection of this kind points toward a more proactive and efficient model of care, in which advanced diagnostic tools help clinicians deliver timely and effective treatment.

The pursuit of robust diagnostic models in this research into federated learning for lung disease detection echoes a basic tenet of sound engineering. Fusing CNNs with SWIN Transformers is not merely about higher metrics; it is about building a more reliable system from well-understood components. In a loose sense, this recalls Donald Knuth’s remark that “premature optimization is the root of all evil”: the researchers settle on a sound architectural foundation, combining the strengths of different network types, before pursuing peak performance. A logically sound, well-constructed model, even if initially slower, leaves more room for refinement than a hastily optimized, brittle one. The article’s hybrid approach follows this principle, building on proven architectures rather than speculative gains.

Future Directions

The presented synthesis of convolutional and transformer architectures within a federated learning framework achieves a demonstrable, if predictably incremental, improvement in diagnostic accuracy. However, the core challenge remains unaddressed: the fundamental limitations of inductive bias. Simply aggregating models, even with transfer learning, does not resolve the inherent ambiguity present in medical imaging data. A formally defined measure of epistemic uncertainty, incorporated directly into the loss function, would be a necessary, though undoubtedly arduous, step toward a truly robust solution.
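As a rough illustration of the kind of quantity meant here (and not the formally defined measure the paragraph calls for), disagreement among ensemble members is a common informal proxy for epistemic uncertainty. The predictive entropy of the averaged prediction splits into the average entropy of the individual members, often read as aleatoric (data) uncertainty, and a remainder, the mutual information between the prediction and the choice of model, which grows when members disagree. The sketch assumes member probabilities stacked into an array of shape (n_members, n_samples, n_classes); the function names are hypothetical, and it does not show how such a term would be incorporated into a loss function.

```python
import numpy as np


def entropy(p: np.ndarray, axis: int = -1, eps: float = 1e-12) -> np.ndarray:
    """Shannon entropy in nats along the class axis (eps avoids log(0))."""
    return -(p * np.log(p + eps)).sum(axis=axis)


def uncertainty_decomposition(member_probs: np.ndarray):
    """Split predictive uncertainty for an ensemble.

    member_probs: (n_members, n_samples, n_classes) softmax outputs.
    Returns per-sample (total, aleatoric, epistemic) arrays, where
    epistemic = total - aleatoric is the mutual information.
    """
    mean_p = member_probs.mean(axis=0)
    total = entropy(mean_p)                         # entropy of the averaged prediction
    aleatoric = entropy(member_probs).mean(axis=0)  # expected entropy of each member
    epistemic = total - aleatoric                   # large when members disagree
    return total, aleatoric, epistemic
```

Two members that confidently predict opposite classes give an epistemic term close to ln 2, while identical members give zero regardless of how uncertain each one is individually.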

Further investigation must center on the provability of convergence within the federated setting. Current validation relies on empirical observation across a finite dataset – a condition insufficient for clinical deployment. The heterogeneity of data distributions across participating institutions introduces a source of systemic error that necessitates a more rigorous mathematical treatment. A formal analysis of the impact of non-IID data on the generalization bounds is paramount.
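Empirical studies of non-IID federated learning often simulate label skew by drawing each class's split across clients from a Dirichlet distribution with concentration α: small α gives each client a lopsided mix of classes, while large α approaches an IID split. The sketch below is such a partitioner. It is a standard simulation device for experiments, not the formal analysis called for above, and `dirichlet_partition` and `label_counts` are hypothetical helpers not taken from the study.

```python
import numpy as np


def dirichlet_partition(labels, n_clients, alpha, seed=0):
    """Split sample indices across clients with Dirichlet(alpha) label skew.

    For each class, a proportion vector over clients is drawn from
    Dirichlet(alpha, ..., alpha) and that class's shuffled indices are cut
    accordingly. Returns one sorted index array per client.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_idx = [[] for _ in range(n_clients)]
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        props = rng.dirichlet(alpha * np.ones(n_clients))
        # Cumulative proportions give n_clients - 1 cut points into idx.
        cuts = (np.cumsum(props)[:-1] * len(idx)).astype(int)
        for k, part in enumerate(np.split(idx, cuts)):
            client_idx[k].extend(part.tolist())
    return [np.array(sorted(ix), dtype=int) for ix in client_idx]


def label_counts(labels, parts, n_classes):
    """Per-client class histogram, shape (n_clients, n_classes)."""
    labels = np.asarray(labels)
    return np.array([np.bincount(labels[p], minlength=n_classes) for p in parts])
```

Sweeping α (for example from 0.1 to 100) and tracking the global model's accuracy gives an empirical picture of how sensitive federated training is to data heterogeneity.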

Ultimately, the true measure of progress will not be incremental gains in accuracy, but a shift toward algorithms that prioritize verifiability. The pursuit of ‘good enough’ solutions, while pragmatically appealing, obscures the essential task: to construct a diagnostic system whose behavior can be demonstrably predicted, and whose errors are, at least in principle, understandable.

Original article: https://arxiv.org/pdf/2602.17566.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-21 03:35