Author: Denis Avetisyan

A new approach leverages neural networks to rapidly and accurately determine the internal motions of galaxies, offering a significant speedup over conventional methods.

This review details the application of machine learning, specifically neural networks, to the dynamical modeling of galaxy kinematics, utilizing techniques like convolutional neural networks and multi-Gaussian expansion.

Traditional dynamical modelling of galaxies relies on simplifying assumptions that may not accurately reflect real galactic structures. This limitation motivates the work presented in ‘Dynamical Modelling of Galactic Kinematics using Neural Networks’, which explores a machine learning approach to infer galactic kinematics from observational data. By training neural networks on a dataset generated from Jeans Anisotropic Modelling, we demonstrate that accurate dynamical models can be constructed with significantly improved computational speed. Could this approach unlock more detailed and efficient explorations of galaxy formation and evolution?

The Illusion of Order: Mapping Light to Galactic Dynamics

Conventional dynamical modeling of galaxies frequently depends on making simplifying assumptions about their mass distribution, often expressed through parameterized forms. A common technique employed is Multi-Gaussian Expansion (MGE), which decomposes a galaxy’s brightness profile into a sum of Gaussian components, subsequently used to infer dynamical parameters like velocity dispersion and orbital structure. However, this process is inherently computationally intensive, requiring significant processing time and resources, particularly when dealing with high-resolution data or complex galaxy morphologies. Furthermore, the reliance on predefined functional forms introduces potential biases and limitations, as the true underlying mass distribution may deviate from the assumed model. These challenges underscore the need for alternative approaches that can efficiently and accurately reconstruct galaxy dynamics without being constrained by strong prior assumptions or excessive computational demands.
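To make the decomposition concrete, the sketch below evaluates a brightness profile written as a sum of two-dimensional Gaussians, using the standard MGE parameterisation (total luminosity, dispersion, and observed axial ratio per component). The function name and the three example components are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import math

def mge_surface_brightness(x, y, components):
    """Evaluate an MGE surface-brightness model at sky position (x, y).

    components: iterable of (total_luminosity, sigma, axial_ratio) tuples,
    one per Gaussian; x runs along the projected major axis.
    """
    total = 0.0
    for lum, sigma, q in components:
        # Normalisation so that each Gaussian integrates to `lum`.
        norm = lum / (2.0 * math.pi * sigma**2 * q)
        total += norm * math.exp(-(x**2 + (y / q) ** 2) / (2.0 * sigma**2))
    return total

# Hypothetical three-component galaxy in arbitrary units (illustration only).
example_components = [(1.0, 0.5, 0.9), (2.0, 2.0, 0.7), (4.0, 6.0, 0.6)]
central = mge_surface_brightness(0.0, 0.0, example_components)
on_major_axis = mge_surface_brightness(1.0, 0.0, example_components)
on_minor_axis = mge_surface_brightness(0.0, 1.0, example_components)
```

Because every axial ratio here is below one, the model is flattened: at the same distance from the centre it is brighter along the major axis than along the minor axis.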

Determining a galaxy’s hidden mass distribution from the light it emits presents a significant challenge in astrophysics. While observed light provides clues about stellar motions and gravitational effects, multiple underlying mass configurations can often produce remarkably similar luminous appearances – a phenomenon known as degeneracy. This means that inferring the true mass distribution isn’t a straightforward process; slight variations in orbital parameters or stellar populations can mask the precise location and quantity of dark matter, leading to inaccuracies. Consequently, researchers grapple with the inherent ambiguity, striving to develop techniques that can reliably disentangle these effects and produce robust reconstructions of galactic mass distributions, essential for understanding galaxy formation and evolution.

Traditional methods for determining galactic dynamics often encounter significant hurdles when comprehensively surveying the vast parameter space of possible mass distributions. The process of fitting observed light profiles to dynamical models frequently becomes computationally intractable, demanding excessive processing time and resources. This inefficiency stems from the complex, non-linear relationship between observable features and underlying parameters, creating numerous local minima and making it difficult to identify the globally optimal solution. Furthermore, accurately quantifying the uncertainties associated with these derived parameters presents a considerable challenge; conventional approaches often rely on simplifying assumptions or computationally expensive Monte Carlo simulations to estimate error margins, which may not fully capture the true range of possibilities. Consequently, the resulting dynamical models can be unreliable or lack the necessary precision for robust scientific conclusions.

Recognizing the limitations of traditional dynamical modeling, researchers are increasingly turning to data-driven methodologies to directly establish a relationship between observed galactic light and fundamental dynamical parameters. This approach bypasses the need for computationally intensive techniques like Multi-Gaussian Expansion and reduces reliance on potentially flawed initial assumptions about the underlying mass distribution. By leveraging machine learning algorithms trained on large datasets of simulated or observed galaxies, it becomes possible to learn the complex, non-linear mapping that connects light – a readily observable quantity – to parameters such as mass, velocity dispersion, and orbital structure. Such a learned mapping offers the potential for faster, more accurate, and less biased reconstructions of galactic dynamics, ultimately enabling a more comprehensive understanding of galaxy formation and evolution. This shift promises to unlock new insights by efficiently navigating the parameter space and providing robust quantification of uncertainties inherent in dynamical modeling.

From Pixels to Parameters: A Neural Mirror for Galaxies

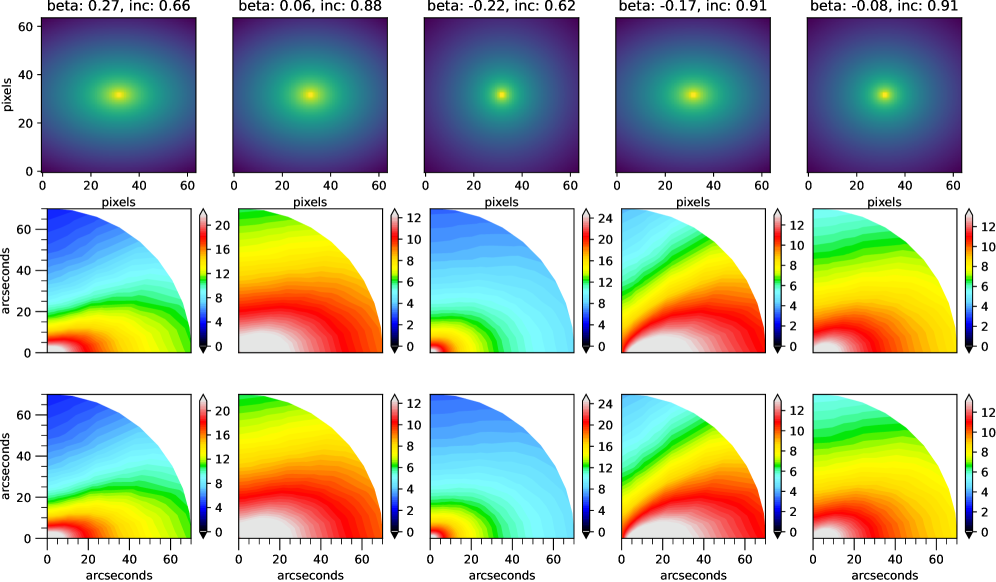

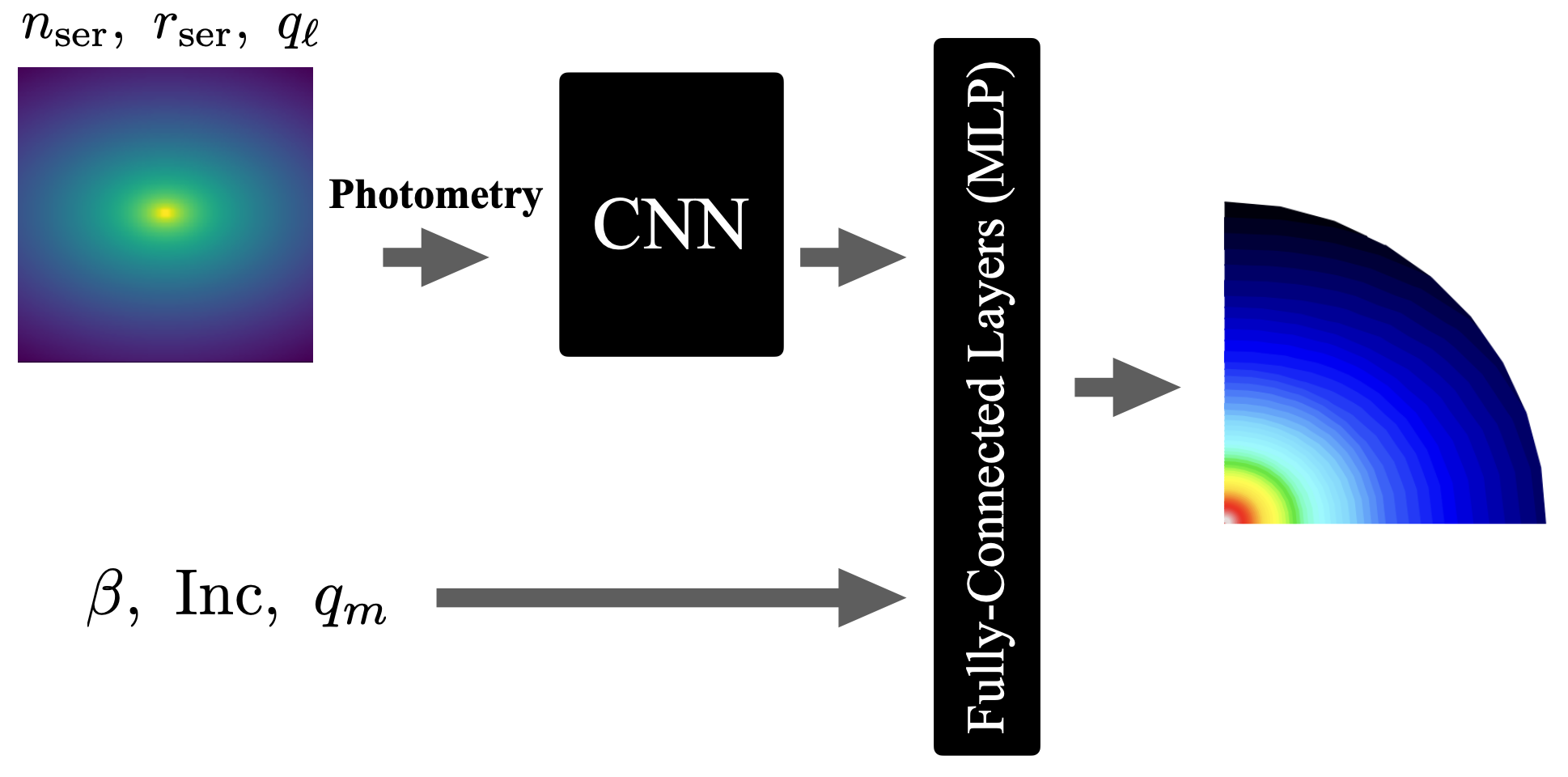

Convolutional Neural Networks (CNNs) are utilized to process mock photometry data, which represents the observed light distribution of galaxies. These CNNs function as automated feature extractors, identifying patterns and structures within the photometric data that correlate with underlying galactic properties. The input to the CNN is the two-dimensional pixel data representing the galaxy’s light, and the network learns hierarchical representations through convolutional filters. These filters detect edges, shapes, and textures, ultimately generating a set of robust features that are less sensitive to noise and observational artifacts than traditional methods of light profile analysis. The extracted features encapsulate information about the galaxy’s morphology and are then passed to subsequent layers for further processing and dynamical parameter estimation.
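The idea of a convolutional filter responding to structure in an image can be illustrated with a minimal, pure-Python sketch. The toy image and the hand-picked edge filter below are assumptions made for illustration; a trained CNN would learn its own filters, and the paper's actual architecture is not reproduced here.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists) with no padding, stride 1.

    As in deep-learning "convolution" layers, this is technically a
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy 4x5 "image": dark on the left, bright on the right.
toy_image = [[0, 0, 1, 1, 1] for _ in range(4)]
# Hand-picked horizontal-gradient filter (hypothetical, not a learned one).
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]
feature_map = conv2d_valid(toy_image, edge_kernel)
```

The resulting feature map is large where the window straddles the dark-to-bright boundary and zero where the window sees uniform brightness, which is the sense in which a filter "detects" an edge.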

Following feature extraction via the Convolutional Neural Network, the resulting data is input to a Multi-Layer Perceptron (MLP) which serves a dual purpose: reconstructing the galaxy’s second moment maps and directly predicting its dynamical parameters. The MLP architecture facilitates this by learning a complex, non-linear relationship between the convolutional features and the target dynamical quantities. Second moment maps, representing the spread of stellar velocities, are generated as an intermediate output, allowing for validation against established kinematic tracers. The final layer of the MLP outputs the predicted dynamical parameters, such as velocity dispersion and rotation curves, effectively translating observed light distributions into quantifiable kinematic information.

The Multi-Layer Perceptron (MLP) incorporates Leaky Rectified Linear Unit (LeakyReLU) activation functions to address limitations of traditional ReLU functions. Unlike ReLU, which outputs zero for all negative inputs, LeakyReLU allows a small, non-zero gradient when the input is negative. This is mathematically defined as $f(x) = \begin{cases} x, & \text{if } x > 0 \\ \alpha x, & \text{if } x \le 0 \end{cases}$, where $\alpha$ is a small constant, typically 0.01. By preventing the “dying ReLU” problem, in which neurons become inactive due to zero gradients, LeakyReLU maintains gradient flow during backpropagation, enabling the network to learn more complex relationships and improve its representational capacity, particularly in the context of reconstructing second moment maps and predicting dynamical parameters from photometric data.
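The piecewise definition above translates directly into code. This is a minimal sketch for scalar inputs, using the typical slope of 0.01 mentioned in the text; the function names are illustrative.

```python
def leaky_relu(x, alpha=0.01):
    """Return x for positive inputs and alpha * x otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    """Derivative of leaky_relu: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha

positive_output = leaky_relu(2.0)         # passes through unchanged
negative_output = leaky_relu(-3.0)        # scaled by alpha, not zeroed
negative_slope = leaky_relu_grad(-3.0)    # small but non-zero gradient
```

The non-zero slope for negative inputs is what keeps a unit trainable: with plain ReLU the corresponding gradient would be exactly zero.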

Traditional methods for determining galactic dynamical parameters rely on constructing detailed models of stellar orbits and mass distributions, requiring significant computational resources and often incorporating simplifying assumptions about the system. This deep learning architecture circumvents these requirements by directly learning the relationship between observed photometric data – representing the galaxy’s light distribution – and key dynamical quantities such as velocity dispersion and rotation curves. By training on a large dataset of mock observations, the network learns to implicitly represent the complex physics governing galactic dynamics, effectively bypassing the need for explicit physical modeling and allowing for rapid and potentially more accurate parameter estimation from observational data.

The Algorithm’s Refinement: Honing the Predictive Mirror

The Multi-Layer Perceptron (MLP) is trained with the Adam optimization algorithm and a Mean Squared Error (MSE) loss function to establish a relationship between input data and dynamical parameters. Adam, an adaptive learning rate optimization algorithm, adjusts learning rates for each parameter individually. The MSE loss function, calculated as the average of the squared differences between predicted and true dynamical parameters, quantifies the model’s error: $\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$, where $y_i$ represents the true dynamical parameter and $\hat{y}_i$ is the model’s prediction. Minimizing this error, through iterative adjustments guided by Adam, results in a trained MLP capable of accurately estimating dynamical parameters from observational data.
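The following sketch shows the MSE loss and a single-parameter Adam update in plain Python, then uses them to fit one weight on a toy problem. The hyperparameters are the commonly quoted Adam defaults apart from the learning rate; the toy data, the learning rate of 0.1, and the 200-step loop are assumptions for illustration and say nothing about the settings used in the paper.

```python
import math

def mse(y_true, y_pred):
    """Mean of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2     # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

# Toy problem (hypothetical): recover w in y = w * x with true w = 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
w, m, v = 0.0, 0.0, 0.0
initial_loss = mse(ys, [w * x for x in xs])
for t in range(1, 201):
    # Analytic gradient of the MSE with respect to w.
    grad = 2.0 / len(xs) * sum((w * x - y) * x for x, y in zip(xs, ys))
    w, m, v = adam_step(w, grad, m, v, t, lr=0.1)
final_loss = mse(ys, [w * x for x in xs])
```

On the very first step the bias-corrected ratio reduces to roughly the sign of the gradient, so the parameter moves by about one learning rate regardless of the gradient's magnitude. This is one reason Adam is comparatively insensitive to how the loss is scaled.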

The ReduceLROnPlateau learning rate scheduler is implemented to improve both the convergence speed and stability of the training process. This scheduler monitors the validation loss and, when it plateaus – indicating minimal improvement over a defined patience period – the learning rate is reduced by a factor of 0.1. This dynamic adjustment prevents the optimization process from oscillating around a local minimum and allows for finer adjustments to the model weights, ultimately leading to a more robust and accurate solution. The patience is set to 10 epochs, meaning the validation loss must not improve for 10 consecutive epochs before the learning rate is reduced; this prevents unnecessary adjustments during periods of active learning.
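The plateau logic can be sketched in a few lines. This is a simplified stand-in written for illustration, not the deep-learning library's implementation: it omits features such as improvement thresholds and cooldown periods. The counting convention (tolerate `patience` stalled epochs, then reduce on the next one) and the starting learning rate of 1e-3 are assumptions; the factor of 0.1 and patience of 10 come from the text.

```python
class PlateauScheduler:
    """Cut the learning rate when the validation loss stops improving."""

    def __init__(self, lr, factor=0.1, patience=10):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the current rate."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        # Reduce only once the stalled-epoch count exceeds the patience.
        if self.bad_epochs > self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr

# A validation loss that never improves after the first epoch.
scheduler = PlateauScheduler(lr=1e-3)
history = [scheduler.step(1.0) for _ in range(12)]
```

In this run the first epoch sets the best loss, the next ten stalled epochs are tolerated, and the rate drops from 1e-3 to 1e-4 on the twelfth call.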

Model performance is demonstrably affected by the Beta parameter within the Jeans Anisotropic Modelling (JAM) code. This parameter functions as a constraint during the sampling of physical solutions to the Jeans equation, which describes the distribution of stellar velocities. Higher Beta values restrict the solution space, potentially leading to underestimation of dynamical parameters if the true solution lies outside the constrained region. Conversely, lower Beta values allow for a wider range of solutions, but may introduce instability or non-physical results. Therefore, careful calibration of the Beta parameter is crucial for achieving accurate dynamical inference and minimizing systematic errors in the derived parameters.

The training regimen, employing the Adam optimizer with a Mean Squared Error loss function and incorporating ReduceLROnPlateau for learning rate adjustment, facilitates accurate inference of dynamical parameters from observational data. This process minimizes the discrepancy between predicted and true values, allowing the Multi-Layer Perceptron to effectively model the complex relationship between observed light characteristics and underlying stellar dynamics. Successful training, validated by minimization of the loss function on a held-out dataset, confirms the model’s ability to generalize and accurately predict dynamical states from new observations, contingent on appropriate parameter settings like the Beta parameter within the Jeans equation solver.

Beyond the Horizon: A New Era of Galactic Insight

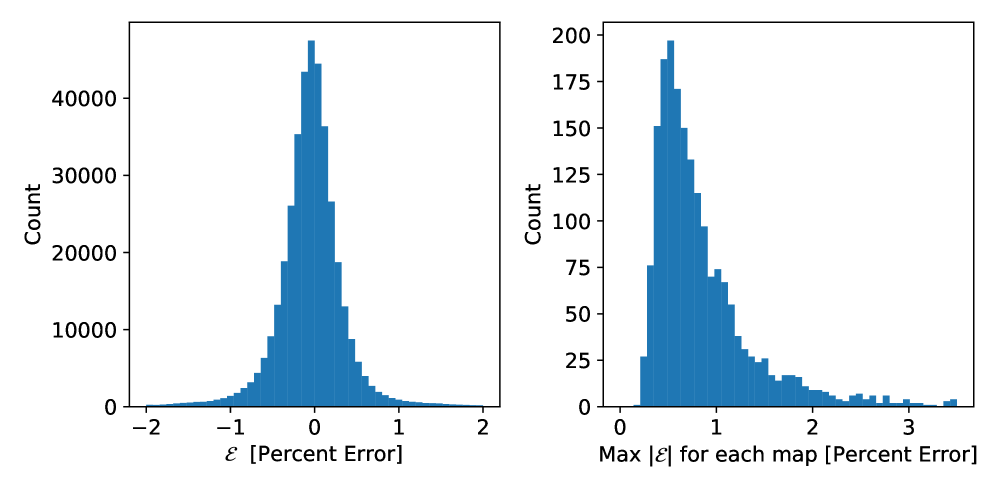

The trained model reconstructs second moment maps, a critical step in understanding galactic dynamics, with high fidelity. Evaluations report errors of 2 to 6 kilometers per second, which corresponds to a relative error below about 2 to 3 percent across the reconstructed maps. This level of precision signifies a substantial improvement over existing methodologies and allows for detailed analysis of galactic motion. The model’s ability to accurately capture these velocity dispersions is fundamental for deriving key properties of galaxies, such as mass distribution and rotation curves, and ultimately contributes to a more nuanced understanding of galactic evolution.

The newly developed methodology achieves a remarkable acceleration in dynamical modeling, completing inferences in just 0.3 milliseconds. This represents an approximately 300-fold increase in speed compared to established techniques, a leap enabled by a data-driven approach that bypasses computationally intensive simulations. Such efficiency dramatically lowers the barrier to entry for large-scale galactic studies, facilitating analyses previously constrained by processing time. The near-instantaneous reconstruction of dynamical maps unlocks possibilities for real-time data assimilation and opens avenues for exploring vast cosmological datasets with unprecedented speed and detail.

The reconstructed velocity maps demonstrate a remarkable level of fidelity, with the maximum error remaining below 2% for a substantial 90% of cases. This high degree of accuracy indicates the model’s robust performance across a diverse range of galactic structures and observational conditions. Such precision is critical for reliable dynamical modeling, allowing researchers to confidently derive key galactic properties and test theoretical predictions. The consistently low error rate suggests the model effectively captures the complex relationship between observed light distributions and underlying stellar motions, representing a significant improvement over existing techniques and opening new avenues for large-scale galactic studies.
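A statistic of this kind could be computed as in the sketch below. The article does not spell out how the maximum error is defined, so the per-pixel relative error used here is an assumption, and the two tiny "maps" are made-up numbers for illustration rather than results from the paper.

```python
def max_relative_error(true_map, pred_map):
    """Largest per-pixel |pred - true| / |true| over a flattened map."""
    return max(abs(p - t) / abs(t) for t, p in zip(true_map, pred_map))

def fraction_below(errors, threshold=0.02):
    """Share of maps whose maximum error is under the threshold."""
    return sum(e < threshold for e in errors) / len(errors)

# Made-up second-moment values in km/s (hypothetical, flattened maps).
true_maps = [[200.0, 180.0, 150.0], [120.0, 100.0, 90.0]]
pred_maps = [[202.0, 181.0, 149.0], [125.0, 101.0, 90.0]]

errors = [max_relative_error(t, p) for t, p in zip(true_maps, pred_maps)]
share = fraction_below(errors)
```

Here the first map has a worst-case error of 1 percent and the second exceeds 4 percent, so half of this toy sample falls under the 2 percent threshold. Taking the maximum over pixels is a strict criterion: a single badly reconstructed pixel is enough to fail a map.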

Traditional methods of inferring galactic dynamics often rely on simplifying assumptions about stellar populations and orbital structures, introducing potential biases into the resulting models. This research circumvents these limitations by employing a data-driven approach that directly learns the complex relationship between observed light distributions and underlying dynamical states. Instead of imposing pre-defined models, the system infers dynamics directly from data, effectively minimizing the influence of human-defined priors and allowing for a more objective representation of galactic motion. This capability is particularly valuable when studying galaxies with unusual or complex dynamics, where conventional methods may struggle to provide accurate or reliable results, ultimately leading to more trustworthy large-scale galactic studies.

The development of this data-driven approach signifies a substantial leap forward in galactic dynamics research. Previously hampered by computationally intensive methods and inherent biases in modeling assumptions, large-scale studies of galaxy evolution and structure are now becoming increasingly feasible. This advancement allows researchers to process and analyze vast datasets with unprecedented speed and accuracy, ultimately leading to more robust inferences about the underlying physical processes governing galaxies. The ability to efficiently reconstruct dynamical maps opens avenues for exploring a wider range of galactic phenomena and testing theoretical predictions with greater precision, promising a new era of discovery in astrophysics.

The application of neural networks to dynamical modelling, as detailed in this work, necessitates a cautious interpretation of results. The study demonstrates a method for rapidly approximating solutions to the Jeans equation, yet any predictive power remains tethered to the stability and accuracy of the training data. As Galileo Galilei observed, “You cannot teach a man anything; you can only help him discover it himself.” Similarly, these networks do not reveal galactic dynamics, but rather facilitate the discovery of patterns already present within the observational data. The inherent limitations of any model, even one accelerated by machine learning, echo this sentiment; a reliance on numerical methods demands constant validation against established theoretical frameworks and observational evidence.

What Lies Beyond the Horizon?

This work offers a faster map, but a map is not the territory. The acceleration of dynamical modelling through machine learning is, in itself, unremarkable; any tool that reduces calculation time is embraced. More telling is the subtle shift in perspective. Traditional methods, rooted in the Jeans equation and Multi-Gaussian Expansion, sought precise solutions – a comforting illusion of complete knowledge. The neural network, however, admits approximation, a graceful acceptance of inherent uncertainty. Any hypothesis about the distribution of mass in a galaxy, after all, is merely a shadow play on the event horizon of our understanding.

The true challenge now lies not in refining the algorithms, but in confronting the limitations of the data. Larger surveys will offer more pixels, but those pixels still represent only what light allows. The dark matter problem, predictably, remains. A faster calculation does not reveal what is not observed. The field must learn to interrogate the absence of signal as rigorously as the presence.

Perhaps the next frontier is not greater precision, but a radical reimagining of the questions. Black holes teach patience and humility; they accept neither haste nor noise. Dynamical modelling, like all science, should embrace that lesson. It is a worthwhile endeavour, provided it remembers its place – a fleeting attempt to chart the infinite.

Original article: https://arxiv.org/pdf/2602.17371.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 20:58