Author: Denis Avetisyan

A new neural sketch, Crane, efficiently distills the essential information from continuously evolving graph data, offering a significant leap forward in stream summarization.

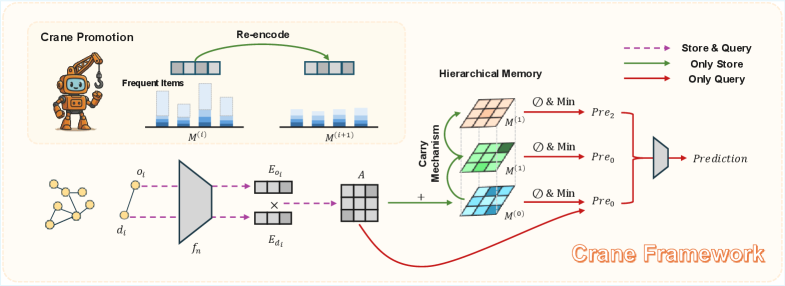

Crane utilizes a hierarchical memory architecture to accurately estimate the frequency of nodes in graph streams, enabling scalable and precise data summarization with automatic memory expansion.

Accurately summarizing rapidly evolving graph streams (sequences of edges representing dynamic relationships) is a significant challenge when computational resources are limited. To address this, the paper "Crane: An Accurate and Scalable Neural Sketch for Graph Stream Summarization" introduces a hierarchical neural network architecture that separates frequent and infrequent items across memory layers. This design minimizes interference between items and enables more precise frequency estimation, achieving roughly a 10x reduction in error compared to state-of-the-art methods. Can this approach unlock new possibilities for real-time analysis in domains like network monitoring, fraud detection, and cybersecurity?

The Evolving Landscape of Dynamic Graph Summarization

Modern networks – from social media interactions and financial transactions to transportation systems and the Internet of Things – are increasingly represented as dynamic graphs, where nodes represent entities and edges their relationships, all evolving over time. This representation introduces a significant computational challenge: processing rapidly changing graph structures is inherently expensive. Each modification – an added edge, a removed node, a shifted weight – can necessitate recalculations across large parts of the network, quickly overwhelming available resources. Traditional graph processing techniques, designed for static datasets, struggle to keep pace with the velocity and volume of updates in these dynamic environments, making real-time analysis and decision-making exceedingly difficult without new ways to manage the computational burden.

The escalating volume and velocity of modern graph data render conventional summarization techniques increasingly impractical. Maintaining complete snapshots of a dynamic graph – recording every node and edge at each time step – quickly becomes computationally prohibitive, demanding vast storage resources and hindering timely analysis. Exact algorithms guarantee precision, but their time and memory costs grow with the size of the graph and soon exceed what a streaming setting can afford. This limitation is particularly acute in real-time applications – such as fraud detection or social network monitoring – where decisions must be made swiftly based on the most current graph structure. Consequently, efficient, approximate summarization methods that distill essential graph information without sacrificing critical analytical insights have become essential for handling large-scale, rapidly evolving networks.

The relentless growth of network data demands innovative approaches to graph analysis, and effective summarization stands as a critical solution for managing streaming graph data. As graphs evolve, retaining complete historical information quickly becomes computationally prohibitive, yet discarding data risks losing vital analytical accuracy. Summarization techniques address this challenge by intelligently reducing graph complexity – often through node or edge aggregation, or structural simplification – while preserving essential patterns and relationships. This allows for real-time insights and scalable analysis, enabling applications like fraud detection, social network monitoring, and dynamic recommendation systems to operate efficiently on ever-changing data streams. The key lies in balancing compression rates with the retention of information crucial for specific analytical tasks, ensuring that summaries remain representative of the underlying graph’s behavior and structure.

Neural Sketching: A Foundation for Efficient Data Reduction

Neural sketching uses neural network architectures to perform lossy compression of continuous data streams, enabling efficient summarization and analysis. These methods map incoming data (such as network traffic, sensor readings, or time-series data) into a significantly lower-dimensional representation while retaining enough information for subsequent tasks like anomaly detection or trend identification. Unlike traditional sketching techniques, which rely on fixed hash functions, neural sketches learn these mappings through training, adapting to the specific characteristics of the data stream and optimizing for reconstruction accuracy or task performance. This adaptive capability allows for better compression rates and less information loss than hand-designed approaches, facilitating real-time data processing and long-term storage of summarized data.
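For contrast with learned mappings, the fixed-hash baseline can be sketched in a few lines. The following is a minimal Count-Min sketch over edge keys, written for illustration only; the class layout, the `width` and `depth` defaults, and the `"src->dst"` key format are assumptions, not details taken from the Crane paper.

```python
import hashlib


class CountMinSketch:
    """Fixed-hash baseline: `depth` rows of `width` counters (illustrative sizes)."""

    def __init__(self, width: int = 1024, depth: int = 4):
        self.width = width
        self.depth = depth
        self.rows = [[0] * width for _ in range(depth)]

    def _index(self, key: str, row: int) -> int:
        # Salt the key with the row number so each row behaves like a different hash.
        digest = hashlib.blake2b(f"{row}:{key}".encode(), digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.width

    def update(self, src: str, dst: str, weight: int = 1) -> None:
        key = f"{src}->{dst}"  # assumed edge encoding
        for r in range(self.depth):
            self.rows[r][self._index(key, r)] += weight

    def query(self, src: str, dst: str) -> int:
        key = f"{src}->{dst}"
        # Collisions can only inflate a counter, so the minimum is the tightest estimate.
        return min(self.rows[r][self._index(key, r)] for r in range(self.depth))


cms = CountMinSketch()
for _ in range(5):
    cms.update("a", "b")
cms.update("a", "c", weight=2)
estimate_ab = cms.query("a", "b")  # never below the true count of 5
estimate_ac = cms.query("a", "c")  # never below the true count of 2
```

The hash functions here are fixed in advance and know nothing about the stream, which is the property a neural sketch tries to improve on by learning where and how to store each item.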

Neural sketching techniques achieve efficiency gains by representing data with significantly fewer parameters than traditional methods. This compression is accomplished through dimensionality reduction, where high-dimensional input data is mapped to a lower-dimensional “sketch” while retaining salient information. The resulting compact representations directly translate to reduced storage needs, as fewer values need to be stored. Furthermore, processing times are improved due to the decreased computational load associated with operating on smaller data structures. Information loss is minimized through the use of learned mappings, typically achieved with neural networks, which are trained to preserve the most relevant features for specific downstream tasks, ensuring that the sketch remains a useful proxy for the original data.

Dimensionality reduction via neural sketching operates by transforming input data from a high-dimensional space into a lower-dimensional representation while prioritizing the retention of information critical for subsequent analysis or application. This is achieved through learned mappings, typically implemented with neural networks, which identify and preserve salient features while discarding redundant or less important data points. The resulting lower-dimensional sketch aims to approximate the essential characteristics of the original data, enabling efficient storage, faster processing, and reduced computational costs for downstream tasks such as classification, clustering, or anomaly detection. The effectiveness of this approach is directly tied to the network’s ability to accurately identify and represent features relevant to the specific downstream application.

Crane: A Hierarchical Approach to Dynamic Graph Summarization

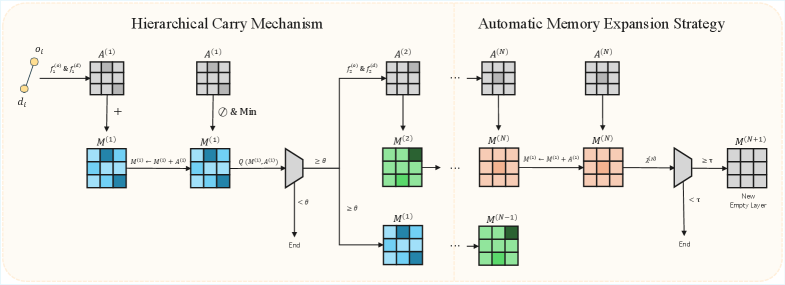

Crane employs a hierarchical memory organization to enhance summarization performance by distinguishing between frequently occurring and infrequently occurring items within the graph stream. This structure consists of multiple levels of memory, with higher levels dedicated to storing representations of more frequent items and lower levels handling the less common ones. By prioritizing the storage and processing of frequent items, which contribute disproportionately to overall graph structure and importance, Crane reduces computational overhead and improves the accuracy of its summaries. This separation also allows for efficient handling of evolving data streams, as the memory allocated to frequent items can be adjusted dynamically without significantly impacting the storage of infrequent elements.

Crane’s hierarchical memory structure exploits the Zipf-like distribution of item frequencies commonly observed in graph streams: a small number of items occur with high frequency, while the vast majority occur infrequently. The hierarchical carry mechanism capitalizes on this by maintaining separate memory components for frequent and infrequent items. Frequent items are tracked with high precision in a dedicated, smaller memory, while infrequent items are aggregated and summarized to limit memory usage. This separation lets Crane prioritize the most impactful elements of the graph stream, reducing computational overhead and improving summarization accuracy compared to methods that track all items uniformly.
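The idea of carrying heavy items upward can be illustrated with a deliberately simple, non-neural analogy. The sketch below is not Crane's mechanism, which is learned; it is a hand-written two-layer counter in which small low-layer counters overflow into a shared upper layer. The class name, the layer widths, and `low_capacity` are all hypothetical.

```python
import hashlib


def bucket(key: str, width: int) -> int:
    """Deterministically hash `key` into one of `width` buckets."""
    digest = hashlib.blake2b(key.encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big") % width


class TwoLayerCarryCounter:
    """Non-neural analogy: low-layer counters carry overflow to an upper layer."""

    def __init__(self, low_width: int = 256, high_width: int = 64, low_capacity: int = 16):
        self.low = [0] * low_width
        self.carried = [False] * low_width  # has this low counter ever overflowed?
        self.high = [0] * high_width
        self.low_capacity = low_capacity    # hypothetical per-counter limit

    def update(self, key: str, weight: int = 1) -> None:
        i = bucket(key, len(self.low))
        # Whole multiples of the capacity move up; the remainder stays below.
        carries, self.low[i] = divmod(self.low[i] + weight, self.low_capacity)
        if carries:
            self.carried[i] = True
            self.high[i % len(self.high)] += carries  # parent chosen by low index

    def query(self, key: str) -> int:
        i = bucket(key, len(self.low))
        estimate = self.low[i]
        if self.carried[i]:
            # Only items whose counter overflowed read the upper layer.
            estimate += self.high[i % len(self.high)] * self.low_capacity
        return estimate


counter = TwoLayerCarryCounter()
for _ in range(100):
    counter.update("hot")
hot_estimate = counter.query("hot")    # 100: 4 left below, 6 carries of 16 above
for _ in range(3):
    counter.update("cold")
cold_estimate = counter.query("cold")  # at least 3; larger only if it collides with "hot"
```

Under a Zipf-like workload most keys never trigger a carry, so the upper layer is touched only by the few heavy items, which is the separation the paragraph above describes.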

Crane employs a NeuralEncoder to generate vector embeddings for each graph element, capturing relationships beyond simple co-occurrence. This encoder, trained end-to-end with the summarization objective, learns distributed representations that encode information about nodes and edges. The resulting embeddings allow Crane to differentiate between elements with similar structural roles but distinct characteristics, improving the accuracy of the hierarchical summarization process. These learned representations matter most in dynamic graph streams, where the importance of an element can change over time, because they let Crane move beyond purely frequency-based signals.

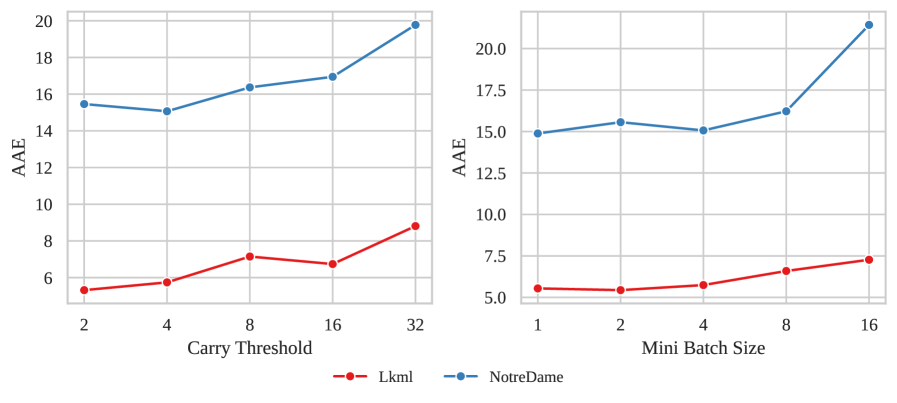

Crane’s scalability and adaptability to dynamic data streams are achieved through the implementation of mini-batch processing and automatic memory expansion. Mini-batch processing allows the system to process incoming graph data in discrete batches rather than individual elements, significantly reducing computational overhead and improving throughput. Furthermore, Crane dynamically adjusts its memory footprint using automatic memory expansion; as data rates increase or the diversity of observed graph elements grows, the system automatically allocates additional memory to maintain summarization accuracy and prevent performance degradation. This ensures that Crane can effectively handle varying data velocities and maintain consistent performance without requiring manual intervention or pre-defined memory limits.
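As a rough illustration of how these two ideas fit together, the sketch below groups a stream into mini-batches and grows a toy memory when it becomes too full. It is a sketch under stated assumptions, not Crane's implementation: `ExpandingMemory`, `slots_per_block`, and the `max_load` trigger are hypothetical, and exact counts in a dictionary stand in for what would be a lossy learned memory.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List, Tuple

Edge = Tuple[str, str, int]  # (source, destination, weight)


def mini_batches(stream: Iterable[Edge], batch_size: int) -> Iterator[List[Edge]]:
    """Group a stream into lists of at most `batch_size` edges."""
    it = iter(stream)
    while batch := list(islice(it, batch_size)):
        yield batch


class ExpandingMemory:
    """Toy stand-in for a sketch whose memory grows with the stream."""

    def __init__(self, slots_per_block: int = 4, max_load: float = 0.75):
        self.slots_per_block = slots_per_block
        self.max_load = max_load  # hypothetical expansion trigger
        self.blocks = 1
        # Exact counts keep the example short; a real sketch would be lossy.
        self.counts: Dict[Tuple[str, str], int] = {}

    def capacity(self) -> int:
        return self.blocks * self.slots_per_block

    def ingest(self, batch: List[Edge]) -> None:
        for src, dst, weight in batch:
            self.counts[(src, dst)] = self.counts.get((src, dst), 0) + weight
        # Check the load once per batch and add blocks until it is acceptable.
        while len(self.counts) > self.max_load * self.capacity():
            self.blocks += 1


stream = [(f"n{i}", f"n{i + 1}", 1) for i in range(10)]  # 10 distinct edges
memory = ExpandingMemory()
batch_sizes = []
for batch in mini_batches(stream, batch_size=4):
    batch_sizes.append(len(batch))
    memory.ingest(batch)
# batch_sizes == [4, 4, 2]; memory.blocks == 4 after three expansions
```

Checking the load once per batch rather than once per edge is one reason batching and expansion pair naturally: the bookkeeping cost is amortized over the whole batch.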

Demonstrating Crane’s Impact on Frequency Estimation

Crane’s accuracy in estimating the frequency of items in dynamic graphs is assessed with two metrics, AveragedRelativeError and MeanAbsoluteError. Both quantify the gap between Crane’s estimates and the true frequencies. AveragedRelativeError averages each item’s absolute error divided by its true frequency, giving a normalized view of error across items of very different frequencies, while MeanAbsoluteError averages the magnitude of the errors irrespective of their direction. Together, these metrics allow Crane’s performance to be compared against existing techniques on rapidly evolving graph streams.
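Both metrics are simple to compute once true and estimated frequencies are available. The functions below use the standard definitions and assume frequencies are stored in dictionaries keyed by item; the function names and the toy data are illustrative, not taken from the paper.

```python
from typing import Dict, Hashable


def average_relative_error(true: Dict[Hashable, float], est: Dict[Hashable, float]) -> float:
    """Mean of |estimate - truth| / truth over all items with a true frequency."""
    return sum(abs(est.get(k, 0) - f) / f for k, f in true.items()) / len(true)


def mean_absolute_error(true: Dict[Hashable, float], est: Dict[Hashable, float]) -> float:
    """Mean of |estimate - truth| over all items with a true frequency."""
    return sum(abs(est.get(k, 0) - f) for k, f in true.items()) / len(true)


# Toy data: one frequent edge and two rare ones.
true_freq = {("a", "b"): 10, ("a", "c"): 2, ("b", "c"): 1}
est_freq = {("a", "b"): 12, ("a", "c"): 2, ("b", "c"): 3}

are = average_relative_error(true_freq, est_freq)  # (0.2 + 0.0 + 2.0) / 3
mae = mean_absolute_error(true_freq, est_freq)     # (2 + 0 + 2) / 3
```

In this toy case the same absolute error of 2 contributes 0.2 to the relative error on the frequent edge and 2.0 on the rare one, which is why the relative metric is especially sensitive to mistakes on infrequent items.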

The efficacy of Crane’s neural network hinges significantly on the carefully selected LossFunction, which serves as the guiding force during the training process. This function quantifies the discrepancy between Crane’s frequency estimations and the actual ground truth, providing a measure of error that the network actively seeks to minimize. A well-defined LossFunction not only directs the network towards accurate estimations but also influences the speed and stability of the learning process. Through iterative adjustments of the network’s parameters based on the feedback from the LossFunction, Crane progressively refines its ability to capture the evolving dynamics of the graph stream, ultimately leading to substantial improvements in frequency estimation accuracy and a demonstrable reduction in error metrics like AveragedRelativeError and MeanAbsoluteError.

Crane’s performance benefits significantly from the inclusion of NodeFlux as a key feature, allowing the system to more effectively model the evolving characteristics of the graph stream. NodeFlux quantifies the change in a node’s connectivity over time, providing crucial information about the dynamic nature of the graph. By incorporating this measure of temporal change, Crane moves beyond static graph properties and gains a nuanced understanding of how the graph’s structure is shifting. This is particularly important in real-world graph streams where edges and nodes are constantly appearing and disappearing. Experimental results demonstrate that leveraging NodeFlux dramatically improves estimation accuracy, with a 3162x improvement observed on the NotreDame dataset – a testament to its ability to capture subtle yet impactful shifts in graph topology and enhance the precision of frequency estimations.

Evaluations on established benchmark graph stream datasets reveal that Crane delivers state-of-the-art performance in frequency estimation. The system consistently achieves an AveragedRelativeError of less than 1.0, signifying a substantial advancement over current methodologies. This represents more than a ten-fold reduction in error when contrasted with techniques like CountMinSketch and GSS, demonstrating Crane’s superior capacity to accurately track evolving graph dynamics and provide reliable frequency counts within streaming data environments. The consistent low error rate highlights the effectiveness of Crane’s architecture and training process in minimizing estimation inaccuracies.

Evaluations on the NotreDame dataset reveal Crane’s substantial advancement in frequency estimation accuracy. The system achieves an AveragedRelativeError of less than 2.5, demonstrably exceeding the performance of existing state-of-the-art methods by a factor of over ten. This improvement is further amplified by the incorporation of NodeFlux as a key feature, which resulted in a remarkable 3162-fold increase in estimation accuracy specifically on the NotreDame dataset, highlighting its effectiveness in capturing complex graph dynamics and solidifying Crane’s position as a leading solution for dynamic graph analysis.

Crane demonstrates substantial processing capability, achieving a throughput of 0.2 million operations per second (Mops) when implemented on NVIDIA’s CUDA platform. This performance level is indicative of the system’s efficiency in handling the computational demands of dynamic graph analysis. The utilization of CUDA, a parallel computing platform and programming model, allows Crane to leverage the massive parallelism of GPUs, significantly accelerating the frequency estimation process. This throughput enables Crane to process graph streams at a rate suitable for real-time applications and large-scale datasets, positioning it as a viable solution for scenarios requiring rapid and accurate graph analytics.

Looking Ahead: The Future of Neural Graph Sketching

Crane is not the only neural sketch under active study: related methods such as Mayfly and LegoSketch also target the scalability and adaptability limits of graph and stream summarization with architectures of their own. Mayfly, for instance, is described as using a probabilistic framework to capture uncertainty in the sketching process, while LegoSketch is described as taking a modular approach, assembling summaries from pre-defined building blocks. Together, these lines of work aim to handle significantly larger and more complex datasets, enabling more accurate and insightful graph analyses across a wide range of applications.

Emerging neural graph sketching methods, such as Mayfly and LegoSketch, represent a significant step towards overcoming limitations inherent in earlier approaches. These techniques differentiate themselves through the implementation of innovative neural architectures – including attention mechanisms and graph neural networks – which allow for more efficient processing of increasingly complex datasets. This architectural focus directly translates to improved scalability, enabling the analysis of graphs with substantially more nodes and edges than previously possible. Furthermore, these methods exhibit greater adaptability, dynamically adjusting to variations in graph structure and node attributes. Critically, the refined architectures also contribute to enhanced accuracy in graph summarization, yielding more faithful and informative sketches that preserve crucial network characteristics and facilitate deeper insights.

Advancing neural graph sketching necessitates a shift towards adaptive summarization techniques, allowing systems to tailor the level of detail presented to the user or application. Current methods often provide a static, one-size-fits-all summary, which can be inefficient or unhelpful depending on the context. Future research will likely explore algorithms that dynamically assess the information needs – considering factors such as the task at hand, the user’s expertise, or the available computational resources – and subsequently adjust the granularity of the sketched graph accordingly. This means a system could, for example, present a high-level overview for a quick scan, then progressively reveal more intricate details upon request, or automatically prioritize key connections relevant to a specific analytical goal. Such adaptability promises not only to enhance the usability of neural graph sketching but also to unlock its potential in resource-constrained environments and complex, real-world applications.

The potential of neural graph sketching extends far beyond its initial applications, offering a transformative approach to understanding complex, real-world data. Applying these techniques to diverse data streams – such as the intricate connections within social networks, the continuous flow of information from sensor networks, and the dynamic patterns of financial transactions – promises to reveal previously hidden relationships and predictive insights. For instance, anomaly detection in financial data could be significantly improved by sketching transaction networks to identify unusual patterns, while understanding information diffusion in social networks becomes more tractable through summarized representations of user connections. This adaptability suggests that neural graph sketching isn’t merely a visualization tool, but a powerful analytical engine capable of driving innovation across a multitude of fields, from public health monitoring and urban planning to cybersecurity and personalized medicine.

The design of Crane, as detailed in this work, echoes a fundamental principle of systemic integrity. The separation of frequent and infrequent items across hierarchical layers isn’t merely a technical optimization; it’s an architectural decision reflecting the interconnectedness of the whole system. This approach acknowledges that altering one component (in this case, how frequency estimation is handled) requires considering its impact on the entire graph stream summarization process. As a line attributed to Henri Poincaré has it, “It is through science that we arrive at truth, but it is through art that we make it understood.” Crane illustrates this well: the art lies in translating complex algorithmic concepts into an elegantly structured system that delivers both accuracy and scalability. The method’s automatic memory expansion is particularly notable in this regard, allowing Crane to adapt and maintain equilibrium as the data stream evolves.

The Horizon Beckons

The elegance of Crane lies in its deliberate stratification – a recognition that not all information deserves equal attention. However, the question of how to optimally delineate frequent from infrequent items remains. Current approaches rely on heuristics; future work might explore adaptive layer allocation, where the hierarchy itself evolves based on stream characteristics. A truly resilient system shouldn’t simply estimate frequency, but understand the underlying generative process, however imperfectly.

Scalability, as this work demonstrates, isn’t a matter of throwing resources at a problem. It’s about structural integrity. But the ecosystem of graph streams is rarely static. The arrival of novel nodes, or sudden shifts in edge weights, can disrupt even the most carefully balanced hierarchy. Research should focus on graceful degradation – systems that acknowledge their limitations and offer meaningful summaries even under duress. Automatic memory expansion is a step, but a system that learns its own tolerance for error would be far more compelling.

Ultimately, the value of any summarization technique isn’t just accuracy, but interpretability. A perfect sketch that obscures the underlying data is a failure. The next frontier isn’t simply faster estimation, but the development of sketches that reveal, rather than conceal, the essential structure of the graph. It is a subtle distinction, but one that separates a tool from a mirror.

Original article: https://arxiv.org/pdf/2602.15360.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 04:37