Author: Denis Avetisyan

New research explores how deep learning can improve bird strike prevention by automatically identifying species and predicting flock behavior near airports.

This review compares deep learning models for avian and aircraft classification, aiming to enhance the effectiveness of bird strike prevention systems in aviation.

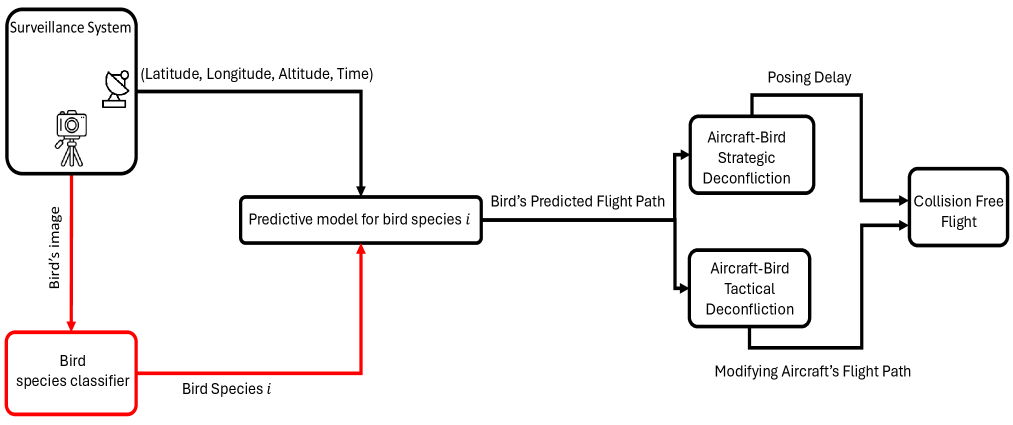

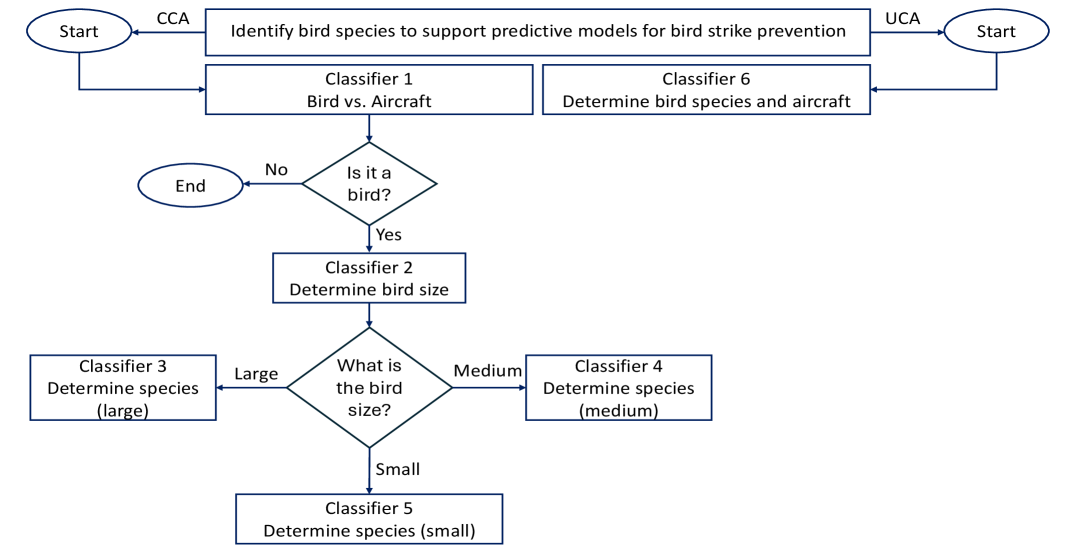

Despite advances in avian radar, current bird strike prevention strategies lack the nuanced species identification crucial for accurate flight path prediction. This limitation motivates the research presented in ‘Deep Learning Based Multi-Level Classification for Aviation Safety’, which proposes an image-based framework leveraging Convolutional Neural Networks to classify bird species, flock type, and size for improved risk assessment. The system demonstrates the potential to move beyond simple detection, offering supplementary data on collective flight behavior and potential impact severity. Could this multi-level classification approach fundamentally reshape proactive safety measures in aviation and significantly reduce bird strike incidents?

Unveiling the Patterns of Avian Hazard

Bird strikes, while seemingly infrequent, pose a substantial and escalating threat to aviation safety worldwide. These collisions between aircraft and birds result in over $400 million in damages annually to civil aviation, and while catastrophic events are rare, they do occur – with potentially devastating consequences. The vulnerability extends across all phases of flight, from takeoff and landing – periods of heightened bird activity near airports – to cruising altitudes where migratory patterns can intersect flight paths. Beyond direct damage to engines, wings, and windshields, bird strikes can necessitate emergency landings, disrupt flight schedules, and contribute to significant economic losses for airlines. Understanding the frequency, distribution, and characteristics of these events is therefore paramount to developing effective mitigation strategies and safeguarding air travel.

Current strategies for preventing bird strikes largely depend on post-incident reporting and reactive adjustments to flight paths or schedules. These methods often prove insufficient because they address the problem only after a hazard has already emerged. Existing radar systems, while capable of detecting airborne objects, frequently struggle to distinguish birds from aircraft, leading to false alarms or delayed responses. Visual observation by pilots, though valuable, is limited by visibility and typically works only once birds are already close. Consequently, the aviation industry needs predictive technologies that anticipate bird movements and proactively mitigate collision risk, rather than simply responding to events as they unfold.

Truly effective bird strike mitigation therefore depends on proactive intervention, which in turn demands precise, real-time data on avian activity. Current preventative measures often rely on broad seasonal adjustments or generalized hazard warnings, which lack the specificity needed to address the distinct risks posed by different species and flock behaviors. Systems under development combine advanced sensors, including radar, lidar, and acoustic monitoring, with machine learning algorithms that identify bird species, estimate flock size and trajectory, and predict potential collision paths. This granular picture enables targeted responses, such as localized airspace adjustments, altered flight paths, or bird dispersal deployed only when and where it is most needed, ultimately lowering the probability of damaging and costly bird strikes and enhancing overall aviation safety.

Image-Based Classification: A Proactive Shield

Image-based classification utilizes machine learning algorithms to analyze visual data, offering a preventative strategy for bird strikes. This approach moves beyond reactive measures by identifying birds in proximity to aircraft operational areas – such as runways and flight paths – before a potential collision occurs. By processing images captured from various sources, including cameras and radar systems, the system can classify bird species and estimate their position and trajectory. This information enables proactive mitigation strategies, like temporary operational adjustments or bird dispersal techniques, ultimately reducing the risk of bird strikes and improving aviation safety. The core functionality relies on the ability of these systems to automatically detect, identify, and track birds within the visual data stream.
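To make the "position and trajectory" step concrete, here is a minimal sketch, assuming two time-stamped detections of the same bird in a local airport coordinate frame and a constant-velocity motion model. The `Detection` record, `time_to_zone` helper and the rectangular runway zone are hypothetical illustrations, not the paper's method; real systems would use proper tracking filters and richer motion models.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical record for one classified bird detection."""
    label: str  # classifier output, e.g. a species or size class
    t: float    # timestamp in seconds
    x: float    # position in metres, in an assumed local airport frame
    y: float


def velocity(a: Detection, b: Detection) -> tuple[float, float]:
    """Estimate velocity from two time-ordered detections of the same bird."""
    dt = b.t - a.t
    if dt <= 0:
        raise ValueError("detections must be in increasing time order")
    return (b.x - a.x) / dt, (b.y - a.y) / dt


def predict_position(a: Detection, b: Detection, ahead: float) -> tuple[float, float]:
    """Constant-velocity extrapolation `ahead` seconds past the latest detection."""
    vx, vy = velocity(a, b)
    return b.x + vx * ahead, b.y + vy * ahead


def time_to_zone(a: Detection, b: Detection,
                 zone: tuple[float, float, float, float],
                 horizon: float, step: float = 1.0) -> float | None:
    """First look-ahead time (checked every `step` seconds) at which the
    predicted position lies inside an axis-aligned zone (xmin, ymin, xmax, ymax)."""
    xmin, ymin, xmax, ymax = zone
    k = 0
    while k * step <= horizon:
        px, py = predict_position(a, b, k * step)
        if xmin <= px <= xmax and ymin <= py <= ymax:
            return k * step
        k += 1
    return None


# Usage: a gull moving 10 m/s along x towards a hypothetical runway zone.
a = Detection("gull", t=0.0, x=0.0, y=0.0)
b = Detection("gull", t=1.0, x=10.0, y=0.0)
print(time_to_zone(a, b, zone=(50.0, -5.0, 60.0, 5.0), horizon=10.0))  # 4.0
```

A non-`None` result is the kind of signal that could trigger the operational adjustments or dispersal measures described above.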

Convolutional Neural Networks (CNNs) currently represent the leading methodology for image-based bird species identification because of their demonstrated accuracy and efficiency. These deep learning algorithms excel at processing visual data and extracting the features relevant for classification. In controlled testing environments, CNNs have reached overall accuracy of up to 96.25%, surpassing traditional image processing techniques and enabling proactive bird strike risk mitigation through automated species detection.
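The feature extraction a CNN performs can be illustrated with its three basic operations: convolution, a ReLU nonlinearity, and max pooling. The sketch below applies a single hand-written 2x2 vertical-edge kernel to a tiny grayscale image; it is a toy illustration of one layer, not the paper's network. In a trained CNN the kernel weights are learned, and many such layers are stacked.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (no padding, stride 1), as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            out[i][j] = s
    return out


def relu(fmap):
    """Element-wise max(0, v): keeps positive responses, zeroes the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A 5x5 image with a dark-to-bright vertical edge, and a kernel that responds to it.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]
print(max_pool(relu(conv2d(image, edge_kernel))))  # [[2.0, 0.0], [2.0, 0.0]]
```

Pooling keeps the strong edge response while halving spatial resolution. This trade-off is one reason very small objects, such as distant birds, are harder for CNNs to resolve.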

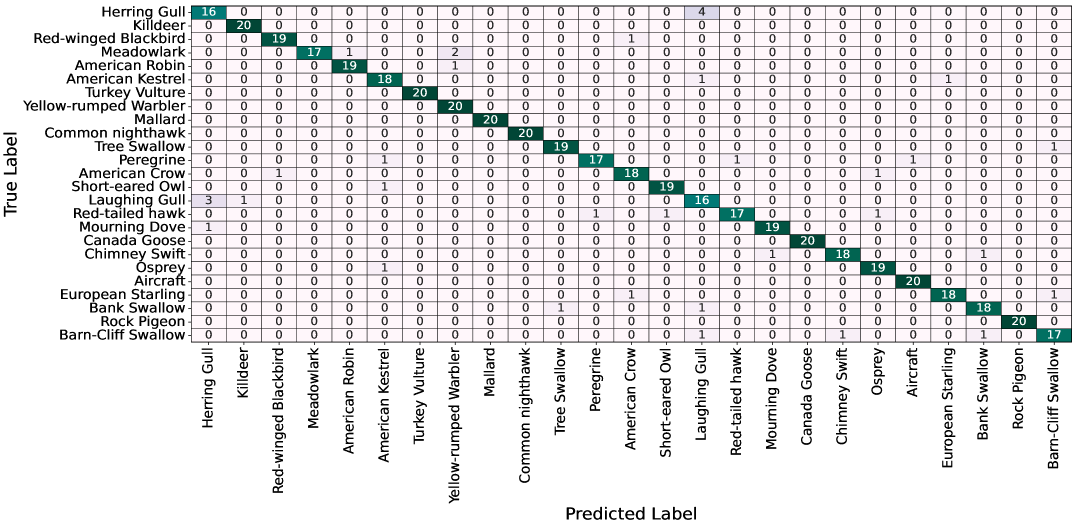

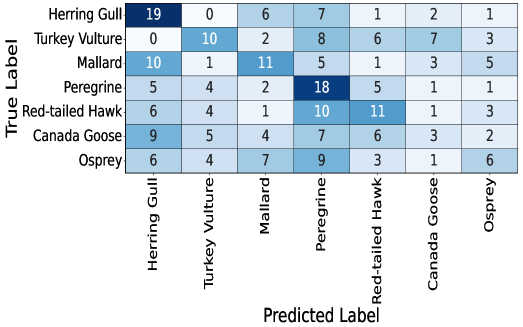

Classification accuracy in image-based bird strike prevention systems depends strongly on both image quality and model robustness. A ResNet-50V2 Convolutional Neural Network performs well across bird sizes, achieving 97.62% accuracy for large birds, 96.25% for medium birds, and 92.86% for small birds. Although the model is highly effective, accuracy falls as bird size decreases, which suggests that higher-resolution imagery and model optimization focused on smaller features are crucial for comprehensive detection.
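Per-size figures like these are per-class accuracies: the share of correctly classified samples within each true class. A minimal sketch of that computation follows. The labels are toy values, not data from the study. It also shows that overall accuracy is a sample-weighted average of the per-class values, so a single headline number depends on how many small, medium and large birds the test set contains.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Fraction of correctly classified samples within each true class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / totals[label] for label in totals}


def overall_accuracy(y_true, y_pred):
    """Fraction of all samples classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy labels for illustration only.
y_true = ["large", "large", "medium", "medium", "small", "small", "small", "small"]
y_pred = ["large", "large", "medium", "small", "small", "small", "medium", "small"]
print(per_class_accuracy(y_true, y_pred))  # {'large': 1.0, 'medium': 0.5, 'small': 0.75}
print(overall_accuracy(y_true, y_pred))    # 0.75
```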

Architectural Foundations for Precision

The ResNet architecture, specifically variations such as ResNet50 and ResNet101, functions as a foundational benchmark in comparative analyses of convolutional neural network (CNN) models designed for bird species classification. Its pre-trained weights, typically obtained from ImageNet, are utilized for transfer learning, enabling researchers to assess the incremental performance gains achieved by novel architectures or training methodologies. The ResNet architecture’s established performance and relatively standardized implementation facilitate objective comparison; improvements are measured against its baseline accuracy, precision, recall, and F1-score on standard bird species datasets like Caltech-UCSD Birds 200 (CUB-200). This comparative approach allows for a quantitative evaluation of the effectiveness of new models and techniques within the field.
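The comparison metrics named above can be computed per class from true and predicted labels and then macro-averaged so that every species counts equally. The sketch below uses toy labels; libraries such as scikit-learn provide equivalent, more complete implementations.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """One-vs-rest precision, recall and F1 for the class `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every label that appears."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(precision_recall_f1(y_true, y_pred, c)[2] for c in labels) / len(labels)


# Toy labels for illustration only.
y_true = ["gull", "gull", "goose", "goose"]
y_pred = ["gull", "goose", "goose", "goose"]
print(precision_recall_f1(y_true, y_pred, "gull"))  # precision 1.0, recall 0.5
print(round(macro_f1(y_true, y_pred), 4))           # 0.7333
```

Comparing a new architecture against a ResNet baseline then amounts to running both on the same test split and reporting these numbers side by side.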

Cascade and unified classification approaches represent differing strategies for bird species identification. Cascade methods employ a multi-stage pipeline, typically prioritizing coarse categorization followed by refinement in subsequent stages. Conversely, unified classification approaches perform direct identification in a single stage. Recent evaluations demonstrate the efficacy of both methods, with the Unified Classification Approach (UCA) achieving an accuracy of 92.80%. This result marginally surpasses the performance of the Cascade Classification Approach (CCA), indicating a potential advantage for single-stage identification in this specific application, although the difference in accuracy is minimal.
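The structural difference between the two approaches can be sketched in a few lines. The size-based hierarchy and the score values below are hypothetical and are not taken from the paper's models. The unified classifier picks the best label across all classes at once. The cascade first commits to a coarse group and then chooses only among that group's labels, so an early coarse decision constrains the final answer.

```python
from typing import Dict, List

Scores = Dict[str, float]


def unified_classify(fine_scores: Scores) -> str:
    """Single stage: return the highest-scoring fine-grained label directly."""
    return max(fine_scores, key=fine_scores.get)


def cascade_classify(coarse_scores: Scores, fine_scores: Scores,
                     hierarchy: Dict[str, List[str]]) -> str:
    """Two stages: commit to the best coarse group, then pick the best
    fine-grained label inside that group only."""
    group = max(coarse_scores, key=coarse_scores.get)
    return max(hierarchy[group], key=fine_scores.get)


# Hypothetical hierarchy and model scores, for illustration only.
hierarchy = {"large": ["goose", "heron"], "small": ["sparrow", "starling"]}
coarse = {"large": 0.6, "small": 0.4}
fine = {"goose": 0.30, "heron": 0.05, "sparrow": 0.25, "starling": 0.40}

print(unified_classify(fine))                     # starling
print(cascade_classify(coarse, fine, hierarchy))  # goose
```

The trade-off is that each cascade stage can specialize, but an error at the coarse stage cannot be corrected later. This may partly explain why a single-stage approach can edge ahead, although the source reports only a marginal difference.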

Data augmentation addresses limitations in training dataset size and variability by creating modified versions of existing samples. Techniques include geometric transformations such as rotations, flips, and crops; color space adjustments altering brightness, contrast, and saturation; and the application of random noise. These transformations artificially expand the dataset, exposing the model to a wider range of conditions and improving its ability to generalize to unseen data. This is particularly beneficial in scenarios with limited data or where variations in lighting, pose, or background significantly impact classification accuracy. By increasing the effective size and diversity of the training set, data augmentation reduces overfitting and enhances the robustness of bird species classification models.

Decoding the Dynamics of Avian Collective Behavior

Precise identification of flock size and formation – whether a tight column, a dispersed line, or another configuration – is fundamentally important for anticipating bird movements and mitigating potential hazards. Understanding these characteristics allows for proactive risk assessment, particularly concerning aviation safety, as large flocks can pose a significant threat to aircraft. Accurate classification enables the development of predictive models that forecast flock trajectories, providing crucial lead time for implementing avoidance strategies. Beyond aviation, this knowledge is valuable for ecological studies, informing conservation efforts by revealing patterns in bird behavior and migration, and helping to understand how flocks respond to environmental changes or disturbances. Consequently, advancements in automated flock analysis directly contribute to both safety and scientific understanding.

The analysis of bird flock behavior relies heavily on robust computer vision models, but acquiring sufficiently large and varied datasets of real-world flock imagery is a significant challenge. To address this, researchers are increasingly turning to synthetic image generation for both model training and rigorous evaluation. Realistic simulated flocks that vary in size, density, and formation provide a virtually limitless supply of labeled data. This approach not only overcomes the scarcity of real-world examples but also enables controlled experimentation: researchers can test model performance under diverse conditions and isolate the impact of individual flock characteristics. Models trained and validated on such synthetic data classify flock size and type with higher accuracy than conventional machine learning baselines, supporting better prediction of bird movements and mitigation of potential hazards.
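A minimal sketch of synthetic labeled-data generation follows. It renders each bird as a single pixel on a binary grid. It assumes that flight direction is "up" in the image, so a column (birds one behind another) is vertical and a line (birds side by side) is horizontal. The function names, grid size, spacing and jitter are hypothetical parameters. The study's actual generator is not described here and likely produces far richer images.

```python
import random
from typing import Dict, List, Optional, Tuple

Grid = List[List[int]]


def synth_flock(formation: str, n: int, size: int = 32, spacing: float = 2.0,
                jitter: float = 0.5,
                rng: Optional[random.Random] = None) -> Tuple[Grid, Dict[str, object]]:
    """Render n birds as pixels on a size x size grid; return grid and labels.

    Assumption: 'column' is vertical and 'line' is horizontal in the image.
    """
    if formation not in ("column", "line"):
        raise ValueError(f"unknown formation: {formation}")
    rng = rng or random.Random()
    grid = [[0] * size for _ in range(size)]
    centre = size / 2
    for k in range(n):
        offset = (k - (n - 1) / 2) * spacing  # evenly spaced about the centre
        dx, dy = (0.0, offset) if formation == "column" else (offset, 0.0)
        x = round(centre + dx + rng.gauss(0.0, jitter))  # positional jitter
        y = round(centre + dy + rng.gauss(0.0, jitter))
        if 0 <= x < size and 0 <= y < size:  # drop birds that fall off-frame
            grid[y][x] = 1
    return grid, {"type": formation, "size": n}


def make_dataset(count: int, max_birds: int = 10, seed: int = 0):
    """Generate `count` labeled images with random formation and flock size."""
    rng = random.Random(seed)
    return [synth_flock(rng.choice(["column", "line"]), rng.randint(2, max_birds), rng=rng)
            for _ in range(count)]


dataset = make_dataset(100)  # 100 (grid, labels) pairs, reproducible via the seed
```

Because each image's labels come from the generator itself, variables such as spacing or jitter can be swept to probe exactly where a classifier starts to fail.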

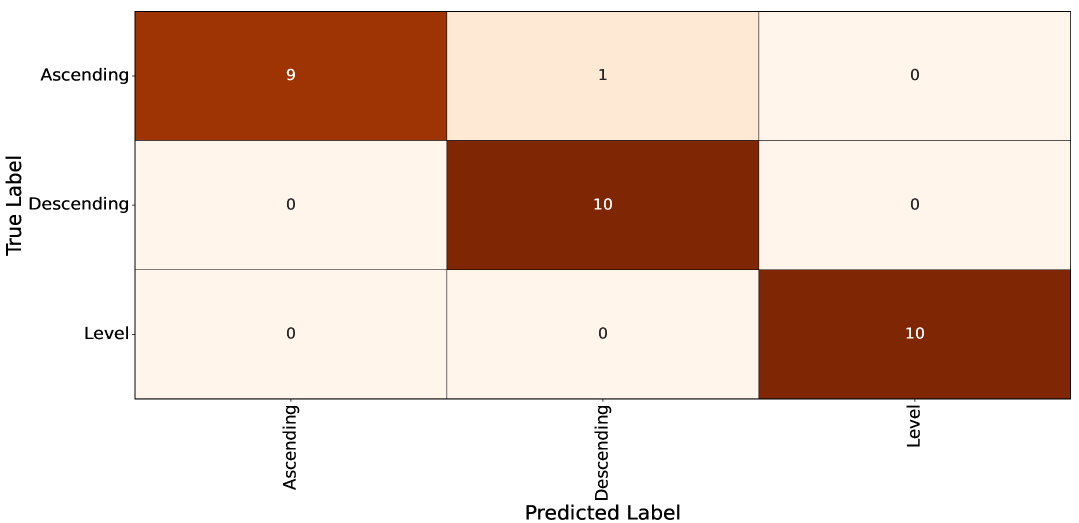

Recent investigations into automated flock analysis reveal a significant advantage for Convolutional Neural Networks (CNNs) over conventional machine learning algorithms. When tasked with discerning flock characteristics from synthetic images, CNNs consistently outperformed methods such as Support Vector Machines, Random Forests, and K-Nearest Neighbors. This superiority is evidenced by a 94.44% accuracy rate in identifying flock type – categorizing formations as columns, lines, or other arrangements – and a 90.67% accuracy in classifying flock size. These results highlight the capacity of deep learning to effectively process the complex visual information inherent in flocking behavior, offering a robust foundation for predictive models designed to mitigate avian collision risks and enhance understanding of collective animal movement.
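For contrast with a CNN, a conventional baseline such as K-Nearest Neighbors needs features to be designed by hand. The sketch below uses a hypothetical two-number feature, the spread of bird positions along x and y, with toy training examples. It shows the kind of pipeline CNNs are compared against. A CNN learns its features directly from pixels, which may help explain its advantage on complex formations where simple hand-crafted spreads say little.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def spread_features(points: Sequence[Point]) -> Tuple[float, float]:
    """Hypothetical hand-crafted features: population standard deviation of
    bird x and y coordinates within one flock."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sx = math.sqrt(sum((x - mx) ** 2 for x, _ in points) / n)
    sy = math.sqrt(sum((y - my) ** 2 for _, y in points) / n)
    return sx, sy


def knn_predict(train: List[Tuple[Tuple[float, float], str]],
                query: Tuple[float, float], k: int = 3) -> str:
    """Majority vote among the k training examples closest in Euclidean distance."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Toy training features (not data from the study): columns are tall, lines are wide.
train = [((0.5, 6.0), "column"), ((0.4, 5.0), "column"), ((0.6, 7.0), "column"),
         ((6.0, 0.5), "line"), ((5.5, 0.4), "line"), ((7.0, 0.6), "line")]

column_flock = [(10, 0), (10, 2), (10, 4), (10, 6), (10, 8)]
print(knn_predict(train, spread_features(column_flock)))  # column
```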

Towards a Future of Proactive Aviation Safety

Aviation safety stands to benefit significantly from combining image-based classification with avian radar. Bird strike prevention has historically relied on reactive measures and post-incident analysis; integrating the two technologies supports a proactive approach instead. Radar contributes broad detection range, while advanced image recognition contributes detail: identifying species, estimating flock sizes, and predicting flight paths. Together, they could allow systems to assess the risk posed by birds in real time and to support dynamic adjustments to flight operations, such as temporary route deviations or speed changes, reducing the probability of potentially catastrophic collisions. The goal is a future in which bird strikes are anticipated and avoided rather than merely managed after the fact.

Anticipation depends on data. Real-time identification of avian species, accurate assessment of flock size, and reliable prediction of flight trajectories would let air traffic control and flight crews make adjustments, such as subtly altering flight paths, adjusting speeds, or implementing temporary holding patterns, before a hazardous encounter occurs. This shift from reactive damage control to preventative risk management promises fewer bird strike incidents along with gains in operational efficiency and passenger safety. Differentiating between species is crucial because behaviors and flocking patterns vary significantly from one species to another, so knowing which birds are present allows more targeted preventative measures.

The future of bird strike mitigation relies heavily on the continued development of artificial intelligence. Current avian detection systems, while promising, could be significantly enhanced by new Convolutional Neural Network (CNN) architectures that improve both the speed and the accuracy of species identification and flight path prediction. Synthetic data, meaning realistic simulations of bird behavior under varied conditions, addresses the limitations of real-world data collection and allows more robust and reliable algorithms to be trained. The aim is not merely higher detection rates but predictive models that inform proactive adjustments to flight operations, minimizing the risk of bird strikes and making aviation safer and more efficient.

![The total number of annual bird strikes with civil aircraft in the United States has fluctuated between approximately 7,000 and 17,000 incidents from 1990 to 2023 [4].](https://arxiv.org/html/2602.07019v1/Figure-1.png)

The pursuit of automated avian classification, as detailed in this research, mirrors a fundamental principle of pattern recognition. The study’s comparative analysis of deep learning models for species and aircraft identification is not simply about achieving accuracy; it is about discerning meaningful signals in complex visual data. Fei-Fei Li aptly observes, “AI is not about replacing humans; it’s about empowering them.” This sentiment matches the core of this work, where automated classification aims to augment human vigilance in bird strike prevention, enhancing aviation safety through a combination of machine intelligence and expert oversight. Errors encountered during model development are less setbacks than guides: they show where the system’s interpretation of flock behavior and potential hazards needs refinement.

Future Trajectories

The pursuit of automated avian hazard mitigation, as demonstrated by this work, inevitably reveals the inherent ambiguity within seemingly ordered visual data. While deep learning models offer increasingly nuanced classifications of species and flocking behaviors, the very act of categorization implies a simplification of complex biological realities. The models excel at detecting patterns, but discerning intent, predicting deviation from established norms, or accounting for the unpredictable ‘noise’ of natural systems remains a substantial challenge. Future iterations must move beyond mere identification to incorporate predictive modeling of avian movement, accounting for environmental factors and behavioral anomalies.

A particularly intriguing avenue lies in exploring the limits of current classification schemes. The granularity of ‘species identification’ may, in itself, be a misleading construct. Birds do not adhere to neat taxonomic boundaries when making flight decisions; they respond to stimuli, navigate airflow, and react to the presence of larger objects – like aircraft. Perhaps a shift towards classifying behavioral states – foraging, migrating, avoiding threats – rather than species will yield more actionable insights for preventative systems.

Ultimately, the successful integration of these technologies relies not simply on algorithmic refinement, but on a rigorous understanding of the inherent limitations of visual analysis. Every successfully classified image is, in a sense, a testament to what the model doesn’t see. The true measure of progress will be a growing awareness of those blind spots, and a commitment to building systems that acknowledge the irreducible complexity of the natural world.

Original article: https://arxiv.org/pdf/2602.07019.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-10 20:14