Author: Denis Avetisyan

A new approach combines the reasoning power of large language models with generative AI to significantly improve the accuracy of network traffic predictions.

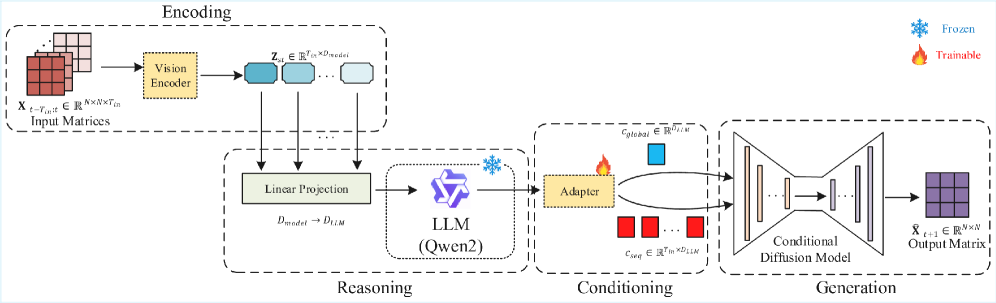

Researchers introduce LEAD, an LLM-enhanced adapter-based conditional diffusion model for robust network traffic matrix prediction and spatio-temporal analysis.

Accurate network traffic prediction remains a persistent challenge despite growing demand for adaptive, intelligent network operations. The paper ‘Accurate Network Traffic Matrix Prediction via LEAD: an LLM-Enhanced Adapter-Based Conditional Diffusion Model’ introduces LEAD, a framework that combines the semantic reasoning of Large Language Models with the generative power of diffusion models to forecast network traffic matrices. By transforming traffic data into image representations and employing a frozen LLM with trainable adapters, LEAD improves prediction accuracy and robustness, with a 45.2% reduction in RMSE on the Abilene dataset compared to state-of-the-art baselines. Could this approach unlock a new era of proactive and self-optimizing network management?

Navigating the Complexities of Network Prediction

Effective network management and efficient resource allocation hinge on the ability to accurately predict network traffic demands. However, conventional statistical techniques – such as Autoregressive Integrated Moving Average (ARIMA) and Kalman Filtering – are increasingly challenged by the inherent complexity and rapid fluctuations of contemporary networks. These methods often depend on linear models and stationary data assumptions that fail to adequately represent the non-linear, bursty, and self-similar characteristics common in modern data streams. Consequently, forecasts generated by these traditional approaches can exhibit significant errors, leading to inefficient bandwidth provisioning, increased latency, and a degraded quality of service for end-users. The escalating volume of data and the accelerating pace of network changes demand more adaptive and nuanced predictive models capable of capturing the intricate dynamics at play.

Traditional statistical forecasting models, while historically valuable, frequently stumble when applied to modern network traffic due to inherent simplifying assumptions. These models often treat network data as linear and stationary, neglecting the complex, nonlinear relationships and temporal dependencies that characterize real-world network behavior. For instance, assuming a consistent pattern overlooks the impact of flash crowds, denial-of-service attacks, or even daily usage cycles. This oversimplification introduces significant forecast errors, leading to inefficient bandwidth allocation, increased latency, and ultimately, suboptimal network performance. Consequently, network operators may experience service disruptions or incur unnecessary costs due to the inability of these methods to accurately anticipate traffic demands and proactively adjust resources.

Modern networks generate data at an unprecedented rate, demanding forecasting techniques that surpass the limitations of conventional statistical models. The sheer velocity of this data, with packets traversing networks in milliseconds, compounds the challenge, as models must adapt in near real-time to maintain accuracy. Consequently, researchers are increasingly turning to machine learning approaches, particularly deep learning, which excel at identifying and learning complex, non-linear patterns within massive datasets. Unlike traditional methods, these techniques don’t rely on pre-defined assumptions about data distribution and can adjust to evolving network conditions, offering the potential for significantly improved prediction accuracy and, ultimately, more efficient network resource allocation and performance.

From Data Streams to Visual Representations

The Traffic-to-Image Paradigm represents a departure from conventional network traffic analysis by converting Network Traffic Matrix (TM) data – representing traffic volume between network nodes – into a visual RGB image format. Each element within the TM is mapped to a pixel’s color value, effectively creating a spatial representation of network activity. This transformation facilitates the application of computer vision techniques, specifically convolutional neural networks (CNNs), which are designed to analyze image data for patterns and features. By leveraging the established capabilities of CNNs, this paradigm allows for the identification of complex relationships and potential anomalies within network traffic that might not be readily apparent through traditional time-series analysis of numerical traffic data.
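To make the mapping concrete, here is a minimal sketch of turning a traffic matrix into an RGB image, one pixel per origin-destination pair. The min-max normalization and the blue-to-red color ramp are assumptions chosen for illustration; the article does not specify the exact encoding LEAD uses, and the function name `traffic_matrix_to_rgb` is hypothetical.

```python
def traffic_matrix_to_rgb(tm):
    """Map a traffic matrix (list of rows of floats) to an RGB image.

    Each entry tm[i][j] is the traffic volume from node i to node j and
    becomes one (r, g, b) pixel at the same position.
    """
    flat = [v for row in tm for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant matrix

    def to_pixel(v):
        x = (v - lo) / span  # min-max normalize to [0, 1]
        # Assumed color ramp: low traffic is blue, high traffic is red,
        # and green peaks for mid-range traffic.
        r = round(255 * x)
        g = round(255 * (1 - abs(2 * x - 1)))
        b = round(255 * (1 - x))
        return (r, g, b)

    return [[to_pixel(v) for v in row] for row in tm]


# A toy 2-node traffic matrix (arbitrary units).
tm = [[0.0, 5.0], [10.0, 2.5]]
image = traffic_matrix_to_rgb(tm)
```

Because every pixel keeps its row and column position, flows that share a source or destination node end up in the same image row or column, which is what lets a convolutional model pick up spatial structure.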

Converting network traffic matrices into image formats facilitates the application of convolutional neural networks (CNNs) designed for image processing. CNNs, such as U-Net, are specifically architected to identify spatial hierarchies and patterns within data; when applied to traffic data represented as images, these networks can detect correlations between different network connections based on their relative positions within the image. This approach bypasses the need for feature engineering often required in time-series analysis and allows the network to automatically learn relevant features from the spatial arrangement of traffic flows. U-Net, in particular, excels at image segmentation and can identify anomalous traffic patterns represented as localized features within the image, enabling more effective network monitoring and intrusion detection.

Representing network traffic as an image allows for the detection of relationships and irregularities that are often obscured in conventional time-series analyses. Traditional methods primarily focus on temporal dependencies, examining traffic volume changes over time for individual flows or nodes. However, the image-based approach captures spatial correlations – the relationships between multiple flows or nodes simultaneously – by encoding traffic patterns into pixel values and their relationships. This allows computer vision algorithms to identify complex, multi-dimensional anomalies that manifest as visual patterns, potentially indicating coordinated attacks, unusual routing behavior, or systemic network issues that would be difficult to detect through purely temporal analysis. The inherent two-dimensional representation facilitates the identification of these correlations, offering a complementary analytical approach to time-series methods.

LEAD: A Diffusion-Based Framework for Predictive Insight

LEAD is a novel prediction framework integrating three core components to address limitations in traditional traffic forecasting. It leverages the contextual understanding capabilities of Large Language Models (LLMs), specifically adapting them to the visual domain of traffic imagery. To mitigate the computational demands of fine-tuning LLMs, LEAD employs parameter-efficient adaptation techniques, utilizing LLaMA-Adapter to reduce the number of trainable parameters. Finally, a conditional diffusion model is incorporated to generate probabilistic, high-fidelity predictions; this allows LEAD to model the inherent uncertainty in traffic patterns and produce more robust forecasts compared to deterministic approaches.

LEAD incorporates Spatial Attention and Multi-Scale Convolution to address the inherent spatial relationships within traffic imagery. Spatial Attention allows the model to focus on relevant regions of the input image, weighting features based on their importance to future traffic states. Multi-Scale Convolution employs convolutional filters of varying sizes to capture dependencies at different granularities; larger filters identify broad patterns while smaller filters refine local details. This combined approach enables LEAD to model complex interactions between network nodes and the flows that connect them, ultimately improving the accuracy of traffic prediction by better representing the spatial context of the traffic matrix.
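The two ideas can be illustrated with a small, non-learned sketch. It assumes fixed averaging (box) filters as stand-ins for learned convolution kernels, and a simplified attention score (per-pixel mean plus max across scales, passed through a sigmoid) in place of a learned attention layer. None of this is LEAD's actual architecture; the function names are hypothetical.

```python
import math


def box_filter(img, k):
    """Average each pixel over a k x k window with zero padding.

    Stand-in for a learned k x k convolution; k must be odd.
    """
    n, m, r = len(img), len(img[0]), k // 2
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            total = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < n and 0 <= jj < m:
                        total += img[ii][jj]
            out[i][j] = total / (k * k)
    return out


def multi_scale_features(img, kernel_sizes=(1, 3, 5)):
    """One feature map per kernel size: small kernels keep local detail,
    large kernels summarize broader neighborhoods."""
    return [box_filter(img, k) for k in kernel_sizes]


def spatial_attention(features):
    """Per-pixel weight in (0, 1) from the mean and max across feature maps."""
    n, m = len(features[0]), len(features[0][0])
    att = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            vals = [f[i][j] for f in features]
            score = sum(vals) / len(vals) + max(vals)
            att[i][j] = 1.0 / (1.0 + math.exp(-score))
    return att


# A 5 x 5 single-channel "traffic image" with one heavy flow in the center.
img = [[0.0] * 5 for _ in range(5)]
img[2][2] = 9.0

features = multi_scale_features(img)
attention = spatial_attention(features)
# Reweight every feature map by the shared attention map.
weighted = [
    [[f[i][j] * attention[i][j] for j in range(5)] for i in range(5)]
    for f in features
]
```

In this toy input the heavy flow receives the largest attention weight, while distant pixels are down-weighted; a trained model would learn both the filters and the attention scoring from data.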

LEAD achieves high-quality traffic predictions and computational efficiency through the integration of conditional diffusion models and the Denoising Diffusion Implicit Models (DDIM) sampling method. Conditional diffusion models allow the network to generate predictions guided by input traffic imagery, enabling the creation of plausible future states. DDIM accelerates the sampling process inherent in diffusion models by reducing the number of required denoising steps, thereby decreasing computational cost without significantly impacting prediction quality. This combination facilitates both detailed and rapid generation of traffic forecasts, addressing a key limitation of traditional diffusion-based approaches.
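The speed-up from DDIM comes from visiting only a short subsequence of the training timesteps. The sketch below shows the standard deterministic DDIM update (eta = 0) on a single scalar "pixel", assuming a linear beta schedule with 1000 training steps and only 10 sampling steps. The noise predictor here is a hypothetical oracle that knows the clean value, standing in for LEAD's trained, conditioned denoising network, so the example only demonstrates the sampling mechanics.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def ddim_step(x_t, eps_pred, ab_t, ab_prev):
    """One deterministic DDIM update (eta = 0)."""
    # Estimate the clean sample from the current noisy one.
    x0_pred = (x_t - math.sqrt(1.0 - ab_t) * eps_pred) / math.sqrt(ab_t)
    # Re-noise that estimate to the (lower) noise level of the next timestep.
    return math.sqrt(ab_prev) * x0_pred + math.sqrt(1.0 - ab_prev) * eps_pred


def ddim_sample(x_T, predict_eps, alpha_bars, num_sampling_steps=10):
    """Denoise x_T using an evenly spaced subset of timesteps (needs >= 2 steps)."""
    T = len(alpha_bars)
    timesteps = [
        round(i * (T - 1) / (num_sampling_steps - 1))
        for i in range(num_sampling_steps)
    ][::-1]
    x = x_T
    for i, t in enumerate(timesteps):
        ab_t = alpha_bars[t]
        # After the last timestep the target is the clean sample (alpha_bar = 1).
        ab_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        x = ddim_step(x, predict_eps(x, t), ab_t, ab_prev)
    return x


alpha_bars = make_alpha_bars()
x0_true = 0.37  # hypothetical normalized traffic value
noise = random.Random(0).gauss(0.0, 1.0)
x_T = math.sqrt(alpha_bars[-1]) * x0_true + math.sqrt(1.0 - alpha_bars[-1]) * noise


def oracle_eps(x, t):
    """Hypothetical perfect noise predictor (a real model would be learned)."""
    ab = alpha_bars[t]
    return (x - math.sqrt(ab) * x0_true) / math.sqrt(1.0 - ab)


x0_hat = ddim_sample(x_T, oracle_eps, alpha_bars, num_sampling_steps=10)
```

With a perfect noise predictor, 10 steps recover the clean value up to floating-point error even though the schedule was defined over 1000 steps. With a learned predictor the result is approximate, and the number of sampling steps becomes a trade-off between speed and quality.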

Demonstrating Predictive Power Through Empirical Validation

Rigorous experimentation using the Abilene and GÉANT datasets confirms that the LEAD model consistently achieves superior predictive accuracy when contrasted with both established traditional techniques and current deep learning approaches. These datasets, representing distinct network topologies and traffic patterns, served as crucial benchmarks for evaluating LEAD’s performance across varied conditions. The results demonstrate not merely incremental improvements, but a substantial advancement in the field of network traffic prediction, highlighting LEAD’s potential for optimizing network resource allocation and enhancing overall network efficiency. This consistent outperformance suggests a robust and generalizable model capable of adapting to the complexities of real-world network environments.

Evaluations conducted on the Abilene dataset reveal a substantial performance advantage for LEAD, demonstrating a 45.2% reduction in Root Mean Squared Error (RMSE) when contrasted with the MTGNN model. LEAD attains an RMSE of 0.1098, a marked improvement over MTGNN’s recorded RMSE of 0.2003. This significant decrease in error indicates that LEAD provides notably more accurate predictions regarding network traffic, suggesting its potential for enhanced network management and resource allocation strategies. The demonstrated accuracy on this widely used dataset establishes a strong baseline for LEAD’s effectiveness in real-world network prediction scenarios.
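The reported percentage follows directly from the two RMSE values. The short sketch below defines RMSE in the usual way and checks the relative reduction; the helper names are illustrative, and only the two RMSE figures come from the article.

```python
import math


def rmse(pred, target):
    """Root mean squared error between two equal-length sequences."""
    n = len(pred)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / n)


def relative_reduction(baseline, improved):
    """Fraction by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline


# RMSE values reported for the Abilene dataset.
mtgnn_rmse = 0.2003
lead_rmse = 0.1098

reduction = relative_reduction(mtgnn_rmse, lead_rmse)
print(f"{reduction:.1%}")  # prints 45.2%
```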

Evaluations conducted on the GÉANT dataset reveal that LEAD attains a root mean squared error (RMSE) of 0.0220, signifying its robust predictive capabilities within varied network topologies and traffic patterns. This result highlights the model’s ability to generalize effectively beyond the specific characteristics of the Abilene dataset, demonstrating adaptability to the complexities inherent in real-world network infrastructures. The exceptionally low RMSE indicates that LEAD accurately forecasts network behavior across a diverse range of operational conditions, suggesting its potential for reliable performance monitoring and proactive resource allocation in heterogeneous network environments. This level of precision is critical for maintaining optimal network performance and ensuring consistent quality of service.

Investigations into the architecture of LEAD demonstrate a performance boost derived from the incorporation of global conditioning. Specifically, the model achieves a Root Mean Squared Error (RMSE) of 0.1098 with global conditioning, compared with 0.1164 without it. This suggests that providing the model with broader contextual information, encompassing network-wide characteristics, enables more accurate predictions of network traffic patterns. The enhancement highlights the importance of holistic data integration within the model, allowing it to transcend localized observations and leverage a comprehensive understanding of the network’s overall state for improved forecasting capabilities.

Evaluations revealed a notable sensitivity in the model’s performance to the input data’s visual representation; specifically, transitioning from a full-color, RGB graph depiction to a grayscale equivalent induced a significant performance decline. The Root Mean Squared Error (RMSE) increased substantially to 0.1658 when utilizing grayscale graphs, indicating that color information plays a crucial role in the model’s predictive capabilities. This suggests the model effectively leverages color variations within the network visualizations to discern patterns and relationships essential for accurate traffic prediction, highlighting the importance of preserving chromatic data during the input processing stage.

The pursuit of accurate network traffic prediction, as demonstrated by LEAD, echoes a fundamental principle of systemic design: understanding the whole is crucial. Every new dependency introduced into a network, whether a new application, user, or data stream, creates hidden costs, affecting overall stability and predictability. A remark often attributed to Paul Erdős runs, “A mathematician knows a lot of things, but not everything.” The same limitation applies to modeling complex systems; LEAD attempts to bridge this gap by integrating semantic reasoning via Large Language Models with the generative power of diffusion models. By acknowledging the interconnectedness of network elements, LEAD strives for a more holistic and robust approach to prediction, recognizing that isolating individual components offers an incomplete picture.

Beyond the Horizon

The pursuit of accurate network traffic prediction, as exemplified by this work, frequently fixates on increasingly complex architectures. Yet, the elegance of a solution is often inversely proportional to its intricacy. The integration of Large Language Models and diffusion models, while promising, merely shifts the complexity – the semantic reasoning and generative capabilities are powerful, but demand substantial resources and careful calibration. A truly robust system will not simply predict traffic, but understand its underlying causes – a holistic view currently obscured by the focus on surface-level correlations.

The current emphasis on generative modeling, while innovative, risks treating symptoms rather than addressing the fundamental inefficiencies within network design itself. If a system requires a sophisticated model to anticipate congestion, perhaps the network architecture is the primary flaw. Future work should explore how these predictive models can be used not just for forecasting, but for actively shaping network behavior – a feedback loop that prioritizes simplicity and resilience over sheer predictive accuracy.

Ultimately, the field must resist the temptation of cleverness. A fragile design, however ingeniously constructed, will inevitably fail. The most valuable advancement will not be a model that predicts traffic with marginally greater precision, but a network that renders such prediction largely unnecessary.

Original article: https://arxiv.org/pdf/2601.21437.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-31 12:37