Author: Denis Avetisyan

Researchers have developed a novel approach to time series forecasting that not only predicts future values but also rigorously quantifies the uncertainty surrounding those predictions.

ProbFM leverages Deep Evidential Regression to decompose uncertainty into epistemic and aleatoric components, improving probabilistic forecasting and risk assessment in financial applications.

While time series foundation models show promise for zero-shot financial forecasting, a critical limitation remains in their ability to provide well-calibrated and interpretable uncertainty estimates. This paper introduces ‘ProbFM: Probabilistic Time Series Foundation Model with Uncertainty Decomposition’, a novel transformer-based framework that leverages Deep Evidential Regression to explicitly decompose uncertainty into epistemic and aleatoric components. By learning optimal uncertainty representations, ProbFM offers a theoretically-grounded approach to probabilistic forecasting without relying on restrictive distributional assumptions or complex sampling procedures. Could this principled quantification of uncertainty unlock more robust and reliable financial decision-making?

The Limits of Prediction: Knowing What You Don’t Know

While time series foundation models demonstrate remarkable predictive capabilities across diverse datasets, a critical limitation lies in their often inadequate assessment of forecast uncertainty. These models frequently generate point predictions without robust quantification of the potential range of outcomes, resulting in an unwarranted sense of confidence. This isn’t necessarily a flaw in the predictive power itself, but rather a deficit in communicating the limits of that power. Consequently, decision-makers relying on these forecasts may underestimate the true risks involved, particularly when facing novel or rapidly changing conditions. A highly accurate prediction presented without accompanying uncertainty estimates can be more dangerous than a less precise forecast accompanied by a clear understanding of potential errors, as it obscures the possibility of unfavorable outcomes and discourages proactive risk mitigation strategies.

Conventional forecasting techniques often treat all uncertainty as equal, obscuring a critical distinction between inherent randomness and a lack of knowledge. This conflation of aleatoric uncertainty – the natural variability within a system, like the unpredictable bounce of a ball – and epistemic uncertainty – stemming from incomplete or inaccurate data, or a flawed model – significantly impedes effective decision-making. A forecast might accurately reflect the range of possible outcomes given the current understanding, but fail to acknowledge the potential for drastically different scenarios arising from unknown factors. Consequently, risk assessments can be fundamentally skewed, leading to overconfidence in predictions and potentially disastrous outcomes, as decision-makers struggle to differentiate between noise they cannot control and gaps in their understanding that could be addressed with further investigation or refined modeling.

The reliability of any predictive model hinges not just on its accuracy, but crucially on its ability to communicate the limits of its knowledge. A forecast devoid of quantified uncertainty can be profoundly misleading, especially within complex, high-stakes domains like financial markets. Models often present a single point prediction without revealing the range of plausible outcomes, creating a false sense of certainty that encourages risky decision-making. This is particularly dangerous when models extrapolate beyond the data they were trained on, or encounter unforeseen events – situations where a clear articulation of ‘what it doesn’t know’ is paramount. Without acknowledging these limitations, stakeholders may misinterpret predictions as guarantees, leading to substantial economic consequences and systemic instability.

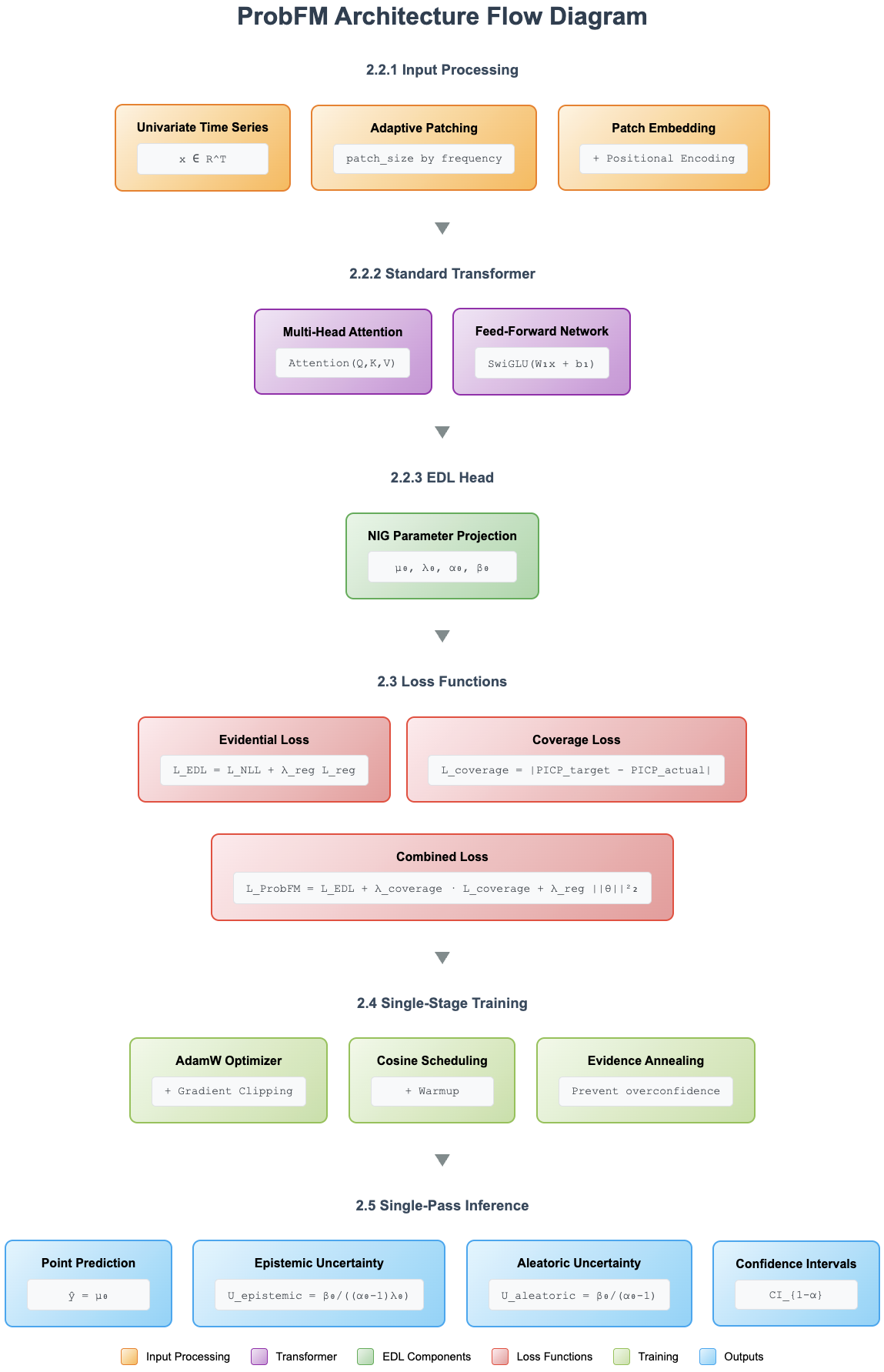

ProbFM: A Framework for Quantifying Uncertainty

ProbFM is a forecasting framework employing a transformer architecture to directly quantify prediction uncertainty, addressing limitations inherent in conventional methods which often provide point forecasts without associated confidence intervals. Unlike traditional techniques that may rely on residual analysis or heuristic adjustments to estimate uncertainty, ProbFM integrates uncertainty modeling into the core forecasting process. This is achieved by framing the forecasting problem as a probabilistic inference task, enabling the generation of predictive distributions rather than single-valued predictions. The framework’s transformer-based design allows it to effectively capture temporal dependencies in the data, contributing to more accurate and reliable uncertainty estimates, and facilitating improved decision-making in scenarios where quantifying risk is critical.

Deep Evidential Regression forms the foundational element of ProbFM, allowing for the separation of total uncertainty into two distinct components: epistemic and aleatoric. Aleatoric uncertainty, inherent to the data and representing noise or irreducible randomness, is further divided into homoscedastic – constant across inputs – and heteroscedastic – varying with input. Epistemic uncertainty, conversely, reflects the model’s lack of knowledge and can be reduced with more data. This decomposition is achieved by modeling the output distribution parameters – specifically, the mean and variance – as functions of the input features, allowing ProbFM to not only predict values but also quantify the confidence associated with those predictions through the variance σ², which represents the total uncertainty.
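To make the decomposition concrete, here is a minimal sketch using the formulas from the standard Deep Evidential Regression formulation, in which a network outputs four Normal-Inverse-Gamma parameters (γ, ν, α, β). Whether ProbFM uses exactly these expressions is an assumption; the names `NIGParams` and `decompose_uncertainty` are illustrative and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass
class NIGParams:
    """Normal-Inverse-Gamma outputs of an evidential regression head (illustrative)."""
    gamma: float  # predicted mean
    nu: float     # "virtual observations" supporting the mean, must be > 0
    alpha: float  # shape of the variance distribution, must be > 1
    beta: float   # scale of the variance distribution, must be > 0


def decompose_uncertainty(p: NIGParams) -> dict:
    """Split predictive variance into aleatoric and epistemic parts.

    Standard DER formulas (assumed here, not confirmed for ProbFM):
      aleatoric = E[sigma^2] = beta / (alpha - 1)
      epistemic = Var[mu]    = beta / (nu * (alpha - 1))
    """
    if p.nu <= 0 or p.alpha <= 1 or p.beta <= 0:
        raise ValueError("need nu > 0, alpha > 1, beta > 0")
    aleatoric = p.beta / (p.alpha - 1.0)
    epistemic = p.beta / (p.nu * (p.alpha - 1.0))
    return {
        "mean": p.gamma,
        "aleatoric": aleatoric,
        "epistemic": epistemic,
        "total": aleatoric + epistemic,
    }


if __name__ == "__main__":
    print(decompose_uncertainty(NIGParams(gamma=0.0, nu=2.0, alpha=3.0, beta=4.0)))
```

In this formulation, a larger ν (more evidence for the mean) shrinks the epistemic term while leaving the aleatoric term unchanged, which matches the idea that only epistemic uncertainty can be reduced with more data.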

ProbFM employs a Normal-Inverse-Gamma (NIG) prior distribution to facilitate Bayesian inference for uncertainty quantification. This choice is significant because the NIG distribution is the conjugate prior for a Gaussian likelihood with unknown mean and variance, the likelihood commonly used in regression tasks. Conjugacy simplifies the Bayesian update process, allowing for closed-form posterior distributions and reducing computational complexity. Specifically, given a Gaussian likelihood and a NIG prior on the mean μ and variance σ², the posterior distribution also follows a NIG distribution, enabling efficient parameter estimation. This supports well-calibrated uncertainty estimates, as the posterior distribution directly reflects the updated belief about the model parameters and their associated uncertainties.
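The closed-form update that conjugacy makes possible can be sketched as follows. This is the textbook Normal-Inverse-Gamma update for Gaussian observations with unknown mean and variance; it illustrates the property described above rather than ProbFM's training procedure, and the function name `nig_posterior` is hypothetical.

```python
def nig_posterior(gamma0, nu0, alpha0, beta0, observations):
    """Return posterior NIG parameters (gamma, nu, alpha, beta).

    Prior:      mu | sigma^2 ~ Normal(gamma0, sigma^2 / nu0)
                sigma^2      ~ Inverse-Gamma(alpha0, beta0)
    Likelihood: x_i ~ Normal(mu, sigma^2), independent
    """
    n = len(observations)
    if n == 0:
        return gamma0, nu0, alpha0, beta0  # no data: posterior equals prior

    sample_mean = sum(observations) / n
    sum_sq_dev = sum((x - sample_mean) ** 2 for x in observations)

    nu_n = nu0 + n
    gamma_n = (nu0 * gamma0 + n * sample_mean) / nu_n
    alpha_n = alpha0 + n / 2.0
    beta_n = (
        beta0
        + 0.5 * sum_sq_dev
        + 0.5 * (nu0 * n / nu_n) * (sample_mean - gamma0) ** 2
    )
    return gamma_n, nu_n, alpha_n, beta_n


if __name__ == "__main__":
    print(nig_posterior(0.0, 1.0, 2.0, 1.0, [1.0, 3.0]))
```

No sampling or numerical integration is needed: the posterior is obtained by a handful of arithmetic operations on sufficient statistics, which is the computational benefit the paragraph above refers to.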

Adaptive Techniques for Robust Uncertainty Modeling

Adaptive Patching within ProbFM addresses the challenge of varying temporal dependencies in time series data by dynamically adjusting the size of input patches used for analysis. Traditional fixed-size patching methods can struggle with signals containing both rapid fluctuations and long-term trends; smaller patches may miss broader context while larger patches can obscure short-term dynamics. ProbFM’s implementation analyzes the input time series characteristics – specifically, identifying periods of high variance or changing signal complexity – and modifies the patch size accordingly. This allows the model to utilize smaller patches when detailed, localized analysis is required, and larger patches to capture longer-range dependencies, ultimately improving its ability to model complex temporal relationships and enhance predictive performance across diverse time series datasets.
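As a rough illustration of the idea, the sketch below picks a small patch where local variance is high and a large patch where the signal is calm. The selection rule (local variance compared against global variance), the patch sizes, and the function name `adaptive_patches` are all assumptions made for this example; the article does not specify ProbFM's actual criterion.

```python
from statistics import pvariance


def adaptive_patches(series, small=4, large=16, window=16):
    """Split a series into variable-length patches.

    Hypothetical rule: look ahead `window` points; if their variance exceeds
    the variance of the whole series, emit a `small` patch, else a `large` one.
    """
    if not series:
        return []
    global_var = pvariance(series)
    patches = []
    i = 0
    while i < len(series):
        lookahead = series[i:i + window]
        local_var = pvariance(lookahead) if len(lookahead) > 1 else 0.0
        size = small if local_var > global_var else large
        patches.append(series[i:i + size])
        i += size
    return patches


if __name__ == "__main__":
    calm = [0.0] * 32
    volatile = [5.0 * (-1.0) ** k for k in range(32)]
    for patch in adaptive_patches(calm + volatile):
        print(len(patch), patch[:4])
```

A learned or multi-scale criterion would likely replace the simple variance threshold in practice; the point here is only that patch boundaries depend on the data rather than on a fixed stride.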

Evidence Annealing is a regularization method used during ProbFM training to manage the accumulation of evidence used for probabilistic predictions. This technique introduces a time-varying scaling factor to the evidence terms in the likelihood function, initially allowing for rapid learning but gradually decreasing the influence of new evidence as training progresses. By controlling the rate of evidence accumulation, overfitting is mitigated, particularly in scenarios with limited or noisy data. This process leads to more stable and reliable uncertainty estimates, as the model is discouraged from placing excessive confidence in potentially spurious correlations within the training set, and promotes generalization to unseen data.
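One plausible reading of this schedule is sketched below: a regularization weight ramps up linearly during a warm-up period, so the model fits freely early on and is increasingly penalized for confident errors later. The linear ramp, the penalty |y − γ|·(2ν + α) (borrowed from the standard Deep Evidential Regression loss), and all function names are assumptions; the article does not give ProbFM's exact schedule.

```python
def annealing_weight(step, warmup_steps, max_weight=1.0):
    """Linearly ramp the regularization weight from 0 to max_weight (assumed schedule)."""
    if warmup_steps <= 0:
        return max_weight
    return max_weight * min(1.0, max(0, step) / warmup_steps)


def evidence_penalty(y, gamma, nu, alpha):
    """Standard DER regularizer: large evidence (2*nu + alpha) is costly when the error is large."""
    return abs(y - gamma) * (2.0 * nu + alpha)


def annealed_loss(nll, y, gamma, nu, alpha, step, warmup_steps, max_weight=1.0):
    """Combine a precomputed negative log-likelihood with the annealed evidence penalty."""
    weight = annealing_weight(step, warmup_steps, max_weight)
    return nll + weight * evidence_penalty(y, gamma, nu, alpha)


if __name__ == "__main__":
    for step in (0, 50, 100, 200):
        print(step, annealed_loss(1.0, y=2.0, gamma=1.0, nu=1.0, alpha=2.0,
                                  step=step, warmup_steps=100))
```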

Coverage Loss is a regularization term within the ProbFM training process designed to enforce probabilistic calibration. Specifically, it quantifies the discrepancy between the nominal coverage probability of the prediction intervals and the observed frequency with which true values fall within those intervals. This loss function penalizes deviations from the target coverage level – typically 90% or 95% – thereby encouraging the model to generate prediction intervals that accurately reflect the uncertainty in its predictions. Mathematically, the Coverage Loss often takes the form of a binary cross-entropy loss calculated on a batch of training data, where the target is 1 if the true value is within the predicted interval and 0 otherwise. Minimizing this loss directly improves the reliability and trustworthiness of the probabilistic forecasts generated by ProbFM.
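The quantity being penalized can be illustrated with a small sketch that measures empirical coverage and its squared gap from the target. This is a simplified stand-in, not the loss from the paper: the squared-gap form and the function names are assumptions, and the hard inside/outside indicator used here is not differentiable, so a training implementation would need a smooth surrogate.

```python
def empirical_coverage(y_true, lower, upper):
    """Fraction of true values that fall inside their prediction intervals."""
    if not y_true:
        raise ValueError("need at least one observation")
    hits = [1.0 if lo <= y <= hi else 0.0
            for y, lo, hi in zip(y_true, lower, upper)]
    return sum(hits) / len(hits)


def coverage_penalty(y_true, lower, upper, target=0.90):
    """Squared gap between observed and target coverage (assumed form)."""
    return (empirical_coverage(y_true, lower, upper) - target) ** 2


if __name__ == "__main__":
    y = [1.0, 2.0, 3.0, 4.0]
    lo = [0.0, 0.0, 0.0, 0.0]
    hi = [1.5, 2.5, 2.5, 5.0]
    print(empirical_coverage(y, lo, hi), coverage_penalty(y, lo, hi))
```

Note that the penalty is symmetric: intervals that are too wide (coverage above target) are penalized just like intervals that are too narrow, which keeps the model from trivially inflating its uncertainty.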

Impact on Financial Decision-Making

ProbFM introduces a refined approach to financial trade filtering by moving beyond simple point predictions to provide a calibrated measure of uncertainty alongside each forecast. This allows for the implementation of strategies that execute trades only when the forecast is sufficiently confident, mitigating risk by avoiding positions taken on ambiguous signals. Rather than blindly acting on every signal, a trader utilizing ProbFM can establish thresholds – for example, only initiating a buy order when there is a 90% probability of a price increase – leading to a more discerning and potentially more profitable trading style. This nuanced control, facilitated by accurate uncertainty quantification, distinguishes ProbFM from traditional methods that often treat all predictions as equally reliable, regardless of inherent ambiguity.
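The 90% threshold rule mentioned above can be sketched as follows, assuming for simplicity that the forecast for the next-period return is Gaussian with a given mean and standard deviation. The Gaussian assumption and the function names are illustrative only; an evidential model would typically yield a heavier-tailed predictive distribution, for which the same rule applies with a different CDF.

```python
import math


def prob_increase(mean, std):
    """P(return > 0) for a Normal(mean, std^2) forecast of the return."""
    if std <= 0:
        raise ValueError("std must be positive")
    return 0.5 * (1.0 + math.erf(mean / (std * math.sqrt(2.0))))


def should_buy(mean, std, threshold=0.90):
    """Trade only when the forecast probability of a rise clears the threshold."""
    return prob_increase(mean, std) >= threshold


if __name__ == "__main__":
    forecasts = [(2.0, 1.0), (1.0, 1.0), (0.5, 0.2)]  # (mean, std) pairs
    for mean, std in forecasts:
        print(mean, std, round(prob_increase(mean, std), 3), should_buy(mean, std))
```

Two forecasts with the same expected return can produce different decisions here: the one with the wider predictive distribution is filtered out, which is exactly the behavior a point forecast cannot express.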

ProbFM also changes portfolio optimization by moving beyond traditional methods that often rely on point estimates of asset returns. This approach enables a far more nuanced understanding of potential risk and reward, as ProbFM doesn’t just predict what will happen, but also how likely different outcomes are. Consequently, portfolio construction shifts from simply maximizing expected return for a given risk level to allocating capital based on the full probability distribution of future returns. This can lead to portfolios that are not only more efficient (achieving higher returns for the same level of risk) but also more robust, exhibiting greater resilience to unexpected market fluctuations and a more stable long-term performance profile. The ability to quantify uncertainty allows for strategic diversification and hedging techniques, ultimately creating portfolios better aligned with an investor’s risk tolerance and financial goals.

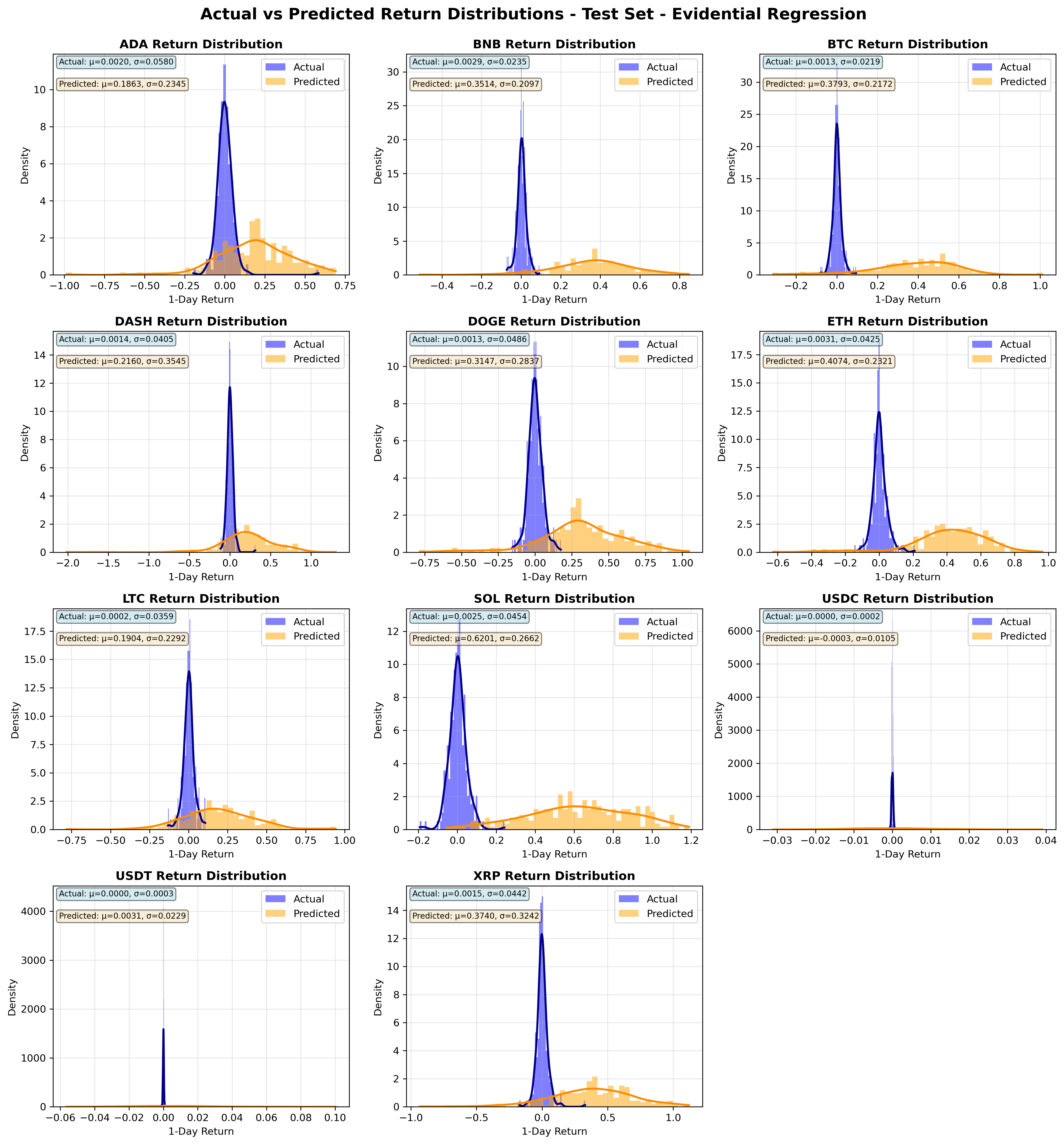

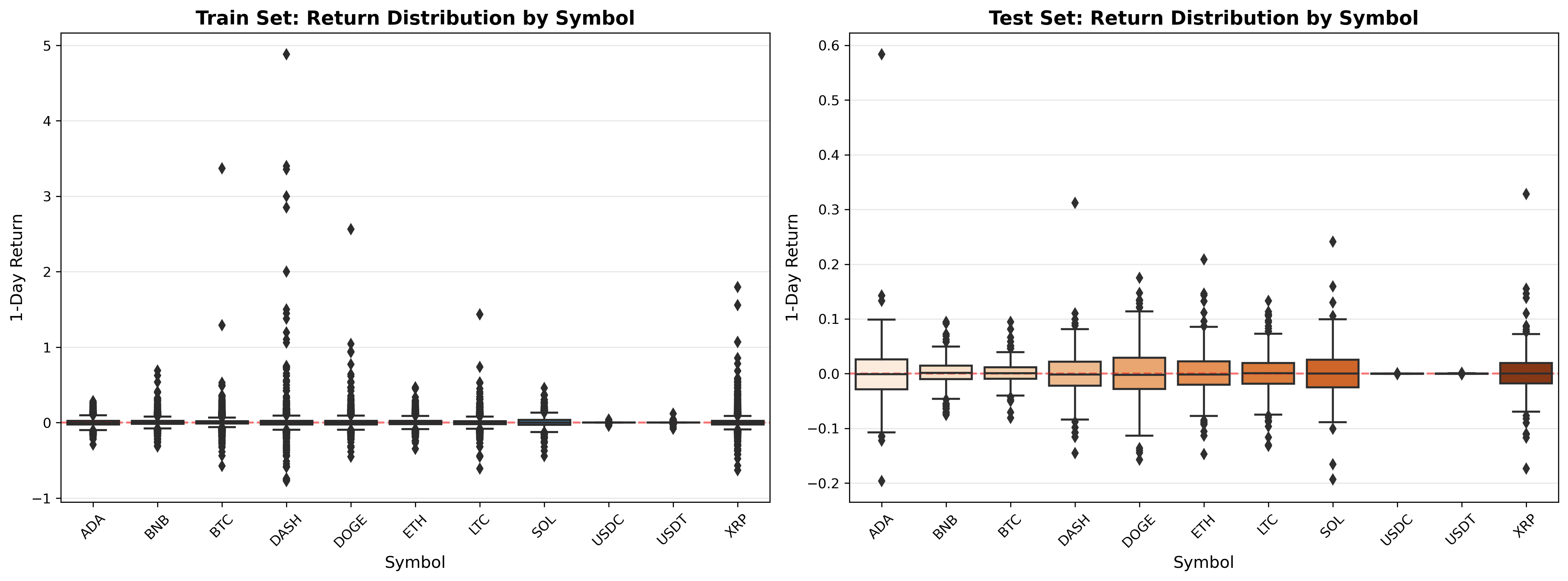

In the volatile landscape of cryptocurrency trading, ProbFM demonstrated a marked ability to generate superior risk-adjusted returns. Evaluations revealed a Sharpe Ratio of 1.33, a key metric for evaluating investment performance, well above the 0.90 achieved by a standard Mean Squared Error (MSE) baseline. This indicates that, per unit of risk taken, ProbFM generated roughly 48% more return than the baseline model (1.33 / 0.90 ≈ 1.48). The improvement suggests that ProbFM’s probabilistic forecasting capabilities enable more informed trading decisions, capitalizing on favorable market conditions while mitigating potential losses, ultimately leading to enhanced profitability in a challenging asset class.

The efficacy of ProbFM extends to its impressive performance in risk-adjusted return metrics. Specifically, the model achieved a Sortino Ratio of 2.27, a substantial improvement over the 1.52 recorded by the baseline MSE model; this indicates a superior ability to generate returns relative to downside risk. Complementing this, ProbFM’s Calmar Ratio reached 3.04, demonstrably exceeding the MSE baseline’s 1.52 and signifying robust returns in relation to maximum drawdown. These results collectively suggest that ProbFM not only delivers positive returns but does so with a heightened level of stability and efficiency, providing a compelling advantage in financial applications where minimizing risk is paramount.
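For readers unfamiliar with these ratios, the sketch below computes simple versions of all three from a list of per-period returns. Conventions vary (risk-free rate, annualization, choice of downside target), and the article does not state which ones the paper used, so the definitions here are assumptions: zero risk-free rate, no annualization, and total compounded return over maximum drawdown for Calmar.

```python
import math


def sharpe_ratio(returns):
    """Mean return divided by sample standard deviation (risk-free rate assumed 0)."""
    n = len(returns)
    mean = sum(returns) / n
    var = sum((r - mean) ** 2 for r in returns) / (n - 1)
    return mean / math.sqrt(var) if var > 0 else math.inf


def sortino_ratio(returns):
    """Mean return divided by downside deviation (only losses count as risk)."""
    n = len(returns)
    mean = sum(returns) / n
    downside = math.sqrt(sum(min(r, 0.0) ** 2 for r in returns) / n)
    return mean / downside if downside > 0 else math.inf


def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, (peak - equity) / peak)
    return worst


def calmar_ratio(returns):
    """Total compounded return divided by maximum drawdown (not annualized)."""
    total = math.prod(1.0 + r for r in returns) - 1.0
    mdd = max_drawdown(returns)
    return total / mdd if mdd > 0 else math.inf


if __name__ == "__main__":
    rets = [0.10, -0.05, 0.10, -0.05]
    print(sharpe_ratio(rets), sortino_ratio(rets), calmar_ratio(rets))
```

Sharpe penalizes all volatility, Sortino only downside volatility, and Calmar only the single worst peak-to-trough loss, which is why a strategy that avoids large losses can improve the latter two more than the first.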

ProbFM also shows an advantage in financial trading strategies, achieving a 0.52 win rate, the highest observed among the evaluated methods. This indicates a greater proportion of successful trades, translating to increased profitability potential. This comes at some cost in distributional accuracy: ProbFM’s Continuous Ranked Probability Score (CRPS) of 2.65 is higher, and therefore worse since lower CRPS is better, than the 2.21 reported for the Gaussian Negative Log-Likelihood (NLL) model, though it remains in a comparable range. This trade-off between predictive skill, as measured by CRPS, and a superior win rate positions ProbFM as a useful tool for identifying favorable trading opportunities in dynamic financial markets.
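CRPS rewards forecasts whose predictive distribution is both centered near the outcome and appropriately narrow; lower is better. As an illustration, the sketch below evaluates the well-known closed form for a Gaussian forecast. How the paper computes CRPS for ProbFM's own predictive distribution is not stated in this article, so the Gaussian case and the function name `crps_gaussian` are used here purely as an example.

```python
import math


def crps_gaussian(mean, std, y):
    """Closed-form CRPS of a Normal(mean, std^2) forecast against outcome y.

    CRPS = std * [ z * (2*Phi(z) - 1) + 2*phi(z) - 1/sqrt(pi) ],  z = (y - mean) / std
    """
    if std <= 0:
        raise ValueError("std must be positive")
    z = (y - mean) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)   # phi(z)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))          # Phi(z)
    return std * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))


if __name__ == "__main__":
    # Same forecast mean, increasingly wide predictive distribution.
    for std in (0.5, 1.0, 2.0):
        print(std, crps_gaussian(mean=0.0, std=std, y=0.0))
```

When the outcome lands exactly on the forecast mean, the score grows linearly with the standard deviation, so an overly cautious forecast is penalized even when its center is correct.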

The development of ProbFM exemplifies a crucial tenet of robust system design: structure dictates behavior. This model doesn’t merely forecast; it decomposes uncertainty into epistemic and aleatoric components, acknowledging that predictability isn’t absolute. As G. H. Hardy observed, “The essence of mathematics lies in its economy.” ProbFM embodies this economy by distilling complex time series data into a manageable, interpretable framework for uncertainty. The model’s ability to separate what is known from what is inherently random allows for more informed risk assessment, mirroring the elegance of a well-structured system where each component contributes to a coherent and predictable whole. Such decomposition isn’t simply a technical refinement; it’s an architectural principle, acknowledging that a system’s behavior over time is defined by the interplay of its internal components and their inherent limitations.

What Lies Ahead?

The pursuit of a universally capable time series foundation model, as exemplified by ProbFM, inevitably forces a reckoning with what constitutes ‘understanding’ in forecasting. The decomposition of uncertainty – distinguishing between what is knowable and what is fundamentally random – is a step toward this, yet it raises the question of what is actually being optimized for. Is the goal truly superior predictive accuracy, or rather a more faithful representation of the underlying generative process? The current emphasis on epistemic and aleatoric uncertainty, while valuable, may prove insufficient. A more holistic view will likely require acknowledging, and modeling, the inherent limitations of the data itself – the biases, the gaps, the unobserved confounders that irrevocably shape any forecast.

Future work must move beyond simply quantifying uncertainty to actively leveraging it. ProbFM provides a framework, but the true test lies in demonstrating measurable improvements in decision-making under risk. This demands a shift from benchmark-driven performance metrics to real-world evaluations, focused on the impact of probabilistic forecasts on practical outcomes. Moreover, the apparent elegance of a single foundation model should not obscure the possibility that different domains – finance, climate science, epidemiology – require specialized architectures, or even entirely different paradigms.

Simplicity, it should be remembered, is not minimalism. It is the discipline of rigorously distinguishing the essential from the accidental. The journey toward genuinely intelligent time series analysis will not be measured by the complexity of the models, but by the clarity with which they reveal the underlying structure of the world.

Original article: https://arxiv.org/pdf/2601.10591.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-16 22:44