Author: Denis Avetisyan

As artificial intelligence increasingly powers player risk detection in the gambling industry, a critical gap in standardized evaluation threatens effective harm reduction.

This review argues for the development of performance benchmarks to objectively measure and improve the efficacy of AI-enabled player risk detection systems.

Despite the growing reliance on artificial intelligence to identify and support at-risk gamblers, a critical gap in standardized evaluation hinders meaningful progress and transparency in the field. This paper, ‘The Need for Benchmarks to Advance AI-Enabled Player Risk Detection in Gambling’, addresses this limitation by arguing for the development of a robust benchmarking framework for these increasingly prevalent systems. We propose a conceptual model enabling objective, comparative assessment using standardized datasets and metrics to drive innovation and improve effectiveness. Will such a framework ultimately foster responsible AI adoption and demonstrably reduce gambling-related harm?

The Inevitable Drift: Recognizing Vulnerability in Play

Conventional responsible gambling measures frequently address problematic behavior after it has already manifested, representing a significant limitation in harm reduction. This reactive approach typically relies on individuals self-identifying as having a problem, or seeking help only when substantial financial, emotional, or social damage has occurred. Consequently, a critical gap exists in preventing initial engagement with harmful gambling behaviors, leaving a large proportion of at-risk individuals unaddressed. This reliance on post-hoc intervention necessitates a shift towards predictive strategies that anticipate potential harm, allowing for timely support and preventative measures before gambling-related issues escalate into full-blown addiction and its associated consequences. Addressing this gap requires a fundamental rethinking of responsible gambling, moving beyond damage control to prioritize proactive identification and early intervention.

Self-identification is demonstrably limited by underreporting and delayed intervention, so a more effective approach shifts towards the objective identification of at-risk behaviors. This involves analyzing patterns in player activity (escalating stakes, chasing losses, increased frequency of play, or play at unusual times) to detect vulnerability before an individual explicitly seeks help. These behavioral markers, derived from data analysis, offer a more nuanced and proactive picture of risk, allowing targeted interventions and support that can prevent harm from escalating and foster a safer gambling environment. The focus moves from reacting to problems to anticipating and mitigating them.

Player Risk Detection represents a significant shift in responsible gambling strategies, moving beyond reactive measures to embrace predictive analytics. By leveraging routinely collected behavioral data – encompassing factors like wagering frequency, bet sizes, game types, and spending patterns – sophisticated algorithms can identify players exhibiting early indicators of potential harm. This data-driven approach allows operators to proactively intervene with targeted support, such as personalized messaging, spending limits, or offers for self-exclusion, before problematic gambling behavior escalates into significant financial or personal distress. The system doesn’t aim to predict gambling itself, but rather to pinpoint deviations from established player baselines, flagging anomalies that suggest a player may be at increased risk and require additional care. Ultimately, this allows for a more preventative, and potentially more effective, approach to safeguarding vulnerable individuals.

Data as a Lens: Mapping the Terrain of Responsible Play

Data-driven Responsible Gambling (RG) models utilize artificial intelligence and machine learning algorithms to process large datasets of player activity. These datasets include variables such as deposit frequency, wagering amounts, game types played, session durations, and changes in betting patterns. By applying techniques like clustering, regression, and classification, these models identify anomalies and statistically significant deviations from established baselines of normal player behavior. The identified patterns can then be used to predict potential problematic gambling behaviors, including the development of addiction or financial hardship. The models do not operate on demographic data alone; the core functionality relies on observed behavioral data to provide a dynamic, individualized risk assessment.
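As a rough illustration of what "deviation from an established baseline" can mean in practice, the sketch below scores a player's latest weekly deposit total against that player's own history using a z-score. This is a deliberately simple stand-in, not the method of any particular RG model: the function names, the sample figures, and the threshold of 3 standard deviations are all assumptions made for the example.

```python
import math
from statistics import mean, stdev


def baseline_deviation(history, recent):
    """Return how many sample standard deviations `recent` lies from the
    mean of the player's own `history` (a simple z-score)."""
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change is an unbounded deviation.
        return 0.0 if recent == mu else math.copysign(math.inf, recent - mu)
    return (recent - mu) / sigma


def flag_player(history, recent, threshold=3.0):
    """Flag only upward deviations beyond `threshold` (a hypothetical cut-off)."""
    return baseline_deviation(history, recent) > threshold


# Hypothetical weekly deposit totals for one player.
weekly_deposits = [20, 25, 22, 18, 24, 21, 23]

print(flag_player(weekly_deposits, 60))  # sharp escalation
print(flag_player(weekly_deposits, 24))  # within the usual range
```

A production system would combine many such signals and would likely rely on the clustering, regression, or classification techniques mentioned above; the point here is only that the baseline is individual, not population-wide.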

Data-driven Responsible Gambling (RG) models move beyond generalized preventative measures by utilizing individual player data to personalize interventions. These models analyze behavioral patterns – including wagering frequency, spend, game types, and session durations – to construct risk profiles for each player. Based on these profiles, targeted interventions such as deposit limits, game restrictions, or personalized messaging regarding responsible play can be implemented. This approach contrasts with blanket restrictions and aims to address potential problem gambling behaviors at an early stage, increasing the likelihood of positive outcomes by focusing resources on players demonstrating indicators of risk, as opposed to all players indiscriminately.

Effective evaluation of data-driven Responsible Gambling (RG) models necessitates a systematic approach employing standardized benchmarks. Currently, performance assessment lacks consistent metrics, hindering comparative analysis and reliable improvement tracking. This work proposes a conceptual framework to address this gap, advocating for the use of pre-defined benchmarks covering key performance indicators such as precision and recall in identifying problematic gambling behavior, alongside measures of intervention effectiveness and player privacy preservation. The framework emphasizes the importance of utilizing diverse datasets for validation, ensuring generalizability across player populations and gaming platforms, and establishing clear criteria for acceptable performance levels to guide model development and deployment.

The Measure of Foresight: Establishing Benchmarks for Model Integrity

Performance benchmarking is a critical component in validating data-driven RG models. This process establishes the degree to which a model reliably and accurately identifies players exhibiting problematic gambling behaviors. Without rigorous benchmarking, there is no objective measure of a model’s effectiveness and no way to quantify its errors: false positives, which trigger unnecessary intervention, or, more critically, false negatives, where individuals in need of support are overlooked. Establishing a standardized benchmarking process ensures consistency in evaluation and allows for meaningful comparisons between different RG models, ultimately contributing to improved player protection strategies.

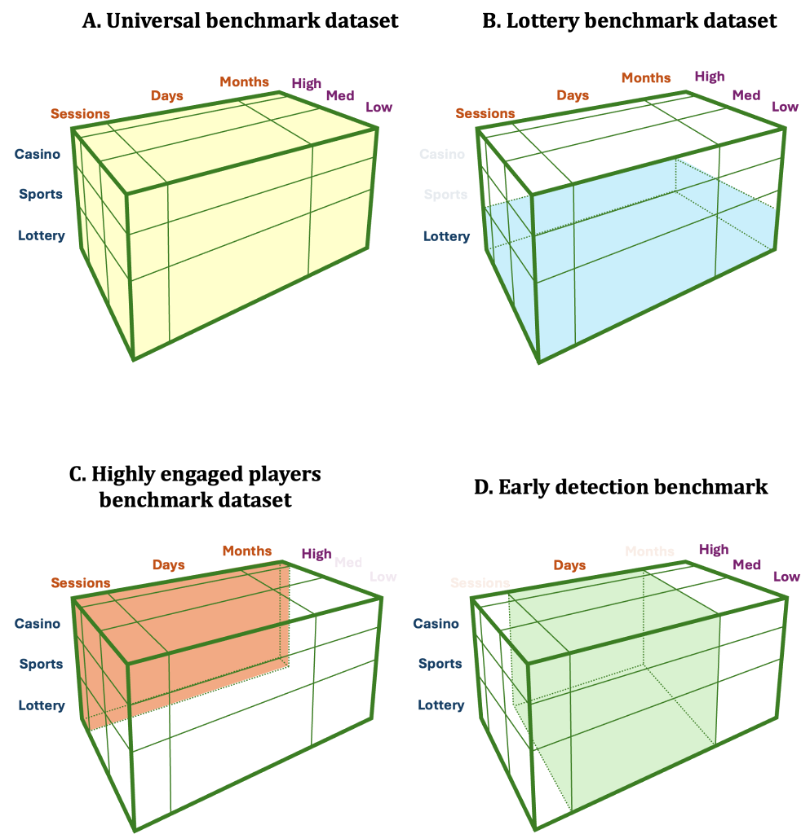

Benchmark datasets for evaluating data-driven RG models are structured around three core dimensions: Gambling Vertical, Time, and Engagement Level. Gambling Vertical specifies the type of game being played (e.g., slots, table games, sports betting), allowing for performance assessment specific to different game characteristics. The Time dimension incorporates historical data, enabling evaluation of model accuracy over varying periods and identification of temporal patterns in risk. Engagement Level quantifies player interaction with the platform, encompassing factors like frequency of play, wager size, and deposit amounts; this allows for performance assessment across differing levels of player activity and potential risk exposure. Together, these dimensions create a multi-faceted evaluation framework for assessing model performance across a range of player behaviors and gambling contexts.
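One way to picture the three dimensions is as keys for slicing an evaluation set. The following sketch groups labeled records by any subset of the dimensions and reports the share of at-risk players the model flagged in each slice. The field names (`vertical`, `period`, `engagement`, `at_risk`, `flagged`) and the sample records are hypothetical and not taken from the paper.

```python
from collections import defaultdict


def sensitivity_by_slice(records, dims=("vertical", "period", "engagement")):
    """Share of at-risk players flagged, per combination of `dims`."""
    tally = defaultdict(lambda: [0, 0])  # slice -> [true positives, false negatives]
    for r in records:
        if not r["at_risk"]:
            continue  # sensitivity only concerns players who are actually at risk
        key = tuple(r[d] for d in dims)
        tally[key][0 if r["flagged"] else 1] += 1
    return {key: tp / (tp + fn) for key, (tp, fn) in tally.items()}


# Hypothetical evaluation records.
records = [
    {"vertical": "slots", "period": "2024-Q1", "engagement": "high", "at_risk": True, "flagged": True},
    {"vertical": "slots", "period": "2024-Q1", "engagement": "high", "at_risk": True, "flagged": True},
    {"vertical": "slots", "period": "2024-Q1", "engagement": "low", "at_risk": True, "flagged": False},
    {"vertical": "sports", "period": "2024-Q1", "engagement": "high", "at_risk": True, "flagged": True},
    {"vertical": "sports", "period": "2024-Q2", "engagement": "high", "at_risk": True, "flagged": False},
    {"vertical": "sports", "period": "2024-Q2", "engagement": "low", "at_risk": False, "flagged": True},
]

by_cell = sensitivity_by_slice(records)                          # all three dimensions
by_vertical = sensitivity_by_slice(records, dims=("vertical",))  # one dimension only
print(by_vertical)
```

Reporting per slice rather than as a single aggregate makes it visible when a model performs well in one vertical or engagement band and poorly in another.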

Sensitivity and precision are core metrics for evaluating data-driven RG model performance. Sensitivity, also known as recall, measures the proportion of actual at-risk players correctly identified by the model; it is calculated as $TP / (TP + FN)$, where $TP$ is the number of true positives and $FN$ the number of false negatives. Precision is the proportion of players flagged as at-risk who genuinely are; it is calculated as $TP / (TP + FP)$, where $FP$ is the number of false positives. These metrics, used within the proposed standardized benchmarking framework, allow for comparative analysis of different models and the identification of specific areas requiring refinement to balance the detection of at-risk individuals with the minimization of unnecessary intervention.
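The two formulas can be checked on a small worked example. The sketch below counts true positives, false positives, and false negatives from paired labels and predictions, then applies $TP / (TP + FN)$ and $TP / (TP + FP)$ directly. The ten-player sample is invented for illustration.

```python
def confusion_counts(actual, predicted):
    """Return (TP, FP, FN) for paired boolean labels and predictions."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)
    fp = sum(1 for a, p in pairs if not a and p)
    fn = sum(1 for a, p in pairs if a and not p)
    return tp, fp, fn


def sensitivity(tp, fn):
    """TP / (TP + FN); None when there are no at-risk players."""
    return tp / (tp + fn) if (tp + fn) else None


def precision(tp, fp):
    """TP / (TP + FP); None when nobody was flagged."""
    return tp / (tp + fp) if (tp + fp) else None


# Invented sample: 4 players are truly at risk, the model flags 5 players.
actual = [True, True, True, True, False, False, False, False, False, False]
predicted = [True, True, True, False, True, True, False, False, False, False]

tp, fp, fn = confusion_counts(actual, predicted)
print(tp, fp, fn)            # 3 true positives, 2 false positives, 1 false negative
print(sensitivity(tp, fn))   # 3 / 4
print(precision(tp, fp))     # 3 / 5
```

Here the model finds three of the four at-risk players (sensitivity 0.75), but two of its five flags are unnecessary (precision 0.6), which is the trade-off between detection and unnecessary intervention described above.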

The Shadow of the Algorithm: Navigating Transparency and Bias

The increasing sophistication of machine learning models, while powerful in identifying gambling risk, often presents a significant hurdle known as the “Black Box Problem.” These complex algorithms, particularly deep neural networks, can achieve remarkable accuracy but operate in ways that are difficult for even experts to understand. This lack of transparency hinders trust and accountability, as it becomes challenging to discern why a model made a specific prediction. Consequently, substantial research focuses on enhancing interpretability and explainability through techniques like feature importance analysis and the development of more inherently transparent model architectures. Addressing this challenge is crucial not only for regulatory compliance but also for fostering user trust and enabling informed intervention strategies, allowing for a more nuanced and responsible approach to identifying and supporting individuals at risk.

Data bias represents a critical vulnerability in predictive models used for responsible gambling, potentially undermining the fairness and accuracy of interventions. These biases aren’t necessarily intentional; they often arise from skewed or unrepresentative training data – for example, if a model is primarily trained on data from a specific demographic, it may inaccurately assess risk for players outside that group. This can lead to inappropriate or ineffective support being offered, or conversely, unnecessary restrictions being placed on individuals who don’t pose a significant risk. The consequences extend beyond individual harm, as biased systems can perpetuate existing societal inequalities and erode trust in the fairness of the gambling environment. Mitigating this requires careful data curation, robust testing for disparate impact, and ongoing monitoring to ensure models consistently deliver equitable outcomes for all players, regardless of background or playing style.
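Testing for disparate impact can start with something as simple as computing the same error rates separately for each player group and comparing them. The sketch below does this for sensitivity and false-positive rate; the group labels, the record layout, and the sample data are assumptions for illustration, and what gap counts as acceptable is a policy decision that the code does not answer.

```python
from collections import defaultdict


def rates_by_group(records):
    """Per-group sensitivity and false-positive rate.

    Each record is a (group, at_risk, flagged) tuple.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for group, at_risk, flagged in records:
        if at_risk:
            counts[group]["tp" if flagged else "fn"] += 1
        else:
            counts[group]["fp" if flagged else "tn"] += 1
    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "false_positive_rate": c["fp"] / negatives if negatives else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in `metric` between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


# Hypothetical evaluation data for two player groups, A and B.
records = (
    [("A", True, True)] * 3 + [("A", True, False)] * 1
    + [("A", False, True)] * 1 + [("A", False, False)] * 3
    + [("B", True, True)] * 1 + [("B", True, False)] * 3
    + [("B", False, True)] * 2 + [("B", False, False)] * 2
)

rates = rates_by_group(records)
print(rates)
print(max_gap(rates, "sensitivity"), max_gap(rates, "false_positive_rate"))
```

In this invented sample the model misses far more at-risk players in group B while also flagging more of its low-risk players, the pattern of inappropriate support and unnecessary restriction that the paragraph above warns about.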

Responsible gambling initiatives are increasingly employing a dual-strategy approach to player protection. Universal strategies, such as age verification, deposit limits, and self-exclusion options, create a baseline level of safety for all users, regardless of their individual risk profiles. However, these broad measures are thoughtfully paired with targeted interventions – personalized feedback on spending habits, proactive outreach based on behavioral patterns, and customized support resources. This combination aims to provide a comprehensive safety net, acknowledging that gambling behaviors vary significantly and require nuanced responses. The implementation of both universal and targeted approaches ensures consistency in applying responsible gambling principles while simultaneously addressing the unique needs of each player, fostering a safer and more sustainable environment for everyone involved.

The pursuit of AI-enabled player risk detection, as detailed in this paper, mirrors a broader challenge in systems design: ensuring longevity amidst inevitable decay. The call for standardized benchmarks isn’t merely about quantifying performance; it’s about establishing a foundation for graceful aging. As Edsger W. Dijkstra observed, “Simplicity is prerequisite for reliability.” A robust benchmarking framework, built on transparent data and clear metrics, provides that essential simplicity, allowing for meaningful evaluation and iterative improvement. Without it, systems become opaque, hindering the ability to adapt and maintain effectiveness in identifying and supporting at-risk gamblers, a critical component of responsible gambling initiatives.

What’s Next?

The pursuit of benchmarks for AI-enabled player risk detection isn’t merely a technical exercise; it’s an acknowledgement of entropy. Every model, no matter how elegantly constructed, will degrade in efficacy as the gambling landscape shifts – new game formats emerge, player behaviors evolve, and adversarial strategies are refined. Versioning, then, becomes a form of memory, a desperate attempt to capture fleeting performance before the arrow of time inevitably points toward refactoring. The absence of standardized metrics isn’t a failure of ingenuity, but a symptom of a deeper truth: systems aren’t built to solve problems, but to delay their inevitable return.

Future efforts must move beyond simple accuracy scores. True progress lies in quantifying the cost of false positives and negatives – not in abstract terms, but in concrete measures of harm reduction and player wellbeing. This requires a willingness to confront the ethical ambiguities inherent in predictive modeling, and to acknowledge that even the most sophisticated algorithms are, at best, imperfect approximations of complex human behavior.
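To make "the cost of false positives and negatives" concrete, one option is to attach a weight to each error type and pick the flagging threshold that minimizes the total. The sketch below is only an illustration of that idea: the cost weights, risk scores, and candidate thresholds are invented, and translating real harm into numbers like these is exactly the hard part the paragraph above describes.

```python
def expected_cost(labels, scores, threshold, cost_fn, cost_fp):
    """Total weighted cost of errors when flagging every score >= threshold."""
    pairs = list(zip(labels, scores))
    false_negatives = sum(1 for y, s in pairs if y and s < threshold)
    false_positives = sum(1 for y, s in pairs if not y and s >= threshold)
    return cost_fn * false_negatives + cost_fp * false_positives


def best_threshold(labels, scores, candidates, cost_fn, cost_fp):
    """Candidate threshold with the lowest total cost (first one wins ties)."""
    return min(
        candidates,
        key=lambda t: expected_cost(labels, scores, t, cost_fn, cost_fp),
    )


# Invented data: 1 = truly at risk, 0 = not; scores are model risk estimates.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]
candidates = [0.25, 0.5, 0.75]

# If a missed at-risk player is judged ten times as costly as a needless flag,
# the lowest threshold is preferred; reversing the weights reverses the choice.
print(best_threshold(labels, scores, candidates, cost_fn=10, cost_fp=1))
print(best_threshold(labels, scores, candidates, cost_fn=1, cost_fp=10))
```

The same model and data lead to opposite operating points depending on the weights, which is why a benchmark that reports accuracy alone says little about real-world harm reduction.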

The field will inevitably fragment, with competing benchmarks reflecting different priorities and philosophical assumptions. This isn’t necessarily a detriment. Such divergence can serve as a vital stress test, revealing the limitations of any single framework and forcing a continuous reevaluation of what constitutes ‘responsible’ AI. The work isn’t about achieving a perfect solution, but about gracefully navigating an inherently imperfect system.

Original article: https://arxiv.org/pdf/2511.21658.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 20:41