Author: Denis Avetisyan

A new analysis of millions of online forum posts reveals a surge in concerns about digital privacy, security threats, and the growing need for tailored support.

Research into over 3 million Reddit posts identifies patterns in help-seeking behavior related to digital privacy and safety, highlighting rising anxiety about scams and the limitations of generic online advice.

Navigating the increasingly complex landscape of digital life often places the burden of security directly on individual users. Our research, ‘Understanding Help Seeking for Digital Privacy, Safety, and Security’, addresses this challenge by analyzing over three million Reddit posts to map the scope and nature of online help-seeking behaviors related to digital threats. This large-scale analysis reveals a growing demand for assistance with scams and account compromises, alongside a diverse ecosystem of communities offering support. How can these insights inform the development of more effective resources, from user guides to AI-powered agents, that address the nuanced combinations of threats, platforms, and emotional contexts users face?

The Noise and the Signal: Finding Those Who Need Help

The expansive landscape of online platforms, particularly those like Reddit with their user-driven content, presents a significant challenge for digital safety initiatives. While these platforms are replete with individuals potentially seeking assistance with online harassment, privacy concerns, or other digital threats, the sheer volume of posts renders manual identification of those in need wholly impractical. Human review, even with dedicated teams, simply cannot keep pace with the constant stream of content, meaning many cries for help remain unseen. This bottleneck hinders timely intervention and support, highlighting the urgent need for scalable solutions that can efficiently sift through the noise and pinpoint those requiring assistance within the vast digital sphere.

Automated systems are essential for processing this volume, but simple keyword detection proves remarkably ineffective. Language is rarely straightforward: sarcasm, coded language, and evolving online slang frequently mask genuine cries for help. A post containing terms associated with abuse, for instance, might be part of a discussion about harmful behavior rather than a direct request for intervention. Conversely, a genuine request may contain none of the expected terms. Consequently, automated systems must move beyond basic lexical matching and incorporate contextual understanding, a complex task requiring advanced natural language processing techniques to differentiate genuine help-seeking from ambiguous or irrelevant content.
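The limits of keyword detection can be illustrated with a minimal sketch. The keyword list, the `keyword_flag` helper, and the three example posts are all hypothetical, invented for illustration; none of them come from the study's data or tooling.

```python
import re

# Hypothetical keyword list; the article does not give the lexicon
# used by any real keyword-based system.
KEYWORDS = {"hacked", "scam", "phishing", "stalker"}

def keyword_flag(post: str) -> bool:
    """Flag a post if any keyword appears as a whole word (case-insensitive)."""
    words = set(re.findall(r"[a-z']+", post.lower()))
    return not KEYWORDS.isdisjoint(words)

# Invented example posts, not taken from Reddit.
posts = [
    "My account got hacked and I can't log in, what do I do?",    # help request
    "Great documentary about how phishing gangs operate.",         # discussion only
    "Someone keeps logging into my email from another country.",   # help request, no keyword
]

flags = [keyword_flag(p) for p in posts]
for post, flagged in zip(posts, flags):
    print(flagged, "|", post)
```

In this toy case the second post is flagged although nobody is asking for help, and the third post is missed although someone clearly is. Those are the two failure modes that motivate a context-aware classifier.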

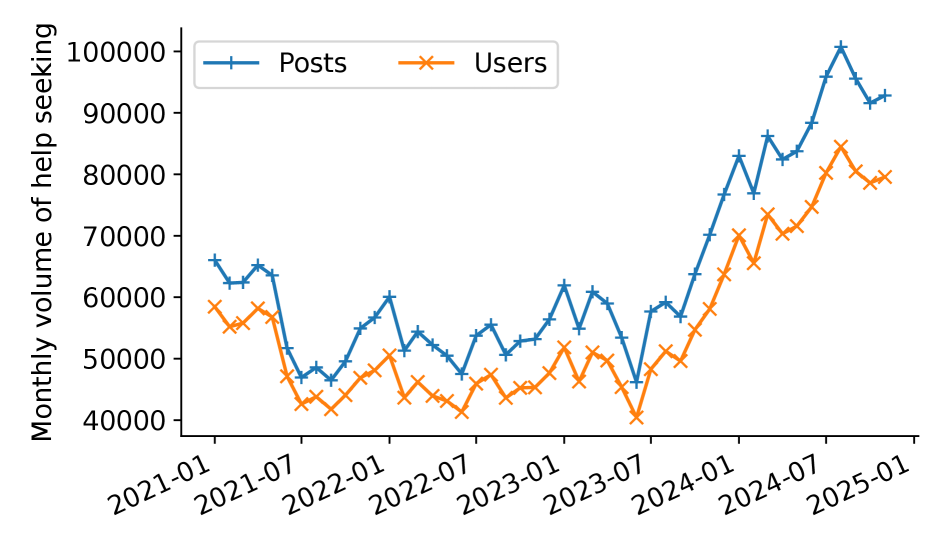

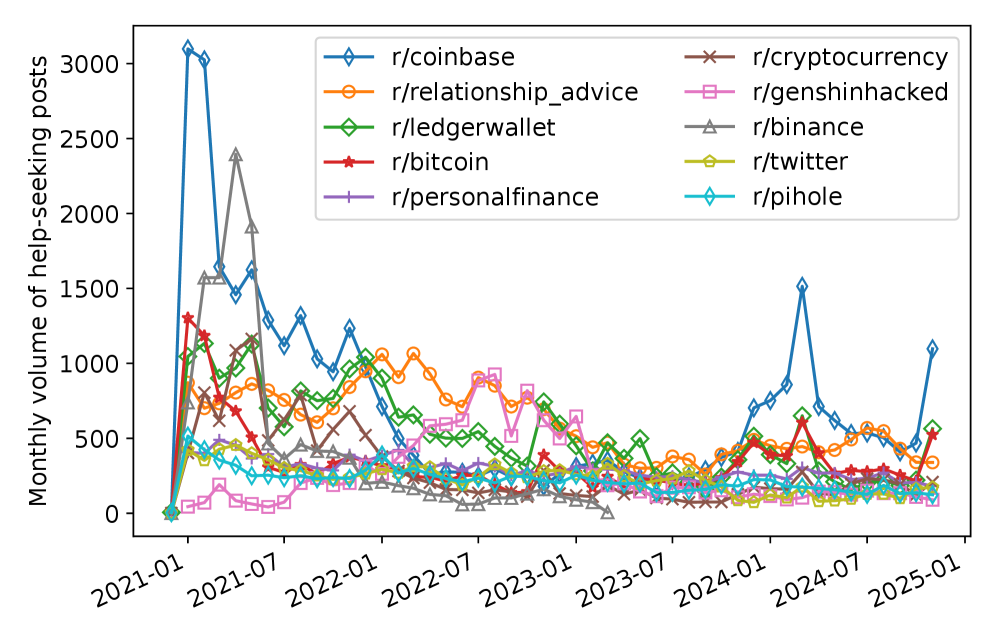

The escalating prevalence of online harm necessitates increasingly sophisticated methods for identifying individuals actively seeking digital safety assistance, yet conventional approaches are proving inadequate to the task. Traditional keyword-based flagging systems often misinterpret nuanced language or fail to detect emerging threats, creating a critical gap in timely support. Compounding this challenge is the remarkable 66% surge in help-seeking activity observed on platforms like Reddit over the past year, demonstrating a growing need that outpaces the capacity of manual review and simple automated detection. Effectively recognizing cries for help requires a shift toward more intelligent systems capable of understanding context, identifying subtle indicators of distress, and adapting to the ever-changing landscape of online abuse – a necessity for ensuring vulnerable users receive prompt and appropriate intervention.

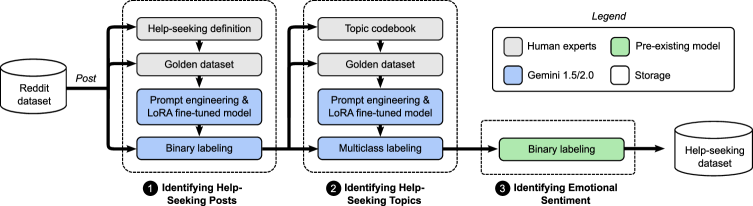

Beyond Keywords: Teaching Machines to Understand Distress

Traditional methods of identifying users needing assistance relied on keyword spotting, which often resulted in false positives and missed nuanced requests. To address this, we implemented Large Language Model (LLM) fine-tuning, a process of adapting a pre-trained LLM to a specific task using a labeled dataset. This allows the system to analyze the semantic content of user posts, moving beyond literal keyword matches to understand the underlying intent and context. The fine-tuned LLM can therefore differentiate between a user explicitly asking for help and one merely mentioning a related term, substantially improving the accuracy of help detection and reducing the number of irrelevant alerts.

The Large Language Model (LLM) was trained using a curated dataset of Reddit posts manually labeled to indicate the presence of digital safety and privacy concerns. This dataset encompassed a range of topics, including but not limited to online harassment, scams, account compromise, and requests for information regarding privacy tools. The labeling process identified linguistic patterns, phrasing, and contextual cues commonly associated with users seeking assistance related to these issues. The LLM then learned to associate these features with the presence of a help request, allowing it to generalize beyond specific keywords and identify nuanced expressions of need within user-generated content. The dataset’s size and diversity were critical in enabling the model to effectively discern genuine requests from general discussion or unrelated posts.

An automated pipeline was established leveraging Large Language Model (LLM) technology and the extensive data available on the Reddit platform to identify users expressing a need for support. This system processes posts to flag potential help-seeking behavior and route them for review. In evaluation, the pipeline showed a reported precision and recall of 93% in identifying posts indicative of users requiring assistance, a significant improvement over previous keyword-based methods. These figures indicate that the system keeps both false positives (posts incorrectly flagged as needing help) and false negatives (genuine requests for support that go undetected) low.
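For readers less familiar with these metrics: precision is the share of flagged posts that really are help requests, TP / (TP + FP), and recall is the share of real help requests that get flagged, TP / (TP + FN). The sketch below computes both on a small set of made-up labels. The `precision_recall` helper and the toy data are assumptions for illustration only and do not reproduce the study's evaluation.

```python
def precision_recall(y_true, y_pred):
    """Compute (precision, recall) for binary labels, where 1 means 'help-seeking'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)        # correctly flagged
    fp = sum(1 for t, p in pairs if not t and p)    # flagged, but not a help request
    fn = sum(1 for t, p in pairs if t and not p)    # help request that was missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Toy labels (hypothetical): 4 real help requests, 4 other posts.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here three of the four flagged posts are genuine and three of the four genuine posts are flagged, so both metrics come out at 0.75.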

What Are They Asking For? Uncovering Themes in Digital Distress

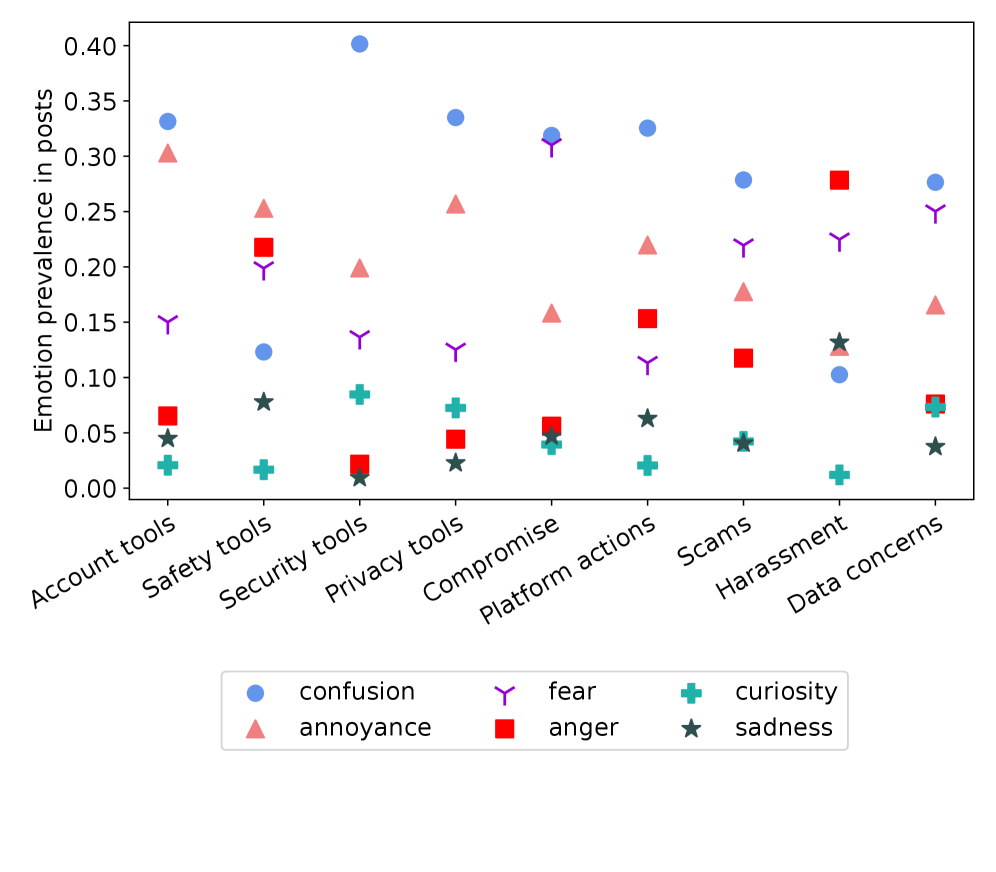

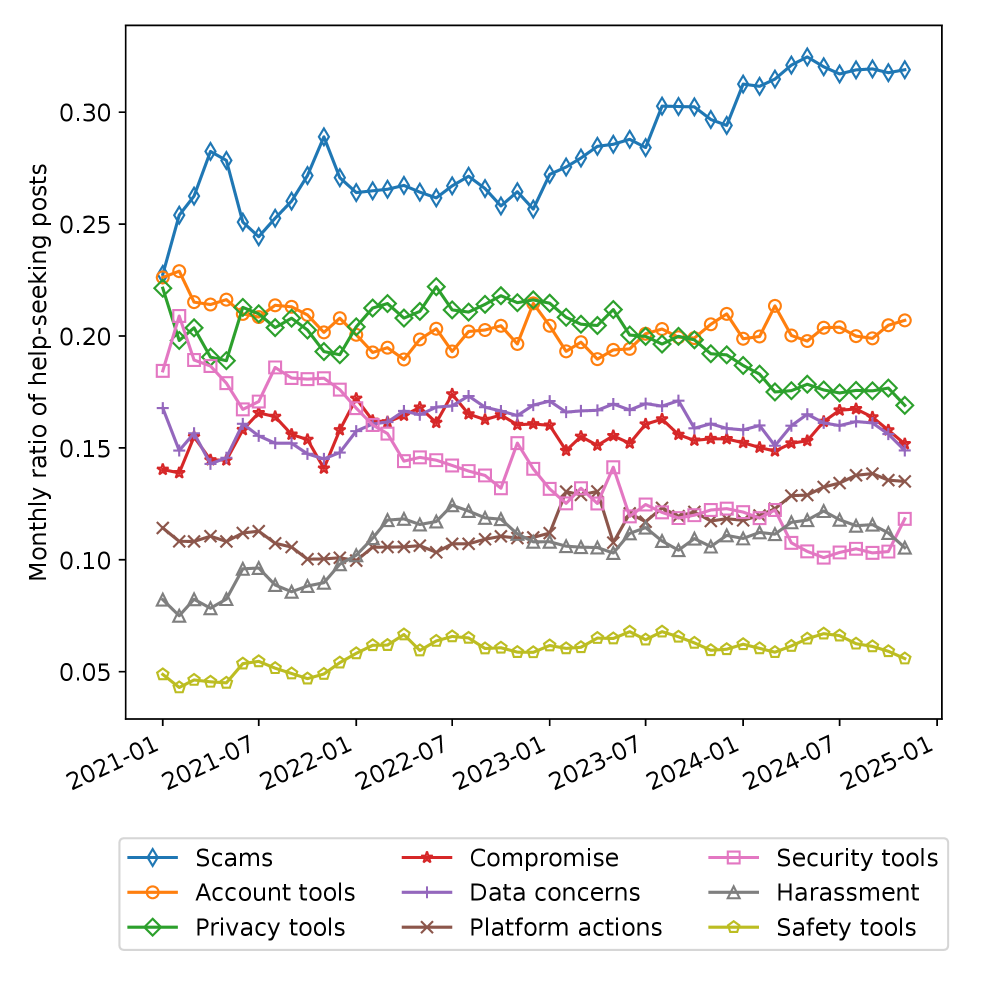

Qualitative analysis of over 100,000 help-seeking posts submitted through the end of 2024 identified three primary themes driving user requests for assistance. These themes encompassed issues related to compromised user accounts, reports of scams and fraudulent activities, and broader concerns regarding data privacy. The analysis involved a manual review of post content to categorize recurring issues and establish prevalence. This categorization revealed that users frequently sought support regarding unauthorized account access, suspected phishing attempts, and the protection of personally identifiable information, forming the core of these identified themes.

Analysis of user-submitted help requests indicates a consistent demand for assistance with fundamental digital security practices. Users commonly sought guidance on regaining access to compromised accounts, with requests detailing forgotten passwords, suspected unauthorized access, and account lockout issues. A significant portion of inquiries centered on identifying phishing attempts, including requests for verification of suspicious emails, links, and websites. Furthermore, users frequently requested information on protecting personal information, such as advice on data minimization, strong password creation, and understanding privacy settings across various online platforms.

Analysis of user-submitted help requests revealed a consistent negative emotional tone accompanying technical issues. Specifically, posts detailing compromised accounts, scams, or data privacy concerns frequently expressed feelings of fear, frustration, and helplessness. The prevalence of these emotions suggests that effective support requires not only technical assistance but also empathetic communication to address the user’s emotional state. Ignoring these emotional cues can exacerbate user distress and hinder successful resolution of the underlying issue, reinforcing the need for support agents to prioritize compassionate and understanding interactions.

Help-seeking volume is consistently high, exceeding 100,000 posts per month. Within this data, scam- and fraud-related inquiries are the most prevalent concern, accounting for 28% of all posts. This is the single largest category of assistance requested, highlighting the significant impact of fraudulent activities on the user base and the associated need for targeted support and preventative measures.
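A category share like the one quoted above is simply the count of posts with a given label divided by the total. A minimal sketch follows, assuming each post has already been assigned one category label. The label names and counts are invented and deliberately do not match the study's figures.

```python
from collections import Counter

def category_shares(labels):
    """Return (category, percentage of all labels) pairs, largest category first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return [(cat, 100 * n / total) for cat, n in counts.most_common()]

# Hypothetical labels for ten posts; not the study's data.
labels = ["scam"] * 4 + ["account"] * 3 + ["privacy"] * 2 + ["other"]

shares = category_shares(labels)
for cat, pct in shares:
    print(f"{cat}: {pct:.0f}%")
```

Because the shares are computed over all labeled posts, they only sum to 100% when every post carries exactly one label; posts with multiple themes would need a different denominator.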

From Reaction to Prevention: Building a More Resilient Online Community

Through the application of topic extraction techniques, help-seeking posts on social media platforms were systematically categorized, revealing prevalent user needs concerning account management, privacy settings, and the avoidance of online scams. This granular understanding facilitated the creation of precisely targeted resources – from tutorials on utilizing platform security features to informative guides on identifying phishing attempts – directly addressing the most common challenges users face. By moving beyond generalized support materials, this approach ensures individuals receive relevant and actionable guidance, enhancing their ability to navigate the digital landscape safely and confidently. The resulting categorized data serves as a dynamic knowledge base, constantly refined by emerging trends in user inquiries and allowing for the continuous improvement of preventative educational content.

Analyzing aggregated help-seeking data reveals patterns indicative of evolving online threats before they become widespread problems. This proactive capability allows for the swift development of targeted educational resources, such as easily digestible guides and interactive tutorials, designed to preemptively address emerging scams and security vulnerabilities. By shifting from simply reacting to user reports, this data-driven methodology facilitates the creation of preventative materials that empower individuals with the knowledge to navigate the digital landscape more safely and confidently. The result is a move toward a more resilient online community, better equipped to recognize and avoid potential harm, and ultimately fostering a heightened sense of digital security for all.

A shift towards preventative digital support necessitates a deep understanding of user challenges beyond simply responding to reported issues. Current systems often address problems after they’ve occurred, leaving individuals vulnerable to ongoing threats. However, by meticulously analyzing patterns in help-seeking behavior, platforms can anticipate emerging needs and proactively deliver educational resources. This approach moves beyond damage control, fostering a more secure online environment where users are empowered with the knowledge to recognize and avoid potential risks. Such preventative guidance not only reduces the burden on reactive support systems but also cultivates a more resilient user base capable of navigating the complexities of the digital landscape with greater confidence and security.

Recent analyses of social media interactions reveal a significant surge – a 66% increase year-over-year – in individuals actively seeking digital assistance, underscoring a growing need for proactive online safety measures. This heightened help-seeking behavior presents a unique opportunity to leverage the wealth of data generated on these platforms, not simply to respond to crises as they occur, but to anticipate and address emerging threats before they impact a wider audience. By systematically analyzing these requests, researchers can pinpoint prevalent issues, identify vulnerable user groups, and develop targeted educational resources and preventative tools. The potential extends beyond immediate problem-solving; it offers a pathway to building a more secure digital ecosystem where timely assistance and preventative guidance are readily available, ultimately empowering individuals to navigate the online world with greater confidence and resilience.

The study meticulously dissects the chaos of online help requests – over three million Reddit posts, no less. It’s a predictable deluge, really. The research highlights a surge in concerns about scams, a problem as old as commerce itself, merely rebranded for the digital age. As a saying attributed to Andrey Kolmogorov has it, “The most important things are often the ones we cannot measure.” This feels apt; the sheer volume of pleas for help reveals a growing anxiety, but quantifying the impact of those anxieties – the real-world damage from these scams – remains elusive. The report dutifully catalogs the questions, but one suspects production environments are already overflowing with the consequences, proving once again that everything new is just the old thing with worse docs.

What’s Next?

The analysis of Reddit data, while revealing a surge in privacy and security concerns – particularly regarding scams – merely quantifies a problem that has always existed. The observation that generalized advice often falls short is not a revelation; it’s the predictable outcome of applying broad solutions to nuanced individual vulnerabilities. One anticipates the inevitable refinement of ‘threat modeling as a service,’ a concept that, while presented as novel, echoes countless iterations of security consulting repackaged for the digital age.

Future research will undoubtedly explore the application of Large Language Models to automate help-seeking responses. The promise of personalized advice, generated at scale, is tempting. Yet, one suspects that these systems will primarily excel at generating plausible-sounding, but ultimately ineffective, reassurance. The difficulty lies not in processing the data, but in truly understanding the context of individual risk – something even human experts struggle with.

The real challenge, predictably, will not be technical. It will be behavioral. Understanding why individuals repeatedly fall prey to scams, despite readily available warnings, requires a level of psychological insight that data analysis alone cannot provide. The field will likely circle back to the fundamental problem: people are remarkably consistent in their inconsistencies, and no amount of scalable security can fully account for that.

Original article: https://arxiv.org/pdf/2601.11398.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-21 05:16