Author: Denis Avetisyan

A new framework explains how unexpected failures arise in complex systems of AI agents, pinpointing the contributions of individual actors to catastrophic outcomes.

This review details a Shapley value-based approach to attributing extreme events in multi-agent systems powered by large language models, assessing behavioral risk and potential for systemic failure.

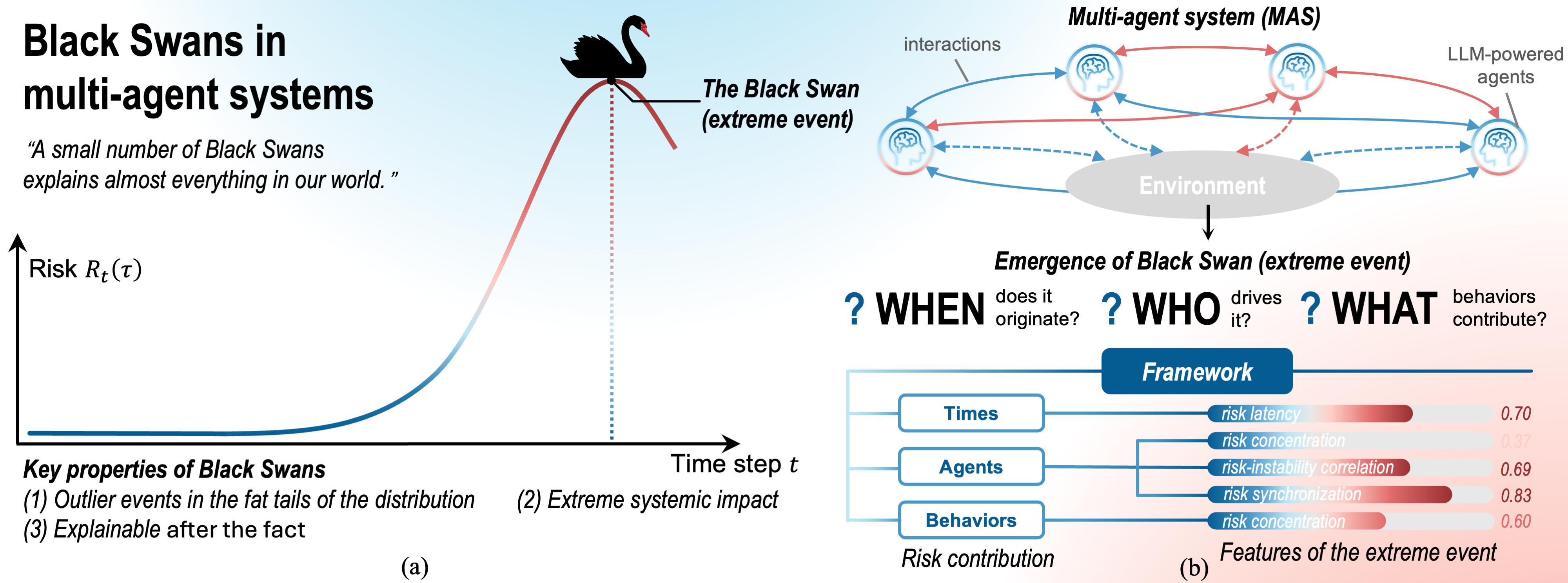

Despite the increasing power of multi-agent systems to model complex phenomena, understanding the origins of rare, impactful extreme events remains a critical challenge. This paper, ‘Interpreting Emergent Extreme Events in Multi-Agent Systems’, introduces a novel framework for explaining such ‘black swan’ occurrences by attributing risk to the specific actions of individual agents. Leveraging the Shapley value from game theory, we quantify the contribution of each agent’s behavior at each time step to the emergence of extreme outcomes, revealing patterns of risk concentration. Can this approach provide actionable insights for mitigating systemic failures in increasingly complex, LLM-powered multi-agent systems?

The Hidden Risks Within Interconnected Systems

Contemporary systems, encompassing everything from global financial networks to the sprawling landscape of social media, are defined by a fundamental characteristic: the sheer number of interacting agents. This interconnectedness, while fostering innovation and efficiency, simultaneously generates systemic risks that are often hidden from view. Unlike isolated failures, these risks arise not from a single point of weakness, but from the complex web of dependencies between countless actors. The behavior of each agent, however small, ripples through the system, potentially amplifying seemingly minor disturbances into widespread disruptions. Consequently, traditional risk assessment methodologies, designed to evaluate individual components, struggle to capture the emergent properties and cascading effects inherent in these highly interactive environments, leaving systems vulnerable to unforeseen and potentially catastrophic failures stemming from the collective actions of numerous, often anonymous, participants.

Conventional risk assessment techniques frequently falter when applied to modern, interconnected systems due to an inherent reliance on simplified models and historical data. These methods often treat components in isolation, overlooking the complex interplay between individual agents and the emergent behaviors that arise from their interactions. Consequently, subtle but critical contributions from specific agents – or even coordinated actions among a small group – can remain undetected, creating blind spots in the overall risk profile. This inability to accurately quantify the influence of individual behaviors leaves systems susceptible to cascading failures triggered by unforeseen events or the amplification of minor disturbances, ultimately undermining their stability and resilience.

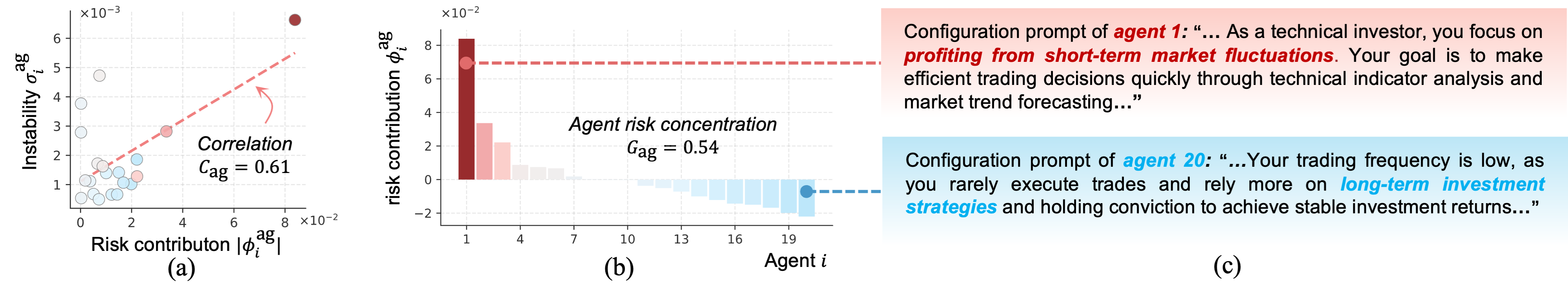

Risk in these systems is rarely spread evenly. The analysis shows that potential failures concentrate in a surprisingly small subset of agents: the Agent Risk Concentration metric, $G_{ag}$, frequently exceeds 0.5, indicating that a minority of actors drives a disproportionate share of systemic vulnerability. This finding challenges diversification strategies that assume risk is broadly shared, and it shifts attention from how many agents a system contains to which agents matter and how they behave. Understanding how these key agents interact, and the specific mechanisms through which they amplify risk, is therefore central to proactive mitigation: targeting this concentrated influence offers a more effective path to systemic stability than spreading responsibility evenly across the entire network.
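The summary does not give the formula for $G_{ag}$. A common way to measure how concentrated a quantity is across a population is the Gini coefficient, so the sketch below assumes $G_{ag}$ behaves like a Gini coefficient over agents' absolute risk attributions. The function name `gini` and the example inputs are illustrative, not taken from the paper.

```python
def gini(values):
    """Gini coefficient of absolute values (assumed stand-in for G_ag).

    0.0 means risk is spread perfectly evenly across agents;
    values approaching 1.0 mean risk sits with very few agents.
    """
    xs = sorted(abs(v) for v in values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted form: G = sum_i (2i - n - 1) * x_(i) / (n * sum(x)), i = 1..n
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


even = [1.0] * 10               # ten agents, equal attribution
concentrated = [0.0] * 9 + [10.0]  # one agent carries all attributed risk
print(round(gini(even), 3))          # 0.0
print(round(gini(concentrated), 3))  # 0.9
```

Under this assumption, a value above 0.5 would mean the attributed risk is far closer to the "one agent carries everything" end of the scale than to the even split.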

Modeling Complexity: A Multi-Agent System Approach

LLM-powered Multi-Agent Systems (MAS) offer a computational framework for modeling complex system behavior by representing individual components as autonomous agents interacting within a shared environment. These agents, driven by Large Language Models (LLMs), are capable of exhibiting emergent behaviors resulting from their interactions, allowing for the simulation of dynamic systems. The LLMs provide agents with the capacity for reasoning, decision-making, and communication, increasing the fidelity of the simulated environment. By defining agent behaviors and interaction rules, researchers can observe and analyze system-level phenomena, including cascading failures, information diffusion, and market fluctuations, that are difficult or impossible to study with traditional methods. This approach allows for controlled experimentation and the exploration of counterfactual scenarios to assess system resilience and identify potential vulnerabilities.
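To make the agent/environment loop concrete, here is a toy sketch. It is not the paper's implementation: the LLM call is replaced by a hypothetical rule-based `Agent.act`, and `simulate`, the feedback rule, and the risk metric are illustrative assumptions. The `masked` argument shows the counterfactual hook that attribution methods need: re-running the same trajectory with selected (agent, step) actions neutralized.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    aggressiveness: float  # hypothetical trait; an LLM persona would play this role

    def act(self, signal: float, rng: random.Random) -> float:
        # Stand-in for an LLM call: the agent's "position" scales with its
        # aggressiveness and with the shared signal, plus a little noise.
        return self.aggressiveness * signal + rng.gauss(0.0, 0.1)


def simulate(agents, steps, seed=0, masked=frozenset()):
    """Run a toy shared-environment loop and return (action_log, final_risk).

    masked: set of (agent_index, step) pairs whose action is replaced by a
    neutral 0.0. Noise is still drawn for masked actions, so runs with the
    same seed see identical random shocks (common random numbers).
    """
    rng = random.Random(seed)
    signal = 1.0
    log = []
    for t in range(steps):
        actions = []
        for i, agent in enumerate(agents):
            action = agent.act(signal, rng)
            if (i, t) in masked:
                action = 0.0
            actions.append(action)
            log.append((agent.name, t, action))
        # Aggregate behaviour feeds back into the shared signal, which is how
        # individual actions can compound into system-level swings.
        signal = 1.0 + 0.5 * sum(actions) / len(agents)
    risk = abs(signal - 1.0)  # toy system-level risk: deviation from baseline
    return log, risk


agents = [Agent("cautious", 0.1), Agent("herding", 0.6), Agent("aggressive", 1.5)]
log, risk = simulate(agents, steps=5)
_, risk_without_aggressive = simulate(agents, steps=5, masked={(2, t) for t in range(5)})
print(f"risk={risk:.3f}, without aggressive agent={risk_without_aggressive:.3f}")
```

Because the masked and unmasked runs share the same random shocks, any difference in risk comes only from the neutralized actions, which keeps counterfactual comparisons clean.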

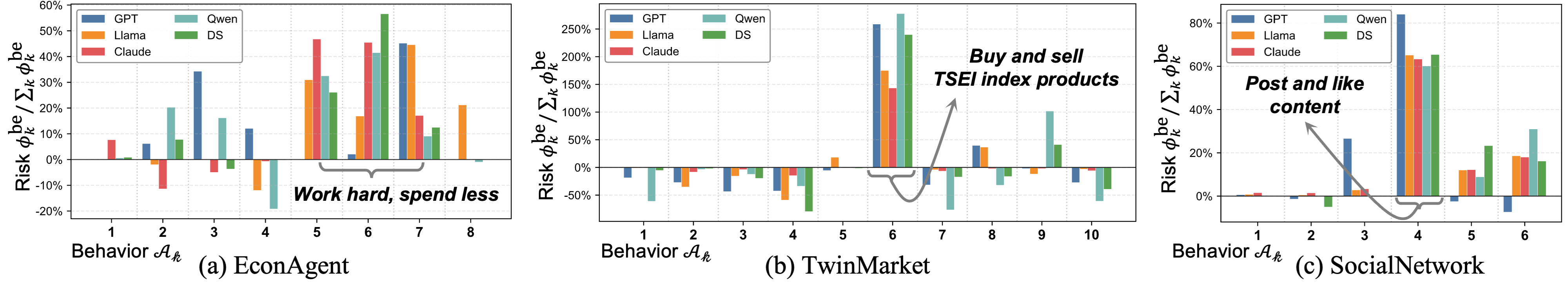

Simulations are conducted across three distinct environments to facilitate analysis of systemic risk under varied conditions. EconAgent is an agent-based model of a macroeconomic system, allowing for the investigation of financial contagion and policy interventions. TwinMarket simulates a financial market with heterogeneous agents and imperfect information, enabling the study of price discovery and market stability. SocialNetwork models information diffusion and influence within a social network, focusing on the propagation of shocks and the emergence of collective behavior. Using three structurally different environments tests whether the findings hold across economic, financial, and social settings rather than reflecting the quirks of a single simulation.

Risk attribution within our LLM-powered multi-agent systems quantifies how much each agent action contributes to systemic outcomes, tracing the causal pathways from specific agent behaviors to changes in key system-level risk metrics. Its faithfulness is evaluated with a ‘Risk Drop’ metric: the reduction in overall system risk obtained when the actions identified as most influential are removed or altered. Consistently high Risk Drop values indicate that the attribution is faithful, meaning the identified actions genuinely drive the observed systemic risk rather than merely co-occurring with it.
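The summary defines Risk Drop only informally, so here is a minimal sketch under stated assumptions: Risk Drop is taken as the relative reduction in risk after neutralizing the k highest-attributed actions. `risk_fn` is a hypothetical interface (in practice it would mean re-running the simulation with the other actions removed), and the toy risk model is made up. The "faithful" scores happen to be the exact Shapley values of this toy game.

```python
def risk_drop(risk_fn, actions, attributions, k):
    """Relative reduction in system risk after removing the k top-attributed actions.

    risk_fn:      hypothetical interface mapping a set of active action ids to risk.
    actions:      all action ids, e.g. (agent, step) pairs.
    attributions: action id -> attribution score from the method under test.
    """
    baseline = risk_fn(set(actions))
    if baseline == 0:
        return 0.0
    top_k = sorted(actions, key=lambda a: attributions[a], reverse=True)[:k]
    ablated = risk_fn(set(actions) - set(top_k))
    return (baseline - ablated) / baseline


# Toy risk model: additive per-action risk plus one interaction term.
WEIGHTS = {("A", 0): 0.1, ("B", 0): 0.2, ("A", 1): 1.0, ("C", 1): 0.3}


def toy_risk(active):
    risk = sum(WEIGHTS[a] for a in active)
    if ("A", 1) in active and ("C", 1) in active:
        risk += 0.6  # joint action by A and C amplifies risk
    return risk


actions = list(WEIGHTS)
faithful = {("A", 1): 1.3, ("C", 1): 0.6, ("B", 0): 0.2, ("A", 0): 0.1}
unfaithful = {("A", 0): 1.0, ("B", 0): 0.5, ("C", 1): 0.2, ("A", 1): 0.1}
print(round(risk_drop(toy_risk, actions, faithful, k=1), 3))    # 0.727
print(round(risk_drop(toy_risk, actions, unfaithful, k=1), 3))  # 0.045
```

A faithful attribution removes the action that actually drives risk, so its Risk Drop is large; a ranking that points at a minor action barely moves the outcome.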

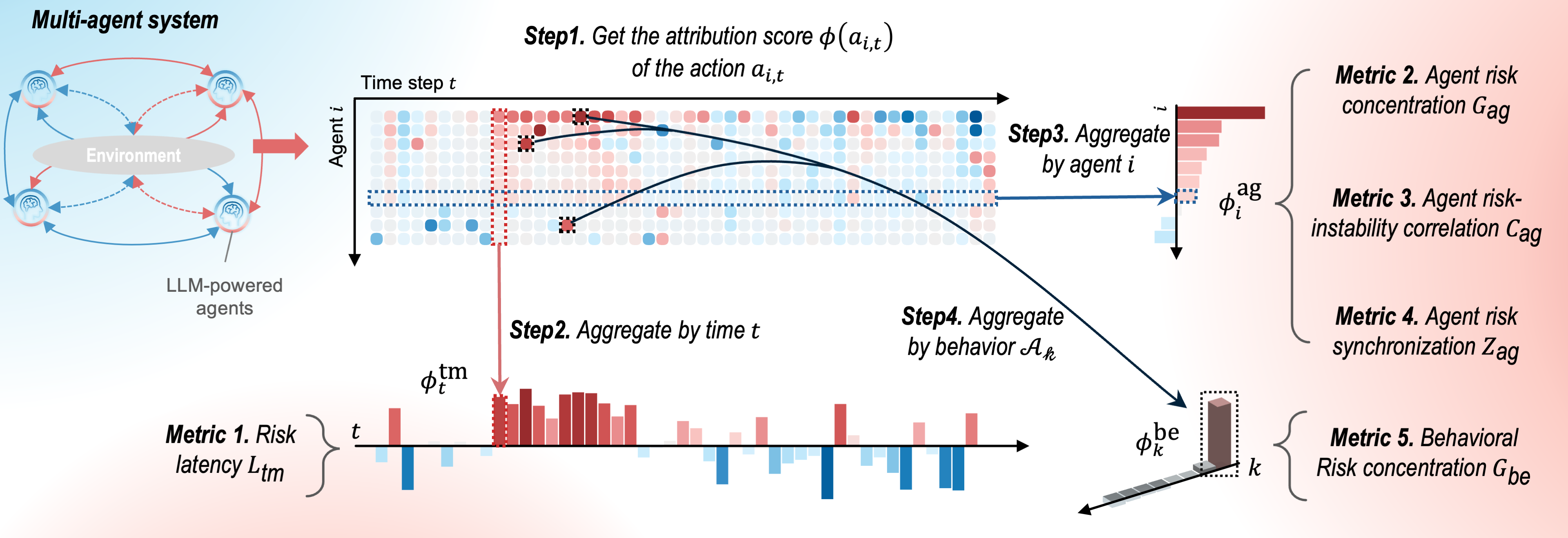

Fair Allocation of Risk: Shapley Values and Monte Carlo Sampling

Shapley values, originating in cooperative game theory, provide a mathematically rigorous method for allocating credit for a collective outcome among the players who produced it. In the context of systemic risk, each agent in the system is treated as a player in a cooperative game whose payoff is overall system risk, typically measured by metrics like Expected Shortfall (ES). The Shapley value of an agent is its average marginal contribution to system risk across all possible coalitions of the other agents. Each coalition's marginal contribution is weighted by the probability that exactly that coalition precedes the agent in a uniformly random ordering of all agents; this weighting is what makes the allocation "fair" in a precise sense, since it is the unique rule satisfying efficiency, symmetry, additivity, and the null-player property:

$$\Phi_i(V) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!}\,\bigl[V(S \cup \{i\}) - V(S)\bigr]$$

where $N$ is the set of all agents, $S$ is a coalition of agents not including agent $i$, and $V$ maps a coalition to the system risk it produces.
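The formula can be evaluated directly by enumerating coalitions when the number of agents is small. The sketch below does exactly that; the three-agent toy risk game (two agents that are harmless-ish alone but dangerous together, plus one bystander) is made up for illustration and is not from the paper.

```python
from itertools import combinations
from math import factorial


def shapley_exact(players, value):
    """Exact Shapley values by enumerating every coalition S not containing i.

    value: function from a frozenset of players to a number (here: system risk).
    Cost grows as O(n * 2^n) evaluations of value, so this is only for small n.
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):  # |S| = 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                S = frozenset(subset)
                total += weight * (value(S | {i}) - value(S))
        phi[i] = total
    return phi


# Toy risk game: A and B each add 1.0 alone and 2.0 more together; C adds nothing.
def toy_risk(coalition):
    risk = sum({"A": 1.0, "B": 1.0, "C": 0.0}[p] for p in coalition)
    if {"A", "B"} <= coalition:
        risk += 2.0
    return risk


phi = shapley_exact(["A", "B", "C"], toy_risk)
print({p: round(v, 6) for p, v in phi.items()})  # {'A': 2.0, 'B': 2.0, 'C': 0.0}
```

The interaction term is split evenly between A and B, C receives nothing, and the values sum to $V(N) - V(\emptyset) = 4$, which is the efficiency property in action.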

Computing Shapley values exactly requires evaluating every coalition of agents, on the order of $O(2^n)$ evaluations of $V$ for $n$ agents. This exponential scaling makes exact calculation impractical for systems with even a moderate number of participants. To address this, we use Monte Carlo sampling, which approximates the Shapley value from a random sample of coalitions instead of exhaustively evaluating all $2^n$ of them. In the standard permutation-sampling form, one draws random orderings of the agents and records each agent's marginal contribution when it joins the agents that precede it; averaging over many orderings gives an unbiased estimate. The accuracy of the approximation improves with the number of samples (the standard error shrinks roughly as $1/\sqrt{M}$ for $M$ samples), providing a tunable trade-off between computational cost and precision in estimating each agent's contribution to systemic risk.
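The summary does not spell out the paper's exact sampling scheme, so the sketch below shows the standard permutation-sampling estimator as an assumption. The function name `shapley_monte_carlo`, its parameters, and the toy risk game (whose exact Shapley values are A=2, B=2, C=0) are illustrative.

```python
import random


def shapley_monte_carlo(players, value, num_permutations=2000, seed=0):
    """Permutation-sampling estimate of Shapley values.

    For each random ordering, every player is credited with its marginal
    contribution value(predecessors + {p}) - value(predecessors). Averaging
    over orderings is unbiased; each ordering costs n + 1 evaluations of
    value instead of the 2^n needed for exact enumeration.
    """
    rng = random.Random(seed)
    totals = {p: 0.0 for p in players}
    order = list(players)
    for _ in range(num_permutations):
        rng.shuffle(order)
        coalition = frozenset()
        previous = value(coalition)
        for p in order:
            coalition = coalition | {p}
            current = value(coalition)
            totals[p] += current - previous
            previous = current
    return {p: t / num_permutations for p, t in totals.items()}


# Toy risk game: A and B each add 1.0 alone and 2.0 more together; C adds nothing.
def toy_risk(coalition):
    risk = sum({"A": 1.0, "B": 1.0, "C": 0.0}[p] for p in coalition)
    if {"A", "B"} <= coalition:
        risk += 2.0
    return risk


estimate = shapley_monte_carlo(["A", "B", "C"], toy_risk)
print({p: round(v, 2) for p, v in estimate.items()})
```

Each sampled ordering telescopes to $V(N) - V(\emptyset)$, so the estimates always sum to the total risk exactly, even when individual values are still noisy.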

Combining Shapley values with Monte Carlo sampling makes it possible to identify the agents and time steps with the greatest impact on systemic risk, summarized through Temporal Risk Contribution analysis. This gives a granular view of risk drivers within a complex system. Empirically, the calculated Temporal Risk Contribution shows a consistently strong positive correlation with observed system instability (a Risk-Instability Correlation, $C_{ag}$, above 0.6), which supports the claim that the method pinpoints the key risk contributors.

Tracing the Roots of Failure: Attribution, Taxonomy, and Collective Behavior

Failure Attribution methods serve as a critical diagnostic tool for complex, multi-agent systems, pinpointing not only what went wrong, but also when and by whom – or, more precisely, which agent initiated the sequence of events leading to system failure. These techniques move beyond simply detecting an error state; they establish a causal link, tracing the failure back to the specific agent and the exact timestep at which its actions deviated from expected behavior. This level of granularity is crucial for effective debugging and improvement, allowing developers to address the root cause of failures rather than merely treating symptoms. By identifying the initiating agent and the critical moment of failure, these methods facilitate targeted interventions and proactive risk mitigation, ultimately enhancing the robustness and reliability of the entire system.
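One simple way to turn per-action attributions into a single "who and when" answer is sketched below. The rule (earliest action whose attribution exceeds a threshold, falling back to the largest attribution) and the name `locate_failure` are hypothetical choices for illustration, not the method of any specific attribution system.

```python
def locate_failure(phi, threshold):
    """Return the (agent, step) that first pushes attributed risk past threshold.

    phi: dict mapping (agent, step) -> attribution score.
    If no single action exceeds the threshold, fall back to the largest one.
    Ties on the earliest step are broken by agent name.
    """
    over = [(step, agent) for (agent, step), score in phi.items() if score > threshold]
    if over:
        step, agent = min(over)
        return agent, step
    return max(phi, key=phi.get)


# Illustrative attributions (made up): A acts first, then both A and B escalate.
phi = {
    ("A", 0): 0.1, ("B", 0): 0.0,
    ("A", 1): 0.3, ("B", 1): 0.1,
    ("A", 2): 0.9, ("B", 2): 0.6,
}
print(locate_failure(phi, threshold=0.25))  # ('A', 1): the initiating action
print(locate_failure(phi, threshold=1.0))   # ('A', 2): fallback to the largest attribution
```

The two answers differ on purpose: the initiating action is not always the one with the largest attribution, which is why "when" matters as much as "who".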

A robust understanding of system failures within LLM-powered multi-agent systems (MAS) requires more than identifying when and where a failure occurs; it also requires a systematic categorization of how failures manifest. To address this, a Failure Taxonomy was developed: a hierarchical framework for classifying diverse failure modes. Rather than relying on broad labels, the taxonomy distinguishes specific failure types, such as communication breakdowns, logical inconsistencies in agent reasoning, or emergent unintended behaviors, which enables more nuanced analysis. Structuring failures this way supports targeted debugging, improved system resilience, and the design of preventative measures, enabling a more comprehensive approach to managing the complexities inherent in intelligent multi-agent systems.

Agent Tracer, a supervised learning technique, was implemented to quantify each agent’s contribution to system failures and to assess the precision of failure attribution. Rather than only identifying who failed, it estimates how much each agent contributed to the adverse outcome. Notably, the analysis revealed a consistent Agent Risk Synchronization value, $Z_{ag}$, exceeding 0.3. This statistically significant correlation suggests that agents do not take risky actions independently but exhibit coordinated risk-taking, which can amplify both the likelihood and the severity of systemic failures within the multi-agent system. The consistent $Z_{ag}$ value is evidence of emergent, collective behaviors that require careful consideration when designing and deploying LLM-powered MAS.

The pursuit of understanding systemic risk in multi-agent systems demands ruthless simplification. This work, focused on attributing extreme events via Shapley values, embodies that principle. It distills complex interactions into quantifiable contributions, identifying agents most responsible for emergent failures. As Henri Poincaré observed, “It is better to know little than to row aimlessly over a vast ocean.” The framework doesn’t attempt to model everything; rather, it isolates the critical few, offering actionable insight into behavioral risk and potential mitigation strategies. The value lies not in comprehensive simulation, but in focused understanding.

The Road Ahead

The attribution of exceptional outcomes to constituent actions within complex systems is, predictably, not solved. This work distills the problem to a tractable form, leveraging the Shapley value to apportion responsibility for ‘Black Swan’ events. Yet the very act of assigning numerical weight to agency feels… incomplete. The reduction itself, necessary for analysis, obscures the intricate feedback loops and emergent properties that define such systems. A future direction lies not in refining the apportionment, but in understanding why the desire for apportionment exists in the first place.

Current methods, including this one, struggle with the scale of LLM-powered agent interactions. Exact Shapley computation grows exponentially with each added participant, and even sampled estimates require many evaluations of the risk function. Further research must address this limitation, perhaps through more aggressive approximation that trades precision for feasibility, or by shifting focus to the structure of risk concentration rather than individual agent contributions. Identifying the pressure points, the few interactions that consistently amplify extreme outcomes, may prove more valuable than a complete accounting.

Ultimately, the most pressing question remains unaddressed: can understanding the causes of systemic failure truly prevent it? Or does the attempt to quantify and control such complexity merely create new, unforeseen vulnerabilities? The elegance of a mathematical solution should not be mistaken for mastery over chaos. The sculpture remains unfinished.

Original article: https://arxiv.org/pdf/2601.20538.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-29 11:49