Author: Denis Avetisyan

A new probabilistic forecasting framework improves power grid resilience by accurately predicting extreme energy demand, even with limited data.

Adaptive Conditional Neural Processes provide robust uncertainty quantification for few-shot extreme load forecasting under distribution shift.

Accurate electricity demand forecasting is increasingly challenged by extreme weather events driven by climate change, which cause unpredictable load fluctuations. This paper, ‘Resilient Load Forecasting under Climate Change: Adaptive Conditional Neural Processes for Few-Shot Extreme Load Forecasting’, addresses this need by introducing AdaCNP, a probabilistic forecasting model that uses adaptive weighting within a Conditional Neural Process framework to improve predictions when extreme-load data are scarce and irregular. Experimental results show that AdaCNP reduces forecasting errors by 22% and provides more reliable probabilistic outputs than state-of-the-art baselines. Could this approach pave the way for more robust and resilient power grid operations in the face of escalating climate risks?

The Fragility of Prediction: Forecasting in a Dynamic System

Maintaining a stable electricity grid hinges on accurately predicting power demand, yet conventional forecasting techniques frequently falter during extreme events. These methods rely on historical data and statistical patterns, and they assume a degree of consistency that does not hold during anomalies such as heat waves, cold snaps, or sudden economic disruptions. Such events push demand far from established norms, so models designed to extrapolate from the past become increasingly unreliable. Grid operators then struggle to guarantee supply: underestimating demand can lead to blackouts, while overestimating it wastes resources and money. The difficulty lies not only in predicting that these events will occur but in accounting for their potentially drastic impact on electricity consumption, a challenge that demands new forecasting approaches.

Extreme events pose a particular challenge because they change the statistical landscape of the historical data itself. Conventional forecasting models assume that future demand will follow the same distribution as past demand, an assumption closely tied to stationarity. Weather anomalies such as heatwaves or cold snaps, or unforeseen circumstances such as large-scale industrial failures or sudden population shifts, violate this assumption by introducing a distribution shift: the probabilities of different demand levels change sharply, so historical patterns become unreliable guides. Forecasts built on outdated distributions can underestimate peak loads or overestimate baseline demand, potentially leading to grid instability and service disruptions. Accurate prediction therefore requires methods that adapt to non-stationary conditions and quantify the additional uncertainty that extreme events bring.
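A small numerical illustration of why a shifted distribution breaks historical thresholds. It assumes, purely for illustration, that peak demand is Gaussian, with made-up parameters for a "historical" regime and a "heatwave" regime; neither the numbers nor the Gaussian shape come from the paper.

```python
from statistics import NormalDist

# Hypothetical demand regimes in MW; the Gaussian shape is an illustrative assumption.
historical = NormalDist(mu=1000.0, sigma=80.0)
heatwave = NormalDist(mu=1100.0, sigma=100.0)

# Threshold that historical demand exceeds only 1% of the time.
threshold = historical.inv_cdf(0.99)

p_hist = 1.0 - historical.cdf(threshold)  # 0.01 by construction
p_shift = 1.0 - heatwave.cdf(threshold)   # exceedance probability after the shift

print(f"threshold: {threshold:.0f} MW")
print(f"P(exceed) historical: {p_hist:.3f}, heatwave: {p_shift:.3f}")
```

Under these assumed numbers, a level that history says is exceeded one hour in a hundred is exceeded roughly one hour in five once the distribution shifts, which is exactly the kind of change a model fitted to the historical regime cannot see.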

A fundamental limitation of current electricity load forecasting lies in the inadequate representation of predictive uncertainty. Traditional statistical methods and even many machine learning algorithms often provide point forecasts – single best-guess predictions – without a robust quantification of the potential range of outcomes. This leaves grid operators exposed to substantial risk during extreme events, as the actual demand may deviate significantly from the predicted value. Consequently, insufficient reserves may be allocated, leading to blackouts, or excessive reserves maintained, increasing operational costs. Advanced probabilistic forecasting techniques, capable of generating prediction intervals or full probability distributions, are crucial for effective risk management, enabling operators to proactively prepare for a wider spectrum of possible scenarios and maintain a stable power supply even under duress.
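To make the difference between a point forecast and a probabilistic one concrete, here is a minimal sketch assuming the forecaster outputs a Gaussian predictive distribution (a mean and a standard deviation) for each hour. The helper name `prediction_interval` and the numbers are hypothetical, not taken from the paper.

```python
from statistics import NormalDist

def prediction_interval(mean: float, std: float, coverage: float = 0.9) -> tuple[float, float]:
    """Central prediction interval of a Gaussian predictive distribution."""
    dist = NormalDist(mu=mean, sigma=std)
    tail = (1.0 - coverage) / 2.0  # probability mass left in each tail
    return dist.inv_cdf(tail), dist.inv_cdf(1.0 - tail)

# Hypothetical forecast for one hour: 1000 MW expected, 80 MW predictive std.
point_forecast = 1000.0
low, high = prediction_interval(point_forecast, 80.0, coverage=0.9)
print(f"point: {point_forecast:.0f} MW, 90% interval: [{low:.0f}, {high:.0f}] MW")
# point: 1000 MW, 90% interval: [868, 1132] MW
```

A point forecast reports only 1000 MW; the interval tells an operator that, if the model is well calibrated, demand should fall between roughly 868 and 1132 MW nine times out of ten, which is the information needed to size reserves.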

Neural Processes: Modeling Functions as Probability Distributions

Neural Processes (NPs) are a class of probabilistic models that learn distributions over functions. Unlike traditional methods that predict a single output for a given input, NPs output a probability distribution that quantifies the uncertainty of each prediction. They achieve this by treating the function itself as a random variable, so the model expresses both its belief about the function's value and its confidence in that belief. Formally, an NP defines a distribution over functions $p(f \mid \mathcal{D})$, where $\mathcal{D}$ denotes the observed data. This probabilistic approach supports not only point predictions but also credible intervals and risk assessment, which makes NPs suitable for applications where uncertainty estimation is crucial, such as time series forecasting and reinforcement learning.
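One common way to make this concrete, used in the latent-variable NP literature, introduces a global latent variable $z$ inferred from a context set $C = \{(x_i, y_i)\}$ (which plays the role of the observed data $\mathcal{D}$) and predicts target outputs $y_T$ at target inputs $x_T$ as below. The notation is chosen here for illustration and may differ from the paper's.

$$
p(y_T \mid x_T, C) = \int p(z \mid C) \prod_{t \in T} p(y_t \mid x_t, z)\, dz
$$

Each draw of $z$ corresponds to one plausible function, which is how the model represents uncertainty over whole functions rather than only over individual outputs.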

Conditional Neural Processes (CNPs) are a member of the neural process family that condition the predictive model directly on an observed context set. The context set, a collection of input/output pairs, is encoded into a representation that modulates the model's predictions. Unlike a conventional regression model, whose prediction depends only on the input location and fixed learned parameters, a CNP's predictive distribution changes with the supplied context, which lets it adapt to changing conditions without retraining. Concretely, each context pair is encoded, the encodings are aggregated into a single representation, and a decoder takes this representation together with a target input and outputs the parameters of the predictive distribution, typically a mean and a variance. Because predictions are tied to the observed context, this design can improve performance in non-stationary environments.
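The standard CNP conditioning step can be written compactly. Here $h_\theta$ is the encoder applied to each context pair, $r$ is the aggregated representation, and $g_\phi$ is the decoder; these symbol names are illustrative, and the Gaussian output is the common choice in the CNP literature rather than something confirmed for this paper.

$$
r = \frac{1}{|C|} \sum_{i \in C} h_\theta(x_i, y_i), \qquad
(\mu_t, \sigma_t) = g_\phi(x_t, r), \qquad
p(y_T \mid x_T, C) = \prod_{t \in T} \mathcal{N}\!\left(y_t;\, \mu_t, \sigma_t^2\right)
$$

The mean in the first expression is what makes the model insensitive to the order of context points; it also gives every context point exactly the same weight.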

In load forecasting, CNPs use historical load data as context and learn a mapping from that context to the probability distribution of predicted values. An encoder transforms the observed data into a latent representation, so the trained model acts as a function that maps context sets to distributions over possible output values. As a result, the model can generalize beyond the training data by predicting plausible outputs for new, unseen contexts, and it quantifies the uncertainty of those predictions through the variance of the predictive distribution. This capability is particularly valuable when the underlying relationship varies with contextual factors such as weather, enabling more accurate and reliable forecasts.
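A minimal NumPy sketch of the CNP forward pass just described, assuming a one-dimensional input (such as normalized time of day), a Gaussian output, and arbitrary layer sizes. The weights are random and untrained, so the numbers themselves are meaningless; training would adjust them to maximize the log-likelihood of held-out target points given sampled context sets. None of the class or function names come from the paper.

```python
import numpy as np

def init_mlp(sizes, rng):
    """Random, untrained weights for a small MLP (illustration only)."""
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]

def mlp_forward(params, h):
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers only
    return h

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.log1p(np.exp(-np.abs(x))) + np.maximum(x, 0.0)

class TinyCNP:
    """Minimal CNP forward pass: encode, mean-aggregate, decode to a Gaussian."""

    def __init__(self, x_dim=1, r_dim=16, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        self.encoder = init_mlp([x_dim + 1, hidden, r_dim], rng)  # h(x_i, y_i)
        self.decoder = init_mlp([x_dim + r_dim, hidden, 2], rng)  # g(x_t, r)

    def predict(self, x_ctx, y_ctx, x_tgt):
        # 1. Encode every context pair independently: shape (n_ctx, r_dim).
        r_i = mlp_forward(self.encoder, np.concatenate([x_ctx, y_ctx], axis=-1))
        # 2. Mean aggregation makes the result independent of context order.
        r = r_i.mean(axis=0)
        # 3. Decode each target input together with the shared representation r.
        r_rep = np.broadcast_to(r, (x_tgt.shape[0], r.shape[0]))
        out = mlp_forward(self.decoder, np.concatenate([x_tgt, r_rep], axis=-1))
        mu = out[:, 0]
        sigma = 0.1 + 0.9 * softplus(out[:, 1])  # bounded below, always positive
        return mu, sigma

# Hypothetical context: 24 hourly points (time of day, normalized load).
x_ctx = np.linspace(0.0, 1.0, 24)[:, None]
y_ctx = np.sin(2 * np.pi * x_ctx)
x_tgt = np.array([[0.25], [0.5], [0.75]])

model = TinyCNP()
mu, sigma = model.predict(x_ctx, y_ctx, x_tgt)
print(mu.shape, sigma.shape)  # (3,) (3,)
```

Shuffling the rows of `x_ctx` and `y_ctx` together leaves `mu` and `sigma` unchanged up to floating-point rounding, because the only interaction between context points is the mean.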

AdaCNP: Adapting to the Unforeseen

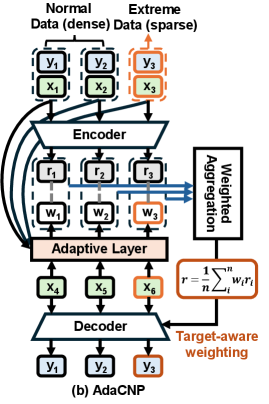

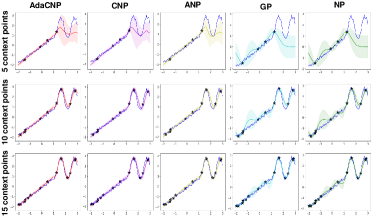

AdaCNP is a forecasting framework built on conditional neural processes (CNPs) and designed to improve predictive performance under extreme or unusual conditions. Standard CNPs aggregate historical context with a fixed rule, typically an unweighted mean over all context points; AdaCNP instead adjusts the weight of each past data point according to its relevance to the current prediction target. This adaptation relies on target-conditioned relevance and context weighting mechanisms, which let the model prioritize the information most likely to matter for the forecast under challenging circumstances. Evaluation using Negative Log-Likelihood (NLL), Mean Squared Error (MSE), and Pinball Loss shows AdaCNP outperforming existing models, including standard CNPs and Neural Processes (NPs), on the PJM and ISO-NE datasets.

The target-conditioned relevance and context weighting mechanism refines how much each historical data point contributes to a forecast. Rather than treating all historical data equally, AdaCNP assigns weights according to each context point's relevance to the specific target being predicted (that is, to the conditions at the time being forecast, since the target value itself is unknown), so the most informative historical instances dominate. By down-weighting irrelevant or noisy history, this process lets AdaCNP adapt to changing conditions and improves prediction accuracy.
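The article does not spell out how AdaCNP scores relevance, so the sketch below swaps in a simple, hypothetical rule to show the general idea: relevance is the negative squared distance between a context point's features and the target's features, turned into weights with a softmax and a temperature. The functions `target_conditioned_weights` and `weighted_representation` are illustrative names, not the paper's learned scoring function.

```python
import numpy as np

def target_conditioned_weights(ctx_feats, tgt_feat, temperature=1.0):
    """Softmax relevance weights of each context point for one target.

    Hypothetical stand-in for a learned relevance score: the negative squared
    Euclidean distance between feature vectors (e.g. weather features).
    """
    scores = -np.sum((ctx_feats - tgt_feat) ** 2, axis=1) / temperature
    scores -= scores.max()  # numerical stability before exponentiating
    w = np.exp(scores)
    return w / w.sum()

def weighted_representation(ctx_embeddings, weights):
    """Relevance-weighted mean of context embeddings (replaces the uniform mean)."""
    return weights @ ctx_embeddings

# Hypothetical context days described by daily maximum temperature (°C).
ctx = np.array([[25.0], [30.0], [38.0]])
tgt = np.array([37.0])  # heatwave day being forecast
w = target_conditioned_weights(ctx, tgt, temperature=10.0)
print(np.round(w, 4))
```

With these assumed numbers, the 37 °C target day draws almost all of its weight from the 38 °C context day, whereas a plain CNP mean would give each of the three days one third. Because each weight travels with its context point, the weighted representation stays invariant to the order of the context.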

AdaCNP is also permutation invariant: its predictions do not depend on the order in which historical context points are presented, because its architecture is designed to eliminate positional dependencies over the context set. Its performance is evaluated with standard probabilistic forecasting metrics, including Negative Log-Likelihood (NLL), which measures how probable the observed values are under the predicted distributions (lower is better), and Mean Squared Error (MSE), the average squared difference between predicted and observed values. These metrics provide a quantitative basis for comparing AdaCNP with other forecasting models and for validating its accuracy gains, particularly under challenging conditions.
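A minimal sketch of the two metrics, assuming Gaussian predictive distributions as is common for CNPs. The paper may average or normalize NLL differently (per point, per series, or per scaled unit), so treat these as the textbook definitions rather than the authors' evaluation code.

```python
import math

def gaussian_nll(y, mu, sigma):
    """Mean negative log-likelihood of observations y under N(mu, sigma^2)."""
    total = 0.0
    for yi, mi, si in zip(y, mu, sigma):
        total += 0.5 * math.log(2.0 * math.pi * si ** 2) + (yi - mi) ** 2 / (2.0 * si ** 2)
    return total / len(y)

def mse(y, mu):
    """Mean squared error of the predictive means."""
    return sum((yi - mi) ** 2 for yi, mi in zip(y, mu)) / len(y)

# Same point error, different stated confidence (hypothetical values).
print(mse([1.0], [0.0]))                            # 1.0
print(round(gaussian_nll([1.0], [0.0], [1.0]), 3))  # 1.419
print(round(gaussian_nll([1.0], [0.0], [0.1]), 3))  # 48.616
```

Both forecasts have the same MSE, but NLL punishes the overconfident one heavily. This is why NLL complements MSE when judging whether a model's uncertainty estimates can be trusted.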

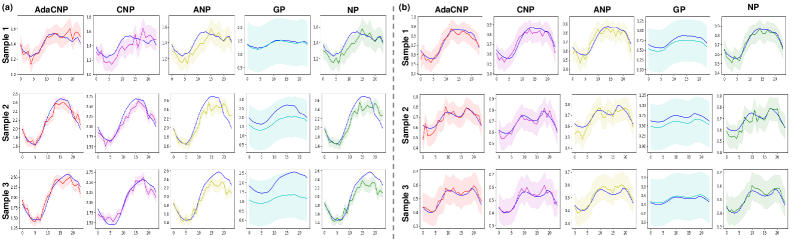

AdaCNP achieves up to a 17% reduction in Mean Squared Error (MSE) relative to Attentive Neural Processes (ANP) and Neural Processes (NP) on the PJM and ISO-NE datasets. This improvement suggests that AdaCNP models complex temporal dependencies and extrapolates more accurately under varying conditions. On these established power load forecasting benchmarks, the results place AdaCNP at the state of the art for time-series prediction tasks where accuracy is critical.

Evaluations on the PJM dataset indicate AdaCNP’s improved forecasting capabilities, specifically yielding a 2.7% reduction in Negative Log-Likelihood (NLL) compared to the Conditional Neural Process (CNP) baseline. Additionally, AdaCNP demonstrates a 1.3% decrease in Pinball Loss, a metric sensitive to quantile prediction accuracy, when benchmarked against CNP on the same dataset. These results quantify AdaCNP’s ability to provide more accurate probabilistic forecasts and better capture the uncertainty inherent in power load prediction for the PJM region.

Evaluation of the AdaCNP framework on the ISO-NE dataset demonstrates significant performance gains; specifically, the Mean Squared Error (MSE) was reduced by over 22% when compared to both Conditional Neural Process (CNP) and Neural Process (NP) models. Beyond MSE, AdaCNP also achieved a 2.4% reduction in Pinball Loss, indicating improved quantile forecasting accuracy on this dataset. These results highlight AdaCNP’s ability to generate more accurate predictions, particularly in complex and volatile conditions represented by the ISO-NE data.

Beyond Prediction: Understanding System Resilience

Anticipating grid instability also depends on understanding phase transitions: abrupt shifts in system behavior that can lead to cascading failures. Traditional forecasting methods often struggle with such nonlinear dynamics, and AdaCNP aims to model these transitions more faithfully. This would support early detection of critical thresholds, the points where small disturbances could escalate into widespread outages. By representing these behaviors accurately, AdaCNP can give grid operators lead time to take preventive measures, such as redistributing load or activating backup systems, bolstering grid resilience and preventing costly disruptions. The model's value lies not only in forecasting load but in anticipating how the system responds to stress, a crucial distinction for maintaining a stable and reliable power supply.

Conventional methods for comparing grid load patterns often fail when those patterns are stretched or compressed in time, which happens frequently as weather and consumer behavior change. Combining AdaCNP with Dynamic Time Warping (DTW) enables robust similarity measurement even under significant temporal distortion. DTW finds the optimal alignment between two time series, ‘warping’ the time axis so that the cumulative distance between aligned points is minimized. This lets AdaCNP identify analogous load scenarios regardless of timing variations, which sharpens its forecasts. Grid operators consequently gain a more reliable picture of potential future loads, supporting better resource allocation and a more stable power supply.
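How DTW is wired into AdaCNP is not described in this summary, so the sketch below shows only the standard DTW distance itself, computed by dynamic programming with absolute difference as the local cost (an assumption; squared difference is also common). The load profiles are hypothetical.

```python
def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping with absolute-difference cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # D[i][j] = minimal cumulative cost of aligning a[:i] with b[:j].
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the best of: repeat b's point, repeat a's point, or advance both.
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]

# Hypothetical normalized load profiles: the same peak, one step later in b.
a = [0, 1, 2, 3, 2, 1, 0, 0]
b = [0, 0, 1, 2, 3, 2, 1, 0]
lockstep = sum(abs(x - y) for x, y in zip(a, b))
print(lockstep, dtw_distance(a, b))  # 6 0.0
```

A point-by-point comparison sees the shifted peak as a large difference, while DTW recognizes the two days as the same shape, which is the property that makes it useful for finding analogous load scenarios.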

A critical advancement in power grid management lies in the ability to not only predict energy load, but also to quantify the uncertainty inherent in those predictions. This framework delivers precisely that, providing grid operators with reliable estimates of potential forecast errors. These estimates are not merely academic; they directly inform decision-making processes, enabling a nuanced balancing of cost and risk. For instance, if a forecast indicates high demand with significant uncertainty, operators can proactively secure additional resources – even if expensive – to avoid potential blackouts. Conversely, if the forecast is confident, they can optimize resource allocation and minimize costs. Ultimately, this capability moves grid management beyond reactive responses to disruptions, fostering a proactive and economically sound approach to maintaining a stable and resilient energy supply.
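One standard way to turn a probabilistic forecast into such a cost/risk decision is the newsvendor rule: if each MW of uncovered demand costs `cost_short` and each MW of unused capacity costs `cost_excess`, expected cost is minimized by committing capacity at the quantile `cost_short / (cost_short + cost_excess)` of the predictive distribution. This is an illustration under a Gaussian-forecast assumption with hypothetical costs, not a procedure described in the paper.

```python
from statistics import NormalDist

def cost_optimal_commitment(mean, std, cost_short, cost_excess):
    """Capacity minimizing expected piecewise-linear cost (newsvendor rule).

    cost_short: cost per MW of demand left uncovered.
    cost_excess: cost per MW of capacity procured but unused.
    """
    critical_ratio = cost_short / (cost_short + cost_excess)
    return NormalDist(mean, std).inv_cdf(critical_ratio)

# Same expected demand, different forecast uncertainty (hypothetical values).
wide = cost_optimal_commitment(1000.0, 80.0, cost_short=9.0, cost_excess=1.0)
narrow = cost_optimal_commitment(1000.0, 20.0, cost_short=9.0, cost_excess=1.0)
print(f"uncertain forecast: {wide:.1f} MW, confident forecast: {narrow:.1f} MW")
```

With shortfalls assumed nine times as costly as surplus, the uncertain forecast calls for about 1102.5 MW and the confident one for about 1025.6 MW, even though both predict the same 1000 MW mean. This is the sense in which calibrated uncertainty, not just accuracy, drives the decision.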

Traditional power grid forecasting often struggles with the growing complexity and variability introduced by renewable energy sources and fluctuating demand, which can lead to imbalances and instability. AdaCNP addresses these limitations through adaptive modeling and robust uncertainty quantification. Rather than extrapolating simplistically, it learns the underlying dynamics of electricity demand, even in the presence of unforeseen events or shifting patterns. Grid operators thus gain a more accurate and reliable predictive tool for proactive adjustments that maintain stability, optimize resource allocation, and support the integration of a larger share of sustainable energy sources, a crucial step toward a more resilient and environmentally responsible energy future.

The pursuit of robust load forecasting, as detailed in this work, acknowledges the inherent instability of complex systems. AdaCNP, with its adaptive weighting mechanism, attempts to mitigate the effects of distribution shift – a natural consequence of climate change – by dynamically adjusting to new data. This echoes Henri Poincaré’s sentiment: “Mathematics is the art of giving reasons.” The framework doesn’t merely predict; it provides a reasoned, probabilistic assessment, quantifying uncertainty in a way that static models cannot. Every version of the forecasting model, informed by increasingly scarce and volatile data, represents a new chapter in understanding power system behavior, and delaying adaptation is indeed a tax on ambition, as the system ages and the stakes grow higher.

What Remains to Be Seen

The pursuit of robust load forecasting, even with frameworks like AdaCNP, merely postpones the inevitable confrontation with systemic decay. The adaptive weighting offers a temporary bulwark against distribution shift – a useful tactic, but one that implicitly acknowledges the non-stationarity inherent in power systems and climate itself. Every abstraction carries the weight of the past; the model’s performance, however impressive in few-shot scenarios, is ultimately tethered to the limitations of its historical data and the assumptions embedded within its architecture.

Future work will undoubtedly focus on extending AdaCNP’s capabilities to incorporate more granular climate data and to model the complex interdependencies between load and increasingly volatile weather patterns. However, a more fundamental question remains: can a purely data-driven approach truly anticipate the unforeseen extreme events – the black swans – that will inevitably test the resilience of any predictive system?

The true measure of success will not be in achieving higher accuracy for known extremes, but in gracefully degrading performance as conditions diverge from the historical record. Only slow change preserves resilience. The longevity of such frameworks hinges not on their capacity to predict the future, but on their ability to adapt to its inherent unpredictability – a subtle, yet crucial, distinction.

Original article: https://arxiv.org/pdf/2602.04609.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-05 22:28