Author: Denis Avetisyan

As artificial intelligence models become smaller and more efficient, the potential for misuse grows, even with limited computing resources.

This review characterizes the emerging safety challenges posed by increasingly accessible, low-compute AI models and their implications for AI governance.

While current AI governance largely focuses on managing risks from high-compute models, a critical gap is emerging as increasingly capable low-compute systems proliferate. The report ‘Small models, big threats: Characterizing safety challenges from low-compute AI models’ details how advances in model compression and agentic workflows allow powerful large language models to run on consumer-grade hardware. The analysis of over 5,000 models reveals a more than 10x decrease in the model size needed to achieve competitive benchmark scores, alongside the demonstrated feasibility of launching harmful digital campaigns, such as disinformation and fraud, using readily available resources. As these threats become both more accessible and more potent, how can policymakers and technologists address the security challenges posed by this new class of AI systems?

The Shifting Landscape of Artificial Intelligence

For years, the promise of truly intelligent language models remained largely confined to the realm of large corporations and well-funded research institutions. The development and operation of powerful Large Language Models (LLMs) historically necessitated substantial computational infrastructure – racks of specialized processors and enormous energy consumption. This high barrier to entry effectively limited access to advanced AI capabilities, hindering widespread innovation and practical application. The sheer cost of training and deploying these models meant that only a select few could afford to explore their potential, creating a significant disparity in the distribution of AI technology and slowing the pace of progress beyond a concentrated group of experts. Consequently, the benefits of LLMs – from automated content creation to sophisticated data analysis – were not readily available to smaller businesses, individual developers, or the general public.

The landscape of artificial intelligence is shifting rapidly, driven by a surge in ‘Low-Compute AI’ capabilities. The report documents a clear trend: the model size needed to reach competitive scores on standard AI benchmarks has decreased by more than a factor of ten within the last year. This is more than incremental improvement. It allows sophisticated Large Language Models to move beyond massive server farms and operate effectively on consumer-grade hardware such as laptops, smartphones, and edge devices. The implications are substantial: broader access to AI technologies and new applications that were previously limited by computational cost and infrastructure requirements, including personalized computing and localized data processing.

Expanding Access, Expanding Risk

The increasing availability of Low-Compute Artificial Intelligence models is substantially reducing the technical skill and resources required for malicious cyber activity. Historically, launching sophisticated attacks – such as large-scale disinformation campaigns or highly targeted phishing – necessitated significant computational power and specialized expertise. However, recent advancements in model optimization and quantization allow comparable functionality to be achieved on readily available consumer hardware, including standard laptops and mobile devices. This democratization of AI tools expands the pool of potential attackers beyond state-sponsored actors and organized crime, enabling individuals with limited technical backgrounds to execute harmful campaigns. The reduced costs associated with deployment also facilitate broader and more frequent attacks, increasing the overall risk to individuals and organizations.

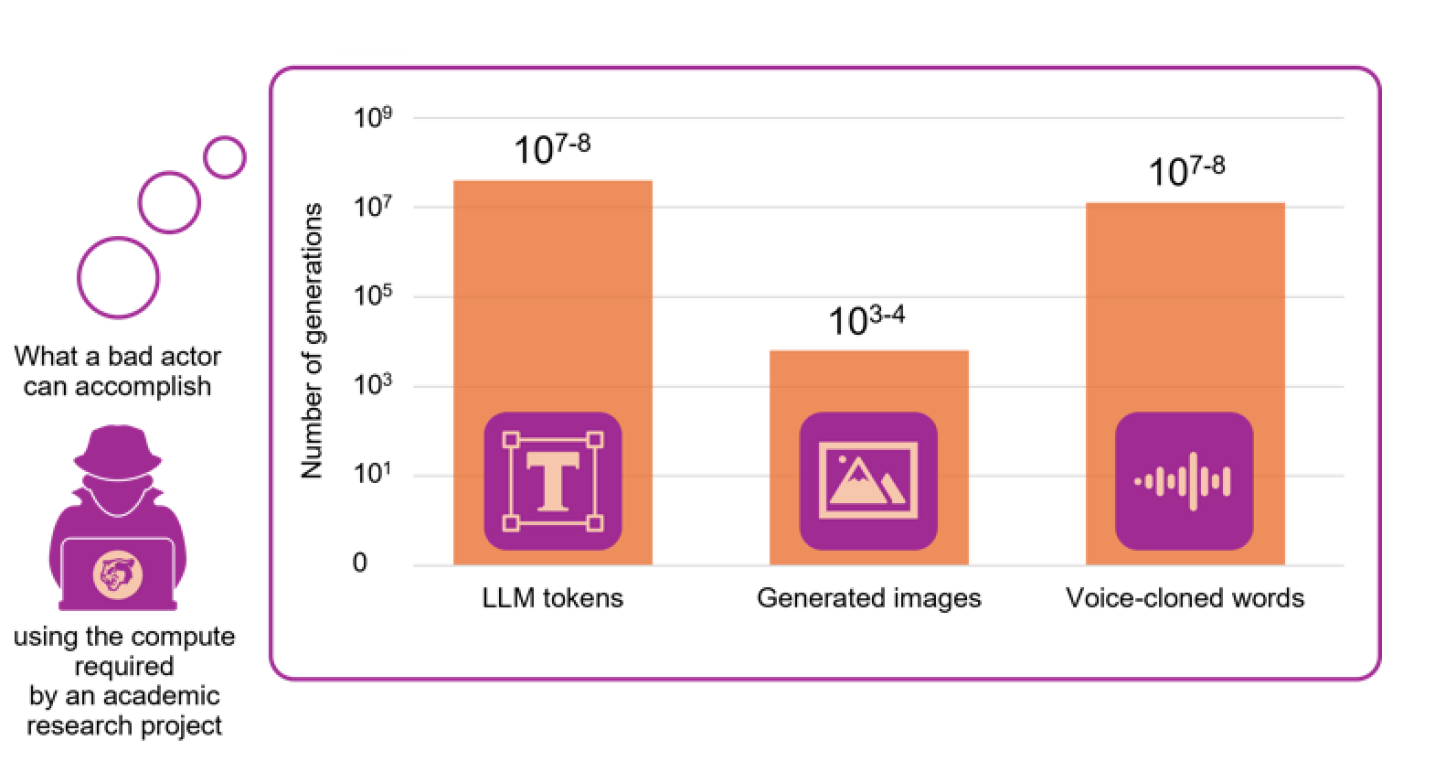

The increasing accessibility of low-compute Artificial Intelligence expands the scope and scale of possible social harm campaigns. These campaigns now encompass a broader range of malicious activities, including the dissemination of disinformation, increasingly sophisticated spear-phishing attacks, and the creation of highly realistic voice clones for fraudulent purposes. The report gives two concrete indicators: its simulations show that a disinformation campaign on the scale of Brexit-related activity, roughly 65,000 Tweets, can be generated with these models, and models used in sextortion schemes have recorded over 100,000 downloads. Together these suggest that malicious actors can conduct impactful campaigns with relatively limited resources and technical expertise, lowering the barrier to entry for widespread social manipulation and fraud.

The HuggingFace LLM Leaderboard demonstrates a consistent trend of decreasing model size without significant performance loss, enabling deployment on increasingly accessible hardware. The report's simulations build on this trend: campaigns that previously required substantial computational resources, including the Brexit-scale disinformation example of approximately 65,000 Tweets, are now executable on consumer-grade devices using these compressed models, with limited technical expertise and infrastructure.

Building Layers of Defense

Model compression techniques are critical for deploying complex machine learning models on resource-constrained devices and networks. These strategies reduce the computational and memory footprint of models through methods like quantization, pruning, knowledge distillation, and low-rank factorization. Quantization reduces the precision of numerical representations, decreasing model size and accelerating inference. Pruning removes redundant or unimportant connections within the neural network. Knowledge distillation transfers learning from a large, accurate model to a smaller, more efficient one. Low-rank factorization decomposes weight matrices into products of smaller matrices, significantly reducing the number of parameters. Applied well, these techniques keep performance at acceptable levels, as measured by metrics like accuracy and latency, while significantly lowering resource demands in low-compute environments such as mobile devices, embedded systems, and edge computing platforms.
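To make the quantization step concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration under simplifying assumptions (one scale factor for the whole list, made-up weight values), not the scheme used by any particular model or library; the function names are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest magnitude onto 127; guard against all zeros.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Made-up example weights, for illustration only.
weights = [0.42, -1.27, 0.003, 0.9, -0.51]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Worst-case rounding error is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now needs 8 bits instead of the 32 bits of a standard float, at the cost of a small, bounded rounding error. Production schemes typically use per-channel or per-group scales, but the trade-off is the same.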

Digital watermarking and content filtering represent distinct but complementary technical approaches to malicious content mitigation. Digital watermarking embeds identifying information directly into digital assets (images, video, audio, or text), allowing for provenance tracking and authentication, and aiding in the identification of altered or illegally distributed material. Content filtering, conversely, operates by analyzing content against predefined rules or machine learning models to identify and block or flag potentially harmful elements. These filters can operate at various levels, including URL blacklists, keyword detection, hash-based matching of known malicious files, and increasingly, semantic analysis to detect subtle forms of abuse or misinformation. While watermarking focuses on asset identification and tracking, content filtering actively prevents access to or dissemination of undesirable material, providing a layered defense against malicious content.
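Two of the simpler filtering layers mentioned above, hash-based matching and keyword detection, can be sketched in a few lines of Python. The blocklist contents and the function name `check_content` are hypothetical and chosen only for illustration; a real filter would load curated lists and add the semantic layer on top.

```python
import hashlib
import re

# Hypothetical blocklists, for illustration only.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known malicious payload").hexdigest()}
BLOCKED_KEYWORDS = {"wire transfer", "gift card"}


def check_content(data: bytes) -> list[str]:
    """Return the reasons the content was flagged; an empty list means it passed."""
    reasons = []

    # Layer 1: exact match against hashes of known malicious files.
    if hashlib.sha256(data).hexdigest() in KNOWN_BAD_HASHES:
        reasons.append("hash match")

    # Layer 2: keyword detection on normalized text (lowercase, single spaces).
    text = data.decode("utf-8", errors="ignore").lower()
    text = re.sub(r"\s+", " ", text)
    for keyword in sorted(BLOCKED_KEYWORDS):
        if keyword in text:
            reasons.append(f"keyword: {keyword}")

    return reasons
```

Hash matching only catches byte-identical copies, and keyword lists are easy to evade with rephrasing, which is why the text describes these as layers rather than a complete defense.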

Proactive Defensive AI systems utilize machine learning algorithms to identify and mitigate potential threats in real-time, before malicious actions are completed. These systems move beyond reactive security measures by employing techniques such as anomaly detection, behavioral analysis, and predictive modeling. Current development focuses on identifying patterns indicative of adversarial attacks, including data poisoning, model evasion, and backdoor triggers. Neutralization strategies range from automated patching and input sanitization to dynamic resource allocation and the deployment of counter-measures designed to disrupt or contain the threat. Performance metrics prioritize low-latency detection and minimal false positive rates, requiring substantial computational resources and ongoing model refinement to maintain effectiveness against evolving attack vectors.
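As a small illustration of the anomaly-detection idea, the sketch below flags observations that deviate strongly from a baseline using a z-score. The traffic numbers, the threshold of 3 standard deviations, and the function name `find_anomalies` are all assumptions made for the example; deployed systems use far richer behavioral features.

```python
import statistics


def find_anomalies(baseline, observations, threshold=3.0):
    """Return indices of observations whose z-score against the baseline exceeds the threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any deviation at all is anomalous.
        return [i for i, x in enumerate(observations) if x != mean]
    return [
        i for i, x in enumerate(observations)
        if abs(x - mean) / stdev > threshold
    ]


# Hypothetical posts-per-hour counts for one account.
baseline = [12, 15, 11, 14, 13, 12, 16, 14]
observations = [13, 15, 240, 12]
flagged = find_anomalies(baseline, observations)
print(flagged)
```

The threshold controls the trade-off the paragraph describes: lowering it catches more attacks but raises the false positive rate.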

Capability-Based Risk Evaluation represents a shift in security assessment away from solely measuring computational scale, such as the total floating-point operations (FLOPs) used to train a model, and toward evaluating the actual potential for harm posed by a model or system. FLOPs-based metrics provide limited insight into a model’s ability to complete a malicious task; a larger model is not inherently a greater threat, and a smaller one is not inherently a lesser one. This evaluation focuses on what a system can accomplish, its capabilities, and then assesses the potential negative consequences of those capabilities being exploited. The approach requires defining specific, measurable harms and developing testing methodologies to determine whether a system has the capacity to inflict them, providing a more nuanced and actionable risk profile than computational cost alone.

Beyond Technology: Systemic Resilience

Despite considerable progress in artificial intelligence safety research, restrictions on the export of specialized hardware, particularly graphics processing units (GPUs), continue to serve as a critical layer of risk mitigation. These powerful processors remain fundamental to training and deploying advanced AI models; limiting their availability to potentially malicious actors or nations can significantly slow the development of capabilities posing substantial societal threats. While not a singular solution, export controls buy valuable time for safety measures to mature and for international norms around responsible AI development to solidify. This approach acknowledges that even the most sophisticated algorithmic safeguards are contingent on the underlying computational resources available, and proactively addresses potential misuse at a foundational level, complementing, rather than replacing, ongoing efforts in AI alignment and robustness.

The European Union’s AI Act uses a ‘Systemic Risk Threshold’ based on cumulative training compute, measured in floating-point operations (FLOPs), to identify potentially dangerous AI models. However, this metric is rapidly becoming outdated as advances in algorithmic efficiency reduce the compute needed to achieve comparable performance. Recent results suggest that a ten-fold reduction in compute requirements is now possible for certain tasks, meaning models that fall well below the threshold, and are therefore treated as lower-risk, could still pose significant challenges. This shift calls for a re-evaluation of the threshold and a move toward risk assessment criteria that consider not only computational scale but also model architecture, data quality, and potential societal impact, so that oversight keeps pace with increasingly capable and efficient AI systems.
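For a sense of scale, the Act presumes systemic risk when cumulative training compute exceeds 10^25 floating-point operations. The sketch below compares that figure with a rough estimate of training compute. The estimate uses the common rule of thumb of about 6 operations per parameter per training token, which is an approximation from the scaling-law literature and not something defined by the Act; the model sizes are hypothetical.

```python
# Threshold for presumed systemic risk under the EU AI Act, in total training FLOPs.
EU_AI_ACT_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(params, tokens):
    """Rough rule of thumb (an assumption): about 6 FLOPs per parameter per token."""
    return 6 * params * tokens


def exceeds_threshold(params, tokens, threshold=EU_AI_ACT_THRESHOLD_FLOPS):
    """True if the estimated training compute is above the threshold."""
    return estimate_training_flops(params, tokens) > threshold


# Hypothetical small model: 7 billion parameters, 2 trillion training tokens.
small = estimate_training_flops(7e9, 2e12)
print(f"small model: {small:.2e} FLOPs, "
      f"{EU_AI_ACT_THRESHOLD_FLOPS / small:.0f}x below the threshold")
```

Under these assumptions a model of that size sits more than two orders of magnitude under the threshold, which illustrates why a compute-only criterion can miss capable small models entirely.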

Achieving genuine resilience against the potential risks of advanced artificial intelligence necessitates a coordinated strategy extending far beyond purely technical solutions. While robust safeguards – such as differential privacy and adversarial training – are vital first steps, their effectiveness is significantly amplified when paired with thoughtfully designed policy frameworks. These frameworks must address issues of accountability, transparency, and responsible innovation, fostering an environment where AI development aligns with societal values. Crucially, resilience isn’t solely the responsibility of developers and policymakers; a well-informed public, capable of critically evaluating AI systems and understanding their implications, forms an indispensable layer of defense. Increased public awareness, coupled with educational initiatives, empowers individuals to participate meaningfully in shaping the future of AI and mitigating potential harms, creating a truly robust and adaptive system.

The accelerating pace of technological advancement necessitates a fundamental shift in how societies approach innovation, moving beyond a singular focus on progress to prioritize holistic well-being. A truly resilient future isn’t built solely on technical safeguards, but on an adaptive strategy that anticipates and mitigates potential societal disruptions. This demands proactive governance frameworks capable of evolving alongside rapidly changing capabilities, coupled with a heightened public awareness of both the benefits and risks inherent in emerging technologies. Rather than reacting to challenges as they arise, a forward-looking approach integrates ethical considerations, social impact assessments, and robust safety measures directly into the innovation process, ensuring that technological development serves – and enhances – the collective good. This requires ongoing dialogue between researchers, policymakers, and the public, fostering a shared understanding and commitment to responsible innovation.

The study highlights a concerning trend: diminishing computational barriers to deploying potentially harmful AI. This echoes Edsger W. Dijkstra’s assertion that “Simplicity is prerequisite for reliability.” While the pursuit of model compression and efficient AI, which reduces the ‘compute power’ needed, offers benefits, it simultaneously lowers the threshold for malicious actors. The research demonstrates how readily available resources now enable the execution of sophisticated campaigns with surprisingly small models. This isn’t merely a technical issue; it’s a systemic one, where the elegance of streamlined AI design inadvertently creates vulnerabilities if not accompanied by robust governance and safety measures. Structure, as the article implies, fundamentally dictates behavior, and a poorly secured, readily accessible structure poses a clear and present danger.

Where Do We Go From Here?

The demonstrated accessibility of potent language models, even on modest hardware, reveals a fundamental truth: sophistication is no longer the primary barrier to impactful – and potentially harmful – deployment. The focus, therefore, must shift from chasing ever-larger parameter counts to understanding the emergent properties of even ‘small’ models when scaled across distributed, low-cost infrastructure. If a design feels clever, it’s probably fragile; attempts to mitigate risk through complexity will likely prove insufficient. A more elegant solution lies in recognizing that the real threat isn’t the models themselves, but the architecture of deployment.

Current safety evaluations largely assume a centralized control point. This assumption is increasingly untenable. Future research should prioritize techniques for robust monitoring of distributed inference, methods for attributing malicious activity, and, crucially, an exploration of incentive structures that discourage widespread, harmful deployments. The question isn’t simply whether a dangerous campaign can be launched, but whether the cost of doing so outweighs the potential benefit to the attacker, a calculation that is rapidly shifting.

Ultimately, the field needs to embrace a systems-level view. Structure dictates behavior; simply patching vulnerabilities in models will only address symptoms. A truly resilient approach demands a deep understanding of the entire ecosystem – from model compression techniques to the economics of compute, and the motivations of those who would deploy these tools. Simplicity always wins in the long run, and a simple, robust architecture is the only sustainable path forward.

Original article: https://arxiv.org/pdf/2601.21365.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-30 14:49