Author: Denis Avetisyan

A new analysis reveals how platforms like Polymarket transform subjective beliefs into seemingly objective forecasts, creating an illusion of neutrality.

This paper demonstrates how prediction markets systematically ‘launder’ complex human beliefs and financial motivations, obscuring power dynamics and creating accountability gaps in information markets.

Despite claims of objectivity, prediction markets often obscure the complex social and financial forces shaping their forecasts. This paper, ‘Prediction Laundering: The Illusion of Neutrality, Transparency, and Governance in Polymarket’, presents a sociotechnical audit of the Polymarket platform, revealing how subjective beliefs and capital asymmetries are systematically transformed into seemingly neutral probabilistic signals. We demonstrate that this process, which we term ‘Prediction Laundering’, actively conceals power dynamics and accountability gaps through stages of algorithmic aggregation and governance erasure. Ultimately, does this sanitized form of synthetic truth genuinely represent collective intelligence, or does it simply reflect the biases of those with the most to gain?

The Illusion of Influence

Prediction markets, intended as mechanisms to distill collective intelligence, frequently exhibit a troubling paradox: they don’t so much aggregate knowledge as amplify pre-existing biases. While the theory suggests a diverse range of perspectives will converge on accurate forecasts, in practice, individuals with greater financial resources – and therefore, a disproportionate influence on market prices – can significantly skew outcomes. This isn’t necessarily a reflection of superior insight, but rather a demonstration of how capital can masquerade as information. Studies reveal that early momentum, driven by well-funded participants, often dominates, creating self-fulfilling prophecies and suppressing the contributions of less-resourced, yet potentially more accurate, predictors. The result is a system that appears to reflect collective wisdom, but is, in reality, a concentrated expression of existing power structures and inherent biases, hindering its potential as a truly democratic forecasting tool.

The celebrated ‘Wisdom of Crowds’ relies on the assumption of diverse, independent perspectives, but this principle falters when participation is unevenly distributed. Research demonstrates that forecasts generated by crowds become significantly skewed when certain demographics, expertise levels, or financial interests dominate the pool of contributors. This imbalance doesn’t simply add noise to the data; it systematically biases predictions, obscuring the signal of genuinely collective intelligence. Consequently, the aggregated forecast may reflect the prevailing opinions of a powerful minority rather than a true representation of the broader collective knowledge, potentially leading to flawed decision-making and reinforcing existing societal inequalities. The illusion of objectivity, therefore, masks a reality where access and influence determine the perceived ‘wisdom’ of the crowd.

Prediction markets, while appearing to offer objective forecasts, often operate as ‘black boxes’ regarding influence and participation. Current platforms frequently lack detailed public records of trader identities, trading volumes per user, or the rationale behind individual predictions. This opacity creates a misleading sense of impartiality; probabilistic outputs aren’t necessarily representative of genuinely collective intelligence, but may instead reflect the disproportionate impact of well-funded players or those with pre-existing biases. Without greater transparency regarding who is driving the forecasts, and how, the perceived objectivity of these markets remains an illusion, potentially leading to flawed decision-making based on skewed or incomplete information. The aggregation of knowledge is only meaningful when the contributions are equitably distributed and readily auditable.

The Mechanics of Prediction Laundering

Prediction Laundering begins with Structural Sanitization, a process of initial curation where incoming predictions or data points are filtered and reshaped to conform to pre-existing frameworks or biases. This is followed by Probabilistic Flattening, which reduces the influence of varied or conflicting motivations behind those predictions by assigning probabilities or weights, effectively obscuring the original diversity of perspectives and potentially diminishing signals from less-represented viewpoints. This two-stage process prepares the data for further manipulation by diminishing identifiable traces of initial biases or specific interests before subsequent stages of laundering can take effect.
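To make Probabilistic Flattening concrete, the following is a minimal sketch rather than the paper's method. It assumes a deliberately simplified model in which the displayed price is the capital-weighted average of traders' beliefs (real market prices emerge from trading, not from a direct average). The `Position` type, the trader names, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Position:
    trader: str    # hypothetical identifier
    belief: float  # trader's subjective probability of the outcome
    stake: float   # capital committed


def capital_weighted_price(positions: List[Position]) -> float:
    """Simplified stand-in for a market price: beliefs weighted by stake."""
    total = sum(p.stake for p in positions)
    if total <= 0:
        raise ValueError("total stake must be positive")
    return sum(p.belief * p.stake for p in positions) / total


def equal_weighted_mean(positions: List[Position]) -> float:
    """What the 'crowd' believes if every trader counts once."""
    return sum(p.belief for p in positions) / len(positions)


# Market A: ten traders with equal stakes who all believe 0.70.
broad = [Position(f"t{i}", 0.70, 100.0) for i in range(10)]

# Market B: one large trader at 0.90 and five small traders at 0.30.
concentrated = [Position("whale", 0.90, 1000.0)] + [
    Position(f"s{i}", 0.30, 100.0) for i in range(5)
]

for name, market in (("broad", broad), ("concentrated", concentrated)):
    print(
        name,
        f"price={capital_weighted_price(market):.2f}",
        f"equal-weighted={equal_weighted_mean(market):.2f}",
    )
# broad price=0.70 equal-weighted=0.70
# concentrated price=0.70 equal-weighted=0.40
```

Under these assumptions both markets display the same 0.70, although in the second one most participants believe the opposite. The single number carries no trace of that difference, which is the flattening the paragraph above describes.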

Architectural Masking involves concealing the financial sources that incentivize specific predictions, often through complex organizational structures or indirect funding mechanisms. This obfuscation prevents scrutiny of potential biases stemming from capital influence. Simultaneously, Epistemic Hardening systematically removes records of dissenting opinions, alternative analyses, or the reasoning behind prior forecast revisions. By presenting a streamlined, unchallenged narrative, this process constructs a perception of objective truth, even when the underlying ‘facts’ are incomplete or selectively presented. The combined effect is the creation of forecasts that appear authoritative, but lack transparency regarding their origins and the debates that shaped them, potentially leading to flawed or misleading conclusions.

Epistemic transmission, the reliable conveyance of knowledge from source to recipient, is significantly impaired by prediction laundering processes. The manipulation of data through stages like structural sanitization and probabilistic flattening introduces bias and obscures the original reasoning behind a forecast. This distortion prevents accurate evaluation of the prediction’s basis and hinders the recipient’s ability to independently verify or challenge it. Consequently, the forecast’s integrity is compromised, as the recipient operates on a potentially flawed understanding, divorced from the initial conditions and supporting evidence that generated it. This breakdown in reliable knowledge transfer ultimately reduces the forecast’s utility and increases the risk of misinformed decisions.

Designing for Algorithmic Accountability

Friction-Positive Design interventions are proposed as a method to mitigate Prediction Laundering and enhance Algorithmic Accountability within prediction markets. These interventions intentionally introduce calculated impedance into the system, making it more difficult to obscure the origin and intent of predictions or wagers. By increasing transparency and traceability, the goal is to discourage manipulative behaviors where actors attempt to falsely inflate or deflate market signals. This approach differs from traditional efficiency-focused design, prioritizing auditability and the ability to identify and address potentially harmful algorithmic behaviors over purely minimizing transaction costs or latency.

Concentration Metrics and Whale Alerts provide transparency into capital distribution within a prediction market. Concentration Metrics quantify the percentage of total capital held by the top n participants, highlighting potential imbalances of influence. Whale Alerts specifically flag unusually large wagers or shifts in position by significant capital holders, notifying other participants of potentially market-moving activity. Complementing these, Multi-Signal Disclosure aggregates and presents multiple data points – including wager size, direction, and historical trading patterns – to infer the underlying intent behind a given wager, moving beyond simple price action and providing insight into strategic motivations.
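As an illustration of how a Concentration Metric and a Whale Alert could be computed, here is a small sketch. The function names, the input shapes, and the 5% alert threshold are assumptions made for this example; the source does not specify them.

```python
from typing import Dict, List, Tuple


def top_n_share(capital_by_trader: Dict[str, float], n: int) -> float:
    """Fraction of total capital held by the n largest participants."""
    if n <= 0:
        raise ValueError("n must be positive")
    total = sum(capital_by_trader.values())
    if total <= 0:
        return 0.0
    largest = sorted(capital_by_trader.values(), reverse=True)[:n]
    return sum(largest) / total


def whale_alerts(
    wagers: List[Tuple[str, float]],
    total_liquidity: float,
    threshold: float = 0.05,  # hypothetical: flag wagers >= 5% of liquidity
) -> List[Tuple[str, float]]:
    """Return the wagers whose size is at least `threshold` of liquidity."""
    if total_liquidity <= 0:
        raise ValueError("total_liquidity must be positive")
    return [w for w in wagers if w[1] / total_liquidity >= threshold]


# Hypothetical holdings and wagers.
capital = {"a": 6000.0, "b": 2000.0, "c": 1000.0, "d": 500.0, "e": 500.0}
print(top_n_share(capital, 1))  # 0.6
print(top_n_share(capital, 2))  # 0.8

wagers = [("a", 900.0), ("d", 50.0), ("b", 600.0)]
print(whale_alerts(wagers, total_liquidity=10_000.0))
# [('a', 900.0), ('b', 600.0)]
```

A fixed threshold is the simplest possible rule. A deployed system would more plausibly scale it to each market's depth, but that choice is outside what the source describes.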

Audit-Ready Resolution Trails maintain a comprehensive, immutable archive of all dispute instances within a prediction market. These trails record detailed information including the initiating wager, the grounds for the dispute, evidence submitted by both parties, the resolution process undertaken, including any adjudicators involved, and the final outcome with its associated rationale. This data facilitates post-hoc analysis of market outcomes, enabling identification of systematic biases, vulnerabilities to manipulation, or deficiencies in market rules. The archived records also serve as a critical component for building user trust by providing transparency into the dispute resolution process and demonstrating accountability for fair outcomes. Furthermore, the structured format of these trails allows for efficient auditing by external parties and supports the development of automated dispute resolution mechanisms.
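One common way to approximate an immutable archive is a hash chain, in which each entry commits to the one before it so that any later edit becomes detectable. The sketch below is an assumption about how such a trail might be structured, not the paper's design. The record fields (`wager_id`, `grounds`, `outcome`, `rationale`) are hypothetical, and a hash chain makes tampering evident rather than impossible.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: Dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the record and its predecessor."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(trail: List[Dict], record: Dict) -> None:
    """Append a dispute record, linking it to the previous entry's hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    trail.append(
        {"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)}
    )


def verify_trail(trail: List[Dict]) -> bool:
    """Recompute every hash; False means some entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in trail:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


trail: List[Dict] = []
append_entry(trail, {"wager_id": "w-1", "grounds": "ambiguous wording",
                     "outcome": "YES", "rationale": "primary source cited"})
append_entry(trail, {"wager_id": "w-2", "grounds": "late evidence",
                     "outcome": "NO", "rationale": "evidence inadmissible"})
print(verify_trail(trail))  # True

trail[0]["record"]["outcome"] = "NO"  # simulate an after-the-fact edit
print(verify_trail(trail))  # False
```

An external auditor only needs the trail itself and the hashing rule to run `verify_trail`, which fits the goal of auditing by external parties.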

Polymarket: A Platform for Observation

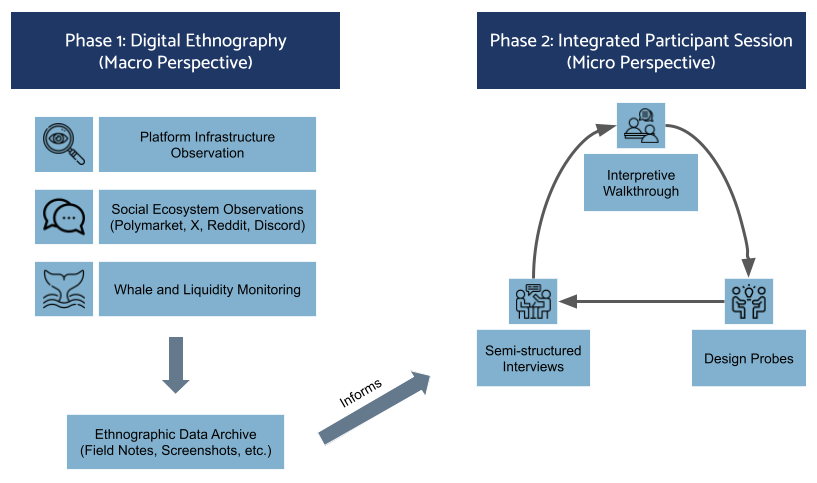

This research employs a mixed-methods approach centered on digital ethnography and semi-structured interviews to comprehensively analyze user behavior and perceptions within the Polymarket platform. Digital ethnography involves observing and documenting user interactions with the platform’s interface and markets, capturing naturally occurring behaviors and patterns. Complementing this, semi-structured interviews allow for in-depth exploration of user motivations, strategies, and understandings of the platform’s mechanisms, providing qualitative data to contextualize observed behaviors and uncover nuanced perspectives on the user experience. Data gathered from both methods will be triangulated to ensure the validity and reliability of research findings regarding user engagement and platform usability.

Polymarket operates on a blockchain infrastructure, specifically utilizing the 0x protocol for decentralized exchange and prediction market functionality. This foundation enables transparent and auditable transactions, recording all wagers and outcomes on a public ledger. Furthermore, Polymarket employs Automated Market Makers (AMMs) which algorithmically determine the pricing of prediction market shares. Unlike traditional order book exchanges, AMMs rely on liquidity pools and mathematical formulas to facilitate trading, reducing the need for centralized intermediaries and enabling continuous market operation. This combination of blockchain technology and AMMs creates a controlled environment for testing design interventions aimed at improving market efficiency and user behavior, as all on-chain interactions are readily available for analysis.
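The text does not say which pricing formula is involved. As an illustration of how an AMM can turn outstanding shares into prices, the sketch below uses the logarithmic market scoring rule (LMSR), a standard textbook market maker. It is an assumption chosen for clarity, not a description of Polymarket's actual mechanism, and the liquidity parameter `b` and the trade sizes are hypothetical.

```python
import math
from typing import List, Tuple


def lmsr_cost(q: List[float], b: float) -> float:
    """LMSR cost function C(q) = b * log(sum_i exp(q_i / b)), computed stably."""
    m = max(x / b for x in q)
    return b * (m + math.log(sum(math.exp(x / b - m) for x in q)))


def lmsr_prices(q: List[float], b: float) -> List[float]:
    """Instantaneous prices p_i = exp(q_i / b) / sum_j exp(q_j / b)."""
    m = max(x / b for x in q)
    weights = [math.exp(x / b - m) for x in q]
    total = sum(weights)
    return [w / total for w in weights]


def buy(q: List[float], b: float, outcome: int, shares: float) -> Tuple[List[float], float]:
    """Return the new share vector and what the trader pays: C(q_new) - C(q)."""
    new_q = list(q)
    new_q[outcome] += shares
    return new_q, lmsr_cost(new_q, b) - lmsr_cost(q, b)


b = 100.0         # hypothetical liquidity parameter
q = [0.0, 0.0]    # outstanding YES / NO shares in a fresh binary market
print([round(p, 3) for p in lmsr_prices(q, b)])  # [0.5, 0.5]

q_after, cost = buy(q, b, outcome=0, shares=100.0)
print([round(p, 3) for p in lmsr_prices(q_after, b)])  # [0.731, 0.269]
print(round(cost, 2))  # 62.01
```

In this toy market a single purchase costing about 62 units moves the displayed probability from 50% to roughly 73%. The price records how much was spent, not how many participants agree, which is why capital asymmetries matter for how the signal is read.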

The research proposes a visualization system to address potential issues of asymmetry of information within Polymarket. By displaying the concentration of capital held by individual addresses and surfacing expressed wager intent – the rationale behind predictions – the system intends to improve participant understanding of market dynamics. This increased transparency is hypothesized to mitigate the risk of manipulative trading practices, as concentrated positions and stated motivations become visible to all users. The design aims to empower participants to assess the credibility of predictions and make more informed decisions, ultimately contributing to a fairer and more efficient prediction market.

Toward a Future of Decentralized Integrity

Efforts to mitigate Prediction Laundering are not simply about correcting isolated instances of manipulation, but rather represent a crucial step toward realizing truly decentralized oracles and resilient forecasting systems. Current prediction markets often rely on centralized data feeds, creating single points of failure and vulnerability to biased or deliberately misleading information. By identifying and neutralizing the techniques used to artificially inflate or deflate predictions, this work aims to build systems where forecasts emerge from a genuinely distributed network of informed participants. This fosters greater accuracy and reliability, moving beyond predictions shaped by concentrated influence towards outcomes reflecting the collective knowledge of a broader, more representative group – enabling more trustworthy and robust insights across a variety of applications.

By equipping individuals with the tools to dissect information and identify potential manipulation, these interventions aim to fundamentally shift the dynamics of forecasting and prediction. Rather than passively accepting aggregated results, users gain agency in assessing the provenance and potential biases within data streams. This critical engagement not only bolsters resistance to deceptive practices, such as prediction laundering, but also actively improves the accuracy and dependability of collective forecasts. When individuals are empowered to challenge assumptions and scrutinize methodologies, the resulting predictions become more robust, reflecting a genuinely informed consensus rather than a manufactured one. This fosters a cycle of continuous improvement, building greater trust in decentralized systems and unlocking the full potential of collective intelligence.

The pursuit of decentralized platforms extends beyond mere technological innovation; it envisions a future where collective intelligence is genuinely harnessed and accurately reflected. This requires systems capable of aggregating knowledge from diverse sources, mitigating biases inherent in centralized control, and fostering an environment where informed participation is incentivized. Such platforms promise not only more reliable predictions and insights, but also a more equitable distribution of knowledge and power, empowering individuals and communities to navigate complex challenges with greater agency. Ultimately, this work seeks to build digital ecosystems that move beyond simply connecting people, towards genuinely amplifying collective wisdom and creating a more informed and just world for all.

The study illuminates a process of epistemic laundering, where subjective human beliefs are transformed into seemingly objective probabilities within Polymarket. This echoes Ada Lovelace’s observation that “The Analytical Engine has no pretensions whatever to originate anything.” The engine, like Polymarket, merely processes inputs – in this case, human motivations and financial incentives – and presents an output that appears neutral. However, the article demonstrates this ‘objectivity’ is illusory, obscuring the underlying power dynamics that shape those initial inputs. The system doesn’t eliminate bias; it repackages it, creating a veneer of transparency that masks accountability gaps. The work effectively argues that a system needing constant auditing has already failed to achieve genuine neutrality.

Future Forecasts

The work presented clarifies a simple, yet persistent, problem: the reduction of messy human cognition to neat numerical outputs does not resolve complexity; it merely displaces it. Prediction markets, built on blockchain infrastructure, offer an illusion of neutrality. This is not a technical flaw, but an inherent property of any system attempting to aggregate subjective probabilities. The core question remains: what is lost in translation? Future research must move beyond documenting that laundering occurs, to mapping how specific power dynamics are obscured within the probabilistic signal.

A sociotechnical audit, rigorously applied, is not a solution, but a necessary diagnostic. It reveals the fingerprints on the machine, not the machine’s inherent goodness. The field requires novel methodologies for tracing the origins of information and capital flowing into these markets. Focusing solely on forecast accuracy is a category error. The relevant metric is not ‘truth,’ but the distribution of influence: who benefits from the illusion of objectivity?

Ultimately, the goal is not to ‘fix’ prediction markets, but to understand them. Clarity is the minimum viable kindness. Recognizing the inherent limitations of such systems, namely the inescapable presence of epistemic laundering, is a prerequisite for responsible design and deployment. The pursuit of perfect prediction is, predictably, a perfect waste of time.

Original article: https://arxiv.org/pdf/2602.05181.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-06 15:24