Author: Denis Avetisyan

New research reveals that public conversations surrounding advanced artificial intelligence often prioritize engagement over rigorous verification, potentially leading to the premature acceptance of claims.

Delayed verification in high-visibility online discourse can shape pre-interaction trust in agentic AI systems and reinforce unverified narratives.

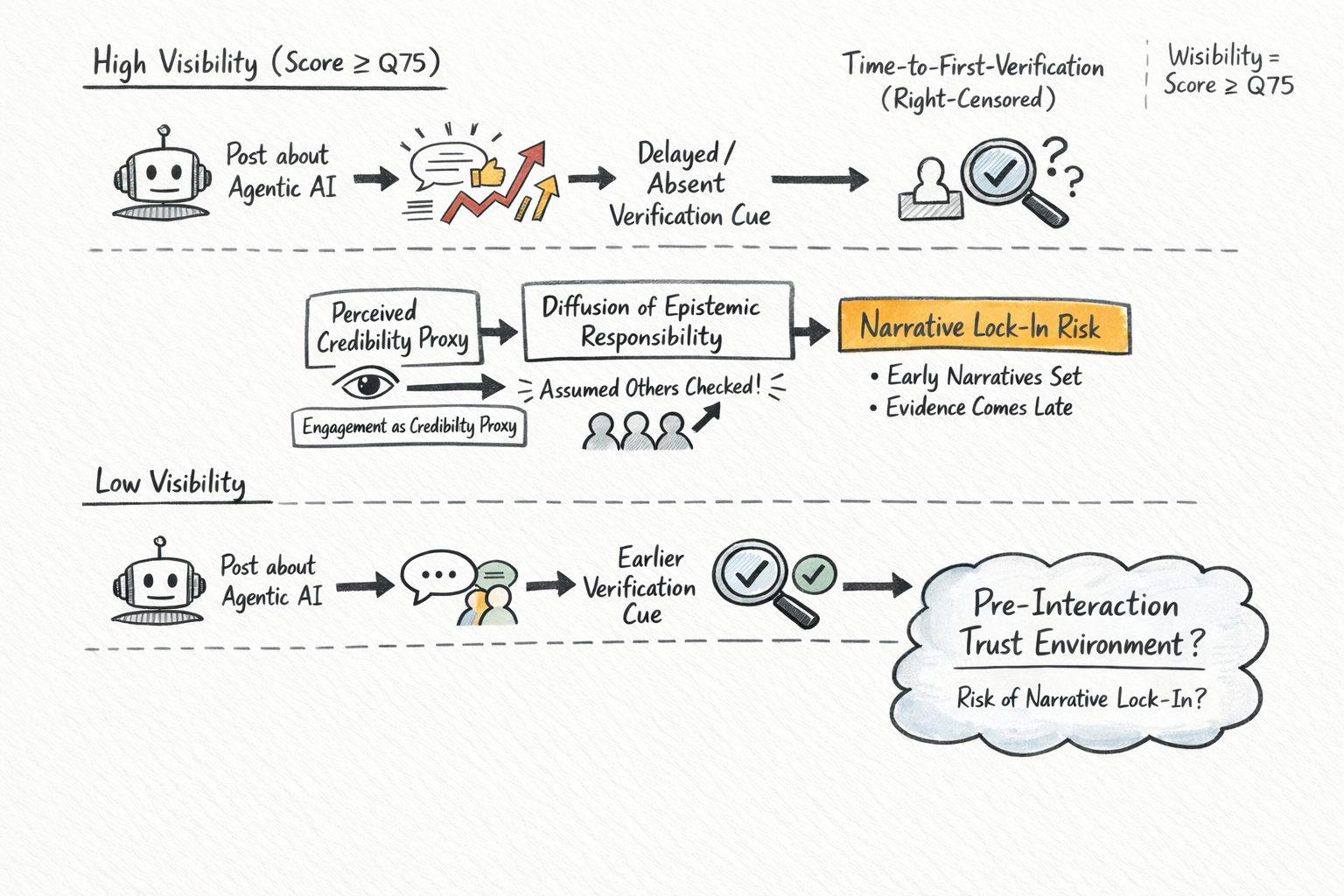

Public perception of emerging technologies often solidifies before rigorous evaluation can occur. The research detailed in ‘When Visibility Outpaces Verification: Delayed Verification and Narrative Lock-in in Agentic AI Discourse’ investigates how online discussions of agentic AI systems (autonomous entities capable of independent action) are shaped by the timing of claim verification. Analyzing Reddit communities, the authors find that high-visibility threads exhibit significantly delayed or absent verification cues, creating a window in which unverified claims can become entrenched. Does this “credibility-by-visibility” effect call for design interventions that foster more evidence-driven engagement with potentially transformative AI technologies?

The Shifting Sands of Discourse: Visibility and the Erosion of Critical Assessment

The conversation surrounding agentic AI systems is undergoing a significant shift, increasingly taking place within the confines of online platforms. This dependence on platforms like social media and online forums creates an environment where immediate accessibility often overshadows careful consideration. While offering unprecedented opportunities for broad dissemination of information, this shift bypasses traditional gatekeepers of knowledge – peer review, expert analysis, and fact-checking – leading to a potential decline in critical evaluation. The ease with which claims, both substantiated and unsubstantiated, can gain traction online fosters a reactive, rather than reflective, discourse, potentially shaping public perception before thorough scrutiny can occur. This dynamic poses a challenge to responsible innovation and informed public understanding of these rapidly evolving technologies.

The mechanics of online platforms, designed to elevate engaging content, can paradoxically diminish critical assessment of information regarding agentic AI. Algorithms prioritizing visibility – measured by likes, shares, and comments – inadvertently give prominence to claims irrespective of their factual basis. This creates an echo chamber effect, where frequently repeated assertions are mistaken for established truths, fostering a false sense of consensus. Consequently, discussions gain traction not through rigorous examination, but through sheer volume of exposure, potentially misleading the public and hindering informed debate about the capabilities and risks of increasingly autonomous systems. The intended function of highlighting popular content, therefore, can become a vehicle for unverified information to circulate widely, overshadowing nuanced perspectives and evidence-based analysis.

The increasing prominence of agentic AI in online discussions cultivates a reliance on social proof, where the popularity of a claim often overshadows its factual basis. This creates a susceptibility to misinformation, as individuals tend to infer credibility from the volume of engagement rather than from evidence. The study's quantitative analysis finds that higher visibility of agentic AI discussions is associated with both delayed and, in many cases, absent verification of the claims made within those discussions. This suggests that the mechanics of platform visibility, while intended to surface relevant content, can hinder the critical evaluation needed to distinguish substantiated ideas from unsubstantiated ones, potentially leading to widespread acceptance of inaccurate or misleading information about increasingly powerful technologies.

The Rhythm of Scrutiny: Timing as a Measure of Trust

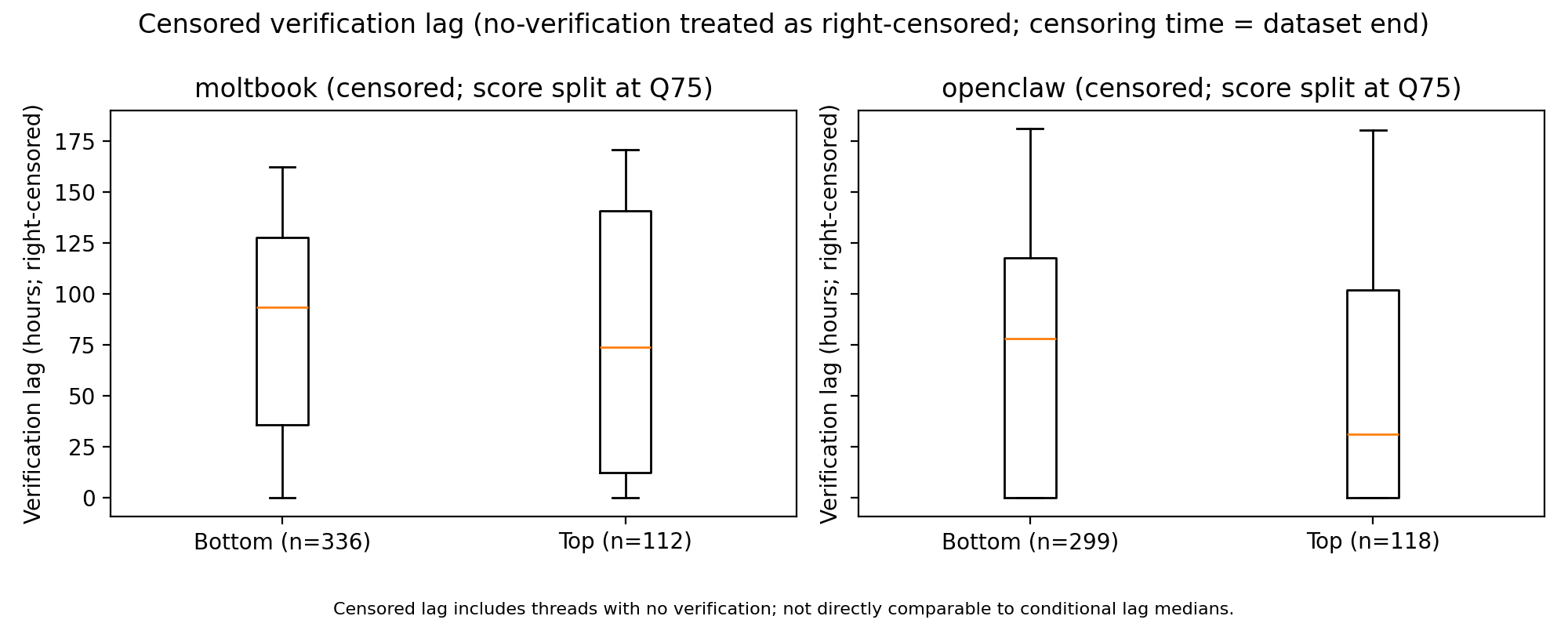

The timing of requests for evidence or substantiation – termed Verification Timing – plays a crucial role in how trust is established and calibrated within online discussions. Analysis of Reddit communities r/moltbook and r/openclaw revealed that verification cues appeared significantly earlier in low-visibility threads. Specifically, the median time to the first verification request was 0.34 hours in low-visibility r/moltbook threads, compared to 4.21 hours in high-visibility threads (p-value = 4.00 x 10^-4). This indicates a delayed request for evidence in more visible discussions, suggesting that increased visibility can prematurely foster acceptance of claims without initial scrutiny, impacting the calibration of trust amongst participants.

Analysis of the r/moltbook and r/openclaw subreddits also shows an association between a thread’s visibility and whether verification is requested at all. Low-visibility threads were significantly more likely than high-visibility threads to contain any verification cue. The effect was statistically significant in both communities, with an odds ratio of 2.57 (p-value = 2.18 x 10^-4) for r/moltbook and 2.18 (p-value = 3.96 x 10^-4) for r/openclaw, suggesting that claims receiving greater attention are less likely to be challenged or asked for substantiation by other users.
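To make the odds ratio concrete, here is a minimal sketch of how such a figure is computed from a 2x2 table of thread counts. The counts below are hypothetical and chosen only for illustration; they are not the paper's data, and the function name `odds_ratio` is likewise an assumption.

```python
def odds_ratio(low_with_cue, low_without_cue, high_with_cue, high_without_cue):
    """Odds of containing any verification cue: low- vs high-visibility threads.

    Uses the cross-product form (a * d) / (b * c) of the 2x2 odds ratio.
    """
    denominator = low_without_cue * high_with_cue
    if denominator == 0:
        raise ValueError("odds ratio is undefined when a denominator cell is zero")
    return (low_with_cue * high_without_cue) / denominator


# Hypothetical counts (NOT from the paper): threads with / without a cue.
example = odds_ratio(low_with_cue=60, low_without_cue=40,
                     high_with_cue=30, high_without_cue=70)
print(f"odds ratio (low vs high visibility): {example:.2f}")
```

An odds ratio above 1 means the odds of seeing at least one verification cue are higher in low-visibility threads, which is the direction reported for both communities.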

Lexical analysis was utilized to quantitatively measure verification timing within online discussions. This method involved identifying specific linguistic cues – phrases and terms indicative of requests for evidence, sources, or substantiation – across the Reddit communities r/moltbook and r/openclaw. By automatically detecting these cues, we were able to determine the time elapsed between an initial claim and the subsequent appearance of a verification attempt, enabling a large-scale assessment of how quickly information is challenged or corroborated within these online environments. This approach moved beyond manual content analysis, allowing for a more rigorous and scalable measurement of verification behavior.
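The cue-detection step can be sketched as follows. This is an illustrative approximation, not the authors' pipeline: the cue lexicon in `CUE_PATTERN`, the function name `hours_to_first_cue`, and the example thread are all assumptions, since the article does not list the actual phrases or data format used.

```python
import re
from datetime import datetime

# Hypothetical cue lexicon; the paper's actual term list is not given here.
CUE_PATTERN = re.compile(
    r"\b(source|sources|evidence|proof|citation|verify|verified|verification)\b",
    re.IGNORECASE,
)


def hours_to_first_cue(post_time, comments):
    """Return hours from the post to the first comment containing a cue.

    comments is an iterable of (datetime, text) pairs. Returns None when no
    comment matches, i.e. no verification cue was observed in the thread.
    """
    cue_times = [
        when for when, text in comments
        if when >= post_time and CUE_PATTERN.search(text)
    ]
    if not cue_times:
        return None
    return (min(cue_times) - post_time).total_seconds() / 3600.0


# Made-up thread for illustration.
posted = datetime(2026, 1, 1, 12, 0)
thread = [
    (datetime(2026, 1, 1, 12, 10), "This is amazing!"),
    (datetime(2026, 1, 1, 12, 30), "Do you have a source for that?"),
    (datetime(2026, 1, 1, 13, 0), "Any evidence it works outside the demo?"),
]
print(hours_to_first_cue(posted, thread))
```

A keyword lexicon of this kind scales easily but will miss paraphrased requests and can flag incidental uses of words like "source", so any real lexicon would need validation against hand-labeled comments.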

In r/moltbook, the delay was statistically significant under a permutation test: the median time to the first verification request was 0.34 hours for low-visibility threads, compared to 4.21 hours for high-visibility threads (permutation test p-value = 4.00 x 10^-4). Claims receiving greater attention were therefore subjected to verification attempts considerably later in the discussion, which points to an association between visibility and how promptly information is scrutinized.
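For readers unfamiliar with permutation tests, the sketch below shows one common form: a two-sided test on the difference in group medians. The choice of test statistic, the number of permutations, and the sample values are assumptions made for illustration; the article does not specify the exact procedure the authors used.

```python
import random
from statistics import median


def permutation_test_median(low, high, n_permutations=10_000, seed=0):
    """Two-sided permutation test on median(high) - median(low).

    Returns (observed_difference, p_value). Group labels are shuffled
    repeatedly to see how often a difference at least as large as the
    observed one arises by chance alone.
    """
    rng = random.Random(seed)
    observed = median(high) - median(low)
    pooled = list(low) + list(high)
    n_low = len(low)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = median(pooled[n_low:]) - median(pooled[:n_low])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Made-up hours-to-first-cue samples (NOT the paper's data).
low_visibility = [0.1, 0.2, 0.3, 0.3, 0.4, 0.4, 0.5, 0.6]
high_visibility = [3.0, 3.5, 3.8, 4.0, 4.2, 4.5, 5.0, 6.0]
diff, p_value = permutation_test_median(low_visibility, high_visibility)
print(f"difference in medians: {diff:.2f} hours, p = {p_value:.4f}")
```

Because the test makes no distributional assumptions, it suits skewed timing data such as reply delays, where a few very late responses would distort a mean-based comparison.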

Addressing the Shadows: Accounting for Incomplete Observation

A significant methodological difficulty in quantifying trust dynamics is that verification is only observable when it is expressed. Participants may privately seek confirmation of claims made by others, but if these attempts are not voiced, for example by questioning a claim or asking for corroborating evidence, they remain unobserved. For a thread with no visible cue, the data show only that no verification request had appeared by the end of the observation period, which is the situation survival analysis calls ‘right-censoring’. Analyses based solely on observed cues therefore risk systematically underestimating verification behavior and yielding biased results, because the unobserved portion of the verification process is missing from the picture of how trust is calibrated.

Right-censoring is a concept from survival analysis that describes incompletely observed events: the event of interest, here the first request for verification in a discussion, had not been observed when observation ended. Analyses that assume complete data, for instance by keeping only threads in which a cue was observed, can produce biased results. Handling the data as right-censored instead treats a thread without an observed cue as one whose first verification, if it occurs at all, falls after the last observed point, with the exact timing unknown. Modeling these censored observations gives a more accurate estimate of the Verification Timing distribution and makes the conclusions drawn from the research more robust.
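One standard way to handle right-censored durations is the Kaplan-Meier estimator. The sketch below is a plain-Python illustration under the assumption that a thread with no observed cue is censored at the end of its observation window; the article does not say which estimator the authors used, and the sample data and function names are made up.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier survival curve as a list of (time, survival) pairs.

    durations: hours until the first cue, or until observation ended.
    observed:  True if a cue was seen, False if the thread is censored.
    Survival here means "no verification cue has appeared yet".
    """
    event_times = sorted({t for t, seen in zip(durations, observed) if seen})
    survival = 1.0
    curve = []
    for t in event_times:
        at_risk = sum(1 for d in durations if d >= t)
        events = sum(1 for d, seen in zip(durations, observed) if seen and d == t)
        survival *= 1.0 - events / at_risk
        curve.append((t, survival))
    return curve


def km_median(curve):
    """First time at which estimated survival drops to 0.5 or below."""
    for t, survival in curve:
        if survival <= 0.5:
            return t
    return None  # median not reached within the observation window


# Made-up data: five threads with a cue, two censored at 24 hours.
hours = [0.2, 0.5, 1.0, 4.0, 6.0, 24.0, 24.0]
cue_seen = [True, True, True, True, True, False, False]
print("censoring-aware median:", km_median(kaplan_meier(hours, cue_seen)))
```

In this toy data the median over only the five observed cues is 1.0 hours, while the censoring-aware estimate is 4.0 hours, which shows how dropping threads without a cue can understate the delay.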

The implementation of Right-Censoring enabled a more precise quantification of Verification Timing by accounting for instances where participants implicitly sought, but did not overtly express, verification cues. Traditional analytical methods, without this adjustment, could underestimate the duration of verification processes, potentially leading to skewed results. By statistically modeling these unobserved attempts, the methodology reduces bias associated with incomplete data, providing a more accurate assessment of how quickly individuals assess information credibility. This refined measurement of Verification Timing directly improves the reliability of subsequent analyses examining the relationship between factors like visibility and the calibration of trust.

The implementation of Right-Censoring methodology directly enhances the reliability of findings concerning the correlation between visibility and trust calibration. By accounting for unobserved verification attempts – instances where participants likely sought, but did not overtly express, verification cues – this approach reduces potential biases associated with incomplete data. This correction allows for a more accurate assessment of Verification Timing, which is a key variable in understanding how individuals adjust their trust based on observable characteristics. Consequently, conclusions drawn regarding the impact of visibility on trust calibration are more robust and less susceptible to errors stemming from data limitations.

The Persistence of Belief: How Timing Shapes Acceptance

The enduring power of misinformation, even when demonstrably false, is significantly linked to when people bother to check the facts. Research indicates a phenomenon termed the Continued Influence Effect, where initial exposure to inaccurate information creates a lasting impression that resists correction. This persistence isn’t simply about stubborn belief; it’s fueled by a cognitive shortcut called Credibility-by-Visibility – the tendency to initially perceive information as truthful simply because it’s widely circulated. Consequently, delayed verification – putting off fact-checking until later – allows the initial misinformation to take root, becoming harder to dislodge even after corrections are presented. This suggests that the timing of evidence requests plays a crucial role in shaping beliefs, and that proactive verification is far more effective than reactive debunking in combating the spread of false narratives.

Research indicates a significant correlation between the timing of verification and the efficacy of corrections regarding pre-existing beliefs. When individuals encounter claims, a delay in seeking or receiving verification allows those claims to become more deeply entrenched, making subsequent corrections less impactful. This phenomenon suggests that initial exposure, even to inaccurate information, creates a cognitive framework that resists alteration; the longer a claim persists unchallenged, the more difficult it becomes to dislodge. The study demonstrates that immediate scrutiny and evidence-based validation are crucial for preventing the solidification of false beliefs, while delayed verification effectively reinforces misinformation, hindering the ability of corrective information to successfully update an individual’s understanding.

Research indicates that strategically implemented “design friction” – features requiring increased cognitive effort from users – can serve as a powerful tool against the persistence of misinformation. These elements, such as prompts asking for evidence before sharing or requiring users to confirm information sources, don’t aim to obstruct access, but rather to encourage a more deliberate and reflective evaluation of content. By briefly interrupting the automatic flow of information consumption, these frictions prompt individuals to question claims and seek corroborating evidence before beliefs are firmly established, thus reducing the impact of false narratives. The effect isn’t about making information harder to find, but about shifting the cognitive process from passive acceptance to active scrutiny, potentially mitigating the “continued influence effect” where corrections struggle to overcome initially encountered misinformation.

Effective strategies to counter misinformation in online spaces hinge on a nuanced understanding of how correction timing interacts with the timing of initial verification efforts. Research indicates that delayed verification – allowing misinformation to circulate unchecked for a period – exacerbates the continued influence effect, rendering subsequent corrections less impactful. This interplay suggests that interventions focused solely on correcting false claims after they’ve gained traction may be insufficient; proactive measures that encourage earlier scrutiny and evidence-seeking are essential. By fostering environments where claims are immediately subject to verification, and by minimizing the window of opportunity for misinformation to establish itself, it becomes possible to significantly reduce its persistence and limit its influence on public discourse. This highlights the need for platform designs and informational interventions that prioritize immediate fact-checking and promote cognitive reflection before beliefs become firmly entrenched.

The study of agentic AI discourse reveals a curious dynamic: visibility often precedes rigorous verification. This phenomenon isn’t merely a technological failing, but a demonstration of how systems evolve, prioritizing engagement over epistemic soundness. As Robert Tarjan aptly stated, “Algorithms must reflect trade-offs.” This holds true here – platforms implicitly trade thorough verification for immediate user interaction, a trade-off that shapes narratives before evidence can fully accumulate. The research suggests that this ‘narrative lock-in’ isn’t a bug, but a feature of systems prioritizing speed and social proof, a testament to how even the most advanced technologies are susceptible to the forces of time and the pressures of maintaining momentum.

The Horizon Recedes

The study of delayed verification in agentic AI discourse reveals a pattern familiar to any system approaching entropy: visibility, once a boon, becomes a constraint. Engagement accrues, not as a measure of understanding, but as a testament to the system’s momentum. The research suggests that platforms, in prioritizing immediate interaction, inadvertently cultivate a form of epistemic inertia. This isn’t necessarily malicious; it’s simply the predictable outcome of optimizing for a single metric – attention – while neglecting the slower, more laborious process of calibration. Technical debt, in this context, isn’t a bug to be fixed, but the system’s memory – a record of assumptions made in the service of expediency.

Future work must address the question of how to reintroduce friction. Not as a barrier to access, but as a necessary component of discernment. The current trajectory suggests a growing reliance on social proof as a proxy for genuine assessment, a shortcut that ultimately diminishes the capacity for critical evaluation. Understanding the conditions under which this substitution occurs – and, crucially, the long-term consequences for trust – will require a shift in focus from merely detecting misinformation to actively fostering epistemic resilience.

Any simplification, even in the pursuit of accessibility, carries a future cost. The challenge lies not in eliminating these costs, but in acknowledging them and designing systems that can accommodate – and even learn from – the inevitable accumulation of uncertainty. The horizon of verification will always recede; the art lies in building mechanisms to navigate the distance with grace, rather than attempting to close it entirely.

Original article: https://arxiv.org/pdf/2602.11412.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-15 17:51