Author: Denis Avetisyan

A novel method overcomes limitations in forecasting signals obscured by complex, scale-free noise, opening doors for more accurate predictions in diverse applications.

This review introduces a practical algorithm leveraging Toeplitz operators and causal estimation to forecast signals with non-rational spectra, improving upon traditional Wiener filter approaches.

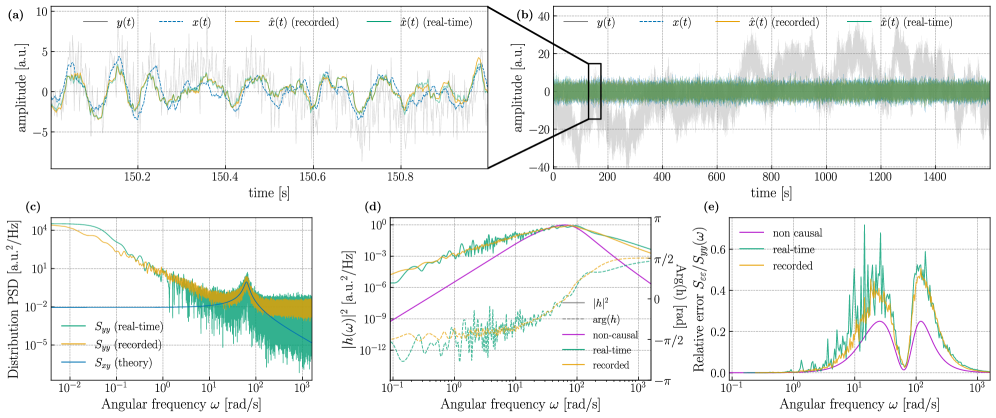

Extracting meaningful signals from noise is a fundamental challenge across scientific disciplines, yet conventional forecasting techniques rely on assumptions about noise spectra that rarely hold in real-world scenarios. This work, ‘Forecasting in the presence of scale-free noise’, introduces a method for optimal forecasting when noise exhibits scale-free, or non-rational, power spectra, a characteristic prevalent in diverse systems. By recasting the problem through the lens of Toeplitz operators and employing a duality with control theory, the authors establish performance guarantees and a practical algorithm for causal estimation. Could this approach unlock improved predictive capabilities in fields ranging from neuroscience and finance to fluid dynamics and quantum measurement?

The Essence of Spectral Estimation

Effective signal analysis frequently hinges on identifying the constituent frequencies within a complex waveform; however, conventional techniques like Fourier analysis encounter limitations when confronted with real-world data. These methods often assume signals are stationary – meaning their frequency content doesn’t change over time – a condition rarely met in practical applications such as speech recognition or seismic monitoring. Furthermore, the presence of noise significantly degrades the accuracy of these estimations, obscuring the true underlying frequencies and introducing spurious artifacts. Consequently, researchers have developed more sophisticated approaches, like time-frequency analysis, to address these challenges by providing a means to track how the frequency content of a signal evolves and to mitigate the impact of unwanted noise, ultimately enabling more robust and reliable signal characterization.

Spectral density, a cornerstone of signal processing, characterizes how a signal’s power is distributed across different frequencies – essentially, a fingerprint of the signal’s frequency components. Rather than simply identifying dominant frequencies, it quantifies the amount of signal energy at each frequency, providing a comprehensive view of the signal’s composition. This is achieved mathematically by examining the Fourier transform of the signal, and calculating the power associated with each frequency bin – often represented as S(f), where f denotes frequency. Crucially, this framework extends beyond static signals; time-varying spectral densities can track how frequency content changes over time. The resulting spectral information isn’t merely descriptive, but serves as the foundation for advanced filtering techniques – allowing engineers to selectively enhance or suppress specific frequency bands, remove noise, and ultimately isolate the desired signal with remarkable precision.
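As a concrete illustration of "power per frequency bin", the following is a minimal sketch of a periodogram estimate of S(f) using NumPy. The function name `periodogram`, the sampling rate, and the 50 Hz test tone are assumptions chosen for illustration; they do not come from the paper.

```python
import numpy as np

def periodogram(x, fs=1.0):
    """One-sided periodogram estimate of the power spectral density S(f)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    spectrum = np.fft.rfft(x)
    # Power per unit frequency for each non-negative frequency bin.
    psd = np.abs(spectrum) ** 2 / (fs * n)
    # Fold the negative-frequency power into the positive bins.
    # The DC bin (and the Nyquist bin when n is even) has no mirror image.
    if n % 2 == 0:
        psd[1:-1] *= 2.0
    else:
        psd[1:] *= 2.0
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, psd

# Illustrative data: a 50 Hz tone buried in white noise (hypothetical values).
fs = 1000.0
t = np.arange(2000) / fs
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 50.0 * t) + 0.5 * rng.standard_normal(t.size)

freqs, psd = periodogram(x, fs)
peak_freq = freqs[np.argmax(psd)]
print(f"dominant frequency: {peak_freq} Hz")
```

With this normalization, summing the estimate over frequency and multiplying by the bin width fs/n recovers the mean power of the samples, which is a quick sanity check on any spectral estimate.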

The Wiener Filter: An Optimal Solution Defined

The Kolmogorov-Wiener filter is the optimal linear filter in the sense that it minimizes the mean-squared error (MSE) between the estimated signal and the desired signal. Requiring the estimation error to be uncorrelated with the observed data yields the Wiener-Hopf equation, a convolution-type integral equation whose solution is the impulse response of the optimal filter. The derivation assumes that the signal and the noise are stationary, so that both can be described by power spectral densities; the simple formula below additionally assumes that they are uncorrelated with each other. When no causality constraint is imposed, the optimal transfer function H(f) is the ratio of the signal power spectrum to the sum of the signal and noise power spectra: H(f) = \frac{R_{ss}(f)}{R_{ss}(f) + R_{nn}(f)}, where R_{ss}(f) is the power spectrum of the signal and R_{nn}(f) is the power spectrum of the noise. Consequently, the filter’s performance is directly tied to accurate knowledge of these spectral characteristics.
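The frequency response H(f) = R_{ss}(f) / (R_{ss}(f) + R_{nn}(f)) can be evaluated directly once both spectra are known. The sketch below assumes a hypothetical Lorentzian signal spectrum and a hypothetical 1/f noise spectrum; the shapes and constants are illustrative only and are not taken from the paper.

```python
import numpy as np

def wiener_gain(signal_psd, noise_psd):
    """Non-causal Wiener frequency response R_ss / (R_ss + R_nn)."""
    signal_psd = np.asarray(signal_psd, dtype=float)
    noise_psd = np.asarray(noise_psd, dtype=float)
    return signal_psd / (signal_psd + noise_psd)

# Frequency grid that avoids f = 0, where a 1/f spectrum diverges.
freqs = np.linspace(0.01, 0.5, 50)

# Hypothetical spectra, chosen only for illustration.
signal_psd = 1.0 / (1.0 + (freqs / 0.05) ** 2)  # Lorentzian-shaped signal
noise_psd = 0.1 / freqs                         # scale-free 1/f noise

gain = wiener_gain(signal_psd, noise_psd)
print(gain[:5])
```

The gain is close to 1 where the signal dominates and close to 0 where the noise dominates, so it always lies strictly between the two for positive spectra.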

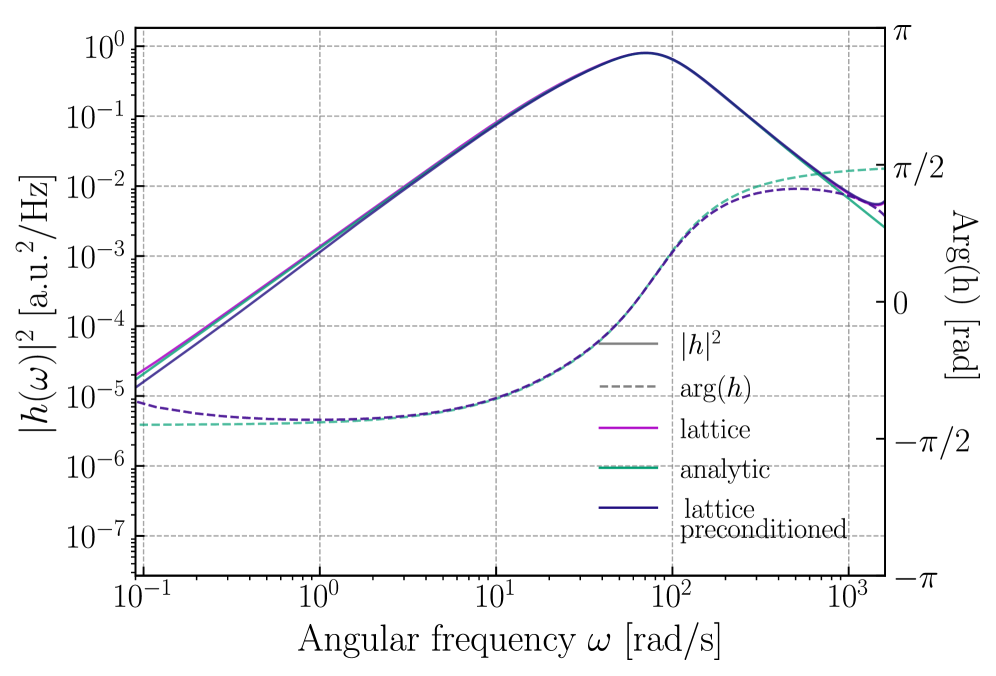

Wiener filters are frequently implemented using factorization techniques, notably Wiener-Hopf (spectral) factorization, which splits the power spectrum of the observed data into a causal, stably invertible factor and its anti-causal conjugate; the causal factor is what makes a realizable and stable filter possible. Furthermore, formulating the filter within a State-Space Model offers computational advantages, particularly for real-time applications and high-dimensional data. A state-space representation turns the filter into a set of linear recursions that standard numerical algorithms solve efficiently, enabling practical implementations of the theoretically optimal Wiener filter. Both routes are most convenient when the transfer function is rational, that is, of the general form H(z) = N(z) / D(z) with polynomial numerator N(z) and denominator D(z).
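One standard numerical route to spectral factorization is the cepstral (Kolmogorov) method: take the logarithm of the spectrum, keep the causal half of its Fourier coefficients, and exponentiate. The sketch below is a generic illustration of that technique, not the algorithm of the paper. The function name `spectral_factor` and the test spectrum are assumptions.

```python
import numpy as np

def spectral_factor(S):
    """Minimum-phase factor L with |L|^2 = S on a uniform frequency grid.

    S: strictly positive spectrum sampled at omega_k = 2*pi*k/N, k = 0..N-1,
       with N even and S[k] == S[N-k], so that its cepstrum is real and even.
    """
    S = np.asarray(S, dtype=float)
    N = S.size
    # Cepstrum: Fourier coefficients of log S.
    cepstrum = np.fft.ifft(np.log(S)).real
    # Keep the causal half; the lag-0 and lag-N/2 terms are shared equally
    # between the causal and anti-causal factors.
    causal = np.zeros(N)
    causal[0] = 0.5 * cepstrum[0]
    causal[1:N // 2] = cepstrum[1:N // 2]
    causal[N // 2] = 0.5 * cepstrum[N // 2]
    return np.exp(np.fft.fft(causal))

# Test spectrum with a known factor: 1.25 + cos(w) = |1 + 0.5 e^{-iw}|^2.
N = 256
omega = 2 * np.pi * np.arange(N) / N
S = 1.25 + np.cos(omega)

L = spectral_factor(S)
impulse = np.fft.ifft(L).real  # impulse response of the causal factor
print(impulse[:4])
```

Because the method works on samples of the spectrum rather than on polynomial coefficients, it does not require the spectrum to be rational. It does require the spectrum to be strictly positive so that the logarithm exists.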

The design of an effective Wiener filter fundamentally relies on characterizing the power spectra of both the desired signal and the noise corrupting it. These power spectra, typically expressed as functions of frequency, detail the signal and noise energy distribution across the frequency domain. A rational power spectrum – one expressible as a ratio of polynomials – allows for a simplified filter design process, particularly when utilizing techniques like Wiener-Hopf factorization. Accurate estimation of these spectra, often achieved through methods like periodograms or autoregressive modeling, directly impacts the filter’s ability to minimize the mean-squared error; errors in spectral estimation propagate directly into filter coefficient inaccuracies and reduced performance. The ratio of the signal power spectrum to the sum of the signal and noise power spectra forms the basis of the filter’s frequency response H(f) = \frac{R_{ss}(f)}{R_{ss}(f) + R_{nn}(f)}, with R_{ss}(f) and R_{nn}(f) the signal and noise power spectra, highlighting the importance of precise spectral characterization.

Causality and the Refinement of Filter Design

The standard Wiener filter, while optimal in the least-squares sense, is inherently non-causal, meaning its output depends on future inputs. This is impractical for real-time applications and impossible for forecasting, where future samples are by definition unavailable. The Causal Wiener Filter addresses this limitation by explicitly enforcing causality; its impulse response h[n] is constrained such that h[n] = 0 for n < 0. This ensures the filter’s output at any given time n depends solely on the input signal values at time n and all previous times. Enforcing causality changes the solution: instead of a simple ratio of spectra, the filter is typically obtained by spectrally factorizing the spectrum of the observed data and keeping only the causal part of the resulting expression, which preserves stability and optimality under the causality constraint.
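In discrete time, a finite-length causal filter can be obtained by solving the Wiener-Hopf normal equations, whose matrix is Toeplitz. The following is a minimal sketch under stated assumptions: the signal is a hypothetical AR(1)-type process with autocorrelation rho^|k|, the noise is white and uncorrelated with the signal, and the function name `fir_wiener` is illustrative. It is a textbook construction, not the paper's algorithm.

```python
import numpy as np

def fir_wiener(r_yy, r_sy):
    """Causal FIR Wiener filter taps from correlation sequences.

    r_yy[k]: autocorrelation of the observation y at lags k = 0..p-1
    r_sy[k]: cross-correlation E[s[n] * y[n-k]] at lags k = 0..p-1
    Solves the normal equations sum_j h[j] * r_yy[|k-j|] = r_sy[k].
    """
    r_yy = np.asarray(r_yy, dtype=float)
    r_sy = np.asarray(r_sy, dtype=float)
    p = r_yy.size
    # Symmetric Toeplitz matrix: entry (i, j) depends only on |i - j|.
    lags = np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
    T = r_yy[lags]
    return np.linalg.solve(T, r_sy)

# Hypothetical model: y = s + v, with r_ss[k] = rho^|k| and white noise v.
rho, noise_var, p = 0.9, 0.5, 8
k = np.arange(p)
r_ss = rho ** k
r_yy = r_ss.copy()
r_yy[0] += noise_var   # white noise only contributes at lag 0
r_sy = r_ss            # noise is uncorrelated with the signal

h = fir_wiener(r_yy, r_sy)
print(h)  # h[j] weights y[n-j]; only present and past samples are used
```

Because the taps are indexed by non-negative lags only, the causality constraint h[n] = 0 for n < 0 is built into the parameterization rather than imposed afterwards.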

Causality also ties a filter’s magnitude and phase responses together through the Hilbert Transform: for a causal, minimum-phase filter the phase is determined by the Hilbert Transform of the log-magnitude, so a causal design can be recovered from a magnitude specification alone. Furthermore, the inherent structure of many causal filter designs lends itself to representation using Toeplitz operators, whose matrices have the same element along each descending diagonal from top-left to bottom-right. This property enables significant computational efficiencies: multiplying an n-by-n Toeplitz matrix by a vector is a convolution, which the Fast Fourier Transform (FFT) evaluates in O(n log n) operations instead of the O(n^2) of a direct matrix-vector product.
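The FFT speed-up for Toeplitz matrix-vector products relies on embedding the Toeplitz matrix in a larger circulant matrix, which the FFT diagonalizes. The sketch below shows that standard construction; the function name `toeplitz_matvec` and the random test data are assumptions for illustration.

```python
import numpy as np

def toeplitz_matvec(first_col, first_row, x):
    """Multiply an n-by-n Toeplitz matrix by x in O(n log n) via the FFT.

    first_col: first column of the matrix (length n)
    first_row: first row of the matrix (length n, first_row[0] == first_col[0])
    """
    c = np.asarray(first_col, dtype=float)
    r = np.asarray(first_row, dtype=float)
    x = np.asarray(x, dtype=float)
    n = c.size
    # First column of a 2n-by-2n circulant matrix whose top-left block
    # is the Toeplitz matrix.
    v = np.concatenate([c, [0.0], r[:0:-1]])
    x_padded = np.concatenate([x, np.zeros(n)])
    # Circulant multiplication is a circular convolution.
    y = np.fft.ifft(np.fft.fft(v) * np.fft.fft(x_padded))
    return y[:n].real

rng = np.random.default_rng(1)
n = 6
c = rng.standard_normal(n)
r = rng.standard_normal(n)
r[0] = c[0]  # the diagonal entry is shared by the first row and column
x = rng.standard_normal(n)

y_fast = toeplitz_matvec(c, r, x)
print(y_fast)
```

The result agrees with the dense product to floating-point precision, while only the first row and column of the matrix are ever stored.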

The variational formulation offers a robust method for designing causal Wiener filters by framing the filter design as an optimization problem subject to causality constraints. This approach yields the best filter attainable under those constraints and, crucially, comes with a guarantee that a truncated filter implementation converges to it. Specifically, the truncation error decays as O(n^{1-m-\alpha}), where ‘n’ is the filter order (the number of retained coefficients) and ‘m’ and ‘α’ are regularity exponents that measure the smoothness of the underlying spectrum; the bound shrinks with n only when m + α > 1. This quantifiable convergence rate allows for predictable performance scaling as the filter complexity increases, facilitating practical implementation and analysis.
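To get a feel for what a rate of O(n^(1-m-α)) means in practice, the following sketch evaluates the bound for a few filter orders. The values of m, α, and the constant A are hypothetical and are used only to illustrate the scaling; the source does not state them.

```python
import math

def truncation_bound(n, m, alpha, A=1.0):
    """Evaluate A * n**(1 - m - alpha); A is an unspecified constant."""
    return A * n ** (1 - m - alpha)

# Hypothetical exponents chosen for illustration: m = 2, alpha = 0.5.
m, alpha = 2, 0.5
orders = [100, 200, 400, 800]
bounds = [truncation_bound(n, m, alpha) for n in orders]

for n, b in zip(orders, bounds):
    print(f"n = {n:4d}  bound = {b:.3e}")

# Doubling n multiplies the bound by 2**(1 - m - alpha), whatever A is.
ratio = bounds[1] / bounds[0]
print(f"ratio per doubling: {ratio:.4f}")
```

For these illustrative exponents each doubling of the filter order shrinks the bound by a factor of 2^(-1.5), roughly 0.35. If m + α were not larger than 1, the exponent would be non-negative and the bound would not shrink at all.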

Function Spaces: A Rigorous Foundation for Filter Analysis

The performance and stability of filters aren’t simply assessed through traditional frequency response analysis; a more robust approach leverages the mathematical framework of function spaces, notably Hardy Space. This space consists of functions that are analytic in the unit disc (or in a half-plane), which in signal-processing terms corresponds to causal, stable transfer functions. By representing filters as functions within Hardy Space, researchers can rigorously define and quantify key characteristics like boundedness, convergence, and the filter’s ability to reject unwanted noise. This method moves beyond empirical observations, providing mathematical guarantees about a filter’s behavior under diverse conditions, and enabling the design of filters optimized for specific signal processing tasks with demonstrable stability, which is crucial for applications ranging from audio processing to medical imaging.

The analysis of causal filters benefits significantly from the interplay between Toeplitz Operators and the Riesz Projector. The Riesz Projector maps a function to its causal part by keeping only its non-negative-index Fourier coefficients, which is exactly the projection onto Hardy Space. A Toeplitz Operator is multiplication by a spectrum (its symbol) followed by this projection; in matrix form it is an infinite matrix that is constant along each diagonal, so its spectral properties can be studied with tools from operator and matrix theory. Working directly with the infinite matrix is not computationally feasible, so in practice it is truncated to its leading n-by-n block. This finite-dimensional approximation keeps the Toeplitz structure and, under suitable conditions on the symbol, retains enough information to reliably predict the filter’s stability, frequency response, and performance.

The efficacy of filter design is fundamentally linked to the characteristics of the signal being processed, specifically its membership within a Hölder Class. A function in the Hölder class with exponents m and α has m continuous derivatives, the m-th of which is Hölder continuous with exponent α; this regularity controls the convergence and accuracy of filter approximations. Mathematical analysis demonstrates that solution stability is guaranteed because the minimal eigenvalue of the associated operator is bounded below by the infimum over frequency of S_y(ω), a spectrum derived from the signal’s properties. Furthermore, the discrepancy between a truncated filter, which is a practical necessity for implementation, and its ideal, untruncated counterpart can be rigorously quantified. This error diminishes at a predictable rate, bounded by |h_n(ω) - h(ω)| < A n^{1-m-\alpha}, where ‘n’ represents the truncation level, A is a constant, and the exponents ‘m’ and ‘α’ are directly tied to the signal’s Hölder regularity, thus providing a clear pathway for optimizing filter performance and ensuring reliable signal reconstruction.
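The lower bound on the minimal eigenvalue can be checked numerically on a finite Toeplitz matrix. The sketch below assumes a hypothetical spectrum S(ω) = 1.25 + cos ω, whose Fourier coefficients are 1.25 at lag 0 and 0.5 at lag ±1; this spectrum and the helper name `toeplitz_from_autocov` are illustrative and not taken from the paper.

```python
import numpy as np

def toeplitz_from_autocov(r):
    """Symmetric Toeplitz matrix whose (i, j) entry is r[|i - j|]."""
    r = np.asarray(r, dtype=float)
    n = r.size
    lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    return r[lags]

# Hypothetical spectrum S(w) = 1.25 + cos(w): lag-0 coefficient 1.25,
# lag-1 coefficient 0.5, all higher lags zero.
n = 64
r = np.zeros(n)
r[0], r[1] = 1.25, 0.5
T = toeplitz_from_autocov(r)

# Smallest eigenvalue of the n-by-n Toeplitz matrix.
min_eig = np.linalg.eigvalsh(T).min()

# Infimum of the spectrum over a dense frequency grid (attained at w = pi).
omega = np.linspace(-np.pi, np.pi, 4001)
inf_S = (1.25 + np.cos(omega)).min()

print(f"min eigenvalue = {min_eig:.5f}, inf S = {inf_S:.5f}")
```

Because the spectrum stays strictly above zero, the matrix is uniformly well conditioned for every size n, which is what makes solving the associated linear system numerically stable.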

The pursuit of accurate forecasting, as detailed in this work concerning scale-free noise, often encounters limitations when traditional methods struggle with non-rational spectra. This investigation cleverly sidesteps those issues by reframing the problem through the lens of Toeplitz operators, allowing for a more robust approach to causal estimation. It recalls Niels Bohr’s assertion: “Every great advance in natural knowledge begins with an intuition that is usually at odds with what is accepted.” The paper challenges conventional signal processing norms, seeking a solution beyond established limitations, a testament to the power of questioning assumptions and embracing novel perspectives in the face of complex data.

What Remains?

The pursuit of forecasting, stripped to its essence, is not about anticipating the inevitable, but about quantifying ignorance. This work offers a sharpened tool for that task, particularly when confronted with the stubborn reality of scale-free noise. The reformulation via Toeplitz operators, while elegant, does not erase the fundamental limitation: all models are, ultimately, approximations of a universe that refuses to be fully known. The practical algorithm presented addresses a specific deficiency, yet it invites further scrutiny. How robust is this approach to deviations from strict scale-free behavior? What unforeseen biases are introduced by the chosen regularization parameters?

Future work will likely focus on extending this framework to non-stationary processes – signals whose statistical properties evolve over time. This introduces a complexity that demands careful consideration; chasing temporal variations risks overfitting and a loss of generalization. A more fruitful path may lie in embracing model misspecification, not as a failure, but as an inherent property of the forecasting problem. The challenge then becomes not to find the ‘true’ model, but to construct one that is ‘sufficiently wrong’ in a predictable manner.

Ultimately, the value of this contribution resides not in its predictive power, since perfect prediction remains a chimera, but in its capacity to illuminate the limits of predictability itself. The signal is not in the noise; the noise is the signal. And what remains, after the equations are simplified and the assumptions exposed, is a clearer understanding of what cannot be known.

Original article: https://arxiv.org/pdf/2601.22294.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-02 13:36