Author: Denis Avetisyan

Researchers are leveraging the power of transformer networks to model and predict the collective behavior of chaotic many-body systems, opening new avenues for understanding complex phenomena.

This work demonstrates that self-attention mechanisms can effectively capture non-Markovian dynamics and provide accurate statistical forecasts for chaotic systems, offering a new approach to reduced-order modeling of quantum and classical many-body problems.

Predicting the long-term behavior of complex many-body systems is fundamentally challenged by inherent chaos and non-Markovian dynamics. This limitation is addressed in ‘Transformer Learning of Chaotic Collective Dynamics in Many-Body Systems’, which introduces a novel approach leveraging self-attention-based transformer networks to learn reduced descriptions directly from time-series data. The authors demonstrate that these networks effectively capture the statistical “climate” of chaotic systems, including temporal correlations, even when pointwise long-term predictions diverge. Could this method unlock new avenues for modeling and forecasting in a wider range of complex physical and engineered systems exhibiting similar characteristics?

The Intractable Many-Body Problem: A Foundation for Dynamical Inquiry

A fundamental challenge in physics is predicting the behavior of systems composed of many interacting quantum particles. The laws governing individual particles are well established, but their collective dynamics rapidly become intractable; this is known as the ‘many-body problem’. The difficulty is not a gap in our understanding of the underlying physics but the exponential growth of computational cost with the number of particles. Each added particle multiplies the dimension of the Hilbert space needed to describe the joint quantum state, so the required resources quickly overwhelm even the most powerful computers. Solving the Schrödinger equation directly is therefore feasible only for small systems, and progress depends on approximation techniques and computational strategies that tame this complexity and reveal collective quantum phenomena.
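To make the exponential scaling concrete, the short sketch below counts basis states and the memory for one dense state vector on a lattice of N sites. The local dimension of 4 (a site that is empty, singly occupied with spin up or down, or doubly occupied) is an illustrative assumption, and the helper names `hilbert_dim` and `dense_state_gib` are hypothetical.

```python
def hilbert_dim(n_sites: int, local_dim: int) -> int:
    """Dimension of the joint Hilbert space: local_dim ** n_sites."""
    return local_dim ** n_sites


def dense_state_gib(n_sites: int, local_dim: int) -> float:
    """Memory (GiB) for one dense state vector of complex128 amplitudes (16 bytes each)."""
    return 16 * hilbert_dim(n_sites, local_dim) / 2**30


# local_dim = 4 is an illustrative choice for a site holding spin-1/2 fermions.
for n in (4, 8, 16, 32):
    print(f"N = {n:2d}: dim = {hilbert_dim(n, 4):.3e}, "
          f"state vector ~ {dense_state_gib(n, 4):.3e} GiB")
```

Adding lattice vibrations makes matters worse: each vibrational mode truncated at n_max quanta multiplies the dimension by a further factor of n_max + 1.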

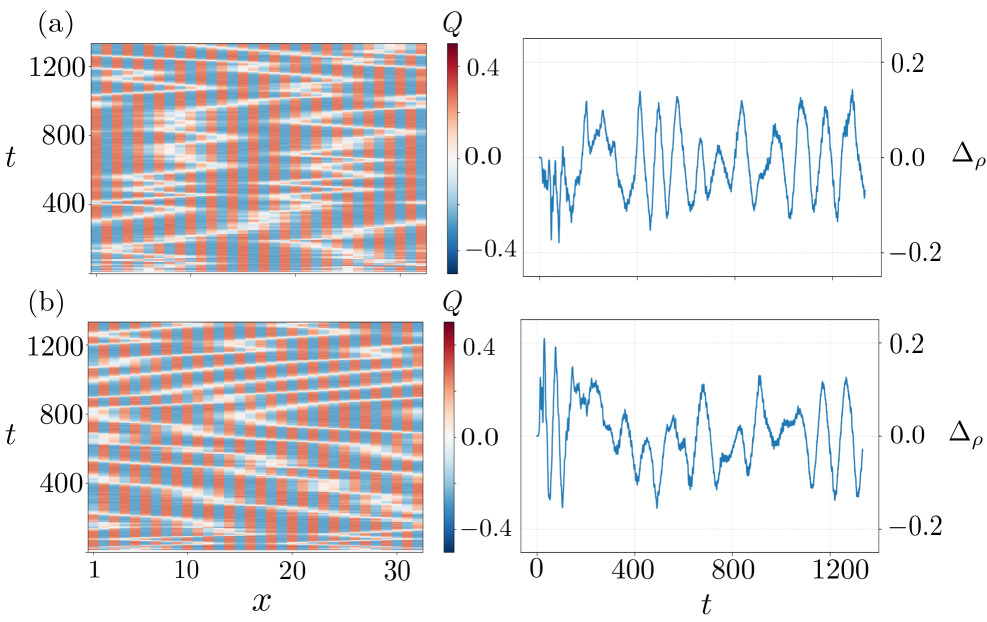

The Holstein model, a cornerstone of condensed matter physics, is a versatile platform for developing and validating new techniques for many-body quantum dynamics. It is a simplified description of electrons interacting with vibrations of a crystal lattice: electrons hop between neighboring sites, and the electron density on each site couples to a local vibrational (phonon) mode. The model captures essential electron-phonon physics while remaining simple enough to study in detail, even though its full quantum dynamics is still expensive to simulate. Its small parameter space (hopping amplitude, phonon frequency, and coupling strength) allows detailed comparisons between analytical predictions, numerical simulations, and experimental observations in real materials. Benchmarking new approaches, from tensor network methods to machine learning algorithms, against its well-studied behavior builds confidence before they are applied to more complex and realistic systems where interactions are strong and conventional methods fail. This supports progress on phenomena such as high-temperature superconductivity and other correlated electron materials, where the collective behavior of many interacting particles determines a material’s properties.
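For reference, one common way to write the Holstein Hamiltonian is shown below, assuming spinless electrons, nearest-neighbor hopping amplitude t, local phonons of frequency ω₀, coupling strength g, and units with ħ = 1. Conventions differ between papers (for example, spin, the sign of the coupling, or a shifted density n_i − 1/2), and this is not necessarily the exact form used in the original article.

```latex
H = -t \sum_{\langle ij \rangle} \left( c_i^\dagger c_j + c_j^\dagger c_i \right)
    + \omega_0 \sum_i b_i^\dagger b_i
    + g \sum_i n_i \left( b_i + b_i^\dagger \right),
\qquad n_i = c_i^\dagger c_i
```

Here c_i and b_i annihilate an electron and a phonon on site i, and the sum over ⟨ij⟩ runs over nearest-neighbor pairs.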

Accurately predicting the future state of a complex quantum system requires recognizing that its evolution is not always determined by present conditions alone: the system often exhibits non-Markovian behavior. Its past states continue to influence its future trajectory, creating a ‘memory’ effect that conventional Markovian approaches, which assume future states depend only on the present, fail to capture. This memory arises from the intricate correlations between particles: when only a few variables of the system are tracked, the influence of the many untracked degrees of freedom reappears as a dependence on the history of the tracked variables. Understanding how it emerges is crucial for modeling realistic dynamics, especially over long timescales. In materials with strong electron interactions, for instance, these correlations can produce quasi-particles whose lifetimes depend on the system’s history, demanding a theoretical framework that goes beyond present-state dependence to predict material properties and behavior reliably over long times.
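A minimal way to see the difference between Markovian and non-Markovian evolution is a toy linear recurrence, used here purely as an illustration and not as a model of the Holstein dynamics. Two histories that end in the same present value produce identical futures under a Markovian rule, but different futures once the rule also looks one step into the past. The function names and coefficients are hypothetical.

```python
def evolve(history, n_steps, step):
    """Extend a trajectory n_steps times using the given update rule."""
    traj = list(history)
    for _ in range(n_steps):
        traj.append(step(traj))
    return traj


def markov_step(traj, a=0.9):
    # The next value depends only on the present value x_n.
    return a * traj[-1]


def memory_step(traj, a=0.9, b=-0.5):
    # The next value also depends on x_{n-1}: a minimal form of memory.
    return a * traj[-1] + b * traj[-2]


# Two different histories that share the SAME present state x_n = 1.0.
hist_a = [0.0, 1.0]
hist_b = [2.0, 1.0]

print(evolve(hist_a, 3, markov_step)[-3:] == evolve(hist_b, 3, markov_step)[-3:])  # True
print(evolve(hist_a, 3, memory_step)[-3:])
print(evolve(hist_b, 3, memory_step)[-3:])
```

Knowing only the present state is enough to continue the first rule, but not the second; a model of the second rule has to be given part of the history.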

Semiclassical Approximation: A Necessary Compromise

Semiclassical dynamics addresses the computational demands of simulating the Holstein model, a key representation of electron-phonon interactions in materials, by combining quantum and classical descriptions. A full quantum treatment of the coupled many-body system is usually prohibitive, so semiclassical methods treat some degrees of freedom, typically the lattice vibrations, as classical variables that follow classical trajectories, which greatly reduces the required resources. More generally, semiclassical techniques propagate wavepackets or Wigner functions along classical trajectories, and they are most reliable when the relevant potential energy surface is well approximated classically. This gives a tractable way to study the coupled dynamics of electronic and vibrational degrees of freedom, including phenomena such as polaron formation and transport that are hard to access with purely quantum methods.
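As a hedged illustration, one common form of the semiclassical (Ehrenfest) equations for the Holstein model treats the site displacements Q_i and momenta P_i classically and describes the electrons by their single-particle density matrix ρ_ij = ⟨c_j† c_i⟩. The mass m, spring constant k, coupling g, and hopping matrix t_ij are symbols assumed for this sketch; sign conventions, and whether the density is shifted by 1/2, vary between papers, and the original work’s exact equations are not reproduced here.

```latex
\begin{aligned}
H_e(Q)_{ij} &= -t_{ij} - g\, Q_i\, \delta_{ij}, \\
\frac{d\rho}{dt} &= -\frac{i}{\hbar}\,\bigl[ H_e(Q), \rho \bigr], \\
\frac{dQ_i}{dt} &= \frac{P_i}{m}, \qquad
\frac{dP_i}{dt} = -k\, Q_i + g\, \rho_{ii}.
\end{aligned}
```

The force g ρ_ii − k Q_i equals minus the derivative of the total energy Tr(ρ H_e) + Σ_i (P_i²/2m + k Q_i²/2) with respect to Q_i, so these equations conserve total energy.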

Applied to the Holstein model, semiclassical dynamics typically takes the form of Ehrenfest dynamics. By the Ehrenfest theorem, expectation values of quantum operators obey equations of motion that resemble classical ones; in the semiclassical scheme, the lattice displacements and momenta are treated as classical variables obeying Newton’s equations, with forces computed from electronic expectation values. The electrons remain quantum mechanical: their statistical state is encoded in a density matrix ρ, which evolves according to the von Neumann equation, iħ dρ/dt = [H, ρ], with a Hamiltonian H that depends on the instantaneous lattice configuration. The two sets of equations are integrated together, so the electrons respond to the moving lattice and the lattice responds to the electron density.
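The coupled scheme can be sketched numerically. The code below assumes a small ring of spinless electrons with electronic Hamiltonian H_e = h0 − g·diag(Q), classical lattice equations dQ/dt = P/m and dP/dt = −kQ + g·diag(ρ), the von Neumann equation for ρ with ħ = 1, and a fourth-order Runge-Kutta integrator. The parameter values and function names are illustrative assumptions, not the paper’s setup; the point is to show how the quantum and classical parts are advanced together.

```python
import numpy as np


def hopping_matrix(n_sites: int, t_hop: float) -> np.ndarray:
    """Nearest-neighbour hopping matrix (-t_hop) on a ring with periodic boundaries."""
    h = np.zeros((n_sites, n_sites))
    for i in range(n_sites):
        j = (i + 1) % n_sites
        h[i, j] = h[j, i] = -t_hop
    return h


def electronic_hamiltonian(h0, Q, g):
    """Single-particle Hamiltonian for the current lattice: H_e = h0 - g * diag(Q)."""
    return h0 - g * np.diag(Q)


def derivatives(rho, Q, P, h0, g, k, m):
    """Right-hand side of the coupled equations (hbar = 1)."""
    h = electronic_hamiltonian(h0, Q, g)
    drho = -1j * (h @ rho - rho @ h)           # von Neumann equation for the electrons
    dQ = P / m                                 # classical kinematics of the lattice
    dP = -k * Q + g * np.real(np.diag(rho))    # elastic force + force from electron density
    return drho, dQ, dP


def rk4_step(state, dt, params):
    """Advance (rho, Q, P) by one fourth-order Runge-Kutta step."""
    def shifted(s, ds, scale):
        return tuple(x + scale * dx for x, dx in zip(s, ds))

    k1 = derivatives(*state, *params)
    k2 = derivatives(*shifted(state, k1, 0.5 * dt), *params)
    k3 = derivatives(*shifted(state, k2, 0.5 * dt), *params)
    k4 = derivatives(*shifted(state, k3, dt), *params)
    return tuple(x + dt / 6 * (d1 + 2 * d2 + 2 * d3 + d4)
                 for x, d1, d2, d3, d4 in zip(state, k1, k2, k3, k4))


def total_energy(state, params):
    """Electronic energy Tr(rho H_e) plus classical lattice energy (a conserved quantity)."""
    rho, Q, P = state
    h0, g, k, m = params
    h = electronic_hamiltonian(h0, Q, g)
    return np.real(np.trace(rho @ h)) + np.sum(P**2 / (2 * m) + 0.5 * k * Q**2)


def simulate(n_sites=8, t_hop=1.0, g=1.5, k=1.0, m=1.0, dt=0.01, n_steps=2000, seed=0):
    """Run a short trajectory at half filling, starting from small random displacements."""
    rng = np.random.default_rng(seed)
    params = (hopping_matrix(n_sites, t_hop), g, k, m)
    Q = 0.1 * rng.standard_normal(n_sites)
    P = np.zeros(n_sites)
    _, vecs = np.linalg.eigh(electronic_hamiltonian(params[0], Q, g))
    occ = vecs[:, : n_sites // 2]                    # fill the lowest n_sites/2 orbitals
    rho = (occ @ occ.conj().T).astype(complex)       # rho_ij = <c_j^dag c_i>
    state = (rho, Q, P)
    e_start = total_energy(state, params)
    for _ in range(n_steps):
        state = rk4_step(state, dt, params)
    return state, e_start, total_energy(state, params)


(rho, Q, P), e_start, e_end = simulate()
print(f"energy: start {e_start:.6f}, end {e_end:.6f}")
print(f"electron number: {np.real(np.trace(rho)):.6f}")
```

A simple sanity check for such an integrator is that the total energy and the electron number Tr ρ stay constant up to integration error.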

Even with these semiclassical simplifications, accurately simulating the Holstein model remains difficult. The dynamics are high-dimensional and, in the regimes of interest, chaotic. Moreover, when one seeks a reduced description in terms of a few collective variables, their dynamics are non-Markovian: the future of these variables depends on their past trajectory, not just on their present values. Traditional approximations often fail to represent these memory effects, leading to inaccurate predictions. Resolving them requires either computationally expensive methods or new approximation techniques that preserve long-range temporal correlations in the evolution.

Harnessing Transformer Architectures for Dynamical Prediction

Applying Transformer architectures to the Holstein model departs from their conventional use in natural language processing. By framing the system’s time evolution as a sequence, a Transformer can be trained to predict future states from a history of observations, much as it predicts the next token in machine translation or text generation. The model receives time-series data describing the system’s state, here the collective variables of a reduced description, and learns to extrapolate future values from patterns in the historical data.

Concretely, the time series is treated as an input sequence, analogous to a sentence of tokens. The model learns a mapping from a window of past observations to the next state, and longer trajectories can be generated by repeating this step. The length of the input window, that is, how much history the model sees, is a critical parameter: it must be long enough to capture the memory effects that make the dynamics non-Markovian.
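Turning a trajectory into training data is usually a matter of slicing it into (history, next state) pairs. The sketch below assumes a one-step-ahead target and a fixed context length; the function name `make_windows` is hypothetical, and the paper’s exact preprocessing may differ.

```python
import numpy as np


def make_windows(series: np.ndarray, context_len: int):
    """Split a (T, d) time series into (history, next-state) training pairs.

    Each input is `context_len` consecutive states and the target is the state
    that immediately follows. Returns arrays of shape
    (T - context_len, context_len, d) and (T - context_len, d).
    """
    if series.ndim == 1:
        series = series[:, None]                 # treat a scalar series as d = 1
    n_samples = len(series) - context_len
    if n_samples <= 0:
        raise ValueError("series must be longer than context_len")
    X = np.stack([series[i : i + context_len] for i in range(n_samples)])
    y = series[context_len:]
    return X, y


series = np.arange(10.0)
X, y = make_windows(series, context_len=4)
print(X.shape, y.shape)     # (6, 4, 1) (6, 1)
print(X[0].ravel(), y[0])   # [0. 1. 2. 3.] [4.]
```

A longer `context_len` gives the model more history to draw on, at the cost of fewer training pairs from a fixed trajectory and more computation per prediction.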

The self-attention mechanism computes, for each time step, a weighted sum over the input time steps, letting the model focus on the history most relevant to the prediction. Each time step is projected to a query, a key, and a value. The attention weight between two steps is a softmax-normalized similarity between one step’s query and the other’s key, and the output is the correspondingly weighted sum of values. Because these weights are computed from the data itself, any two steps within the context window interact directly, however far apart they are. By contrast, a recurrent neural network must carry information forward one step at a time through its hidden state, and a convolutional network’s receptive field grows only with depth and kernel size; both make long-range temporal dependencies harder to capture.
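The query-key-value computation described above can be written in a few lines of NumPy. This is a minimal single-head sketch with a causal mask (each step attends only to itself and earlier steps) and random projection matrices standing in for learned weights; it is not the architecture used in the paper.

```python
import numpy as np


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with a causal mask.

    x: (L, d_model) sequence of embedded time steps.
    w_q, w_k, w_v: (d_model, d_head) projection matrices.
    Returns the (L, d_head) outputs and the (L, L) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])            # similarity of every pair of steps
    L = x.shape[0]
    future = np.triu(np.ones((L, L), dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)          # forbid attending to future steps
    scores -= scores.max(axis=-1, keepdims=True)        # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)      # softmax over allowed steps
    return weights @ v, weights


rng = np.random.default_rng(0)
L, d_model, d_head = 6, 8, 4
x = rng.standard_normal((L, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)                 # (6, 4)
print(np.round(weights[-1], 3))  # the last step can weight any earlier step
```

Note that the distance between two steps never enters the weight computation; only the content of their queries and keys does.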

Revealing Statistical Climate and Long-Term System Behavior

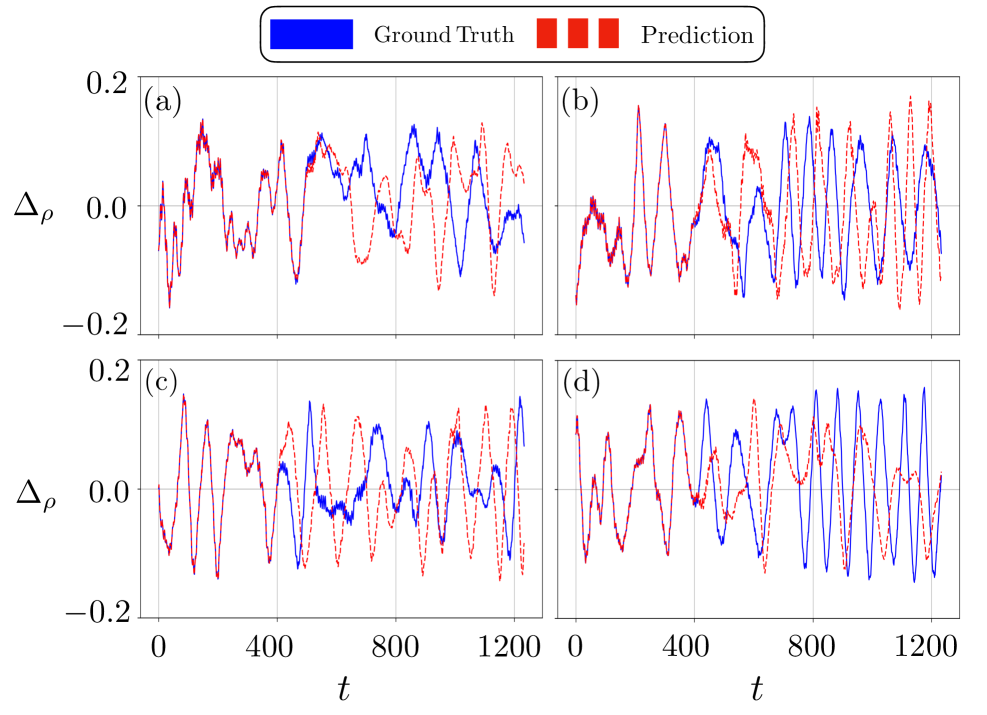

This research applies Transformer models to predict the statistical climate of a complex dynamical system, using data generated from the Holstein model in a regime where its dynamics are chaotic. Trained on this data, the model learns to forecast the system’s overall statistical properties, such as how values at different times are correlated. The goal is not to predict exact future states but to reproduce the probabilities of different behaviors over long periods. Capturing these temporal correlations characterizes the system’s long-term behavior and shows what remains predictable despite chaos. The approach thus moves beyond short-term forecasting, describing the system’s ‘climate’ (its typical patterns and tendencies) where traditional methods often struggle with strongly nonlinear dynamics.
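One standard climate statistic is the normalized temporal autocorrelation function C(τ) = ⟨δx(t) δx(t+τ)⟩ / ⟨δx²⟩, which can be compared between generated and reference trajectories. The sketch below computes it for a scalar series; the AR(1) signal is a stand-in chosen because its autocorrelation is known analytically (φ^τ), not data from the paper.

```python
import numpy as np


def autocorrelation(x: np.ndarray, max_lag: int) -> np.ndarray:
    """Normalized autocorrelation C(tau) for tau = 0..max_lag.

    C(tau) = <dx(t) dx(t + tau)> / <dx^2>, with dx = x - mean(x) and <.> a time average.
    """
    dx = np.asarray(x, dtype=float) - np.mean(x)
    var = np.mean(dx * dx)
    n = len(dx)
    return np.array([np.mean(dx[: n - tau] * dx[tau:]) / var for tau in range(max_lag + 1)])


# Stand-in test signal: an AR(1) process x_t = phi * x_{t-1} + noise.
rng = np.random.default_rng(1)
phi, n = 0.8, 100_000
noise = rng.standard_normal(n)
x = np.zeros(n)
for t in range(1, n):
    x[t] = phi * x[t - 1] + noise[t]

acf = autocorrelation(x, max_lag=5)
print(np.round(acf, 3))                   # estimated C(tau)
print(np.round(phi ** np.arange(6), 3))   # exact C(tau) = phi**tau for AR(1)
```

Two trajectories can disagree point by point and still have matching autocorrelation functions, which is exactly the sense in which a model can get the climate right.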

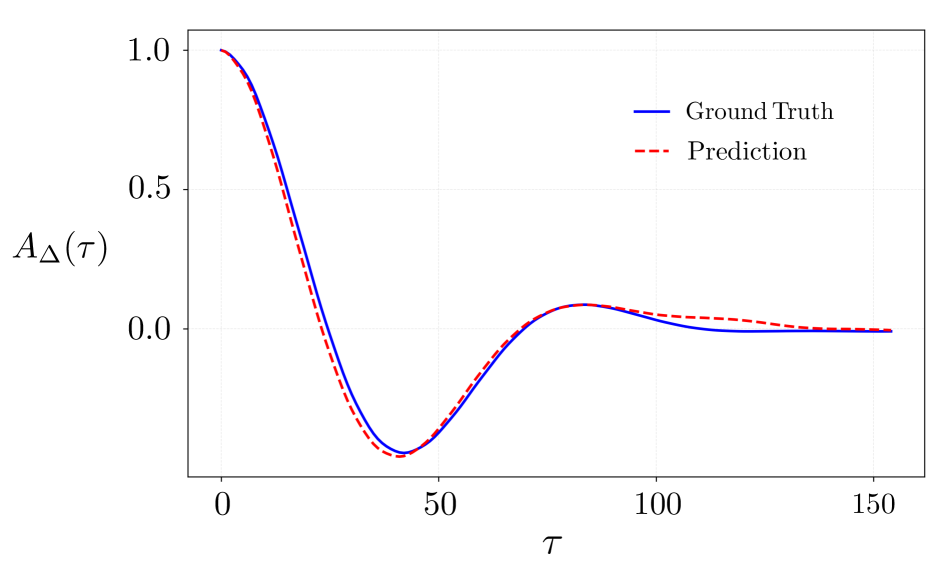

The Transformer’s forecasts also capture the system’s non-Markovian dynamics. The model does not assume that the next state depends only on the current one; it uses the system’s recent history, a form of ‘memory’. Markovian models assume that the future depends only on the present, whereas this research shows that the Transformer can learn and reproduce these temporal dependencies even in a chaotic regime. By modeling this memory, the Transformer reproduces complex, long-term correlations in the system’s behavior, revealing how past states shape its evolution and offering a promising route to modeling and predicting chaotic systems.
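Generating long trajectories from a one-step model is typically done autoregressively: each prediction is appended to the history and the most recent window is fed back in. The sketch below assumes that setup; `rollout` and `toy_predict` are hypothetical names, and `toy_predict` is a fixed two-step rule standing in for a trained Transformer.

```python
import numpy as np
from typing import Callable


def rollout(predict: Callable[[np.ndarray], np.ndarray],
            seed_history: np.ndarray, n_steps: int, context_len: int) -> np.ndarray:
    """Generate a trajectory by feeding a one-step predictor its own outputs.

    predict: maps a (context_len, d) window of past states to the next (d,) state.
    seed_history: (T0, d) observed states that start the rollout, T0 >= context_len.
    Returns the (T0 + n_steps, d) trajectory, seed included.
    """
    if len(seed_history) < context_len:
        raise ValueError("need at least context_len observed states to start")
    traj = [np.asarray(s, dtype=float) for s in seed_history]
    for _ in range(n_steps):
        window = np.stack(traj[-context_len:])       # the most recent context_len states
        traj.append(np.asarray(predict(window), dtype=float))
    return np.stack(traj)


def toy_predict(window: np.ndarray) -> np.ndarray:
    """Stand-in for a trained model: a fixed rule that uses two past states."""
    return 0.9 * window[-1] - 0.5 * window[-2]


traj = rollout(toy_predict, np.array([[0.0], [1.0]]), n_steps=3, context_len=2)
print(traj.ravel())
```

Because the model consumes a window rather than a single state, it can represent dynamics with memory; errors also feed back through the window, which is why long rollouts are judged statistically rather than pointwise.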

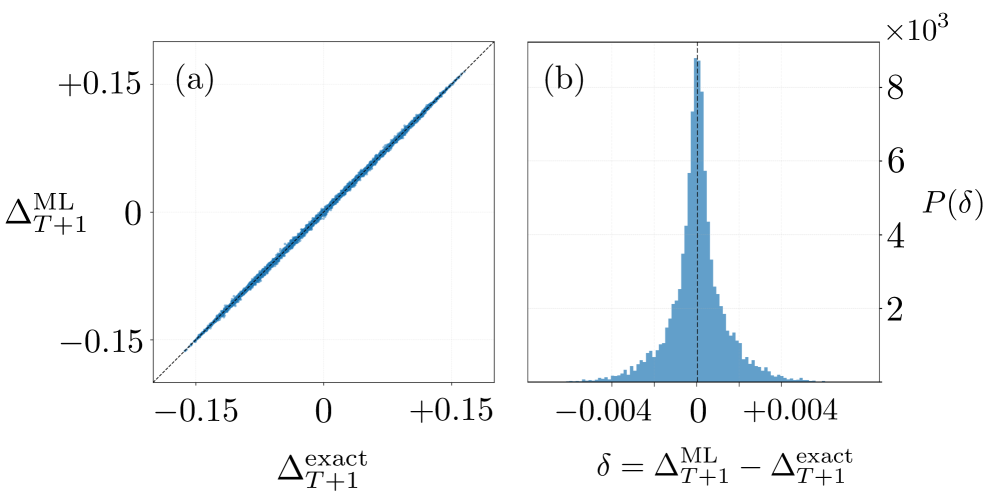

Quantitatively, the Transformer reaches a training mean squared error (MSE) of about 10⁻⁵, indicating accurate one-step predictions. More importantly, the trajectories it generates closely match those obtained from exact simulations in a statistical sense, reproducing the system’s autocorrelation function, which measures how strongly the state at one time is correlated with the state a given time lag later. This holds even though the system is chaotic, so tiny differences in initial conditions lead to very different trajectories: the model does not reproduce specific long-term trajectories, but it does capture the statistical properties that govern the system’s long-term behavior.
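The gap between pointwise and statistical agreement can be seen in any chaotic system. The sketch below uses the logistic map at r = 4, a textbook chaotic map chosen only as a stand-in for the Holstein dynamics: two trajectories whose initial conditions differ by 10⁻¹⁰ quickly stop agreeing point by point, yet their long-time means and histograms still match.

```python
import numpy as np


def logistic_trajectory(x0: float, n_steps: int, r: float = 4.0) -> np.ndarray:
    """Iterate the logistic map x_{n+1} = r * x_n * (1 - x_n)."""
    xs = [x0]
    for _ in range(n_steps - 1):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return np.array(xs)


n = 200_000
a = logistic_trajectory(0.2, n)
b = logistic_trajectory(0.2 + 1e-10, n)   # perturb the initial condition by 1e-10

# "Weather": pointwise agreement is lost within a few dozen iterations.
pointwise_gap = np.max(np.abs(a[100:1000] - b[100:1000]))
# "Climate": long-time statistics of the two trajectories still agree.
hist_a, _ = np.histogram(a, bins=10, range=(0.0, 1.0))
hist_b, _ = np.histogram(b, bins=10, range=(0.0, 1.0))
print(f"max pointwise gap (steps 100-999): {pointwise_gap:.3f}")
print(f"means: {a.mean():.4f} vs {b.mean():.4f}")
print("max histogram difference:", np.max(np.abs(hist_a / n - hist_b / n)))
```

A low one-step error therefore says little about long rollouts on its own; statistics such as histograms and autocorrelation functions are the meaningful comparison for chaotic dynamics.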

Modeling chaotic many-body systems demands mathematical care. This work, which uses transformer networks for time-series forecasting, reflects the need to define precisely what is being predicted before attempting a solution. The authors show that the networks capture the statistical ‘climate’ of these systems even where deterministic long-term prediction fails, because the learned reduced model represents the underlying dynamics well enough to reproduce their statistics. As Richard Feynman stated, “The first principle is that you must not fool yourself – and you are the easiest person to fool.” This principle underscores the value of checking a model carefully against exact results, rather than accepting one that merely appears to work, a standard central to the authors’ approach to reduced-order modeling and the inherent complexities of chaotic systems.

Beyond the Horizon

The demonstrated capacity of transformer networks to model the statistical properties of chaotic many-body systems is, at first glance, encouraging. However, the crucial distinction between forecasting statistical ‘climates’ and predicting individual trajectories must be rigorously maintained. The current formalism, while adept at capturing correlations, sidesteps the fundamental issue of Lyapunov exponents and the inherent limits they place on predictability. Future work must address whether these networks merely describe chaos or offer a pathway to understanding its underlying mechanisms, a distinction critical for any claim of genuine scientific advancement.
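The limit that a Lyapunov exponent λ places on predictability can be made concrete: an initial error δ₀ grows roughly as δ₀·e^(λn), so it reaches a tolerance Δ after about ln(Δ/δ₀)/λ steps. The sketch below estimates λ for the logistic map at r = 4 (exact value ln 2), again as a stand-in system rather than the paper’s; the helper names are hypothetical.

```python
import math


def logistic_lyapunov(x0: float = 0.2, r: float = 4.0,
                      n_steps: int = 100_000, n_burn: int = 1_000) -> float:
    """Estimate the Lyapunov exponent of f(x) = r x (1 - x) as the long-time
    average of ln|f'(x_n)|, where f'(x) = r (1 - 2x)."""
    x = x0
    for _ in range(n_burn):                      # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_steps):
        total += math.log(abs(r * (1.0 - 2.0 * x)))
        x = r * x * (1.0 - x)
    return total / n_steps


def predictability_horizon(lyap: float, delta0: float, tolerance: float) -> float:
    """Steps for an initial error delta0 to reach `tolerance`,
    assuming exponential growth delta_n = delta0 * exp(lyap * n)."""
    return math.log(tolerance / delta0) / lyap


lam = logistic_lyapunov()
print(f"estimated lambda = {lam:.3f} (exact for r = 4: ln 2 = {math.log(2):.3f})")
print(f"steps for an error of 1e-10 to reach 0.1: {predictability_horizon(lam, 1e-10, 0.1):.1f}")
```

Because the horizon grows only logarithmically in the initial precision, making the initial error ten times smaller buys just ln(10)/λ ≈ 3.3 extra steps here, which is why statistical targets are the natural goal in chaotic regimes.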

A pressing concern is computational scaling. While self-attention provides a powerful inductive bias, its cost grows quadratically with sequence length, a significant bottleneck for long input histories and for truly large systems. Investigating sparse attention mechanisms, or alternative architectures that preserve expressive power at lower computational cost, is therefore paramount. Furthermore, relying solely on empirically derived training data introduces a degree of arbitrariness; a more principled approach would build known symmetries or conserved quantities directly into the network architecture.
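The quadratic cost is easy to quantify by counting the query-key scores a causal attention layer computes. The sketch below compares full causal attention with sliding-window (local) attention, one simple example of sparse attention, assumed here for illustration rather than taken from the paper. Counting scores ignores constant factors and says nothing about how much predictive power a restricted window gives up.

```python
from typing import Optional


def causal_attention_entries(seq_len: int, window: Optional[int] = None) -> int:
    """Number of (query, key) scores computed by one causal attention layer.

    window=None: full causal attention, each step attends to itself and all
    earlier steps, giving seq_len * (seq_len + 1) / 2 scores, i.e. O(L^2).
    window=w: sliding-window attention, each step attends to at most the w most
    recent steps including itself, i.e. O(L * w).
    """
    if window is None:
        return seq_len * (seq_len + 1) // 2
    return sum(min(i + 1, window) for i in range(seq_len))


for L in (1_000, 10_000, 100_000):
    full = causal_attention_entries(L)
    local = causal_attention_entries(L, window=64)
    print(f"L={L:>7,}  full={full:>14,}  window-64={local:>11,}  ratio={full / local:,.0f}x")
```

The ratio grows roughly linearly with L, which is why restricting attention matters most for long histories.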

Ultimately, the success of this approach hinges not on achieving ever-more-accurate short-term predictions, but on its potential to illuminate the fundamental relationship between information, entropy, and emergent behavior in complex systems. The true test will be whether these networks can move beyond pattern recognition and contribute to a deeper, mathematically rigorous understanding of the very nature of chaos.

Original article: https://arxiv.org/pdf/2601.19080.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-29 01:38