Author: Denis Avetisyan

A new framework uses artificial intelligence to predict and mitigate risks in live streaming by analyzing patterns of behavior across multiple sessions.

This paper introduces CS-VAR, a retrieval-augmented generation framework leveraging cross-session evidence to improve live streaming risk assessment through behavioral pattern analysis.

Detecting coordinated malicious behavior in live streaming presents a unique challenge because it accumulates gradually and recurs across disparate sessions. To address this, we introduce CS-VAR, a framework detailed in ‘Deja Vu in Plots: Leveraging Cross-Session Evidence with Retrieval-Augmented LLMs for Live Streaming Risk Assessment’, which leverages large language models to identify and analyze ‘behavioral patches’ that reappear across streams. By retrieving and reasoning over cross-session evidence, CS-VAR empowers a lightweight model to perform efficient, structured risk assessment in real time. Could this approach of recognizing ‘déjà vu’ in streaming patterns fundamentally reshape how platforms proactively mitigate emerging threats?

The Inevitable Cascade: Risk in the Live Stream

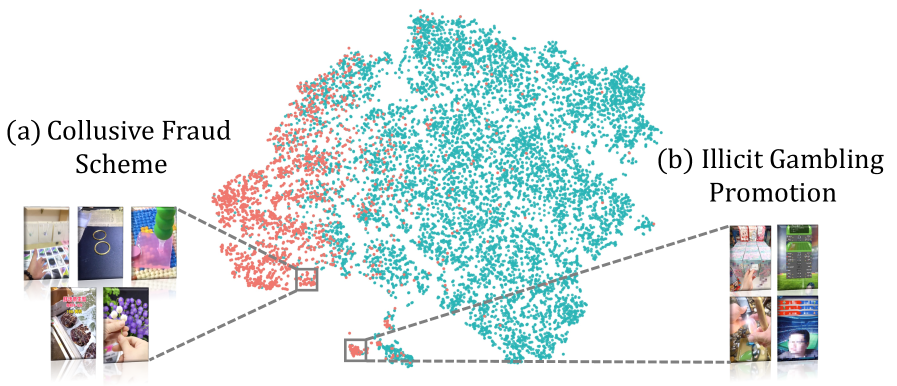

The proliferation of live streaming has created fertile ground for emerging risks, particularly fraudulent activities and illicit gambling operations. Platforms are engaged in a perpetual struggle to identify and mitigate these threats, a challenge intensified by the sheer scale and speed of live content. Unlike traditional content moderation, which often deals with static assets, live streams demand instantaneous risk assessment. Delays, even of a few seconds, can allow malicious actors to exploit vulnerabilities and inflict significant harm, both financially and reputationally. Consequently, platforms are compelled to invest heavily in sophisticated detection systems capable of analyzing streams in real time, flagging suspicious behavior, and intervening before damage occurs. This necessitates a shift from reactive moderation to proactive risk management, requiring constant adaptation to evolving tactics and a commitment to minimizing latency in the detection process.

Conventional risk assessment techniques, designed for static datasets and deliberate analysis, are increasingly inadequate when applied to the frenetic pace of live streaming platforms. These systems typically rely on batch processing and rule-based detection, creating a significant lag between the occurrence of a risky event and its identification. The sheer volume of data – encompassing user interactions, chat messages, transaction details, and video content – overwhelms these traditional methods. Furthermore, the velocity at which this data streams in demands immediate processing, a capability beyond the reach of systems designed for slower, more considered evaluation. This mismatch between processing speed and data flow results in delayed responses, allowing fraudulent activities or harmful content to propagate before intervention, ultimately undermining the safety and integrity of the live streaming experience.

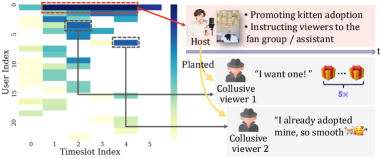

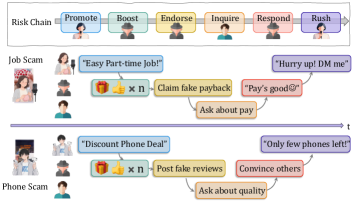

Detecting malicious activity within live streams necessitates a shift from static risk profiles to the analysis of behavioral sequences. Unlike traditional methods that assess individual actions in isolation, effective risk detection now centers on understanding the pattern of user interactions over time. A user engaging in seemingly innocuous actions – such as repeatedly sending messages, making small donations, or rapidly switching between viewing different streamers – can, when observed as a sequence, reveal coordinated fraudulent behavior or attempts to manipulate the platform. These behavioral fingerprints, built from the temporal ordering of actions, allow systems to identify anomalies indicative of risk, even when individual actions appear legitimate. Consequently, advanced algorithms are being developed to model typical user behavior and flag deviations, providing a crucial defense against evolving threats in the fast-paced environment of live broadcasting.

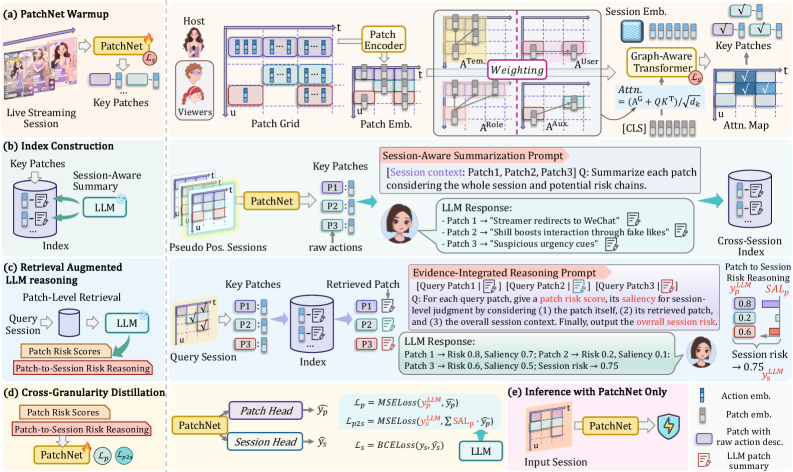

The Hybrid Loom: Weaving Session Understanding

CS-VAR uses a hybrid architecture that integrates PatchNet, a lightweight neural network, with a Large Language Model (LLM) to achieve session understanding. PatchNet is responsible for the efficient extraction and encoding of user behavior, while the LLM provides reasoning and analytical capabilities. This combination allows CS-VAR to pair the speed and efficiency of a small neural network for feature extraction with the contextual understanding of an LLM. The framework is designed to benefit from the strengths of both approaches, creating a system capable of both rapid processing and nuanced interpretation of user session data.

PatchNet operates by identifying and encoding discrete sequences of user actions, termed ‘behavioral patches’, within live stream data. These patches are not simply individual actions, but rather temporally-bound groupings representing complete, meaningful segments of user behavior – for example, a user navigating to a specific product page, adding it to a cart, and initiating checkout. The architecture employs a lightweight neural network to efficiently process the raw action sequences, creating vector embeddings that capture the essential characteristics of each patch. This process allows for the reduction of variable-length action sequences into fixed-size representations, facilitating subsequent analysis and comparison with other behavioral patterns. The resulting patch embeddings serve as a concise and informative input for the LLM, enabling it to reason about user intent and potential risk factors.
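To make the idea of turning variable-length action sequences into fixed-size patch representations concrete, here is a minimal sketch. It is not PatchNet itself: the action vocabulary, the time-gap rule for cutting patches, and the frequency-based encoding are all assumptions chosen for illustration, standing in for the learned neural encoder the article describes.

```python
from collections import Counter

# Hypothetical action vocabulary; the real feature set is not given in the article.
ACTIONS = ["comment", "gift", "follow", "share"]

def split_into_patches(events, max_gap=30.0):
    """Group (timestamp, action) events into patches.

    Assumed rule: a new patch starts whenever the gap to the
    previous event exceeds max_gap seconds.
    """
    patches = []
    for ts, action in sorted(events):
        if patches and ts - patches[-1][-1][0] <= max_gap:
            patches[-1].append((ts, action))
        else:
            patches.append([(ts, action)])
    return patches

def encode_patch(patch):
    """Map a variable-length patch to a fixed-size vector of action frequencies."""
    counts = Counter(action for _, action in patch)
    total = len(patch)
    return [counts[a] / total for a in ACTIONS]

events = [
    (0.0, "comment"), (5.0, "gift"), (8.0, "gift"),
    (120.0, "follow"), (125.0, "share"),
]
patches = split_into_patches(events)
embeddings = [encode_patch(p) for p in patches]
```

Every patch, whatever its length, ends up as a vector with one entry per action type, which is the property that makes later comparison across sessions straightforward.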

The Large Language Model (LLM) in CS-VAR performs risk evaluation by analyzing behavioral patches (encoded sequences of user actions) and incorporating evidence from comparable sessions. This process involves retrieving relevant sessions based on similarity to the current session’s patch sequences, and then presenting the LLM with both the current patch and supporting evidence from those analogous sessions. The LLM utilizes this combined information to assess risk levels, allowing for more nuanced and accurate evaluations than could be achieved through analysis of a single session in isolation. This cross-session reasoning capability enables the system to identify patterns and anomalies indicative of potential risks.
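The retrieve-then-reason step can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's pipeline: the cosine-similarity ranking, the in-memory `index` of past sessions, the `summary` and `label` fields, and the prompt wording are all hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def retrieve(query, index, k=2):
    """Return the k stored sessions most similar to the query embedding."""
    ranked = sorted(index, key=lambda item: cosine(query, item["embedding"]), reverse=True)
    return ranked[:k]

def build_prompt(current_summary, evidence):
    """Assemble a text prompt from the current session and retrieved evidence."""
    lines = ["Current session: " + current_summary, "Similar past sessions:"]
    for item in evidence:
        lines.append(f"- {item['summary']} (label: {item['label']})")
    lines.append("Assess the risk of the current session.")
    return "\n".join(lines)

# Hypothetical store of past sessions with toy 3-dimensional embeddings.
index = [
    {"embedding": [0.9, 0.1, 0.0], "summary": "repeated gift bursts then off-platform link", "label": "risky"},
    {"embedding": [0.0, 0.2, 0.9], "summary": "steady chat, no transactions", "label": "benign"},
    {"embedding": [0.8, 0.3, 0.1], "summary": "gift bursts with lottery wording", "label": "risky"},
]
evidence = retrieve([1.0, 0.2, 0.0], index, k=2)
prompt = build_prompt("gift bursts followed by external contact request", evidence)
```

The resulting `prompt` string is what would be handed to the LLM; the call to an actual model is deliberately left out, since the article does not specify one.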

Distilling Insight: The Transfer of Knowledge

Cross-Granularity Distillation is implemented to enhance PatchNet’s performance by leveraging the knowledge embedded within a large language model (LLM). This process involves transferring insights from the LLM, trained on extensive datasets, to the PatchNet architecture. Specifically, the LLM serves as a ‘teacher’ model, providing supervisory signals to guide the training of PatchNet, the ‘student’ model. This knowledge transfer isn’t simply replicating outputs; rather, it focuses on distilling the reasoning process and nuanced understandings of behavioral patterns learned by the LLM, enabling PatchNet to generalize more effectively and improve its predictive capabilities without requiring the same scale of data or computational resources.
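For readers unfamiliar with teacher-student training, the sketch below shows a standard knowledge distillation loss: a KL term pulling the student's softened predictions toward the teacher's, blended with an ordinary cross-entropy term on the ground-truth label. It is a generic textbook form, not the Cross-Granularity Distillation objective itself, and the `alpha` and `temperature` values are arbitrary assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_probs, hard_label,
                      alpha=0.5, temperature=2.0):
    """Blend a soft (teacher-matching) term with a hard (label) term."""
    soft_student = softmax(student_logits, temperature)
    soft_term = kl_divergence(teacher_probs, soft_student)
    hard_term = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft_term + (1 - alpha) * hard_term

# Teacher (LLM) believes the session is risky with probability 0.8.
teacher = [0.2, 0.8]
loss_agree = distillation_loss([-1.0, 1.0], teacher, hard_label=1)
loss_disagree = distillation_loss([1.0, -1.0], teacher, hard_label=1)
```

A student whose logits lean the same way as the teacher incurs a smaller loss than one that leans the other way, which is the signal that guides the lightweight model during training.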

PatchNet incorporates Graph-Aware Attention to model dependencies between behavioral patches within a user session. This mechanism represents the sequence of patches as nodes in a graph, enabling the model to learn relationships beyond simple sequential order. Attention weights are calculated based on the connections between patches, allowing the model to prioritize and aggregate information from related patches more effectively. This improves the encoding of session behavior by capturing complex, non-linear interactions between different user actions, leading to a more nuanced representation of user intent and risk.
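One simple way to picture attention that respects graph structure is to let each patch attend only to the patches it is connected to. The sketch below does exactly that with scaled dot-product scores and no learned projections. The adjacency matrix, the absence of learned weights, and the requirement that every node has a self-loop are simplifying assumptions; the article does not say how PatchNet builds its graph or parameterizes its attention.

```python
import math

def graph_attention(embeddings, adjacency):
    """Aggregate each patch from its graph neighbours only.

    adjacency[i][j] == 1 means patch i may attend to patch j.
    Every row is assumed to contain at least one 1 (e.g. a self-loop).
    """
    dim = len(embeddings[0])
    outputs = []
    for i, query in enumerate(embeddings):
        neighbours = [j for j, connected in enumerate(adjacency[i]) if connected]
        # Scaled dot-product score between patch i and each neighbour.
        scores = [
            sum(a * b for a, b in zip(query, embeddings[j])) / math.sqrt(dim)
            for j in neighbours
        ]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w / total * embeddings[j][d] for w, j in zip(weights, neighbours))
            for d in range(dim)
        ])
    return outputs

embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adjacency = [
    [1, 0, 1],  # patch 0 is linked to itself and patch 2
    [0, 1, 0],  # patch 1 is isolated apart from its self-loop
    [1, 0, 1],
]
attended = graph_attention(embeddings, adjacency)
```

Patch 1, which has no neighbours other than itself, passes through unchanged, while patches 0 and 2 blend information from each other regardless of how far apart they sit in the sequence.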

The model utilizes a saliency score to quantify the contribution of individual behavioral patches to the Large Language Model’s (LLM) overall risk assessment. This score, generated during the distillation process, represents the relative importance of each patch in influencing the LLM’s final decision. By assigning a numerical value to each patch’s contribution, the system provides a degree of explainability, allowing for the identification of specific behavioral patterns driving risk predictions. This granular insight facilitates debugging, model refinement, and improved trust in the system’s outputs by clarifying the rationale behind each assessment.
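The article does not spell out how the saliency score is computed, so the following is only an analogy: a leave-one-out (occlusion) estimate, where a patch's saliency is the drop in risk score when that patch is removed. The scoring function here is a deliberately trivial stand-in, not the LLM, and both function names are hypothetical.

```python
def occlusion_saliency(patches, score_fn):
    """Saliency of patch i = full score minus the score with patch i removed."""
    full = score_fn(patches)
    return [
        full - score_fn(patches[:i] + patches[i + 1:])
        for i in range(len(patches))
    ]

def toy_risk_score(patches):
    """Stand-in scorer (assumption): fraction of patches containing a gift action."""
    if not patches:
        return 0.0
    return sum("gift" in p for p in patches) / len(patches)

patches = [["comment"], ["gift", "gift"], ["follow"]]
saliency = occlusion_saliency(patches, toy_risk_score)
most_salient = max(range(len(patches)), key=lambda i: saliency[i])
```

Whatever the actual mechanism, the output has the same shape as described above: one number per patch, which can be sorted to show a moderator which segments of behavior drove the assessment.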

Combining Cross-Granularity Distillation, Graph-Aware Attention, and saliency-guided reasoning resulted in state-of-the-art performance. Specifically, the model achieved a 5.0% improvement in Precision-Recall Area Under the Curve (PR-AUC) and a 10.9% reduction in the false positive rate when benchmarked against existing methods on the same threat detection tasks. These gains demonstrate the effectiveness of the combined approach in both identifying malicious activity and minimizing unnecessary alerts.

The Inevitable View: Proactive Control and the Path Forward

The core of proactive risk management in dynamic live streams lies in the creation of a holistic ‘session representation’. This is achieved through CS-VAR, a system that doesn’t merely flag individual problematic instances, but instead generates a vector embedding, a numerical translation, of the entire streaming session. This embedding effectively captures the evolving risk level, factoring in the duration, content, and user interactions. By condensing a potentially lengthy stream into a compact vector, CS-VAR allows for a global assessment of risk, enabling moderators to quickly identify sessions demanding immediate attention and prioritize their review efforts, rather than reacting to isolated events.
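As a rough picture of how many patch vectors might be condensed into one session vector, the sketch below takes a weighted mean of patch embeddings. Weighted pooling is an assumption made for illustration; the article does not state how CS-VAR aggregates patches, and the weights here are arbitrary.

```python
def pool_session(patch_embeddings, weights=None):
    """Weighted mean of patch embeddings; uniform weights if none are given."""
    n = len(patch_embeddings)
    weights = weights or [1.0] * n
    total = sum(weights)
    dim = len(patch_embeddings[0])
    return [
        sum(w * e[d] for w, e in zip(weights, patch_embeddings)) / total
        for d in range(dim)
    ]

# Three toy patch embeddings; the last patch is given twice the weight.
session_vec = pool_session(
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    weights=[1.0, 1.0, 2.0],
)
```

However long the stream runs, the result has the same dimensionality as a single patch embedding, which is what makes sessions directly comparable and sortable for review.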

Session-aware summarization serves as a crucial bridge between automated detection and effective human intervention in live streaming moderation. Rather than simply flagging potentially harmful content, the system generates concise, contextual summaries of each streaming session, highlighting key events and risk indicators. This allows human moderators to quickly grasp the nature of the threat – whether it involves fraudulent activity, gambling references, or other policy violations – and respond appropriately with minimal delay. By providing this nuanced understanding, the framework significantly reduces the cognitive load on moderators, enabling them to efficiently review a higher volume of sessions and make informed decisions, ultimately enhancing the safety and quality of the live streaming platform.

The developed framework demonstrates efficacy beyond simple detection of illicit activities like fraud and gambling, actively enabling proactive risk control within live streaming platforms. Rigorous evaluation reveals a Recall score of 0.6321, indicating the system successfully identifies a substantial proportion of risky sessions. Importantly, this performance is achieved while maintaining a low false positive rate, minimizing unnecessary intervention and ensuring a streamlined moderation process. This balance between sensitivity and precision highlights the framework’s potential to not only flag problematic content but also to preemptively mitigate risks before they escalate, fostering a safer and more reliable user experience.
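For reference, recall and false positive rate are both simple ratios over the confusion matrix: recall is the share of truly risky sessions that get flagged, and the false positive rate is the share of benign sessions that get flagged by mistake. The sketch below computes both on a small made-up label set; the data is illustrative only and unrelated to the paper's evaluation.

```python
def recall_and_fpr(y_true, y_pred):
    """Return (recall, false positive rate) for binary labels, 1 = risky."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return recall, fpr

# Made-up example: 3 risky sessions, 5 benign ones.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
recall, fpr = recall_and_fpr(y_true, y_pred)
```

In this toy case two of three risky sessions are caught and one of five benign sessions is wrongly flagged, which shows why the two numbers have to be read together when judging a moderation system.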

The adaptability of this risk control framework extends beyond live streaming, with ongoing research dedicated to its implementation in other rapidly changing digital environments – such as dynamic online games and real-time financial trading platforms. Crucially, future iterations will integrate real-time feedback loops, allowing the system to learn continuously from its performance and refine its risk assessment capabilities. This continuous improvement process will involve incorporating human moderator input and observed outcomes, effectively creating a self-optimizing system that proactively anticipates and mitigates emerging threats with increasing accuracy and efficiency. The goal is not simply detection, but a predictive and responsive risk management solution capable of evolving alongside the ever-changing landscape of online interactions.

The pursuit of a flawless system for live streaming risk assessment, as explored within CS-VAR, inherently courts stagnation. The framework, while striving to identify coordinated malicious behaviors across sessions, operates within the understanding that complete prevention is illusory. This echoes a fundamental principle: a system that never breaks is, in effect, already dead. Tim Berners-Lee observed, “The Web is more a social creation than a technical one.” This sentiment applies directly to CS-VAR; the evolving nature of malicious behavior necessitates a continuously adapting system, one built not on absolute security, but on resilient growth and the capacity to learn from inevitable failures. The architecture isn’t a fortress, but an ecosystem.

What’s Next?

The framework detailed herein does not solve live streaming risk assessment; it merely shifts the point of failure. Focusing on cross-session evidence is a tacit admission that single-stream analysis is insufficient, a truth long suspected by those who understand that malicious coordination isn’t born within a stream, but between streams. A long period of stable detection rates will not indicate success; it may instead camouflage a more subtle, evolving attack surface. The system will eventually identify the patterns it was designed to identify, leaving the truly novel threats to flourish undetected.

Future work will inevitably focus on expanding the scope of “behavioral patches.” This is not a path towards resilience, but a deepening commitment to a losing game of catch-up. The true challenge lies not in recognizing existing malicious behaviors, but in anticipating the emergence of new ones. Perhaps the field should consider abandoning the premise of ‘assessment’ entirely, and instead explore methods for gracefully accommodating risk, treating streams not as spaces to be secured, but as complex adaptive systems.

The temptation to treat CS-VAR, or its successors, as a complete solution should be resisted. Such systems do not offer protection; they offer the illusion of it. The real work lies in acknowledging the inherent unpredictability of large-scale online interaction, and designing for a future where failures are not exceptions, but inevitable expressions of systemic complexity.

Original article: https://arxiv.org/pdf/2601.16027.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-25 18:34