Author: Denis Avetisyan

A new deep learning framework leverages multi-sensor data to significantly improve communication reliability for unmanned aerial vehicles in complex urban environments.

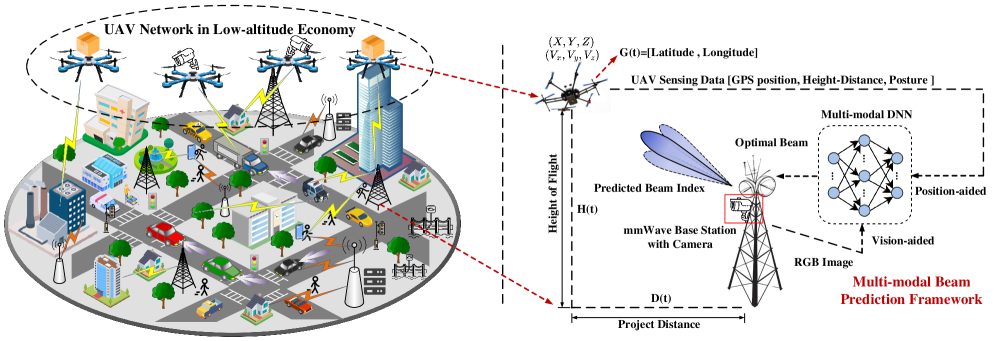

This paper introduces SaM²B, a reliability-aware, dynamically weighted multi-modal fusion approach for accurate beam prediction in integrated sensing and communication (ISAC) systems utilizing UAVs.

Reliable communication is paramount as the low-altitude economy expands through applications like drone delivery and aerial sensing, yet current multi-sensor approaches often treat all data streams as equally trustworthy. This limitation motivates the work presented in ‘Empower Low-Altitude Economy: A Reliability-Aware Dynamic Weighting Allocation for Multi-modal UAV Beam Prediction’. The paper introduces SaM²B, a framework that dynamically adjusts the contribution of each sensor modality (visual, GPS, and inertial measurement unit data) based on real-time reliability assessments. By aligning semantic representations across these modalities with contrastive learning, SaM²B improves beam prediction accuracy in challenging low-altitude environments. Could this adaptive weighting scheme unlock more robust and efficient UAV communication networks for a future increasingly reliant on aerial systems?

The Expanding Horizon of UAV Networks and the Demand for Intelligent Beamforming

Unmanned aerial vehicle (UAV) networks are being deployed more and more widely in the low-altitude economy (LAE), which spans drone delivery, precision agriculture, and infrastructure inspection. This growth creates a substantial need for communication systems that can keep pace with rising data demands. These aerial applications require more bandwidth than traditional wireless networks were designed to provide. UAVs often operate in dense formations and complex urban landscapes, which generates considerable radio-frequency interference. At the same time, they need highly reliable, low-latency connections to operate safely and efficiently. Advances in wireless communication are therefore critical to unlocking the full potential of UAV networks and the growing range of applications that depend on their connectivity.

Conventional beamforming in Orthogonal Frequency Division Multiplexing (OFDM) systems encounters significant limitations in the volatile conditions typical of UAV networks. It relies on precise channel state information (CSI), a detailed estimate of how the signal propagates. CSI is hard to keep current as UAVs move and the wireless environment shifts rapidly. Acquiring and maintaining it demands substantial computation and signaling overhead and introduces latency, which hinders real-time adaptation to interference and changing link conditions. As a result, these established methods degrade quickly in dynamic scenarios. This calls for approaches that minimize reliance on exhaustive channel estimation and respond quickly to environmental fluctuations.

Robust connectivity in modern UAV networks hinges on beam management techniques that go beyond these conventional methods. Unlike static beamforming, intelligent systems dynamically adjust signal direction and strength to counter the volatility of aerial environments and mitigate interference. These adaptive systems combine real-time channel feedback with predictive algorithms that anticipate changes in UAV position and signal obstructions, keeping the link strong and reliable. The ability to reconfigure beam patterns quickly, in response to both intended and unintended signal disruptions, is essential for maintaining high data rates and low latency. This matters most in demanding applications such as low-altitude communications and environmental monitoring. Proactive beam management improves not only network performance but also the overall resilience and dependability of UAV-based communication systems.

Harnessing Multi-Modal Fusion and Deep Learning for Adaptive Beam Prediction

Multi-Modal Fusion improves beam prediction accuracy by combining data from Global Positioning System (GPS) receivers, Inertial Measurement Units (IMUs), and visual sensors. GPS provides absolute location data, while IMUs offer precise, short-term measurements of orientation and movement. Visual information, typically obtained from cameras, adds contextual awareness of the surrounding environment. Integrating these disparate data streams allows the system to overcome the limitations of any single sensor; for example, GPS signals can be unreliable in urban canyons, but IMU data can bridge these gaps. The fusion process typically involves data synchronization, transformation into a common coordinate frame, and the application of sensor fusion algorithms – such as Kalman filtering or deep learning techniques – to create a more robust and accurate estimate of the device’s state, directly improving the prediction of optimal beamforming indices.
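The claim that IMU data can bridge GPS gaps can be illustrated with a classical Kalman filter. What follows is a minimal one-dimensional sketch, not SaM²B's method (SaM²B fuses modalities with learned attention). It assumes a simple kinematic model in which IMU acceleration drives the prediction step and GPS position, when available, drives the correction step. The function name `fuse_gps_imu` and all noise parameters are hypothetical.

```python
import numpy as np

def fuse_gps_imu(accel, gps, dt=0.1, accel_var=0.05, gps_var=4.0):
    """Hypothetical 1-D Kalman filter: IMU acceleration predicts, GPS position corrects.

    accel: IMU accelerations (m/s^2), one per time step
    gps:   GPS positions (m), or None where the fix is lost (e.g. in an urban canyon)
    Returns per-step position estimates and their variances.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])   # kinematic transition for state [position, velocity]
    B = np.array([0.5 * dt**2, dt])         # how one acceleration sample enters the state
    H = np.array([[1.0, 0.0]])              # GPS observes position only
    Q = accel_var * np.outer(B, B)          # process noise caused by IMU acceleration error
    R = np.array([[gps_var]])               # GPS measurement noise variance

    x = np.zeros(2)                         # initial state: at rest at the origin
    P = np.eye(2) * 10.0                    # initial state uncertainty
    positions, variances = [], []
    for a, z in zip(accel, gps):
        # Predict: propagate the state using the IMU reading
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        # Correct: only when a GPS fix exists; otherwise the IMU alone bridges the gap
        if z is not None:
            S = H @ P @ H.T + R             # innovation covariance (1x1)
            K = P @ H.T / S[0, 0]           # Kalman gain (2x1)
            x = x + K[:, 0] * (z - x[0])    # update with the position innovation
            P = (np.eye(2) - K @ H) @ P
        positions.append(x[0])
        variances.append(P[0, 0])
    return np.array(positions), np.array(variances)

# Usage: constant 0.2 m/s^2 acceleration, GPS outage during steps 20-29
accel = [0.2] * 50
truth = [0.5 * 0.2 * ((k + 1) * 0.1) ** 2 for k in range(50)]
gps = [None if 20 <= k < 30 else truth[k] for k in range(50)]
pos, var = fuse_gps_imu(accel, gps)
```

During the outage the position variance grows with every IMU-only step, and it shrinks again once GPS fixes return.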

Deep learning (DL) addresses the limitations of conventional beam prediction methods by learning the non-linear relationships within complex sensor data, at the cost of substantial training data and computation. Traditional approaches, such as Kalman filtering or rule-based systems, often struggle with the high dimensionality and intricate dependencies of multi-modal inputs. DL models such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs) can learn the mapping from sensor inputs to optimal beam indices directly from data, picking up subtle patterns that manual rules miss. This data-driven approach supports adaptive beam prediction that can outperform hand-engineered algorithms, particularly in dynamic and unpredictable environments. More accurate and responsive beam steering in turn improves signal quality and network performance.
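Framed as DL, beam prediction is a classification problem: the network outputs a probability for each beam in a fixed codebook, and the predicted beam is the most probable index. Below is a minimal, untrained sketch of that formulation, assuming a two-layer MLP, a 16-dimensional fused feature vector, and a 64-beam codebook. The layer sizes, codebook size, and function names are hypothetical and are not SaM²B's architecture. Training (gradient descent on the cross-entropy loss) is omitted.

```python
import numpy as np

def init_mlp(in_dim, hidden_dim, num_beams, seed=0):
    """Random (He-initialised) weights for a hypothetical two-layer beam classifier."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / in_dim), (in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, np.sqrt(2.0 / hidden_dim), (hidden_dim, num_beams)),
        "b2": np.zeros(num_beams),
    }

def forward(params, x):
    """Map fused features (batch, in_dim) to beam probabilities (batch, num_beams)."""
    h = np.maximum(0.0, x @ params["W1"] + params["b1"])        # ReLU hidden layer
    logits = h @ params["W2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)         # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)                  # softmax over the beam codebook

def cross_entropy(probs, beam_idx):
    """Mean negative log-likelihood of the ground-truth beam indices (the training loss)."""
    return -np.mean(np.log(probs[np.arange(len(beam_idx)), beam_idx] + 1e-12))

# Usage: 8 samples of a 16-dim fused feature vector, 64-beam codebook (both sizes assumed)
params = init_mlp(16, 64, 64)
x = np.random.default_rng(1).normal(size=(8, 16))
probs = forward(params, x)
predicted_beams = probs.argmax(axis=1)
loss = cross_entropy(probs, np.array([3, 10, 10, 40, 0, 63, 7, 21]))
```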

Dimensionality-reduction techniques compress multi-modal sensor data before it is fed to DL models. Linear methods such as principal component analysis (PCA) and learned, often non-linear, encoders such as autoencoders map high-dimensional inputs to a lower-dimensional space. PCA preserves the directions of greatest variance, while an autoencoder preserves the information needed to reconstruct the input. Either way, computational complexity and storage requirements drop. Reducing dimensionality also mitigates overfitting, particularly when training data is limited, and speeds up training and inference. Focusing on the most informative features while discarding noise can further improve generalization. The choice of projection technique and the number of retained dimensions are hyperparameters, tuned through experimentation and validation to balance compression against information loss.
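PCA, the linear case mentioned above, fits in a few lines via the singular value decomposition. This is a generic sketch, assuming the sensor features are already stacked into a samples-by-features matrix. The function name `pca_project` and the data shapes are hypothetical, and an autoencoder would replace the fixed projection with a trained encoder.

```python
import numpy as np

def pca_project(X, k):
    """Project samples X (n, d) onto their top-k principal components.

    Returns the (n, k) projection, the (k, d) principal directions,
    and the fraction of total variance explained by each kept component.
    """
    Xc = X - X.mean(axis=0)                              # PCA operates on centred data
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)    # rows of Vt: directions, by decreasing variance
    explained = S**2 / np.sum(S**2)
    components = Vt[:k]
    return Xc @ components.T, components, explained[:k]

# Usage: 200 samples of a hypothetical 10-dim sensor feature vector, reduced to 3 dims
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10)) @ rng.normal(size=(10, 10))   # correlated features
Z, components, explained = pca_project(X, k=3)
```

The `explained` fractions make the trade-off in the text measurable: one common approach is to keep the smallest `k` whose cumulative explained variance meets a chosen threshold.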

SaM²B: An Attention-Based Framework for Robust Beam Prediction

The SaM²B framework employs attention mechanisms to modulate the contribution of each input modality – GPS, Inertial Measurement Unit (IMU), and vision – based on its estimated reliability. These mechanisms assign weights to each modality’s feature representation, allowing the model to prioritize more trustworthy data sources and downplay those deemed less reliable under current conditions. This dynamic weighting process is implemented through attention layers that analyze the features from each modality and calculate attention scores, effectively determining the importance of each modality in the final beam prediction. The attention scores are then used to create a weighted combination of the modality-specific features, enhancing the robustness of the system to sensor noise or failures.
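The weighting step can be sketched as scaled dot-product attention with a single query vector. This is an assumption made for illustration: SaM²B's exact reliability estimator may differ, and the names `reliability_weighted_fusion` and `query` are hypothetical. Each modality's feature vector, already projected to a shared dimension, is scored against the query. The scores are normalized with a softmax, and the features are summed with those weights.

```python
import numpy as np

def softmax(z):
    z = z - np.max(z)                       # numerical stability
    e = np.exp(z)
    return e / e.sum()

def reliability_weighted_fusion(features, query):
    """Fuse per-modality features with attention weights.

    features: dict mapping modality name -> (d,) feature vector in a shared d-dim space
    query:    (d,) learned query vector (hypothetical; would be trained end to end)
    Returns the fused (d,) feature and the weight assigned to each modality.
    """
    names = list(features)
    F = np.stack([features[n] for n in names])       # (num_modalities, d)
    scores = F @ query / np.sqrt(F.shape[1])         # scaled dot-product attention scores
    weights = softmax(scores)                        # non-negative and summing to 1
    fused = weights @ F                              # reliability-weighted combination
    return fused, dict(zip(names, weights))

# Usage: the query happens to align with the vision features, so vision dominates
feats = {
    "gps":    np.array([1.0, 0.0, 0.0, 0.0]),
    "imu":    np.array([0.0, 1.0, 0.0, 0.0]),
    "vision": np.array([0.0, 0.0, 1.0, 0.0]),
}
fused, w = reliability_weighted_fusion(feats, query=np.array([0.0, 0.0, 4.0, 0.0]))
```

Because the weights are computed from the features themselves, a degraded modality whose features score poorly automatically receives less influence on the fused vector.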

Contrastive learning within the SaM²B framework enhances robustness by training the model to recognize similarities and differences between data representations. This is achieved by minimizing the distance between representations of the same scene or event, even when subject to noise or variations in environmental factors such as lighting or weather. Specifically, the model learns to map different views of the same underlying scenario to nearby points in the embedding space, while pushing apart representations of distinct scenarios. This process results in learned features that are less sensitive to superficial changes and more focused on invariant characteristics, ultimately improving generalization performance and prediction accuracy in challenging real-world conditions.
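A common way to implement "pull positives together, push negatives apart" is the InfoNCE loss. The sketch below is a one-directional version, assuming paired embeddings from two views (for instance, the visual and GPS embeddings of the same sample). Whether SaM²B uses this exact loss, a symmetric variant, or a different temperature is not stated here, so treat `info_nce` and `temperature=0.1` as hypothetical choices.

```python
import numpy as np

def info_nce(z_a, z_b, temperature=0.1):
    """One-directional InfoNCE loss between two batches of embeddings.

    Row i of z_a and row i of z_b are two views of the same scenario (a positive pair);
    all other rows of z_b act as negatives for row i of z_a.
    """
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)    # cosine similarity via unit vectors
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature                       # (n, n) similarity matrix
    logits = logits - logits.max(axis=1, keepdims=True)      # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))                      # positives sit on the diagonal

# Usage: aligned pairs give a lower loss than mismatched pairs
z = np.eye(4)
aligned = info_nce(z, z)
mismatched = info_nce(z, np.roll(z, 1, axis=0))
```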

The SaM²B framework employs a ResNet-50 convolutional neural network as its visual feature extractor. To focus processing on relevant areas within each image and reduce computational load, a bounding box (BBOX) clipping technique is applied. This technique isolates the region of interest – the UAV itself – before feature extraction, effectively discarding irrelevant background information. By concentrating on the UAV’s visual characteristics, the ResNet backbone can more efficiently learn discriminative features, which ultimately contributes to improved beam prediction accuracy. This approach allows SaM²B to achieve a Top-1 accuracy of 88.63% using only BBOX data, compared to 86.32% when processing the entire image.
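Bounding-box clipping amounts to cropping the frame to the detected UAV region while keeping coordinates inside the image. Below is a minimal sketch, assuming boxes arrive as pixel coordinates `(x_min, y_min, x_max, y_max)`. The optional `margin` parameter is hypothetical and not taken from the paper. In a full pipeline the patch would then be resized to the backbone's input size and passed to the ResNet-50, steps omitted here.

```python
import numpy as np

def crop_to_bbox(image, bbox, margin=0.0):
    """Crop an (H, W, C) image to a bounding box, clipped to the image borders.

    bbox:   (x_min, y_min, x_max, y_max) in pixels, e.g. from a UAV detector
    margin: hypothetical fraction of the box size added on each side for context
    """
    h, w = image.shape[:2]
    x0, y0, x1, y1 = bbox
    dx, dy = margin * (x1 - x0), margin * (y1 - y0)
    # Enlarge the box by the margin, then clip so the crop never leaves the frame
    x0 = int(np.clip(np.floor(x0 - dx), 0, w))
    x1 = int(np.clip(np.ceil(x1 + dx), 0, w))
    y0 = int(np.clip(np.floor(y0 - dy), 0, h))
    y1 = int(np.clip(np.ceil(y1 + dy), 0, h))
    return image[y0:y1, x0:x1]

# Usage: a 480x640 RGB frame with a detection near the bottom-right corner
frame = np.zeros((480, 640, 3), dtype=np.uint8)
uav_patch = crop_to_bbox(frame, (600, 400, 700, 500))   # box extends past the frame
```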

Evaluated on real-world UAV data from the DeepSense 6G dataset, SaM²B reaches a Top-1 accuracy approaching 90%. The BBOX-only configuration outperforms full-image input. This indicates that the model predicts beam direction accurately from the cropped UAV region alone, and that discarding background clutter helps rather than hurts.
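Top-1 accuracy, the metric behind these figures, counts a prediction as correct only when the highest-scoring beam is the ground-truth optimal beam. Top-k relaxes this to "the true beam is among the k best". Here is a generic sketch of the metric; the function name `top_k_accuracy` is hypothetical and the toy scores are illustrative, not results from the paper.

```python
import numpy as np

def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true beam index is among the k highest-scoring beams.

    scores: (n, num_beams) predicted scores or probabilities
    labels: (n,) ground-truth optimal beam indices
    """
    top_k = np.argsort(scores, axis=1)[:, ::-1][:, :k]   # indices of the k best beams per sample
    hits = (top_k == labels[:, None]).any(axis=1)
    return hits.mean()

# Usage: 4 samples, 3-beam toy codebook
scores = np.array([[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], [0.6, 0.3, 0.1]])
labels = np.array([1, 1, 2, 2])
top1 = top_k_accuracy(scores, labels, k=1)   # 0.5
```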

Implications for 6G and the Future of Wireless Sensing Applications

The convergence of intelligent beamforming and unmanned aerial vehicle (UAV) networks promises to be a cornerstone of future 6G mobile communications, particularly in enabling the low-altitude economy (LAE) and significantly enhanced connectivity. By dynamically focusing radio signals, intelligent beamforming overcomes the challenges of signal propagation in complex environments, while UAVs provide flexible, rapidly deployable network infrastructure. Together they enable dense, localized networks capable of supporting data-intensive applications such as extended reality, precision agriculture, and real-time video analytics. Because UAVs can be repositioned, such networks can also scale on demand in response to fluctuating user density and coverage requirements. This improves spectral efficiency and overall system capacity for the next generation of wireless communication.

SaM²B is well suited to Integrated Sensing and Communication (ISAC) scenarios because its sensing inputs serve the communication task directly. Its dynamic weight allocation prioritizes whichever sensor modalities are most reliable at the moment, and the fused estimate selects the beam for the communication link. Rather than treating sensing and communication as competing objectives, the framework uses sensing to make beam alignment cheaper and more robust. Its learned weights adjust to environmental conditions, so the network can maintain reliable links while drawing on its perception of the surroundings. That combination of connectivity and environmental awareness is what future wireless applications will demand, which makes this adaptive use of sensing a strong fit for emerging 6G technologies and complex ISAC deployments.

Millimeter wave (mmWave) links offer substantially increased bandwidth compared to lower frequency radio waves, but their short wavelengths are susceptible to blockage and propagation loss, particularly in complex environments. To overcome these limitations, intelligent beam management techniques dynamically focus the mmWave signal, concentrating energy towards the intended receiver and mitigating interference in dense deployments. This adaptive focusing not only extends the range of mmWave communications but also dramatically improves spectral efficiency – the amount of data that can be transmitted per unit of bandwidth. By intelligently steering and shaping the mmWave beam, systems can effectively reuse frequencies across a wider area, maximizing capacity and supporting a greater number of connected devices without significant performance degradation – a crucial capability for future 6G networks and high-density wireless sensing applications.
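"Concentrating energy toward the intended receiver" can be quantified with array gain. The sketch below assumes a half-wavelength-spaced uniform linear array (ULA) and a DFT-style beam codebook, both common textbook choices rather than details confirmed for SaM²B. The function names are hypothetical. Picking the codeword with the largest gain for a given angle yields exactly the kind of "optimal beam index" that a beam-prediction model tries to infer from sensor data, without sweeping every beam. With 16 antennas the matched beam delivers a power gain of 16 (about 12 dB) over a single antenna, and the other codewords receive essentially nothing.

```python
import numpy as np

def steering_vector(theta, n_antennas):
    """Response of a half-wavelength-spaced uniform linear array (ULA) toward angle theta (radians)."""
    n = np.arange(n_antennas)
    return np.exp(1j * np.pi * n * np.sin(theta))           # unit-modulus entries

def dft_codebook(n_antennas, n_beams):
    """Unit-norm beams steered toward angles whose sines are evenly spaced over [-1, 1)."""
    sines = -1.0 + 2.0 * np.arange(n_beams) / n_beams
    beams = np.stack([steering_vector(np.arcsin(s), n_antennas) for s in sines])
    return beams / np.sqrt(n_antennas)                      # shape (n_beams, n_antennas)

def best_beam(theta, codebook):
    """Index of the beam with the largest gain |w^H a(theta)|^2, plus all gains."""
    a = steering_vector(theta, codebook.shape[1])
    gains = np.abs(codebook.conj() @ a) ** 2
    return int(np.argmax(gains)), gains

# Usage: 16-antenna array, 16-beam codebook, UAV at broadside (0 rad)
codebook = dft_codebook(16, 16)
idx, gains = best_beam(0.0, codebook)
```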

Compared with conventional methods, SaM²B delivers a marked improvement in precision, reaching a peak Top-1 accuracy of about 90%. Even a fusion of only GPS, height, and distance data reaches 73.42% Top-1 accuracy, which underscores the benefit of multi-modal integration for reliability and robustness. By synthesizing information from diverse sources, SaM²B overcomes limitations inherent in single-sensor approaches and builds a more complete, accurate picture of its surroundings. This paves the way for more sophisticated wireless sensing applications.

The pursuit of robust beam prediction, as detailed in this study, highlights a fundamental principle of system design: structure dictates behavior. SaM²B’s dynamic weighting allocation isn’t merely about combining data; it’s about establishing a hierarchical relationship between sensor modalities, allowing the system to prioritize reliable information in fluctuating low-altitude conditions. This echoes Donald Davies’ observation that, “Simplicity scales, cleverness does not.” The framework’s elegance lies in its ability to adapt – to shift emphasis based on contextual reliability – rather than relying on complex, static fusion algorithms. A system that understands and responds to the inherent trade-offs between modalities, as SaM²B demonstrates, is far more likely to maintain consistent performance even when individual components falter, illustrating how good architecture is often invisible until it breaks.

Beyond the Horizon

The pursuit of accurate beam prediction for UAV communication, as demonstrated by SaM²B, highlights a perennial truth: the map is not the territory. While dynamic weighting of sensor modalities offers a compelling refinement, it addresses a symptom, not the inherent chaos of the low-altitude environment. Scalability will not arise from increasingly complex algorithms, but from a more fundamental understanding of how information truly propagates within these spaces. The system, after all, is only as robust as its weakest link, and current frameworks largely treat sensors as isolated inputs rather than interdependent components of a larger, dynamic whole.

Future work must shift focus from purely algorithmic improvements to a more holistic, ecological perspective. Consider the implications of sensor correlation – how the noise in one modality informs the reliability of another. Beyond contrastive learning, exploring methods that actively model uncertainty, not just predict values, could prove vital. The true challenge lies not in squeezing more performance from existing sensors, but in designing systems that gracefully degrade – that recognize their own limitations and adapt accordingly.

Ultimately, a genuinely scalable solution will resemble a living organism, capable of self-regulation and repair. It will not be defined by the cleverness of a single algorithm, but by the elegant simplicity of its underlying principles. The ambition should not be to control the environment, but to harmonize with it.

Original article: https://arxiv.org/pdf/2512.24324.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-03 23:11