Author: Denis Avetisyan

This review explores how bringing machine learning processing closer to IoT devices can dramatically improve network efficiency and reduce environmental impact.

A systematic analysis of the application of edge computing and machine learning techniques to enhance the sustainability of Internet of Things networks.

As interconnected devices proliferate, the escalating energy demands and resource constraints of Internet of Things (IoT) networks pose a significant sustainability challenge. This systematic literature review, ‘Machine Learning on the Edge for Sustainable IoT Networks: A Systematic Literature Review’, comprehensively examines the potential of deploying machine learning algorithms directly on edge devices to optimize IoT operations and minimize environmental impact. Our analysis reveals a growing body of research demonstrating that edge-based machine learning can substantially improve energy efficiency, reduce bandwidth consumption, and enhance data privacy within IoT ecosystems. However, realizing the full sustainability benefits requires further investigation into real-world hardware implementations and holistic system evaluations. What novel approaches will best bridge the gap between theoretical promise and practical, scalable solutions for truly sustainable IoT networks?

Unraveling the Energy Paradox of a Connected World

The exponential growth of Internet of Things (IoT) devices, from smart home appliances and wearable sensors to industrial monitoring systems and connected vehicles, is creating an unprecedented surge in global energy demand. This proliferation isn’t simply a matter of increased electricity consumption; it’s a fundamental strain on resources, contributing to carbon emissions and exacerbating concerns about long-term sustainability. While individual devices may require minimal power, the sheer scale of deployment, projected to reach tens of billions of connected devices in the coming years, amplifies this demand significantly. Traditional power sources are increasingly burdened, and reliance on fossil fuels to meet this growing need undermines efforts to mitigate climate change, prompting a critical need for innovative, energy-efficient IoT solutions and a reevaluation of how these networks are powered and maintained.

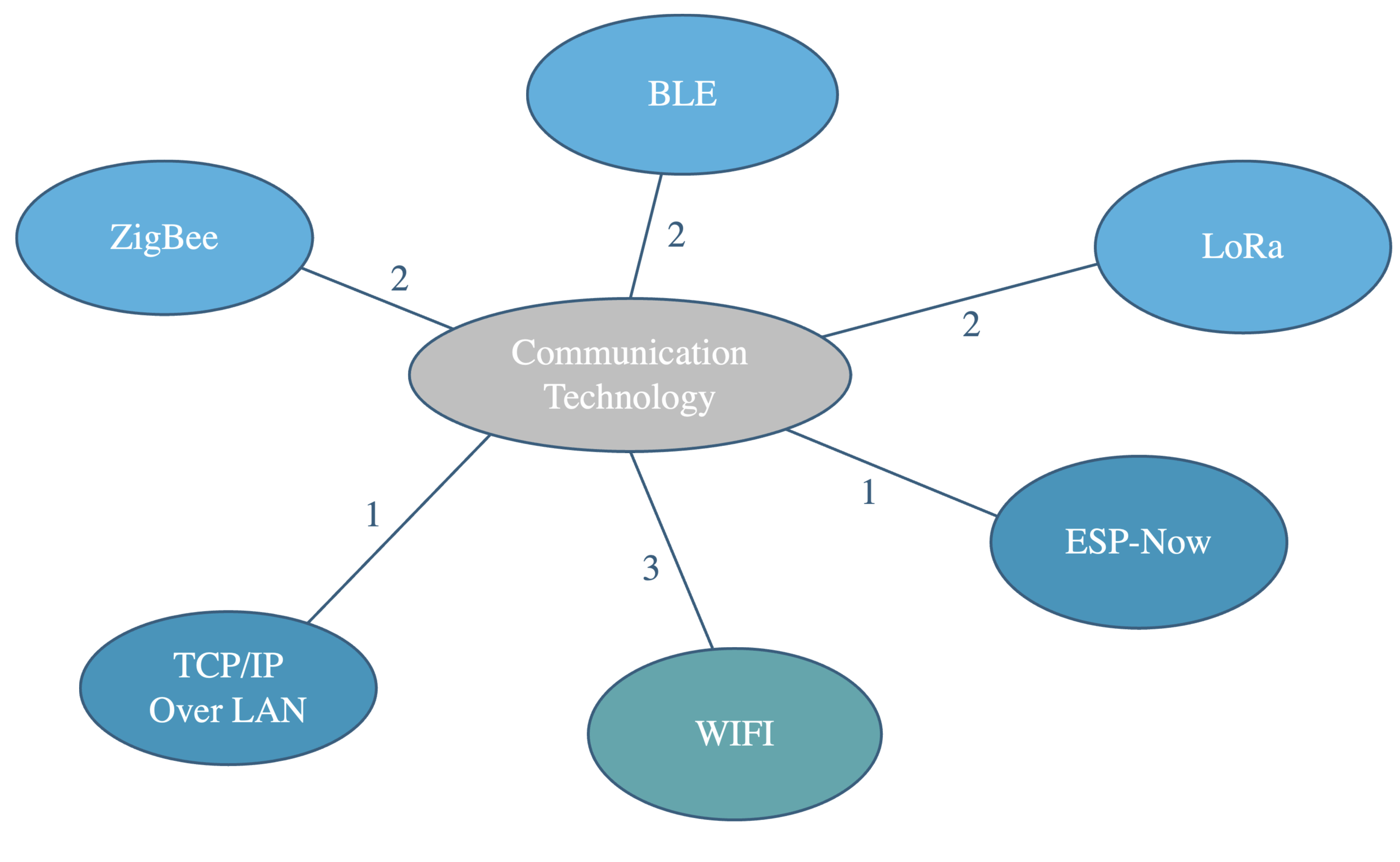

Conventional data handling strategies, designed for robust, power-abundant systems, struggle within the limitations of energy-constrained Internet of Things networks. These networks, often composed of numerous remotely deployed sensors and actuators, operate under strict power budgets, frequently relying on battery power or energy harvesting. Traditional methods, such as frequent, high-bandwidth data transmission and centralized processing, quickly deplete these limited resources, hindering long-term functionality and scalability. The inherent need for continuous operation in many IoT applications, such as environmental monitoring or predictive maintenance, exacerbates this problem, demanding innovative approaches to data compression, edge computing, and communication protocols that minimize energy expenditure without sacrificing data integrity or application performance. Consequently, a fundamental rethinking of data transmission and processing paradigms is crucial for realizing the full potential of widespread IoT deployment.

The sustained growth of the Internet of Things necessitates a fundamental change in how devices are powered and communicate, as current energy demands pose a significant threat to the long-term scalability of these networks. Simply increasing battery capacity or relying on traditional power grids is unsustainable given the projected billions of connected devices; instead, innovation must focus on minimizing energy consumption at every stage of data transmission and processing. Researchers are actively exploring methodologies such as energy harvesting, ultra-low-power communication protocols, and edge computing to reduce reliance on centralized power sources and extend device lifespans. The viability of future IoT deployments, from smart cities and precision agriculture to industrial automation and remote healthcare, is inextricably linked to the successful implementation of these energy-efficient strategies, ensuring a connected world doesn’t come at an unsustainable cost.

Deconstructing Sustainability: A Systematic Inquiry

A systematic literature review, conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology, identified a range of potential energy-saving techniques applicable to Internet of Things (IoT) deployments. The review process involved a comprehensive search of relevant databases and defined inclusion and exclusion criteria, ultimately yielding ten studies that specifically investigated the application of machine learning algorithms to improve the sustainability of IoT systems. The identified studies focused on diverse areas such as energy consumption prediction, resource allocation optimization, and intelligent device management, demonstrating a growing research interest in leveraging machine learning for energy efficiency within the IoT domain.

Machine learning techniques offer substantial potential for optimization within Internet of Things (IoT) deployments, directly impacting energy consumption. These algorithms enable predictive maintenance, reducing downtime and associated energy waste; intelligent resource allocation, dynamically adjusting power based on real-time needs; and anomaly detection, identifying and mitigating inefficient operations. The application of machine learning allows IoT systems to move beyond pre-programmed responses to adaptive behaviors, leading to significant reductions in overall energy usage and improved sustainability. This is achieved through data-driven insights derived from sensor data, network activity, and device performance metrics, which are then used to refine operational parameters and optimize energy expenditure.

Several machine learning algorithms demonstrate potential for improving energy efficiency within Internet of Things deployments. Support Vector Machines (SVMs) are effective for classification and regression tasks, enabling optimized resource allocation based on predicted demand. Random Forest algorithms, utilizing ensemble learning, provide robust and accurate predictions of energy consumption patterns, even with complex datasets. Long Short-Term Memory (LSTM) networks, a type of recurrent neural network, are particularly suited for analyzing time-series data, allowing for the forecasting of future energy needs and proactive adjustments to IoT device operation, thereby minimizing waste and maximizing sustainability.

![This systematic review adhered to the PRISMA methodology to ensure a rigorous and transparent process, as detailed in references [25, 26].](https://arxiv.org/html/2601.11326v1/x1.jpeg)

Re-Engineering Efficiency: Advanced Techniques for Power Conservation

Edge computing architectures process data at or near the point of data generation, thereby minimizing the amount of data transmitted to centralized cloud servers. This localized processing significantly reduces bandwidth requirements, as only essential information or results are communicated externally. The reduction in data transmission directly translates to lower energy expenditure, both at the data source and within the network infrastructure. By decreasing latency and reliance on long-haul communication, edge computing offers a more energy-efficient alternative to traditional cloud-based processing, particularly for applications generating high volumes of data, such as IoT devices and real-time sensor networks.
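To make the bandwidth argument concrete, here is a minimal sketch of edge-side aggregation: instead of forwarding every raw sample, the node sends a compact summary of each window. The window contents, the choice of summary fields, and the JSON encoding are all illustrative assumptions, not something taken from the reviewed studies.

```python
import json
import statistics


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "n": len(readings),
        "mean": round(statistics.fmean(readings), 2),
        "min": min(readings),
        "max": max(readings),
    }


def payload_bytes(obj):
    """Size in bytes of the compact JSON encoding of `obj`."""
    return len(json.dumps(obj, separators=(",", ":")).encode("utf-8"))


# Hypothetical window: 60 temperature samples, one per second.
raw_window = [20.0 + 0.1 * (i % 10) for i in range(60)]

raw_size = payload_bytes(raw_window)          # cost of sending everything
summary = summarize_window(raw_window)        # computed on the edge node
summary_size = payload_bytes(summary)         # cost of sending the summary

print(f"raw: {raw_size} B, summary: {summary_size} B")
```

Whether a summary like this is acceptable depends on the application; some workloads need the raw signal, in which case compression or event-triggered transmission may be a better fit.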

Data compression techniques, including lossless and lossy algorithms, directly reduce energy consumption by minimizing the amount of data that needs to be transmitted or stored. Reducing data size lowers bandwidth requirements, thereby decreasing transmission power and associated energy expenditure. Complementary to data compression, sleep scheduling involves transitioning device components or entire systems into low-power states during periods of inactivity. This is typically achieved by selectively powering down unused hardware modules or reducing clock speeds. The efficacy of sleep scheduling is contingent on accurate prediction of activity patterns and efficient state transition mechanisms to avoid overhead that negates energy savings; implementations often utilize duty cycling or adaptive power management to optimize these transitions.
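The effect of duty cycling on energy use can be approximated with simple arithmetic. The sketch below assumes an idealized node with only two states (active and asleep) and ignores wake-up overhead, self-discharge, and conversion losses; the current and battery figures are hypothetical.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a node that is active for a
    fraction `duty_cycle` of the time and asleep otherwise."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def lifetime_hours(battery_mah, avg_ma):
    """Idealized battery lifetime: capacity divided by average current."""
    return battery_mah / avg_ma


# Hypothetical node: 20 mA awake, 0.01 mA asleep, 2000 mAh battery.
for duty in (1.0, 0.10, 0.01):
    avg = average_current_ma(20.0, 0.01, duty)
    hours = lifetime_hours(2000.0, avg)
    print(f"duty={duty:.2f}  avg={avg:.3f} mA  lifetime={hours:.0f} h")
```

In a real deployment the transition cost between states would have to be added to the active term, which is exactly the overhead the paragraph above warns can negate the savings.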

Dynamic Voltage and Frequency Scaling (DVFS) is a power management technique used in computing devices to adjust the voltage and clock frequency of a processor or other component based on the current workload. By reducing the voltage and frequency during periods of low activity, DVFS directly lowers power consumption, because dynamic (switching) power scales with the square of the supply voltage and linearly with the clock frequency. This adaptation is typically managed by the operating system or embedded system firmware, which monitors resource utilization and dynamically adjusts parameters to optimize the balance between performance and energy efficiency. Modern implementations often employ predictive algorithms to anticipate workload changes, enabling proactive scaling and minimizing performance degradation while maximizing energy savings.
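The voltage and frequency relationship can be illustrated with the standard dynamic-power approximation P = C_eff · V² · f. The sketch below is a simplification that ignores static (leakage) power, and the effective capacitance and operating points are hypothetical values chosen only to show the scaling.

```python
def dynamic_power(c_eff, voltage, frequency):
    """Dynamic CMOS power approximation: P = C_eff * V^2 * f (watts)."""
    return c_eff * voltage ** 2 * frequency


def energy_for_cycles(c_eff, voltage, frequency, cycles):
    """Energy to execute a fixed number of cycles: power * (cycles / f)."""
    return dynamic_power(c_eff, voltage, frequency) * (cycles / frequency)


C_EFF = 1e-9                 # hypothetical effective capacitance (farads)
HIGH = (1.2, 1.0e9)          # hypothetical operating point: 1.2 V, 1.0 GHz
LOW = (0.9, 0.5e9)           # hypothetical operating point: 0.9 V, 0.5 GHz

p_high = dynamic_power(C_EFF, *HIGH)
p_low = dynamic_power(C_EFF, *LOW)
power_ratio = p_low / p_high

# For a fixed amount of work the frequency cancels out, so the energy
# saving under this model comes from the voltage reduction alone.
e_high = energy_for_cycles(C_EFF, *HIGH, cycles=1e9)
e_low = energy_for_cycles(C_EFF, *LOW, cycles=1e9)
energy_ratio = e_low / e_high

print(f"power ratio={power_ratio:.3f}  energy ratio={energy_ratio:.3f}")
```

The gap between the two ratios is the practical point: lowering frequency alone reduces power but stretches the task, so it is the accompanying voltage reduction that saves energy per unit of work.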

Anomaly detection and deep reinforcement learning (DRL) enable proactive energy management by identifying deviations from normal operating patterns and dynamically adjusting system behavior. DRL algorithms can learn optimal energy-saving policies through interaction with the environment, predicting future energy demands and preemptively optimizing resource allocation. Recent implementations utilizing RF-based adaptive communication protocol selection have demonstrated significant energy reduction, with reported savings of up to 68% compared to static communication schemes. These techniques analyze radio frequency signal characteristics to intelligently switch between communication protocols, minimizing transmission power and maximizing data throughput for improved energy efficiency.
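As a rough illustration of the anomaly detection half of this idea, the sketch below flags samples that deviate strongly from a rolling baseline. A simple z-score rule stands in here for the learned detectors discussed in the literature; the window size, threshold, and power trace are hypothetical.

```python
import statistics


def find_anomalies(samples, window=10, threshold=3.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` samples by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev > 0 and abs(samples[i] - mean) > threshold * stdev:
            anomalies.append(i)
    return anomalies


# Hypothetical per-minute power draw in mW, with one injected spike.
power_mw = [50.0, 51.0, 49.0, 50.5, 49.5] * 4
power_mw[15] = 120.0

print(find_anomalies(power_mw))
```

One known weakness of this rule is that a large spike inflates the rolling standard deviation and can mask later anomalies until it leaves the window, which is one reason more robust or learned detectors are used in practice.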

Validating the Paradigm: Real-World Testbed Results

A dedicated testbed was constructed to facilitate the evaluation of energy-saving methodologies within a representative Internet of Things (IoT) deployment. This testbed consists of multiple sensor nodes equipped with various communication interfaces and processing capabilities, mirroring the characteristics of real-world IoT devices. The environment allows for precise control over operational parameters, including transmission power, duty cycle, and data rates, enabling repeatable experimentation. Data collected from the testbed includes energy consumption measurements at the node level, communication statistics such as packet delivery ratio and latency, and application-specific performance metrics. This controlled environment ensures that the performance of energy-saving techniques can be quantified independently of external factors and accurately reflect their potential in practical deployments.

A dedicated testbed environment enabled the quantitative assessment of energy reductions achieved by individual techniques and their combinations. Empirical results demonstrated that employing Reinforcement Learning (RL) for Media Access Control (MAC) layer duty cycle control yielded a 4.5x improvement in device lifetime. This performance gain was determined through controlled experimentation within the testbed, allowing for precise measurement of energy consumption and operational duration under various configurations and workloads. The methodology facilitated the isolation and validation of RL’s contribution to extended device lifespan compared to conventional duty cycling approaches.
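The general idea of learning a duty cycle from feedback can be sketched with a very small tabular learner. This is not the method behind the reported 4.5x figure: it is a toy, single-step (discount factor 0) simplification, and the traffic states, candidate duty cycles, and reward shape are all hypothetical.

```python
import random

DUTY_CYCLES = (0.05, 0.2, 0.5)            # candidate actions (hypothetical)
REQUIRED = {"idle": 0.05, "busy": 0.5}    # duty cycle needed to avoid drops


def reward(state, duty):
    """Negative cost: energy grows with duty cycle, and running below the
    required duty cycle incurs a fixed packet-drop penalty."""
    drop_penalty = 2.0 if duty < REQUIRED[state] else 0.0
    return -(duty + drop_penalty)


def train(steps=2000, alpha=0.5, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular learning of a duty cycle per traffic state."""
    rng = random.Random(seed)
    states = list(REQUIRED)
    q = {(s, a): 0.0 for s in states for a in DUTY_CYCLES}
    for _ in range(steps):
        state = rng.choice(states)
        if rng.random() < epsilon:
            action = rng.choice(DUTY_CYCLES)                       # explore
        else:
            action = max(DUTY_CYCLES, key=lambda a: q[(state, a)])  # exploit
        # One-step update with no bootstrapping (discount factor 0).
        q[(state, action)] += alpha * (reward(state, action) - q[(state, action)])
    return {s: max(DUTY_CYCLES, key=lambda a: q[(s, a)]) for s in states}


policy = train()
print(policy)
```

With this reward shape the learner settles on the lowest duty cycle when traffic is idle and the highest when it is busy. A realistic MAC-layer controller would also need to account for state transitions, delayed rewards, and measurement noise.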

Evaluations within the established testbed demonstrate substantial energy savings across a range of IoT applications. Specifically, the implementation of Reinforcement Learning for MAC layer duty cycle control resulted in a 4.5x improvement in device lifetime. Furthermore, co-scheduling of CPU and GPU utilizing Deep Reinforcement Learning achieved a 46.33% improvement in energy efficiency. These results confirm the viability and potential of the proposed energy-saving techniques for practical deployment in resource-constrained IoT environments, validating the efficacy of the implemented approaches and providing quantifiable data on performance gains.

Communication protocol design is only one part of realizing energy-efficient IoT devices; how on-device compute is scheduled matters as well. Research indicates that Deep Reinforcement Learning (DRL)-based co-scheduling of the CPU and GPU can significantly improve energy efficiency, with a reported improvement of 46.33%. This co-scheduling approach dynamically allocates processing resources to minimize energy consumption while maintaining operational requirements, highlighting the synergy between protocol-level optimizations and intelligent resource management techniques.

Towards a Sustainable Future: Charting the Next Iteration

Significant reductions in the energy demands of the Internet of Things necessitate ongoing innovation in machine learning and optimization algorithms. Current approaches often rely on static models, failing to adapt to the dynamic and often unpredictable operational environments of IoT devices. Researchers are actively exploring techniques like federated learning, which enables model training across decentralized devices without exchanging sensitive data, and reinforcement learning, allowing devices to learn energy-efficient behaviors through trial and error. Furthermore, advancements in algorithm compression and edge computing are crucial, minimizing the computational burden on resource-constrained devices and reducing data transmission requirements. These efforts promise not only to extend battery life and decrease reliance on traditional power sources but also to unlock the potential for truly pervasive and sustainable IoT deployments, paving the way for a future where interconnected devices operate with minimal environmental impact.
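The aggregation step at the heart of federated learning can be sketched in a few lines. The example below assumes the common approach of averaging client parameters weighted by local sample count (often called federated averaging); the client data is hypothetical, and a real system would add the local training, communication, and security layers around it.

```python
def federated_average(client_updates):
    """Weighted average of client parameter vectors.

    `client_updates` is a list of (num_samples, weights) pairs, where
    `weights` is a list of floats with the same length for every client.
    Only parameters are shared; raw data stays on the device.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][1])
    averaged = [0.0] * dim
    for n, weights in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += (n / total) * w
    return averaged


# Hypothetical round with two edge devices.
updates = [
    (100, [0.2, 1.0]),   # device A: 100 local samples
    (300, [0.6, 2.0]),   # device B: 300 local samples
]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count means devices with more local data pull the global model further, which is a design choice rather than a requirement; uniform weighting is an alternative when sample counts are unreliable.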

Realizing the full potential of energy-efficient machine learning algorithms within the Internet of Things necessitates their seamless integration into widely adopted, standardized platforms. Currently, fragmented ecosystems and proprietary systems hinder the broad deployment of these innovations; a unified approach simplifies implementation and reduces compatibility issues. By embedding optimized algorithms directly into common IoT frameworks, developers gain immediate access to powerful tools for minimizing energy usage without requiring specialized expertise or extensive custom coding. This standardization fosters scalability, allowing sustainable practices to proliferate rapidly across diverse applications, from smart homes and cities to industrial monitoring and precision agriculture, ultimately maximizing the environmental and economic impact of a more efficient IoT landscape.

Achieving genuinely sustainable Internet of Things systems demands a comprehensive strategy that transcends isolated improvements in either hardware or software. While advancements in low-power microprocessors and energy-harvesting technologies are vital, these gains are easily offset by inefficient algorithms and communication protocols. Similarly, sophisticated software cannot fully compensate for power-hungry sensors or unreliable data transmission. Instead, a synergistic approach is required – one where hardware designs are explicitly tailored to support computationally lean software, and algorithms are optimized to minimize data transfer and processing demands. This co-design methodology necessitates cross-disciplinary collaboration, fostering innovation at the intersection of materials science, computer engineering, and data analytics, ultimately ensuring that the benefits of pervasive connectivity do not come at an unsustainable environmental cost.

The proliferation of interconnected devices defining the Internet of Things presents a significant, and growing, energy demand. Without a dedicated focus on minimizing this footprint, the very sustainability of the IoT is jeopardized; current trajectories suggest an unsustainable rise in energy consumption, potentially offsetting the efficiency gains these technologies promise. Prioritizing energy efficiency isn’t simply a matter of technological advancement, but a fundamental shift in design philosophy, demanding lifecycle assessments that consider manufacturing, operation, and eventual disposal. Environmental responsibility extends beyond energy, encompassing material sourcing, e-waste reduction, and the development of biodegradable or easily recyclable components. Ultimately, the IoT’s long-term success hinges on its ability to demonstrate a net positive environmental impact, transforming from a potential burden into a key enabler of a truly circular and sustainable economy.

The systematic literature review demonstrates a clear pursuit of optimized systems, specifically IoT networks, through the implementation of machine learning at the edge. This endeavor mirrors a fundamental principle of reverse engineering: to truly understand a network’s limitations, one must push against its boundaries. As a line popularly attributed to Henry David Thoreau puts it, “Go confidently in the direction of your dreams. Live the life you’ve imagined.” This applies here; researchers aren’t simply accepting current IoT architectures, but actively probing for improvements in sustainability and energy efficiency. Every proposed algorithm, every tested model, is a confident stride toward a more efficient and ecologically sound network: a life imagined for the Internet of Things. The study’s identification of gaps isn’t a confession of failure, but rather a clear-eyed assessment of where further exploration is needed to truly ‘hack’ the system for optimal performance.

What’s Next?

The systematic distillation of machine learning at the network edge, as presented, reveals a curious pattern. A wealth of theoretical propositions, elegantly crafted, yet conspicuously detached from the messiness of actual hardware. The pursuit of sustainability, ironically, often halts at the simulation stage. The true cost, in energy, materials, and the inevitable entropy of real-world deployment, remains largely uncalculated. The field now faces a necessary collision with practicality; the algorithms must be forced to contend with limited processing, intermittent connectivity, and the inherent unpredictability of sensors exposed to the elements.

Future progress will not be measured in improved accuracy on benchmark datasets, but in the demonstrable reduction of resource consumption in functioning systems. This necessitates a shift in evaluation metrics, prioritizing long-term stability, adaptability to changing conditions, and the ability to gracefully degrade in the face of failure. The current focus on model optimization is valuable, yet insufficient. The architecture itself, the very fabric of these edge networks, must become a subject of rigorous experimentation.

Ultimately, the enduring questions aren’t about what these algorithms can learn, but how they learn to survive. A truly sustainable system isn’t merely efficient; it’s resilient, self-repairing, and capable of evolving in response to an environment that, by its very nature, resists perfect prediction. The elegance of a solution is irrelevant if it collapses under the weight of reality.

Original article: https://arxiv.org/pdf/2601.11326.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-20 05:42