Author: Denis Avetisyan

A new deep learning architecture combines seismological expertise with artificial intelligence to improve the accuracy of seismic event classification.

This research introduces a Physics-Informed Convolutional Recurrent Neural Network (PI-CRNN) for enhanced earthquake, blast, and noise discrimination with improved interpretability.

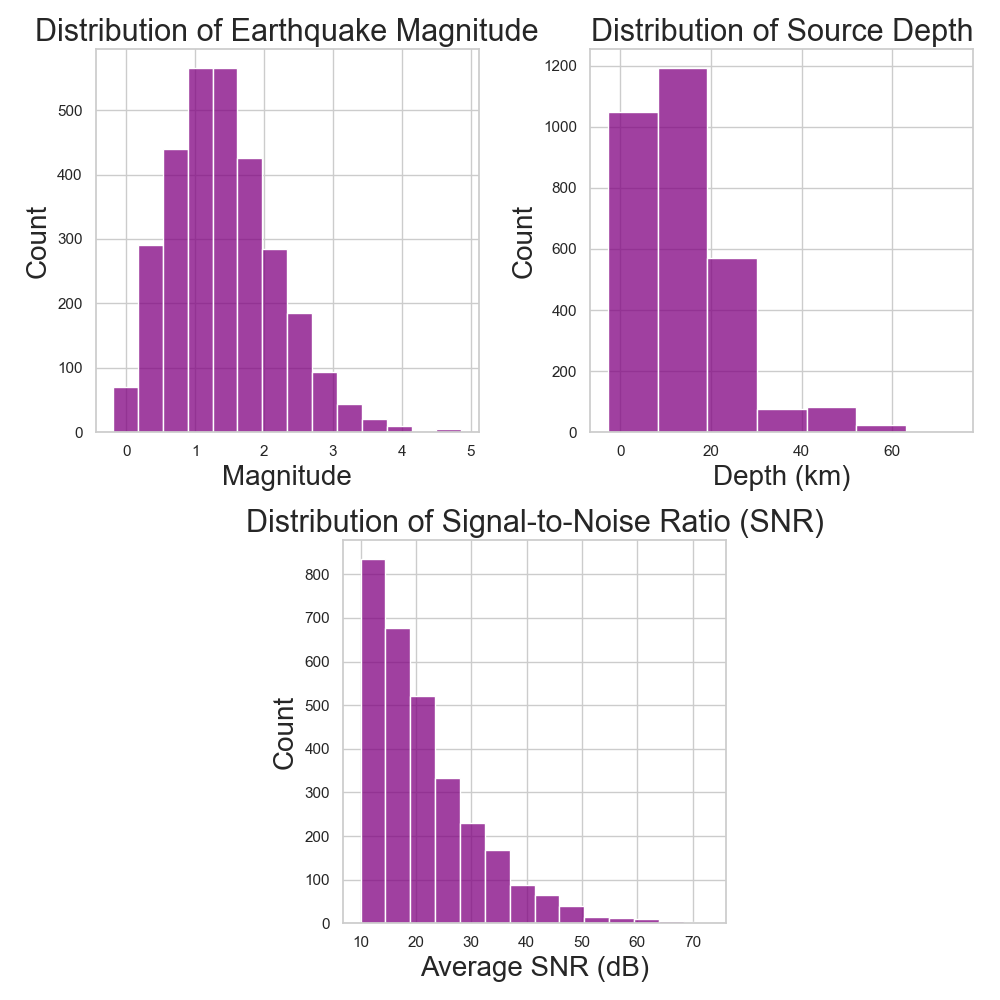

Accurate discrimination of seismic events remains challenging because signals from natural earthquakes and anthropogenic explosions look alike. The paper ‘An Interpretable Physics Informed Multi-Stream Deep Learning Architecture for the Discrimination between Earthquake, Quarry Blast and Noise’ addresses this with a Physics-Informed Convolutional Recurrent Neural Network (PI-CRNN) designed to integrate seismological domain knowledge directly into the learning process. Reaching 97.56% classification accuracy on the Curated Pacific Northwest AI-ready Seismic Dataset, the PI-CRNN outperforms standard deep learning and traditional methods by leveraging a multi-stream architecture and demonstrably learning distinct physical signatures. Could this approach pave the way for more reliable, transparent, and scalable AI-driven seismic monitoring systems?

Decoding the Earth’s Signals: A Foundation for Vigilance

The accurate differentiation of seismic signals – discerning naturally occurring earthquakes from those generated by explosions, or even simply background noise – represents a foundational challenge in global monitoring efforts. This capability is not merely academic; it’s central to international treaty verification, allowing for the confirmation of compliance with agreements limiting nuclear testing. Beyond arms control, precise seismic discrimination is vital for tracking natural hazards, assessing the impact of underground industrial activity, and even understanding the Earth’s internal structure. A misidentified event can lead to false alarms, wasted resources, or, conversely, a failure to detect a genuine threat, highlighting the critical need for robust and reliable analytical techniques capable of navigating the complexities of seismic data.

Current seismic event identification relies heavily on techniques such as the Shear Wave Amplitude Ratio and Short-Term Average to Long-Term Average (STA/LTA) triggering, yet these methods demonstrate surprisingly limited efficacy. Despite decades of refinement, analyses reveal that these traditional approaches correctly classify only approximately 45% of seismic signals. This considerable margin of error arises from the difficulty in differentiating between the nuanced waveforms generated by earthquakes, underground explosions, and naturally occurring ambient noise – particularly when signals are faint, distorted by geological complexities, or occur at great distances. The relatively low accuracy highlights a critical need for more sophisticated analytical tools capable of resolving ambiguous seismic data and improving the reliability of event discrimination for both scientific monitoring and treaty verification purposes.
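To make the STA/LTA idea concrete, here is a minimal sketch in plain Python. It is not the implementation used in the paper or in any particular seismology package; the function names, window lengths, threshold, and synthetic trace are all assumptions chosen for illustration. The ratio compares the mean signal energy in a short trailing window with the mean energy in a longer one, and a trigger fires when that ratio crosses a threshold.

```python
def sta_lta(signal, n_sta, n_lta):
    """Return the STA/LTA energy ratio for each sample of `signal`.

    The ratio at index i compares the mean energy of the last n_sta samples
    with the mean energy of the last n_lta samples. Indices that do not yet
    have a full long window are left at 0.0.
    """
    if not 0 < n_sta < n_lta:
        raise ValueError("need 0 < n_sta < n_lta")
    # Prefix sums of the squared samples make every window mean an O(1) lookup.
    prefix = [0.0]
    for x in signal:
        prefix.append(prefix[-1] + x * x)
    ratios = [0.0] * len(signal)
    for i in range(n_lta - 1, len(signal)):
        sta = (prefix[i + 1] - prefix[i + 1 - n_sta]) / n_sta
        lta = (prefix[i + 1] - prefix[i + 1 - n_lta]) / n_lta
        ratios[i] = sta / lta if lta > 0 else 0.0
    return ratios


def first_trigger(ratios, threshold):
    """Index of the first sample whose ratio exceeds `threshold`, or None."""
    for i, r in enumerate(ratios):
        if r > threshold:
            return i
    return None


# Synthetic trace (illustrative only): quiet background, then a burst at sample 200.
trace = [0.1 if i % 2 == 0 else -0.1 for i in range(200)]
trace += [1.0 if i % 2 == 0 else -1.0 for i in range(50)]

ratios = sta_lta(trace, n_sta=5, n_lta=50)
onset = first_trigger(ratios, threshold=3.0)
```

Note that a trigger of this kind only flags that energy has risen above the background. It says nothing about whether the source was an earthquake, a blast, or a noise burst, which is part of why such methods fall short as discriminators.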

The demand for precise and automated seismic event discrimination is escalating due to a confluence of factors, including the necessity for robust monitoring of natural hazards and the ongoing need to verify compliance with international treaties related to nuclear testing. Traditional seismic analysis techniques, while foundational, are increasingly challenged by the complexity of modern seismic data and the subtlety of signals from smaller events or those occurring in ambiguous geological settings. Consequently, research is heavily focused on developing advanced analytical techniques – incorporating machine learning, waveform classification, and sophisticated signal processing – to improve the accuracy and reliability of event identification. These innovations aim to move beyond simple amplitude ratios and trigger algorithms, enabling a more nuanced understanding of subsurface phenomena and reducing the rate of false positives, ultimately bolstering global security and disaster preparedness.

The Allure of Automation: Deep Learning Steps In

Deep Learning (DL) presents a viable method for automating the discrimination of seismic events through its capacity to identify intricate patterns within data. Traditional seismic analysis relies on manually defined features, which can be limited in capturing the full complexity of seismic waveforms. DL algorithms, particularly neural networks, circumvent this limitation by automatically learning hierarchical representations directly from raw or minimally processed data. This allows the system to identify subtle characteristics indicative of different event types – such as earthquakes, explosions, or landslides – without explicit programming for each characteristic. The efficacy of DL stems from its ability to model non-linear relationships and high-dimensional data, critical for accurately classifying complex seismic signals and reducing false positive rates.

Initial attempts at automated seismic event discrimination leveraged machine learning algorithms including Feed Forward Networks, Logistic Regression, and Decision Trees. These methods, while capable of basic pattern recognition, proved limited in their ability to effectively process the complexity inherent in seismic waveforms. Specifically, the algorithms struggled to differentiate between subtle variations caused by different event types – such as earthquakes versus explosions or naturally occurring tremors versus human activity – due to an inability to model the high-dimensional, non-linear relationships within the signal data. The reliance on manually engineered features further restricted performance, as these features often failed to capture the full range of information contained within nuanced waveforms, resulting in lower accuracy and increased false positive rates.

While early machine learning models for seismic event discrimination demonstrated limited success, subsequent implementations utilizing Random Forest and Capsule Neural Networks achieved accuracy rates of up to 95%. Despite this improvement, these more advanced methods haven’t consistently met the high reliability standards required for critical applications. Performance degradation is often observed when processing data from previously unseen seismic stations or events with atypical waveforms, indicating a need for further refinement and more robust generalization capabilities. Current research focuses on addressing these limitations through techniques like data augmentation, transfer learning, and the development of novel network architectures designed to improve adaptability and reduce false positive/negative rates.

Constraining the Algorithm: Physics-Informed Deep Learning

Physics-Informed Loss Functions improve deep learning model performance by incorporating known physical principles directly into the training process. Traditionally, deep learning models rely solely on data to learn relationships; however, this approach can lead to unreliable predictions when data is limited or noisy. A Physics-Informed Loss Function augments the standard loss function with terms representing the governing physical equations of the modeled system. This constraint guides the model towards solutions that adhere to established physical laws, thereby enhancing generalization to unseen data and improving robustness, particularly in scenarios where data availability is scarce or subject to uncertainty. The function effectively penalizes physically implausible solutions, ensuring the model learns not only from the data but also respects the underlying physics.
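The general shape of such a loss can be sketched as a data term plus a weighted physics penalty. The snippet below is a generic illustration, not the loss defined in the paper: the function names, the weight `lam`, and the notion of a per-sample `physics_residuals` value (zero when a prediction is fully consistent with the physical expectation) are all assumptions introduced here.

```python
import math


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class for one sample."""
    return -math.log(max(probs[label], 1e-12))


def physics_informed_loss(batch_probs, labels, physics_residuals, lam=0.1):
    """Mean cross-entropy plus a weighted mean squared physics residual.

    physics_residuals[i] is assumed to measure how far sample i departs from
    some physical expectation (0.0 means fully consistent). How that residual
    is computed is problem-specific and is NOT taken from the paper.
    """
    n = len(labels)
    data_term = sum(cross_entropy(p, y) for p, y in zip(batch_probs, labels)) / n
    physics_term = sum(r * r for r in physics_residuals) / n
    return data_term + lam * physics_term


# Two samples, three classes (e.g. earthquake, blast, noise); values are made up.
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
labels = [0, 1]

loss_consistent = physics_informed_loss(probs, labels, [0.0, 0.0])
loss_violating = physics_informed_loss(probs, labels, [1.0, 2.0])
```

With identical predictions, the second call returns a larger loss purely because of the physics term, so gradient descent is pushed away from physically implausible solutions even when the data term alone would be satisfied. The weight `lam` sets how strongly that pressure competes with fitting the labels.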

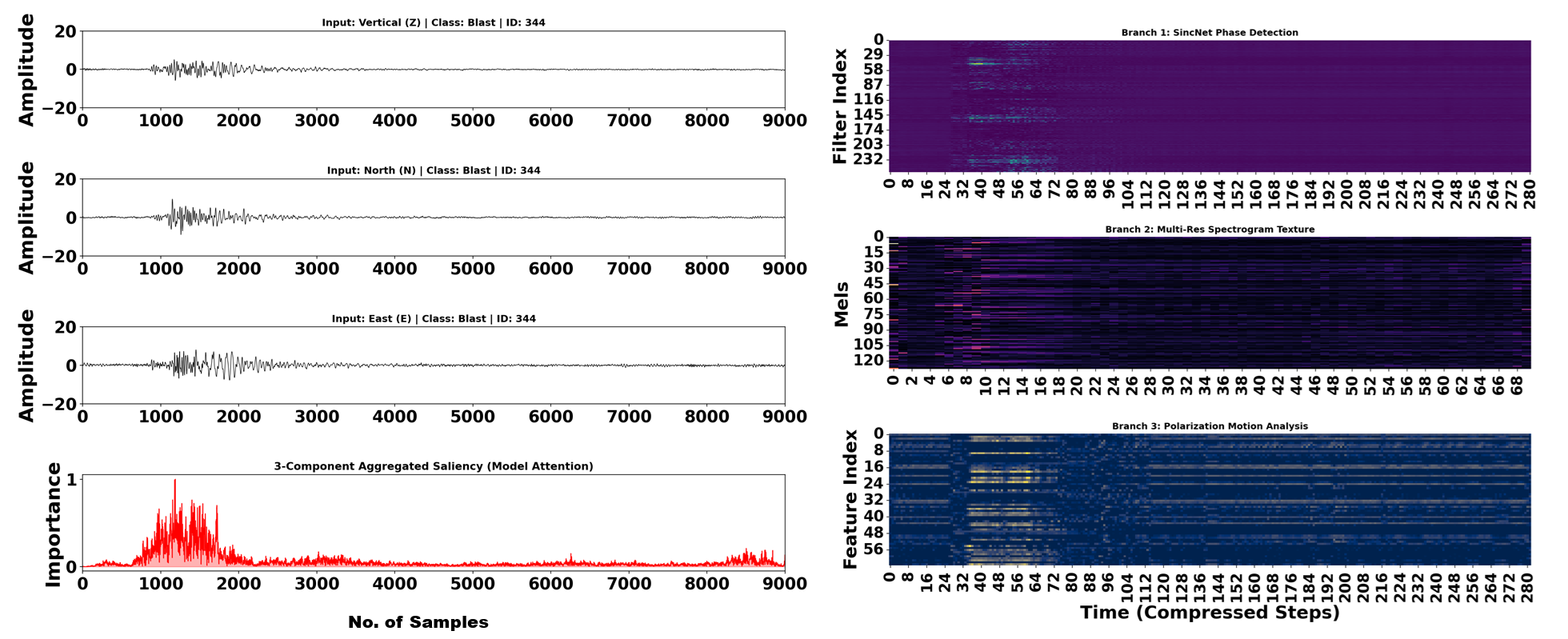

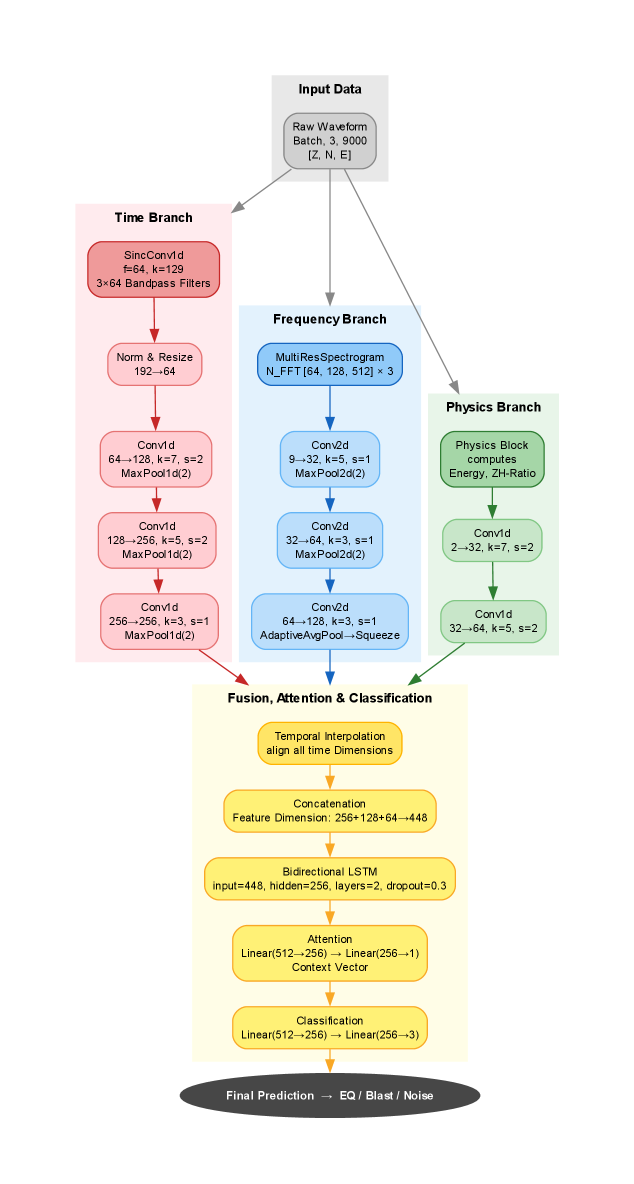

The PI-CRNN architecture uses a Convolutional Recurrent Neural Network to process sequential seismic data, pairing SincNet layers for feature extraction with a Bi-directional Long Short-Term Memory (Bi-LSTM) network. SincNet layers apply learnable sinc functions as convolutional filters, effectively capturing frequency-domain characteristics relevant to seismic event identification. The Bi-LSTM component then processes these extracted features in both forward and reverse time directions, allowing the model to capture temporal dependencies and contextual information crucial for distinguishing between earthquake and blast waveforms. Together, these components let the PI-CRNN learn robust and discriminative features directly from the raw seismic data, improving classification performance.
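The sinc-filter idea can be illustrated without a deep learning framework. The sketch below builds a single windowed-sinc band-pass kernel from two cutoff frequencies, which is the kind of filter a SincNet-style layer parameterizes; in a trainable layer the cutoffs would be learned, whereas here they are fixed. The function names, kernel length, Hamming window, and cutoff values are assumptions for illustration and are not taken from the paper. The Bi-LSTM stage is left out.

```python
import math


def sinc_bandpass(f_low, f_high, kernel_size=101):
    """Windowed-sinc band-pass kernel.

    Cutoffs are in cycles per sample (0 < f_low < f_high < 0.5).
    The kernel is the difference of two ideal low-pass filters,
    tapered by a Hamming window.
    """
    if not 0 < f_low < f_high < 0.5:
        raise ValueError("need 0 < f_low < f_high < 0.5")
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    half = kernel_size // 2
    kernel = []
    for k in range(kernel_size):
        n = k - half
        if n == 0:
            ideal = 2.0 * (f_high - f_low)  # limit of the sinc difference at n = 0
        else:
            ideal = (math.sin(2 * math.pi * f_high * n)
                     - math.sin(2 * math.pi * f_low * n)) / (math.pi * n)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * k / (kernel_size - 1))
        kernel.append(ideal * window)
    return kernel


def gain(kernel, freq):
    """Magnitude of the kernel's frequency response at `freq` (cycles/sample)."""
    re = sum(h * math.cos(2 * math.pi * freq * k) for k, h in enumerate(kernel))
    im = sum(h * math.sin(2 * math.pi * freq * k) for k, h in enumerate(kernel))
    return math.hypot(re, im)


kernel = sinc_bandpass(f_low=0.05, f_high=0.15)
passband_gain = gain(kernel, 0.10)  # inside the band
stopband_gain = gain(kernel, 0.35)  # well outside the band
```

Because each filter is fully described by two numbers rather than one free weight per tap, the learned filters remain readable as frequency bands, which fits the article's emphasis on interpretability.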

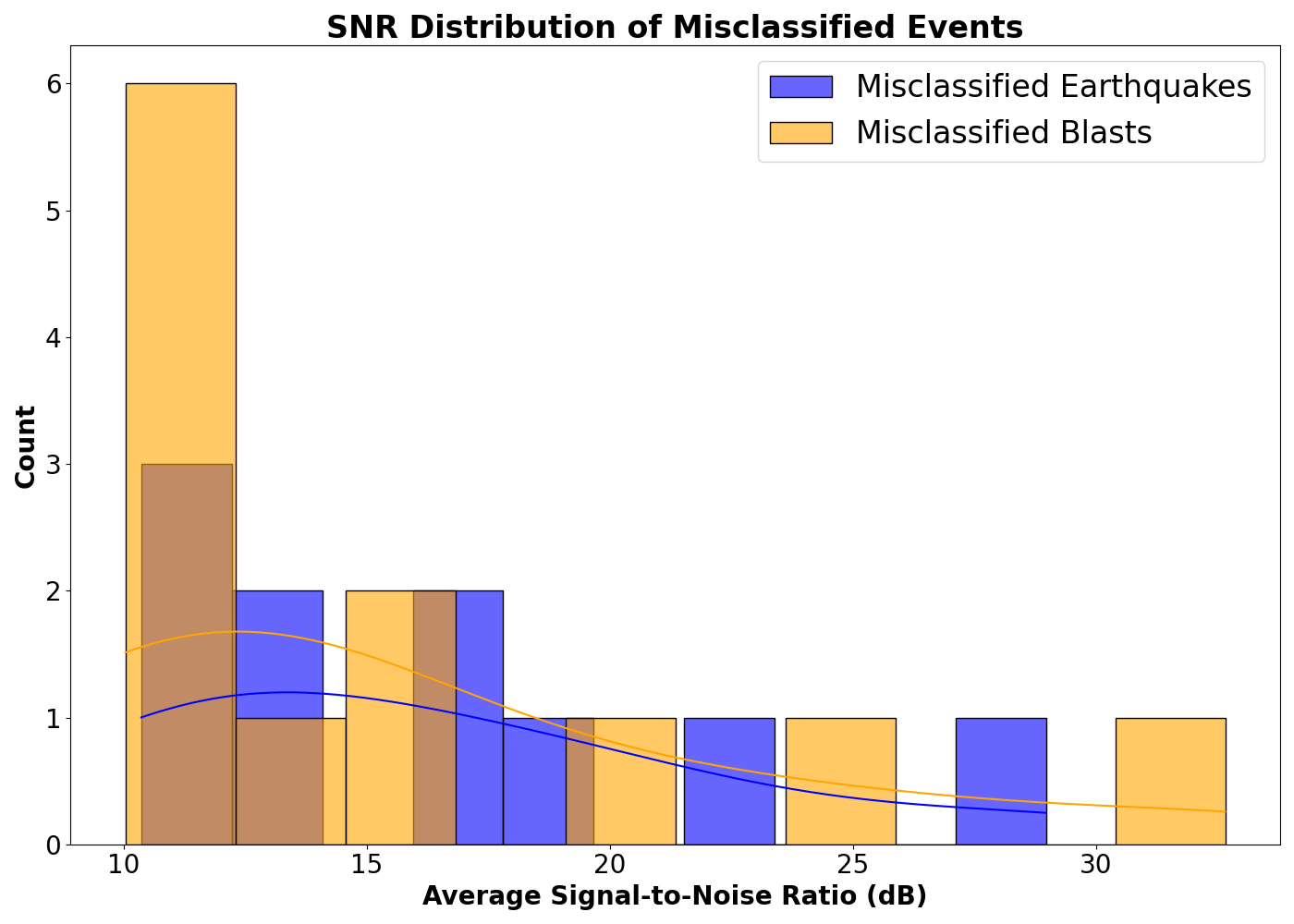

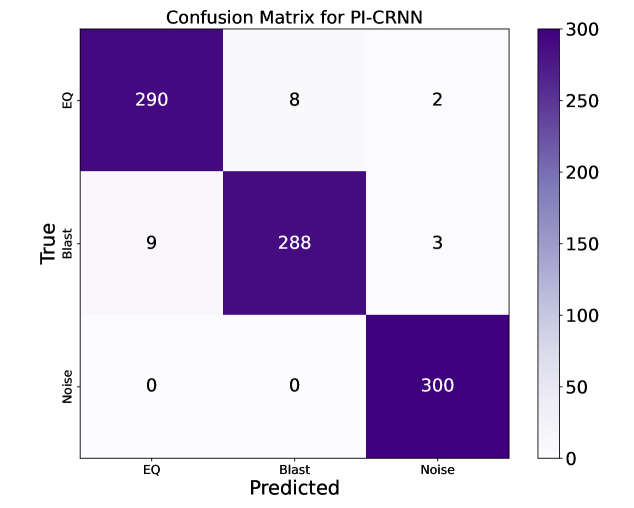

Evaluation of the proposed model was performed using the Curated Pacific Northwest AI-ready Seismic Dataset. Results demonstrate a classification accuracy of 97.56%, representing a statistically significant improvement over the 95% accuracy achieved by the baseline model. This performance increase is particularly evident in the reduction of misclassifications; the proposed model exhibited over a 50% decrease in instances where earthquakes were incorrectly identified as blasts, and vice versa, indicating enhanced discriminatory power between these seismic events.
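As a rough sanity check on those figures, the two reported accuracies can be converted to overall error rates and compared. This is only back-of-the-envelope arithmetic on the headline numbers; it is not the per-class earthquake/blast confusion count the paragraph refers to, and the function name is an assumption.

```python
def relative_error_reduction(baseline_accuracy, new_accuracy):
    """Fraction by which the overall error rate shrinks, relative to the baseline."""
    baseline_error = 1.0 - baseline_accuracy
    new_error = 1.0 - new_accuracy
    return (baseline_error - new_error) / baseline_error


# 95% baseline vs 97.56% reported: error rate falls from 5% to 2.44%.
reduction = relative_error_reduction(0.95, 0.9756)  # roughly 0.512
```

A gain of about 2.5 percentage points in accuracy therefore corresponds to roughly halving the overall error rate, which is in line with the reported drop in earthquake/blast confusions.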

Beyond Prediction: Towards Reliable and Transparent Seismic Intelligence

Automated seismic monitoring stands as a cornerstone of both global security and disaster preparedness, and the PI-CRNN framework presents a significant advancement in achieving reliable analysis. Traditional automated systems often struggle with the inherent complexities and noise within seismic data, leading to false positives or missed events – a critical flaw when verifying compliance with test ban treaties or responding to earthquakes. This new framework, however, leverages the power of deep learning – specifically, a convolutional recurrent neural network – to identify seismic signals with improved accuracy and consistency. By automating the initial assessment of seismic events, the PI-CRNN not only accelerates the process of distinguishing between natural occurrences, explosions, and other signals, but also reduces the reliance on manual analysis, freeing up expert resources for more nuanced investigations and ultimately bolstering global monitoring capabilities.

The PI-CRNN framework demonstrates enhanced resilience in seismic data analysis through the deliberate incorporation of established physical constraints. Unlike purely data-driven approaches, this model isn’t simply learning patterns; it’s guided by the fundamental principles governing seismic wave propagation. This integration functions as a powerful noise filter, diminishing the influence of spurious signals and irrelevant variations commonly found in real-world seismic recordings. Consequently, the model exhibits increased robustness across datasets of differing quality, maintaining reliable performance even when faced with incomplete or ambiguous information. This proactive approach to data integrity is particularly crucial in scenarios like remote monitoring or rapid disaster assessment, where data quality can be unpredictable and timely, accurate analysis is paramount.

The next phase of development for this seismic analysis framework centers on integrating Explainable AI (XAI) techniques, moving beyond simply accurate predictions to understanding why those predictions are made. This pursuit of transparency is critical for building trust in automated seismic monitoring systems, particularly in high-stakes applications like treaty verification and disaster response. By employing XAI, the model’s internal reasoning will become more accessible, revealing which seismic features most strongly influence its classifications and allowing experts to validate its conclusions. This enhanced interpretability not only bolsters confidence in the system’s reliability but also facilitates the identification of potential biases or unexpected behaviors, paving the way for continuous improvement and refinement of the analytical process.

The pursuit of discerning seismic events – earthquake, blast, or mere noise – reveals a fundamental truth about how humans approach complex problems. This architecture, integrating physics-informed neural networks, doesn’t simply seek the optimal solution, but rather a solution that feels right, aligning with pre-existing geological understanding. As Galileo Galilei observed, “You can know your father’s religion, but not know his reasons.” Similarly, this model doesn’t just predict; it attempts to illuminate why a particular event is classified as it is, offering a degree of transparency often missing in ‘black box’ deep learning systems. It’s a reassurance, a validation of both the data and the underlying principles, because people don’t choose the optimal, they choose what feels okay.

What Lies Ahead?

The pursuit of automated seismic event discrimination, as demonstrated by this work, is less a problem of signal processing and more a mapping of collective anxieties. Each refined algorithm, each incorporated ‘physics-informed’ constraint, is merely a more efficient articulation of what humans believe an earthquake or an explosion should look like. The current architecture, while promising improved interpretability, does not address the fundamental problem: the data itself is a product of imperfect sensing and, more crucially, deliberate obfuscation. Consider the inevitable arms race between increasingly sophisticated detection systems and those attempting to mask their activities.

Future efforts will undoubtedly focus on expanding the training datasets and incorporating multi-modal data – infrasound, atmospheric anomalies, even social media chatter. However, the true limitation lies in the assumption of stationarity. Seismic signatures shift with geological context, instrumentation changes, and the evolving methods of detonation. The model’s reliance on pre-defined ‘physics’ risks becoming a self-fulfilling prophecy, reinforcing existing biases and overlooking genuinely novel events.

Ultimately, the goal isn’t simply to classify events, but to understand the underlying intent. A tremor caused by fracking is seismically similar to a naturally occurring earthquake, yet the implications are profoundly different. The architecture offers a step toward automated classification, but it remains a tool, and like all tools, it reflects the priorities – and the fears – of its creators.

Original article: https://arxiv.org/pdf/2602.15993.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 13:03