Author: Denis Avetisyan

A new study demonstrates that pairing advanced AI models with external knowledge sources dramatically improves their ability to identify misleading content related to climate change.

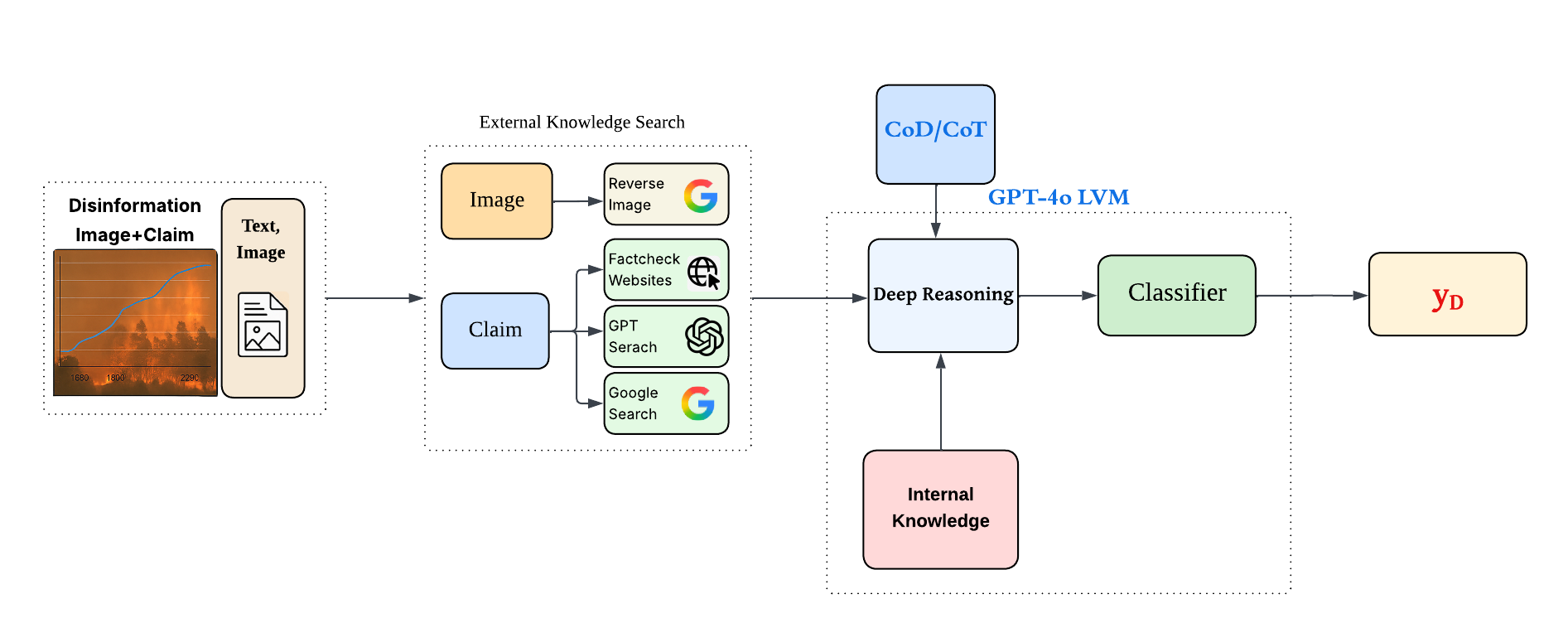

Integrating reverse image search, web search, and fact-checking tools with GPT-4o significantly enhances the detection of multimodal climate disinformation.

Despite advances in artificial intelligence, detecting increasingly sophisticated climate disinformation remains a significant challenge, particularly when it is presented through compelling visual media. This paper, ‘Multimodal Climate Disinformation Detection: Integrating Vision-Language Models with External Knowledge Sources’, addresses this limitation by demonstrating that augmenting large vision-language models (specifically GPT-4o) with real-time access to external knowledge sources dramatically improves their ability to identify misleading content. Our approach, which uses tools such as reverse image search and fact-checking databases, substantially enhances the model’s performance beyond its pre-trained knowledge base. As the volume and velocity of online disinformation continue to grow, can such knowledge-augmented systems effectively safeguard public understanding of critical climate issues?

The Rising Tide of Deception

The intensifying climate crisis is shadowed by a parallel surge in disinformation, creating a complex challenge for effective action. This isn’t simply a matter of differing opinions; increasingly sophisticated campaigns deliberately spread misleading narratives, often leveraging social media algorithms to amplify false or distorted information. The erosion of public trust in scientific consensus, fueled by these deceptive efforts, directly impedes the implementation of mitigation and adaptation strategies. Consequently, addressing climate change requires not only technological innovation and policy changes, but also a concerted effort to combat the spread of disinformation and restore faith in evidence-based understanding of the planet’s changing conditions. The consequences extend beyond delayed action; a distrustful public is less likely to support necessary investments or accept potentially disruptive changes to established lifestyles.

The proliferation of climate disinformation is increasingly characterized by multimodal content, where deceptive narratives are constructed through the strategic combination of imagery and text. This presents a significant challenge to detection efforts because traditional fact-checking primarily focuses on textual claims. Assessing the veracity of multimodal disinformation requires analyzing not only what is said, but also what is shown, and crucially, how the two interact to create a misleading impression. Subtle manipulations – such as pairing accurate text with misleading images, or vice versa – can bypass automated detection systems and exploit human cognitive biases. Consequently, identifying these deceptive combinations demands sophisticated analytical tools capable of cross-modal reasoning, as well as a deeper understanding of visual rhetoric and persuasive communication techniques.

The current landscape of climate disinformation presents a significant challenge to conventional fact-checking approaches. While traditional methods often rely on verifying singular claims within textual content, deceptive narratives are increasingly complex and multimodal, combining images, videos, and text in ways that obfuscate falsehoods and appeal to emotional reasoning. This shift necessitates far more resource-intensive investigations, as debunking requires not only identifying inaccuracies but also tracing the origins and spread of manipulated media. Furthermore, the sheer volume of disinformation being produced – often by automated accounts and coordinated campaigns – overwhelms the capacity of fact-checkers, creating a persistent gap between the emergence of false claims and their effective debunking. Consequently, even demonstrably false narratives can gain significant traction before being addressed, eroding public trust in scientific consensus and hindering meaningful climate action.

Beyond Isolated Facts: Seeking External Validation

Vision-Language Models (VLMs) demonstrate initial capabilities in analyzing visual and textual data; however, their performance in debunking disinformation is limited by the scope of their pre-training. These models operate primarily on information embedded within their internal parameters during training, creating a knowledge boundary that prevents verification of claims requiring information acquired after the training period or not present in the training data. This reliance on internally stored knowledge renders VLMs susceptible to inaccuracies and ineffective against novel disinformation campaigns or claims concerning specific, current events not adequately represented in their training corpus. Consequently, while capable of identifying some inconsistencies, VLMs frequently lack the external awareness necessary for comprehensive fact verification.

Augmenting Vision-Language Models (VLMs) with external knowledge access is crucial for improving veracity assessment beyond their internally stored data. This involves equipping VLMs with the capability to query and process information from sources like web search engines and dedicated fact-checking websites. By retrieving contextual evidence related to a claim, the model can move beyond pattern recognition and internal associations to evaluate consistency with documented realities. This external knowledge is not simply appended to the VLM’s input; effective architectures, such as the CMIE Framework and ERNIE2.0, are designed to integrate this evidence into the reasoning process, enabling the model to weigh the claim against the retrieved information and ultimately determine its likelihood of being true or false.

GPT-4o, while possessing substantial internal knowledge, demonstrably improves its ability to assess claim veracity when integrated with external information retrieval techniques. Specifically, pairing the model with web search allows it to access current events and broader contextual data not present in its training corpus. Reverse image search further enhances this process by enabling verification of image authenticity and identification of an image’s original source, which is crucial for debunking visually based disinformation. This combined approach allows GPT-4o to move beyond recalling stored facts and instead evaluate claims against a dynamically sourced, external knowledge base, facilitating more accurate and reliable assessments.
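A minimal sketch of how retrieved evidence might be folded into a single verification prompt for a vision-language model. The retriever functions (`web_search`, `reverse_image_search`, `fact_check_lookup`) are hypothetical stubs standing in for real tools, and the prompt wording is an assumption; the paper’s actual prompt format and tool interfaces are not reproduced here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Evidence:
    source: str  # which (hypothetical) tool produced this snippet
    text: str    # retrieved snippet or summary


# Hypothetical stand-ins for real tools; a real system would call search APIs here.
def web_search(claim: str) -> List[Evidence]:
    return [Evidence("web_search", f"Articles discussing: {claim}")]


def reverse_image_search(image_url: str) -> List[Evidence]:
    return [Evidence("reverse_image_search", f"Pages containing images similar to {image_url}")]


def fact_check_lookup(claim: str) -> List[Evidence]:
    return [Evidence("fact_check", "No matching fact-check found")]


def build_verification_prompt(claim: str, image_url: str) -> str:
    """Gather evidence from each tool and format it as numbered context for a VLM."""
    evidence: List[Evidence] = []
    evidence += web_search(claim)
    evidence += reverse_image_search(image_url)
    evidence += fact_check_lookup(claim)
    lines = [f"[{i}] ({e.source}) {e.text}" for i, e in enumerate(evidence, start=1)]
    return "\n".join([
        "You are verifying a climate-related image-text post.",
        f"Claim: {claim}",
        f"Image: {image_url}",
        "Retrieved evidence:",
        *lines,
        "Using only the evidence above, label the post TRUE or FALSE and cite evidence numbers.",
    ])


print(build_verification_prompt("Arctic sea ice has grown every year since 2012",
                                "https://example.com/ice.jpg"))
```

Numbering each evidence item lets the model cite which source supports its verdict, which makes the final judgment easier to audit.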

The CMIE (Contextualized Multi-modal Inference) Framework and the ERNIE2.0 model represent distinct approaches to integrating external evidence into a model’s reasoning process. CMIE achieves this through a modular design that allows varied knowledge sources to be incorporated, while ERNIE2.0 relies on continual pre-training to strengthen knowledge integration. Empirical results show that combining data from all four external knowledge sources – web search, fact-checking websites, reverse image search, and knowledge graphs – within these frameworks yields a zero rejection rate: the model returned a verdict for every claim instead of declining to answer. A zero rejection rate measures coverage rather than correctness. It shows that the model was always willing to commit to a true or false judgment once sufficient external evidence was available, while the accuracy of those judgments has to be read from separate metrics.
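To see why a zero rejection rate is not the same as perfect accuracy, here is a small sketch. It assumes the usual meaning of rejection rate (the share of items for which the model declines to give a verdict, represented here as `None`); the paper’s exact definition may differ, and the labels below are made-up toy values.

```python
from typing import List, Optional


def summarize(predictions: List[Optional[str]], gold: List[str]) -> dict:
    """predictions hold 'true'/'false', or None when the model declined to answer."""
    assert len(predictions) == len(gold)
    n = len(gold)
    answered = [(p, g) for p, g in zip(predictions, gold) if p is not None]
    correct = sum(p == g for p, g in answered)
    return {
        "rejection_rate": (n - len(answered)) / n,
        "accuracy_on_answered": correct / len(answered) if answered else 0.0,
        "overall_accuracy": correct / n,  # abstentions count as incorrect
    }


gold = ["false", "false", "true", "false"]
preds = ["false", "true", "true", "true"]  # the model never abstains
print(summarize(preds, gold))
# {'rejection_rate': 0.0, 'accuracy_on_answered': 0.5, 'overall_accuracy': 0.5}
```

The model in this toy run answers every item, so its rejection rate is zero, yet half of its verdicts are wrong.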

The Architecture of Truth: A Multi-Stage Reasoning Process

Effective reasoning transcends simple keyword detection and necessitates a structured, multi-stage process. This involves initial evidence gathering, followed by critical analysis to determine relevance and validity. Subsequent steps include synthesizing information from multiple sources, identifying potential biases or inconsistencies, and ultimately formulating a logical conclusion supported by the analyzed evidence. This systematic approach contrasts with superficial pattern matching and aims to establish a robust and justifiable rationale for any claim or decision, minimizing the risk of errors based on incomplete or misinterpreted data.
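The stages above can be made concrete with a deliberately simple, rule-based sketch. In the study the reasoning is carried out by the language model itself; here the `Finding` type, the relevance threshold of 0.5, and the 0.8 conflict ratio are hypothetical choices meant only to show how gathering, filtering, synthesis, consistency checking, and conclusion fit together.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    source: str
    relevance: float  # 0..1, how directly the evidence addresses the claim
    stance: str       # "supports", "refutes", or "neutral"


def assess(findings: List[Finding], min_relevance: float = 0.5) -> str:
    # Stage 1 (gathering) produced `findings`; stage 2 keeps only relevant evidence.
    relevant = [f for f in findings if f.relevance >= min_relevance]
    if not relevant:
        return "insufficient evidence"
    # Stage 3: synthesize across sources, weighting each stance by its relevance.
    support = sum(f.relevance for f in relevant if f.stance == "supports")
    refute = sum(f.relevance for f in relevant if f.stance == "refutes")
    # Stage 4: flag an inconsistency when the two sides are nearly balanced.
    if support > 0 and refute > 0 and min(support, refute) / max(support, refute) > 0.8:
        return "conflicting evidence"
    # Stage 5: conclude in favour of the better-supported side, if there is one.
    if support == refute:
        return "insufficient evidence"
    return "likely true" if support > refute else "likely false"


print(assess([
    Finding("web_search", 0.9, "refutes"),
    Finding("fact_check", 0.8, "refutes"),
    Finding("forum_post", 0.2, "supports"),  # dropped in stage 2 as weakly relevant
]))  # likely false
```

Keeping each stage explicit means a wrong conclusion can be traced to a specific step, such as a relevance filter that discarded a key source.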

Chain-of-Thought (CoT) and Chain-of-Draft (CoD) prompting are techniques used to enhance the reasoning capabilities of large language models by explicitly requesting a step-by-step account of the model’s decision-making process. CoT prompting elicits intermediate reasoning steps before the final answer, either by supplying example question-answer pairs that include those steps or simply by instructing the model to reason step by step. CoD prompting keeps the step-by-step structure but asks the model to write each intermediate step as a terse draft of only a few words, which cuts the number of tokens spent on reasoning while preserving its structure. Both methods make the model’s reasoning easier to inspect, which helps in spotting errors or biases, and they often produce more accurate outputs than direct prompting.
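The practical difference between the two strategies lies mostly in the instruction. The sketch below is illustrative: the instruction wording, the `####` separator used to mark the final label, and the helper names `make_prompt` and `parse_label` are assumptions for easy parsing, not the prompts used in the study.

```python
from typing import Optional

# Illustrative instructions only; not the prompts used in the study.
COT_INSTRUCTION = (
    "Think step by step and explain each reasoning step in full. "
    "Give the final label after the separator ####."
)
# Chain-of-Draft keeps the steps but caps each one at a short draft.
COD_INSTRUCTION = (
    "Think step by step, but keep only a minimal draft of each step, "
    "five words at most. Give the final label after the separator ####."
)


def make_prompt(instruction: str, claim: str, evidence: str) -> str:
    """Combine a reasoning instruction with the claim and retrieved evidence."""
    return f"{instruction}\n\nClaim: {claim}\nEvidence: {evidence}\nLabel (TRUE or FALSE):"


def parse_label(response: str) -> Optional[str]:
    """Return the upper-cased text after the last '####', or None if it is missing."""
    if "####" not in response:
        return None
    return response.rsplit("####", 1)[1].strip().upper()


draft_response = "Photo predates event. Caption misdates it. #### false"
print(parse_label(draft_response))  # FALSE
```

Because both strategies end with the same separator, the same parser can score CoT and CoD runs side by side, while the drafted steps remain available for error analysis.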

Prompting strategies such as Chain-of-Thought (CoT) and Chain-of-Draft (CoD) enable structured reasoning within GPT-4o when assessing climate-related claims. These techniques move beyond simple claim verification by explicitly requesting the model to detail its analytical steps, including evidence identification and justification for conclusions. Applying CoT and CoD allows for a granular examination of the model’s reasoning process, facilitating identification of potential biases or inaccuracies in its evaluation of climate data and arguments. This controlled approach improves the reliability and transparency of GPT-4o’s responses to complex climate-related questions, and allows for focused error analysis and refinement of the model’s reasoning capabilities.

The CLiME Dataset is a resource specifically designed for assessing and improving the reasoning abilities of large language models in the context of climate change. It comprises a collection of claims related to climate science, accompanied by multimodal evidence including text, charts, and tables. Each claim within the dataset is meticulously annotated with labels indicating its verifiability based on the provided evidence, and the specific evidence supporting or refuting the claim is identified. This granular annotation enables quantitative evaluation of a model’s ability to accurately assess climate-related assertions and justify its conclusions based on multimodal inputs, facilitating research into more robust and reliable AI reasoning systems.
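The dataset’s actual schema is not given here, so the following is a hypothetical sketch of what an annotated record and a per-claim score might look like. The field names, the label vocabulary, the evidence IDs, and the `score` helper are all assumptions; the point is that annotating the decisive evidence lets a model be scored on *which* evidence it used, not only on its final label.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ClaimRecord:
    claim: str
    evidence_ids: List[str]  # ids of text passages, charts, or tables shown with the claim
    label: str               # hypothetical vocabulary, e.g. "supported" or "refuted"
    gold_evidence: Set[str] = field(default_factory=set)  # ids annotated as decisive


def score(record: ClaimRecord, predicted_label: str, cited: Set[str]) -> dict:
    """Score the label and the precision/recall of the evidence the model cited."""
    hits = cited & record.gold_evidence
    return {
        "label_correct": predicted_label == record.label,
        "evidence_precision": len(hits) / len(cited) if cited else 0.0,
        "evidence_recall": len(hits) / len(record.gold_evidence) if record.gold_evidence else 0.0,
    }


record = ClaimRecord(
    claim="Global mean temperature has not risen since 1998",
    evidence_ids=["chart_1", "table_2", "text_3"],
    label="refuted",
    gold_evidence={"chart_1", "text_3"},
)
print(score(record, "refuted", {"chart_1", "table_2"}))
# {'label_correct': True, 'evidence_precision': 0.5, 'evidence_recall': 0.5}
```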

Beyond Accuracy: Validating and Interpreting Detection Systems

Establishing the true efficacy of any disinformation detection system demands a commitment to rigorous evaluation. Simply achieving a high score on a limited dataset is insufficient; a robust assessment must consider performance across diverse examples, varying degrees of deceptive intent, and different modalities of information (text, image, and video). This requires established benchmarks and carefully designed evaluation metrics that move beyond simple accuracy to capture nuances such as the ability to correctly identify why a piece of content is misleading, not just that it is. Without such thorough testing, it remains difficult to tell whether improvements represent genuine advances in deception detection or merely overfitting to specific datasets, which hinders the development of truly reliable tools against the growing threat of misinformation.

The evaluation of disinformation detection models benefits significantly from standardized benchmarks, and DECEPTIONDECODED represents a crucial step in this direction. This framework moves beyond simple accuracy metrics by specifically assessing a model’s capacity to utilize supporting evidence when determining misleading intent. Rather than merely identifying whether a statement is false, DECEPTIONDECODED challenges models to pinpoint why it’s deceptive, demanding a demonstration of reasoning based on provided facts. This focus on evidence-based reasoning is critical, as it mirrors the human process of critical thinking and allows for a more nuanced understanding of a model’s capabilities – and limitations – in discerning truth from falsehood. Consequently, DECEPTIONDECODED provides a more robust and reliable method for comparing different approaches to disinformation detection and driving improvements in this vital field.

To foster confidence in automated disinformation detection, techniques such as Local Interpretable Model-agnostic Explanations, or LIME, are increasingly vital. LIME functions by approximating the complex decision-making process of a model with a simpler, interpretable model locally around a specific prediction. This allows researchers and users to understand which features – be they specific words, image elements, or data points – most influenced the model’s classification of a piece of content as disinformation. By revealing these key indicators, LIME doesn’t just deliver a ‘yes’ or ‘no’ answer, but provides a rationale for that determination, thereby enhancing transparency and accountability. This capability is crucial for building trust in these systems, especially when dealing with sensitive issues where misclassification could have significant consequences, and enables developers to identify and address potential biases within the model itself.
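The core idea of LIME can be shown in a from-scratch sketch for text: randomly drop words from the input, query the black-box model on each perturbed version, weight each sample by how close it is to the original, and fit a weighted linear model whose coefficients serve as the explanation. This is a simplified illustration, not the study’s setup; the open-source `lime` package provides a full implementation with different distance scaling and feature selection. `toy_classifier` is a hypothetical black box, and the kernel width and ridge strength are assumed values.

```python
import math
import random
from typing import Callable, List, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def explain(text: str, predict: Callable[[str], float], num_samples: int = 500,
            kernel_width: float = 0.25, ridge: float = 1e-3,
            seed: int = 0) -> List[Tuple[str, float]]:
    words = text.split()
    d = len(words)
    rng = random.Random(seed)
    masks = [[1] * d]  # include the unperturbed instance
    masks += [[rng.randint(0, 1) for _ in range(d)] for _ in range(num_samples - 1)]
    xs, ys, ws = [], [], []
    for z in masks:
        ys.append(predict(" ".join(w for w, keep in zip(words, z) if keep)))
        kept = sum(z)
        # Cosine distance between the binary mask and the all-ones original.
        dist = 1.0 - (math.sqrt(kept / d) if kept else 0.0)
        ws.append(math.exp(-(dist ** 2) / kernel_width ** 2))  # proximity kernel
        xs.append([1.0] + [float(v) for v in z])  # leading 1.0 is the intercept
    # Weighted ridge regression: (X^T W X + ridge * I) beta = X^T W y
    p = d + 1
    a = [[sum(w * x[i] * x[j] for x, w in zip(xs, ws)) + (ridge if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    b = [sum(w * x[i] * y for x, y, w in zip(xs, ys, ws)) for i in range(p)]
    beta = solve(a, b)
    return sorted(zip(words, beta[1:]), key=lambda t: abs(t[1]), reverse=True)


def toy_classifier(text: str) -> float:
    """Hypothetical black box: probability that a post is disinformation."""
    tokens = text.lower().split()
    return 0.1 + (0.6 if "hoax" in tokens else 0.0) + (0.2 if "fake" in tokens else 0.0)


for word, weight in explain("climate change is a hoax say fake experts", toy_classifier)[:3]:
    print(f"{word}: {weight:+.3f}")
```

Because the toy classifier is exactly linear in word presence, the surrogate recovers weights close to 0.6 for "hoax" and 0.2 for "fake"; with a real model, the surrogate is only a local approximation around the explained input.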

Recent investigations have revealed a substantial improvement in the detection of climate-related disinformation through the strategic integration of external knowledge sources with the GPT-4o model. Using a dataset of more than 2 million tokens distributed across 500 distinct prompts (an average of over 4,000 tokens per prompt), the study demonstrated a marked improvement in identifying misleading multimodal content. This approach leverages the model’s capacity to synthesize information from diverse sources, enabling it to more effectively discern factual accuracy within complex narratives. The findings suggest that augmenting large language models with external knowledge not only increases detection rates but also strengthens the model’s ability to contextualize information and resist manipulation, offering a promising avenue for combating the spread of climate misinformation.

The study reveals a critical dependence on external validation. Internal knowledge, while useful, proves insufficient to combat sophisticated disinformation. This echoes a timeless sentiment: “The eloquence of fools is always convincing.” Blaise Pascal observed this long ago. The paper’s success isn’t solely about the power of GPT-4o, but about anchoring that power to verifiable facts. Abstractions age, principles don’t. Every complexity, like discerning truth from falsehood, needs an alibi, and that alibi is found in external knowledge sources. The integration of tools like reverse image search isn’t merely a technical enhancement; it’s a methodological necessity.

The Road Ahead

The demonstrable improvement achieved by supplementing a large language model with external validation does not represent a triumph, but an admission. The system required instruction – a cross-reference with established reality. A truly intelligent system would not need to ask what is, but already know. The persistence of error, even in a model of this scale, suggests the core challenge isn’t building bigger systems, but more fundamentally accurate ones. The integration of reverse image search and fact-checking represents a scaffolding, necessary for present function, but ideally temporary.

Future work must address the limitations inherent in relying on external sources. Those sources, after all, are themselves imperfect and subject to manipulation. The pursuit of ‘truth’ through aggregation is a fragile endeavor. The focus should shift toward building models capable of identifying not just factual inaccuracies, but also the structure of disinformation – the rhetorical devices, the logical fallacies, the appeals to emotion that circumvent rational assessment.

Ultimately, the goal is not to detect lies, but to cultivate discernment. A system that merely flags falsehoods is a crutch. A system that teaches critical thinking, however, is a step toward genuine intelligence. Clarity is, after all, courtesy: not just to the user, but to the very concept of understanding.

Original article: https://arxiv.org/pdf/2601.16108.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 07:23