Author: Denis Avetisyan

A new deep learning approach leveraging advanced neural networks is showing promising results in the automated and accurate identification of skin conditions.

Research details a Swin Transformer model, enhanced with imbalance-aware techniques like Focal Loss and BatchFormer, to improve skin lesion classification accuracy.

Demand for dermatological care is growing while specialists remain in short supply, so accurate and timely diagnosis of skin disease remains a significant challenge. This research, detailed in ‘Towards Automated Differential Diagnosis of Skin Diseases Using Deep Learning and Imbalance-Aware Strategies’, presents a deep learning model, based on a Swin Transformer architecture and refined with techniques that address class imbalance, that achieves 87.71% accuracy on the ISIC-2019 dataset. By combining data augmentation with optimization strategies such as BatchFormer and Focal Loss, the model shows promise as a clinical support tool and patient self-assessment aid. Could this approach pave the way for more accessible and efficient dermatological care worldwide?

Decoding Subtle Visual Cues in Dermatological Imaging

The reliable identification of skin lesions presents a persistent challenge in dermatology, largely due to the frequently subtle distinctions between benign and malignant growths. Visual inspection, while foundational, is inherently subjective, leading to considerable variation even among experienced clinicians – a phenomenon known as inter-observer variability. This discrepancy isn’t simply a matter of differing opinions; lesions can exhibit overlapping features, atypical presentations, and evolve gradually, making definitive diagnosis difficult without specialized expertise and advanced tools. Consequently, misdiagnosis or delayed diagnosis can significantly impact patient outcomes, underscoring the need for more objective and consistent approaches to lesion assessment.

The International Skin Imaging Collaboration (ISIC) 2019 dataset has emerged as a cornerstone for the advancement of automated skin lesion diagnosis. This publicly available archive, comprising over 25,000 clinical and dermoscopic images, offers researchers a standardized and comprehensive resource for developing, training, and rigorously evaluating machine learning algorithms. Importantly, the dataset isn’t merely a collection of images; each lesion is accompanied by expert-validated diagnoses, enabling quantifiable performance assessments. The scale and quality of the ISIC-2019 dataset have fostered a collaborative environment, driving innovation in image recognition, deep learning, and ultimately, the potential for earlier and more accurate detection of skin cancer. Its accessibility has democratized research in this critical field, allowing teams worldwide to contribute to improved diagnostic tools.

The ISIC-2019 dataset, while a powerful tool for advancing skin lesion classification, presents a notable challenge: inherent class imbalance. Certain lesion types, such as rare melanomas or specific subtypes of benign nevi, are significantly underrepresented compared to more common presentations. This disparity can severely skew the performance of machine learning models: algorithms naturally prioritize learning from abundant data, which yields high accuracy on prevalent classes but poor recognition of the less frequent, yet potentially dangerous, conditions. Consequently, models may exhibit deceptively high overall accuracy while failing to generalize to the full spectrum of skin lesions. Specialized techniques, such as data augmentation or cost-sensitive learning, are needed to mitigate this imbalance and ensure robust diagnostic capabilities.
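A small sketch can make the "deceptively high accuracy" point concrete. The class counts below are invented for illustration and are not the ISIC-2019 distribution, and `balanced_accuracy` is a hypothetical helper name rather than anything taken from the paper.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)

# Invented, heavily imbalanced toy labels (not ISIC-2019 counts).
y_true = ["common"] * 90 + ["rare"] * 10
# A degenerate classifier that always predicts the majority class.
y_pred = ["common"] * 100

overall = accuracy(y_true, y_pred)
balanced = balanced_accuracy(y_true, y_pred)
print(f"overall accuracy:  {overall:.2f}")
print(f"balanced accuracy: {balanced:.2f}")
```

The always-majority classifier looks strong on overall accuracy yet never detects the rare class, which is why a per-class metric is worth reporting alongside the headline number.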

Expanding Diagnostic Horizons Through Data Augmentation

The ISIC-2019 dataset, used here for skin lesion classification, is limited in diversity with respect to lesion appearance, patient demographics, and imaging conditions. To address this, data augmentation techniques artificially increase the size and variability of the training set. They generate modified versions of existing images (rotations, flips, crops, and color adjustments are common examples) without requiring the acquisition of new data. This expands the dataset’s coverage of plausible variations and improves the robustness and generalization of models trained on it. The wider range of training examples reduces overfitting to the limited original dataset and improves performance on unseen data.
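As a minimal sketch of the geometric augmentations mentioned above, the following uses plain nested lists as a stand-in for an image. It covers only flips and quarter-turn rotations (not crops or color adjustments), and the function names are illustrative assumptions, not the study's pipeline.

```python
import random

def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror the image top to bottom."""
    return [list(row) for row in img[::-1]]

def rot90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def random_augment(img, rng):
    """Apply each flip with probability 0.5, then 0 to 3 quarter turns."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return img

# Tiny 2x3 "image"; real inputs would be full-resolution pixel arrays.
image = [[1, 2, 3],
         [4, 5, 6]]
rng = random.Random(0)  # fixed seed so the sketch is reproducible
variants = [random_augment(image, rng) for _ in range(4)]
```

Each variant contains exactly the same pixels in a different arrangement, so the label of the lesion is unchanged while the model sees a new orientation.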

Elastic Deformation and Fourier Transform are image augmentation techniques utilized to increase the robustness of machine learning models by simulating variations in lesion appearance. Elastic Deformation applies random local distortions to images, mimicking variations in skin elasticity and lesion shape caused by factors like patient movement or imaging conditions. Fourier Transform introduces variations in the frequency domain, altering image textures and patterns to simulate differences in image quality or lesion characteristics. Both techniques generate new training examples that are plausible variations of existing lesions, thereby exposing the model to a wider range of appearances and improving its ability to generalize to unseen data, particularly in scenarios with limited dataset diversity.
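The article does not specify how the Fourier-based augmentation is implemented, so the following is only one plausible reading: perturb the amplitude spectrum of a grayscale image while keeping its phase. The function name, the `strength` parameter, and the uniform noise model are all assumptions; elastic deformation is not covered here.

```python
import numpy as np

def fourier_amplitude_jitter(img, strength=0.1, rng=None):
    """Randomly rescale the amplitude spectrum while keeping the phase."""
    rng = np.random.default_rng() if rng is None else rng
    spectrum = np.fft.fft2(img)
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    # Multiplicative noise centred on 1.0; strength=0 leaves the image unchanged.
    scale = 1.0 + strength * rng.uniform(-1.0, 1.0, size=img.shape)
    jittered = amplitude * scale * np.exp(1j * phase)
    # Independent noise breaks conjugate symmetry, so the inverse transform is
    # complex; taking the real part projects it back to a real-valued image.
    return np.real(np.fft.ifft2(jittered))

rng = np.random.default_rng(0)
image = rng.random((8, 8))  # stand-in for a grayscale lesion image
augmented = fourier_amplitude_jitter(image, strength=0.2, rng=rng)
unchanged = fourier_amplitude_jitter(image, strength=0.0, rng=rng)
```

Because the phase carries most of the structural layout, the augmented image keeps the lesion's shape while its texture and contrast shift slightly.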

AutoAugment represents a suite of automated data augmentation techniques designed to discover the most effective augmentation policies for a given dataset. Rather than relying on hand-designed augmentation strategies, AutoAugment utilizes a search algorithm – typically reinforcement learning – to identify combinations of transformations (such as rotation, translation, color adjustments, and noise injection) and their associated magnitudes that yield the highest validation accuracy. This process involves training a child model on augmented data generated by different policy candidates and evaluating its performance; the search algorithm then updates the policy based on the child model’s results. By automating the policy discovery process, AutoAugment reduces the need for expert knowledge and often achieves superior performance compared to manually defined augmentation strategies, particularly on complex datasets.
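The search loop described above can be sketched in a few lines. This illustration deliberately swaps the reinforcement-learning controller for plain random search and replaces child-model training with a toy scoring function, so it shows only the shape of the procedure. The operation names and the scoring rule are invented.

```python
import random

OPS = ["rotate", "translate", "color", "noise"]  # hypothetical operation names

def sample_policy(rng, n_ops=2):
    """Draw a candidate policy: a list of (operation, probability, magnitude)."""
    return [(rng.choice(OPS), round(rng.random(), 2), rng.randint(0, 9))
            for _ in range(n_ops)]

def search(score_fn, n_trials, rng):
    """Keep the candidate policy with the highest score."""
    best_policy, best_score = None, float("-inf")
    for _ in range(n_trials):
        policy = sample_policy(rng)
        # In a real search this step trains a child model on data augmented
        # with `policy` and returns its validation accuracy.
        score = score_fn(policy)
        if score > best_score:
            best_policy, best_score = policy, score
    return best_policy, best_score

def toy_score(policy):
    """Toy stand-in for validation accuracy; prefers mid-range magnitudes."""
    return -sum(abs(magnitude - 5) for _, _, magnitude in policy)

best_policy, best_score = search(toy_score, n_trials=50, rng=random.Random(0))
print(best_policy, best_score)
```

The expensive part in practice is `score_fn`, since every candidate requires training a model, which is why smarter search strategies than random sampling are used.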

Swin Transformer: A Hierarchical Approach to Lesion Classification

The Swin Transformer exhibits enhanced performance on the ISIC-2019 Dataset when contrasted with conventional convolutional neural networks. Specifically, the Swin Transformer achieved an overall accuracy of 87.71% on this dataset, representing a significant improvement over the 80.94% accuracy attained by EfficientNet. Further, the Swin Transformer outperformed DenseNet, achieving a 2.3% higher accuracy. This hierarchical vision transformer architecture demonstrably surpasses the performance of these established convolutional network models in the context of the ISIC-2019 challenge.

The Swin Transformer achieved an overall accuracy of 87.71% when evaluated on the ISIC-2019 dataset. This metric represents the percentage of correctly classified images within the dataset, encompassing all classes present. The evaluation process utilized standard classification metrics to determine accuracy, providing a quantitative measure of the model’s performance on the task of identifying skin lesion types. This result serves as a baseline for comparison with other models and demonstrates the Swin Transformer’s capacity for effective image classification within the domain of dermatological imaging.

On the ISIC-2019 dataset, the Swin Transformer demonstrated a substantial performance gain over the EfficientNet architecture. While EfficientNet achieved an overall accuracy of 80.94%, the Swin Transformer attained 87.71%, an absolute improvement of 6.77 percentage points in the model’s ability to correctly classify skin lesion images within this dataset. The observed difference highlights the benefits of the Swin Transformer’s hierarchical vision transformer approach compared to the convolutional architecture of EfficientNet for this specific task.
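The size of this gap depends on how it is expressed. The short calculation below uses only the two accuracies reported above; the variable names are illustrative.

```python
swin_acc = 87.71          # percent, as reported above
efficientnet_acc = 80.94  # percent, as reported above

# Difference in percentage points.
absolute_gain = swin_acc - efficientnet_acc
# Gain relative to the baseline accuracy, in percent.
relative_gain = 100 * absolute_gain / efficientnet_acc
# Share of the baseline's errors that the stronger model removes, in percent.
error_reduction = 100 * absolute_gain / (100 - efficientnet_acc)

print(f"absolute gain:   {absolute_gain:.2f} percentage points")
print(f"relative gain:   {relative_gain:.2f}%")
print(f"error reduction: {error_reduction:.2f}%")
```

Read this way, the same result is a gain of 6.77 percentage points, roughly 8.4% relative to EfficientNet's accuracy, and a reduction of about 35.5% in EfficientNet's misclassifications.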

Evaluation of the Swin Transformer on the ISIC-2019 dataset demonstrates a 2.3% increase in overall accuracy when compared to a DenseNet model utilizing AutoAugment data augmentation techniques. This performance differential suggests the Swin Transformer’s improved capacity for feature extraction and representation learning within the context of dermatological image classification. The accuracy gain was determined through standardized evaluation metrics applied to the ISIC-2019 test set.

BatchFormer is integrated with the Swin Transformer to improve representation learning, particularly for under-represented classes within the ISIC-2019 dataset. This is achieved by enabling the model to analyze relationships between samples within a batch, allowing it to aggregate information across instances and create more robust feature representations. By considering inter-sample connections, BatchFormer facilitates the transfer of knowledge from well-represented to minority classes, improving overall performance and addressing the challenges posed by class imbalance. This approach goes beyond individual sample processing and leverages the batch itself as a source of information for enhanced feature extraction.
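The core idea, attention across the samples of a batch rather than across the patches of one image, can be sketched with NumPy. This is a simplified single-head version with random weights, not the authors' implementation: it omits the layer normalization, feed-forward block, and training-time details a full module would likely include, and every name below is an assumption.

```python
import numpy as np

def batch_attention(features, w_q, w_k, w_v):
    """Single-head self-attention across the batch axis, with a residual connection."""
    q, k, v = features @ w_q, features @ w_k, features @ w_v
    scores = q @ k.T / np.sqrt(k.shape[1])         # [N, N] sample-to-sample affinities
    scores -= scores.max(axis=1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # softmax: each row sums to 1
    # Each sample's output blends its own features with those of its batch mates.
    return features + weights @ v, weights

rng = np.random.default_rng(0)
n_samples, dim = 6, 4
features = rng.normal(size=(n_samples, dim))  # stand-in for backbone embeddings
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) * 0.1 for _ in range(3))
mixed, weights = batch_attention(features, w_q, w_k, w_v)
```

Row `i` of `weights` shows how much sample `i` draws on every other sample in the batch, which is the mechanism by which a minority-class sample can borrow information from better-represented ones.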

Focal Loss addresses the challenges posed by class imbalance in the ISIC-2019 dataset by modulating the standard cross-entropy loss. It introduces a focusing parameter, $\gamma$, which reduces the relative loss for well-classified examples, effectively down-weighting their contribution to the overall gradient. This allows the model to concentrate on hard, misclassified examples (particularly important for minority classes where false negatives are costly) and learn more discriminative features. The loss function is defined as $\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$, where $p_t$ is the model’s estimated probability for the correct class, $\alpha_t$ is a weighting factor to address overall class frequency, and $\gamma$ controls the rate at which easy examples are down-weighted.
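The formula above translates directly into code. The sketch below evaluates it for a single sample; the default `gamma=2.0` and `alpha_t=1.0` are illustrative assumptions, not the values used in the study.

```python
import math

def focal_loss(p_t, alpha_t=1.0, gamma=2.0):
    """Focal loss for one sample, given the probability assigned to its true class."""
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

def cross_entropy(p_t):
    """Standard cross-entropy for one sample; equals focal loss with gamma=0, alpha_t=1."""
    return -math.log(p_t)

easy, hard = 0.95, 0.30  # probabilities for a well-classified and a poorly classified sample
for name, p in [("easy", easy), ("hard", hard)]:
    ratio = focal_loss(p) / cross_entropy(p)
    print(f"{name}: CE={cross_entropy(p):.4f}  FL={focal_loss(p):.4f}  FL/CE={ratio:.4f}")
```

The ratio FL/CE equals $(1 - p_t)^{\gamma}$, so with these settings the easy example keeps only a small fraction of its cross-entropy loss while the hard example keeps about half of it.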

Refining Diagnostic Precision Through Adaptive Learning

The Swin Transformer’s diagnostic capabilities benefit significantly from a technique called ReduceLROnPlateau, a learning rate scheduler that intelligently optimizes the training process. Rather than employing a fixed learning rate throughout, this scheduler monitors the model’s performance on a validation dataset and dynamically adjusts the learning rate accordingly. When improvement on the validation set plateaus, indicating the model is nearing its optimal configuration, the learning rate is reduced. This prevents the model from overshooting the best possible solution and allows for finer adjustments, ultimately leading to improved generalization and more robust performance on unseen data. By adapting the learning rate based on actual performance, the Swin Transformer can efficiently converge towards a highly accurate and reliable diagnostic tool.
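The scheduling rule can be sketched in plain Python. Schedulers named ReduceLROnPlateau exist in both PyTorch and Keras; the class below is a simplified stand-in that ignores their improvement threshold and cooldown options. The `factor`, `patience`, and loss values are invented for illustration.

```python
class PlateauScheduler:
    """Cut the learning rate when the monitored validation loss stops improving."""

    def __init__(self, lr, factor=0.1, patience=2, min_lr=1e-6):
        self.lr, self.factor = lr, factor
        self.patience, self.min_lr = patience, min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
        # Reduce only after more than `patience` consecutive epochs without improvement.
        if self.bad_epochs > self.patience:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.bad_epochs = 0
        return self.lr

scheduler = PlateauScheduler(lr=1e-3, factor=0.1, patience=2)
val_losses = [0.90, 0.70, 0.65, 0.66, 0.65, 0.67, 0.64]  # invented validation losses
history = [scheduler.step(loss) for loss in val_losses]
print(history)
```

In this run the loss stops improving after the third epoch, and the learning rate is cut by a factor of ten once three epochs in a row fail to beat the best value.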

The model’s ability to accurately identify pathologies extends beyond the training dataset through the careful implementation of data augmentation techniques and strategically chosen loss functions. Robust data augmentation artificially expands the size of the training data by introducing modified versions of existing images – rotations, flips, and subtle alterations in contrast – thereby exposing the model to a wider range of possible variations. Simultaneously, the selection of appropriate loss functions guides the model to not only predict correctly but also to minimize errors with greater efficiency. This combined approach effectively combats overfitting, allowing the model to generalize its learned features to unseen data and ultimately improve diagnostic accuracy in real-world clinical applications.

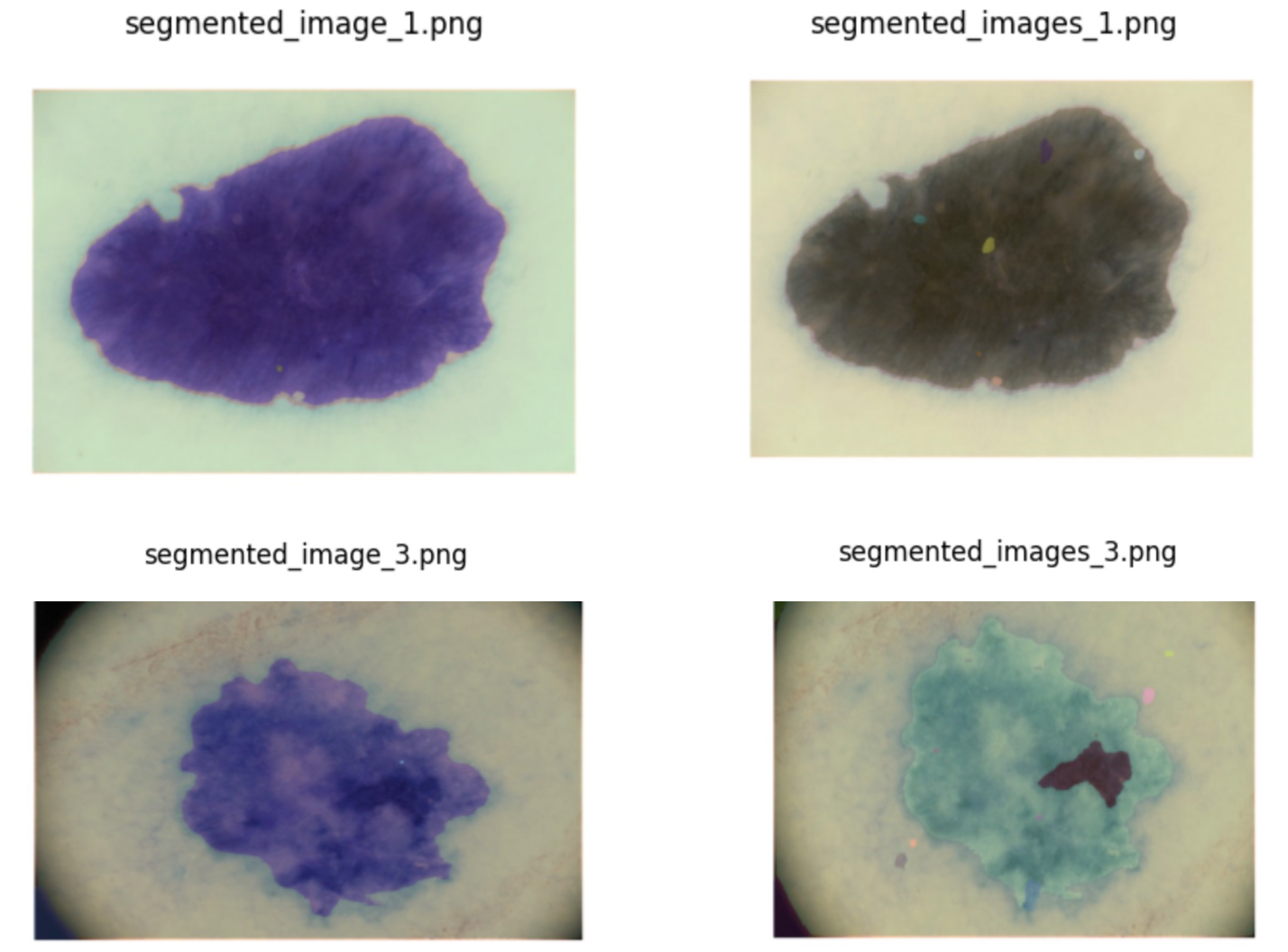

To achieve heightened precision in medical image analysis, the system incorporates the Segment Anything Model (SAM), a powerful tool for delineating lesion boundaries in fine detail. This integration moves beyond simple identification, enabling the model to define the shape and extent of anomalies within images. By refining these boundaries, SAM reduces ambiguity and the potential for false positives or negatives, bolstering diagnostic confidence for clinicians. The refined segmentation not only improves the accuracy of quantitative measurements, such as lesion area, but also provides a clearer visual representation of the affected region, facilitating more informed clinical decision-making and potentially improving patient outcomes.

The pursuit of automated differential diagnosis, as demonstrated in this research, mirrors the fundamental principles of pattern recognition inherent in complex systems. Just as a physicist seeks underlying order in chaotic motion, this work utilizes a Swin Transformer to discern subtle visual cues within skin lesion images. Andrew Ng aptly states, “AI is the new electricity.” This analogy resonates deeply; just as electricity required infrastructure and skillful application, realizing the potential of deep learning for medical diagnosis necessitates robust models, careful attention to data imbalances (addressed here with techniques like Focal Loss), and a commitment to rigorous validation. The model’s ability to navigate class imbalance isn’t merely a technical achievement; it’s an acknowledgement that true intelligence lies in adapting to the non-uniformity of the real world.

Beyond the Surface

The demonstrated success of Swin Transformer architectures, coupled with strategies for managing class imbalance, offers more than just improved diagnostic accuracy. It raises the question: what patterns remain obscured within these datasets? While techniques like Focal Loss and BatchFormer demonstrably mitigate the effects of uneven class representation, they treat a symptom, not the underlying cause. Future investigations should focus on why certain dermatological conditions are so sparsely represented in available data: is it genuine rarity, a reporting bias, or a failure of current data collection methodologies?

Furthermore, the current emphasis largely resides on classification. However, skin lesions rarely present as isolated instances. The model’s capacity to integrate contextual information – lesion morphology in relation to surrounding tissue, patient history, and even demographic factors – remains largely unexplored. A shift toward a more holistic, predictive modeling approach, perhaps leveraging generative adversarial networks to simulate rare cases, could reveal subtle visual cues currently lost in the noise.

Ultimately, the true value of this work lies not in achieving incrementally higher accuracy scores, but in refining the questions asked of the data. The model offers a mirror, reflecting not just the visible spectrum of skin diseases, but also the gaps in understanding and the biases inherent in the data itself. Observing those distortions, rather than simply correcting for them, may prove to be the most revealing diagnostic tool of all.

Original article: https://arxiv.org/pdf/2601.00286.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-05 14:05