Author: Denis Avetisyan

A novel forecasting framework leverages agentic systems and frequency-aware modeling to anticipate and reconstruct critical ramp events in wind power generation.

This paper introduces an event-centric forecasting method that uses an enhanced Ramping Behaviour Analysis (RBAθ) and an agentic system for dynamic model selection to improve wind power prediction accuracy.

Accurate wind power forecasting is challenged by inherent variability and complex, site-specific dynamics, yet traditional trajectory-first approaches often struggle with generalisation and operational alignment. This paper introduces an event-centric forecasting framework, detailed in ‘Agentic Workflow Using RBA$_θ$ for Event Prediction’, that predicts ramp events directly via frequency-aware modeling and reconstructs power trajectories afterwards. By integrating wavelet decomposition, adaptive feature selection, and an agentic layer for dynamic workflow selection, all built upon an enhanced Ramping Behaviour Analysis (RBAθ), we demonstrate stable, long-horizon prediction and zero-shot transferability. Could this event-first paradigm unlock a more robust and operationally intelligent approach to wind power integration and grid stability?

The Inevitable Flux: Forecasting Grid Instability

The reliable operation of modern power grids hinges on the ability to anticipate and manage ‘Ramp Events’: swift, substantial shifts in energy demand or renewable generation. Left unchecked, these fluctuations can destabilize the grid, potentially leading to cascading failures and widespread blackouts. A consistent energy supply requires a delicate balance between generation and consumption; Ramp Events disrupt this equilibrium, stressing transmission infrastructure and demanding rapid responses from grid operators. The growing share of intermittent renewable sources such as solar and wind exacerbates the challenge, because their output is inherently variable and harder to predict. Accurate forecasting of these events is therefore not merely a matter of operational efficiency; it is fundamental to a resilient and dependable energy future for communities and industries alike.

Conventional time-series models such as SARIMAX (Seasonal Autoregressive Integrated Moving Average with eXogenous regressors) frequently struggle to forecast ‘Ramp Events’, the rapid shifts in power demand or renewable output. These models assume that the series becomes stationary (its statistical properties stay constant over time) once trends and seasonal patterns have been removed by differencing, a condition rarely met by the volatile dynamics that characterize ramps. The difficulty arises from many interacting factors, including fluctuating weather affecting solar and wind generation, unpredictable consumer behavior, and unforeseen grid disturbances, which introduce non-linearities and abrupt changes that these linear methods struggle to capture. As a result, SARIMAX-style forecasts often show large errors precisely during periods of high volatility or rapid transition, hindering proactive grid management and increasing the risk of instability.
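A small illustration of why differencing does not make a ramp "stationary": the sketch below assumes a hypothetical wind-power trace that is flat, ramps sharply, then plateaus. After the lag-1 differencing used by ARIMA-family models (the `d=1` step), the variance of the differenced series is zero in windows away from the ramp and large in the window containing it, so its statistics still change over time. This is an illustration only, not a formal stationarity test.

```python
from statistics import pvariance

def first_difference(series):
    """Lag-1 difference, i.e. the d=1 step of an ARIMA/SARIMAX model."""
    return [b - a for a, b in zip(series, series[1:])]

def windowed_variance(series, window):
    """Population variance of consecutive, non-overlapping windows."""
    return [
        pvariance(series[i:i + window])
        for i in range(0, len(series) - window + 1, window)
    ]

# Hypothetical wind-power trace in MW: flat, a sharp up-ramp, then flat again.
power = [20.0] * 12 + [35.0, 50.0, 65.0, 80.0] + [80.0] * 12
diffs = first_difference(power)
print(windowed_variance(diffs, 9))  # → [0.0, ~55.6, 0.0]
```

The burst of non-zero differences is exactly the kind of localized, regime-like behavior that a constant-parameter model fitted to the whole series cannot anticipate.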

Predicting critical ramp events – those sudden, substantial shifts in power generation or demand – necessitates a departure from conventional forecasting techniques. Traditional time-series analysis, while useful in many contexts, often falters when confronted with the non-stationary and inherently chaotic nature of these events. Successfully anticipating these fluctuations demands algorithms capable of discerning subtle, previously unnoticed patterns within the data, and crucially, of proactively modeling the potential for abrupt, discontinuous changes. This requires sophisticated approaches that move beyond simple extrapolation, embracing techniques like machine learning and advanced statistical modeling to capture the complex interplay of factors influencing grid dynamics and ultimately enhance power system resilience.

Shifting the Focus: Event-Centric Prediction

Event-Centric Forecasting represents a departure from traditional time-series forecasting by explicitly targeting the prediction of Ramp Events – defined periods of significant and rapid change within a dataset. Instead of predicting a continuous value at a future time, this approach focuses on determining the probability of an event occurring, its expected magnitude when it does occur, and the anticipated duration of the event. This reframing allows for a more granular analysis and modeling of key system behaviors, concentrating predictive efforts on the most impactful periods rather than attempting to model all data points equally. The core principle involves identifying and characterizing these discrete events as the primary objects of prediction, thereby shifting the focus from point forecasts to event-based forecasts.
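To make the reframing concrete, here is a minimal sketch of turning a raw power series into an event-level target at a forecast origin. The labeling rule (largest deviation from the origin value within the horizon, counted as a ramp if it reaches a threshold) and the names `EventTarget` and `event_target` are hypothetical choices for illustration; the paper's exact event definition is not given in this summary. A classifier trained on `occurred` would supply the event probability, and regressors on `magnitude` and `duration` the other two quantities.

```python
from dataclasses import dataclass

@dataclass
class EventTarget:
    occurred: bool    # did a ramp occur within the horizon?
    magnitude: float  # signed power change in MW (0.0 if no event)
    duration: int     # steps from the origin to the extreme value (0 if no event)

def event_target(series, origin, horizon, threshold):
    """Turn the window after `origin` into an event-level target (hypothetical rule).

    The event is the largest deviation from the value at the origin within the
    horizon; it counts as a ramp if its absolute size reaches `threshold`.
    """
    if origin + horizon >= len(series):
        raise ValueError("not enough data after origin for this horizon")
    base = series[origin]
    window = series[origin + 1: origin + 1 + horizon]
    # (step, value) pair whose value deviates most from the origin value
    step, value = max(enumerate(window, start=1), key=lambda kv: abs(kv[1] - base))
    change = value - base
    if abs(change) >= threshold:
        return EventTarget(True, change, step)
    return EventTarget(False, 0.0, 0)

power = [20.0, 21.0, 20.0, 35.0, 50.0, 52.0, 51.0]
print(event_target(power, origin=0, horizon=5, threshold=15.0))
# → EventTarget(occurred=True, magnitude=32.0, duration=5)
```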

Feature extraction for Ramp Event identification builds on RBAθ, the enhanced Ramping Behaviour Analysis at the core of the framework, which extracts ramp events from the power time series and characterizes them by features such as rate of change, magnitude, and duration. Isolating the parts of the series most relevant to ramps reduces dimensionality and improves the signal-to-noise ratio compared with modeling every raw data point. The resulting event features serve as inputs to the forecasting models, which predict future ramp characteristics from historical data and the patterns identified in it.
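The summary does not spell out how RBAθ detects ramps, so the following is a deliberately simple, hypothetical stand-in (not RBAθ itself) that shows what "rate of change, magnitude, and duration" features look like in practice. It groups consecutive same-direction steps into segments and keeps those whose total change reaches a threshold `theta`; the names `Ramp` and `detect_ramps` and the `min_step` parameter are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Ramp:
    start: int        # index where the ramp begins
    duration: int     # number of steps
    magnitude: float  # signed total change
    rate: float       # magnitude / duration

def detect_ramps(series, theta, min_step=0.0):
    """Group same-direction steps into segments; keep those with |change| >= theta.

    Simplified stand-in for illustration only; NOT the RBA_theta algorithm.
    """
    ramps, start = [], None
    diffs = [b - a for a, b in zip(series, series[1:])]
    for i, d in enumerate(diffs + [0.0]):  # sentinel flushes the last segment
        moving = abs(d) > min_step
        same_dir = start is not None and moving and (d > 0) == (diffs[start] > 0)
        if same_dir:
            continue  # current segment keeps going
        if start is not None:  # close the segment covering diffs[start:i]
            mag = series[i] - series[start]
            if abs(mag) >= theta:
                dur = i - start
                ramps.append(Ramp(start, dur, mag, mag / dur))
            start = None
        if moving:
            start = i  # a new segment begins with this step
    return ramps

power = [10.0, 10.0, 20.0, 30.0, 40.0, 40.0, 35.0, 10.0, 10.0]
for ramp in detect_ramps(power, theta=25.0):
    print(ramp)
```

Each `Ramp` record is a compact event description that a forecasting model can consume in place of the raw points.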

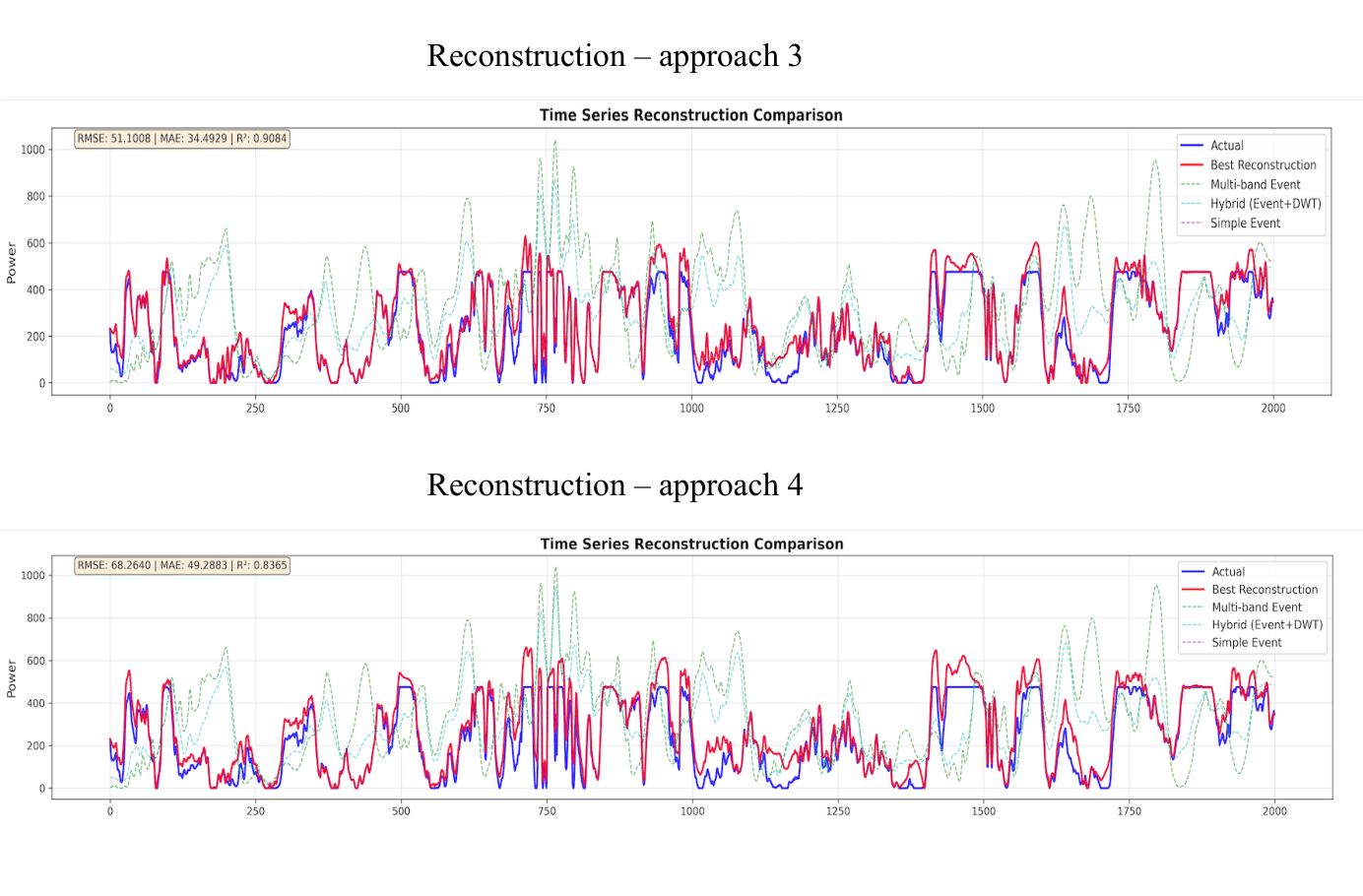

Event-centric forecasting demonstrates better predictive performance than conventional methods by directly modeling the dynamics of ramp events: their initiation, intensity, and temporal extent. Power trajectories reconstructed from the predicted events reach a coefficient of determination (R²) of approximately 0.90, meaning the reconstruction explains about 90% of the variance in the observed time series. This accuracy and robustness stem from focusing on the underlying event mechanisms rather than on broad statistical patterns, which allows significant changes in the data to be identified and predicted more precisely.
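The sketch below shows how a reconstruction R² of this kind is computed, using R² = 1 − SS_res / SS_tot. The piecewise-linear `reconstruct` function (hold the level between ramps, change it linearly during each ramp) is a hypothetical reconstruction rule, and the toy numbers are not the paper's data; here one ramp is slightly mis-shaped, so the score falls below 1.

```python
def reconstruct(base, length, ramps):
    """Rebuild a piecewise-linear trajectory from (start, duration, magnitude) ramps.

    Hypothetical representation: ramps are non-overlapping; the level is held
    constant between ramps and changes linearly during each ramp.
    """
    traj = [float(base)] * length
    level = float(base)
    for start, duration, magnitude in sorted(ramps):
        for t in range(start + 1, length):
            k = min(t - start, duration)          # steps completed in this ramp
            traj[t] = level + magnitude * k / duration
        level += magnitude                        # new plateau after the ramp
    return traj

def r2_score(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

actual = [10.0, 10.0, 20.0, 30.0, 40.0, 40.0, 35.0, 10.0, 10.0]
recon = reconstruct(10.0, len(actual), [(1, 3, 30.0), (5, 2, -30.0)])
print(round(r2_score(actual, recon), 3))  # → 0.931
```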

Unveiling Hidden Rhythms: Frequency-Aware Modeling

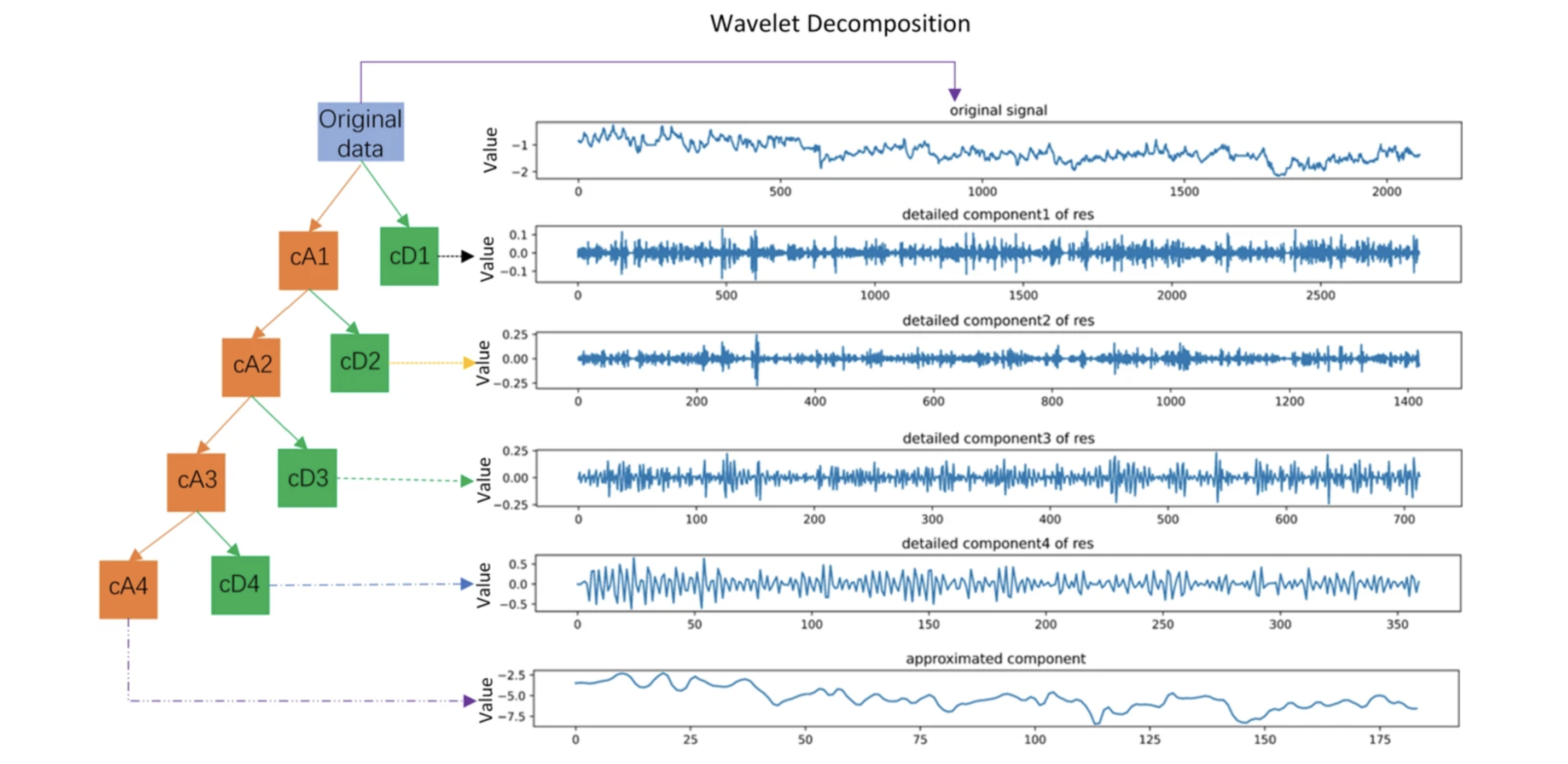

Frequency-Aware Modeling uses techniques such as the Discrete Wavelet Transform (DWT) to decompose a time series into constituent frequency bands. At each level the DWT splits the signal into a coarse approximation (lower frequencies) and a detail component (higher frequencies); repeating the split on the approximation yields a hierarchy of bands from fine to coarse, exposing patterns that may be obscured in the raw series. Because wavelets are localized in both time and frequency, the DWT provides a time-frequency representation suited to non-stationary signals whose frequency content changes over time. Analyzing the individual bands lets researchers isolate specific signal characteristics, such as short-duration transients or long-term trends, and can improve the accuracy of subsequent analysis or predictive modeling.
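A minimal multilevel DWT, sketched with the Haar wavelet because it fits in a few lines; the wavelet family and number of levels used in the paper are not stated in this summary, so both are assumptions here. The output follows the common coarse-to-fine ordering `[cA_L, cD_L, ..., cD_1]`, and the inverse recovers the signal exactly.

```python
import math

SQRT2 = math.sqrt(2.0)

def haar_step(x):
    """One level of the orthonormal Haar DWT: (approximation, detail)."""
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail

def haar_dwt(x, levels):
    """Multilevel Haar DWT returning [cA_L, cD_L, ..., cD_1] (coarse to fine)."""
    if len(x) % (2 ** levels):
        raise ValueError("len(x) must be divisible by 2**levels")
    approx, details = list(x), []
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.insert(0, detail)  # coarser bands go to the front
    return [approx] + details

def haar_idwt(coeffs):
    """Invert haar_dwt by undoing one level at a time, coarsest first."""
    approx = coeffs[0]
    for detail in coeffs[1:]:
        x = []
        for a, d in zip(approx, detail):
            x.extend([(a + d) / SQRT2, (a - d) / SQRT2])
        approx = x
    return approx

signal = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
coeffs = haar_dwt(signal, levels=3)
print([len(c) for c in coeffs])  # → [1, 1, 2, 4]
```

In practice a library such as PyWavelets (`pywt.wavedec` / `pywt.waverec`) would typically be used, which also offers smoother wavelet families and boundary-handling modes.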

Integrating the DWT with deep learning architectures, specifically Long Short-Term Memory (LSTM) networks and Transformer models, enables multi-scale feature extraction from time-series data. The wavelet components, each representing a different scale of detail, are supplied as input features to the LSTM or Transformer, which can then learn temporal dependencies at several levels of granularity, capturing both short-term fluctuations and long-term trends. This combination addresses limitations of traditional time-series analysis and improves the model’s ability to identify complex patterns, particularly in noisy or non-stationary data.
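One common way (assumed here; the summary does not say how the paper fuses the bands) to feed wavelet components to an LSTM or Transformer is a multiresolution analysis: each band is reconstructed to full length so that bands become parallel input channels summing back to the original series, and the channels are then cut into sliding windows of shape `[samples][lookback][channels]`. The model itself is not shown; `haar_mra` and `make_windows` are hypothetical helper names.

```python
import math

S = math.sqrt(2.0)

def haar_mra(x, levels):
    """Split x into full-length bands [D1, ..., DL, A_L] that sum back to x.

    Each band inverts an orthonormal Haar DWT with all other coefficients zeroed.
    """
    if len(x) % (2 ** levels):
        raise ValueError("len(x) must be divisible by 2**levels")
    approx, details = list(x), []          # details[0] is the finest band D1
    for _ in range(levels):
        pairs = list(zip(approx[0::2], approx[1::2]))
        details.append([(a - b) / S for a, b in pairs])
        approx = [(a + b) / S for a, b in pairs]

    def inverse(a, ds):                     # ds ordered coarsest -> finest
        for d in ds:
            out = []
            for ai, di in zip(a, d):
                out.extend([(ai + di) / S, (ai - di) / S])
            a = out
        return a

    zeros = [[0.0] * len(d) for d in details]
    bands = []
    for j in range(levels):
        ds = [d if k == j else z for k, (d, z) in enumerate(zip(details, zeros))]
        bands.append(inverse([0.0] * len(approx), ds[::-1]))
    bands.append(inverse(approx, zeros[::-1]))
    return bands

def make_windows(bands, lookback, target):
    """Stack bands as channels; return X[sample][step][channel] and next-step targets."""
    steps = list(zip(*bands))               # one channel vector per time step
    X = [steps[t - lookback:t] for t in range(lookback, len(steps))]
    y = [target[t] for t in range(lookback, len(steps))]
    return X, y

power = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
bands = haar_mra(power, levels=2)
X, y = make_windows(bands, lookback=4, target=power)
print(len(X), len(X[0]), len(X[0][0]))  # → 4 4 3
```

A design caveat: decomposing the whole series at once lets a band value at time t depend on samples later in the same dyadic block, which leaks future information; a real forecasting pipeline would compute the transform only on data available at each forecast origin.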

Multi-Armed Bandit (MAB) algorithms address feature selection in time-series forecasting by adaptively allocating trials among candidate features according to their observed performance. Unlike static feature selection methods, a MAB approach treats each feature as an “arm”, balancing exploration (testing less-used features) with exploitation (favoring features that currently yield high predictive accuracy). This adaptivity is particularly useful in non-stationary environments, where the relevance of features changes over time. Algorithms such as Upper Confidence Bound (UCB) and Thompson Sampling are commonly used to decide which features to include at each step, concentrating on the most informative features while continuing to learn as the data evolve. Compared with a fixed feature set, this can make the model more robust and accurate.
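A minimal UCB1 loop illustrating the idea: each candidate feature is an arm, and the score `mean reward + sqrt(2 ln t / n)` trades off exploitation (first term) against exploration (second term). The feature names, the improvement probabilities, and the simulated Bernoulli reward ("did adding this feature improve validation error?") are all hypothetical; the paper's actual reward signal and bandit variant are not described in this summary.

```python
import math
import random

def ucb1_select(counts, values, t, c=2.0):
    """Return the arm maximizing mean reward + sqrt(c * ln t / n)."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # play every arm once before using the bound
    return max(range(len(counts)),
               key=lambda a: values[a] + math.sqrt(c * math.log(t) / counts[a]))

def run_bandit(reward_fn, n_arms, rounds):
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean reward per arm
    for t in range(1, rounds + 1):
        arm = ucb1_select(counts, values, t)
        r = reward_fn(arm)
        counts[arm] += 1
        values[arm] += (r - values[arm]) / counts[arm]  # incremental mean update
    return counts, values

# Hypothetical candidate features and the (unknown to the agent) probability
# that adding each one improves validation error.
features = ["wavelet_D1", "wavelet_D2", "wavelet_A2"]
p_improve = [0.2, 0.5, 0.8]
rng = random.Random(0)
counts, values = run_bandit(lambda a: float(rng.random() < p_improve[a]),
                            len(features), 2000)
print(features[counts.index(max(counts))], counts)
```

Over time the most useful feature receives most of the trials, while the others are still sampled occasionally, which is what lets the selection adapt if their usefulness shifts.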

Orchestrating Resilience: Intelligent Prediction Systems

Agentic Workflow Orchestration introduces a system that switches between forecasting models, such as LSTMs and Transformers, according to the characteristics of the incoming data. Rather than relying on a single, static predictive algorithm, the framework continuously assesses real-time data characteristics and selects the model best suited to current conditions. This mimics expert decision-making by exploiting each model’s strengths, for example using Transformers to capture long-range dependencies when appropriate, or LSTMs for their efficiency with sequential data. The result is a prediction system that can maintain accuracy as data patterns evolve, an advantage over traditional, inflexible forecasting methods.
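A toy version of the selection step, under a stated assumption: the router scores every candidate by its one-step-ahead error on the most recent data and picks the lowest. The three candidates (`persistence`, `drift`, `mean3`) are hypothetical lightweight stand-ins for the LSTM and Transformer forecasters, and recent backtest error is only one possible selection signal; the paper's agentic layer may use other data characteristics.

```python
from statistics import mean

# Hypothetical stand-ins for the candidate forecasters (e.g. LSTM, Transformer).
def persistence(history):
    return history[-1]

def drift(history):
    return history[-1] + (history[-1] - history[-2])

def mean3(history):
    return mean(history[-3:])

CANDIDATES = {"persistence": persistence, "drift": drift, "mean3": mean3}

def recent_mae(model, series, lookback):
    """One-step-ahead mean absolute error over the last `lookback` points."""
    return mean(abs(model(series[:t]) - series[t])
                for t in range(len(series) - lookback, len(series)))

def select_model(series, lookback=6):
    """Pick the candidate with the lowest recent backtest error."""
    scores = {name: recent_mae(fn, series, lookback) for name, fn in CANDIDATES.items()}
    return min(scores, key=scores.get), scores

trend = [4.0 * i for i in range(10)]   # steady ramp
choppy = [1.0, 3.0] * 5                # oscillating output
print(select_model(trend)[0], select_model(choppy)[0])  # → drift mean3
```

The same data stream can favor different models in different regimes, which is the behavior the orchestration layer is meant to exploit.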

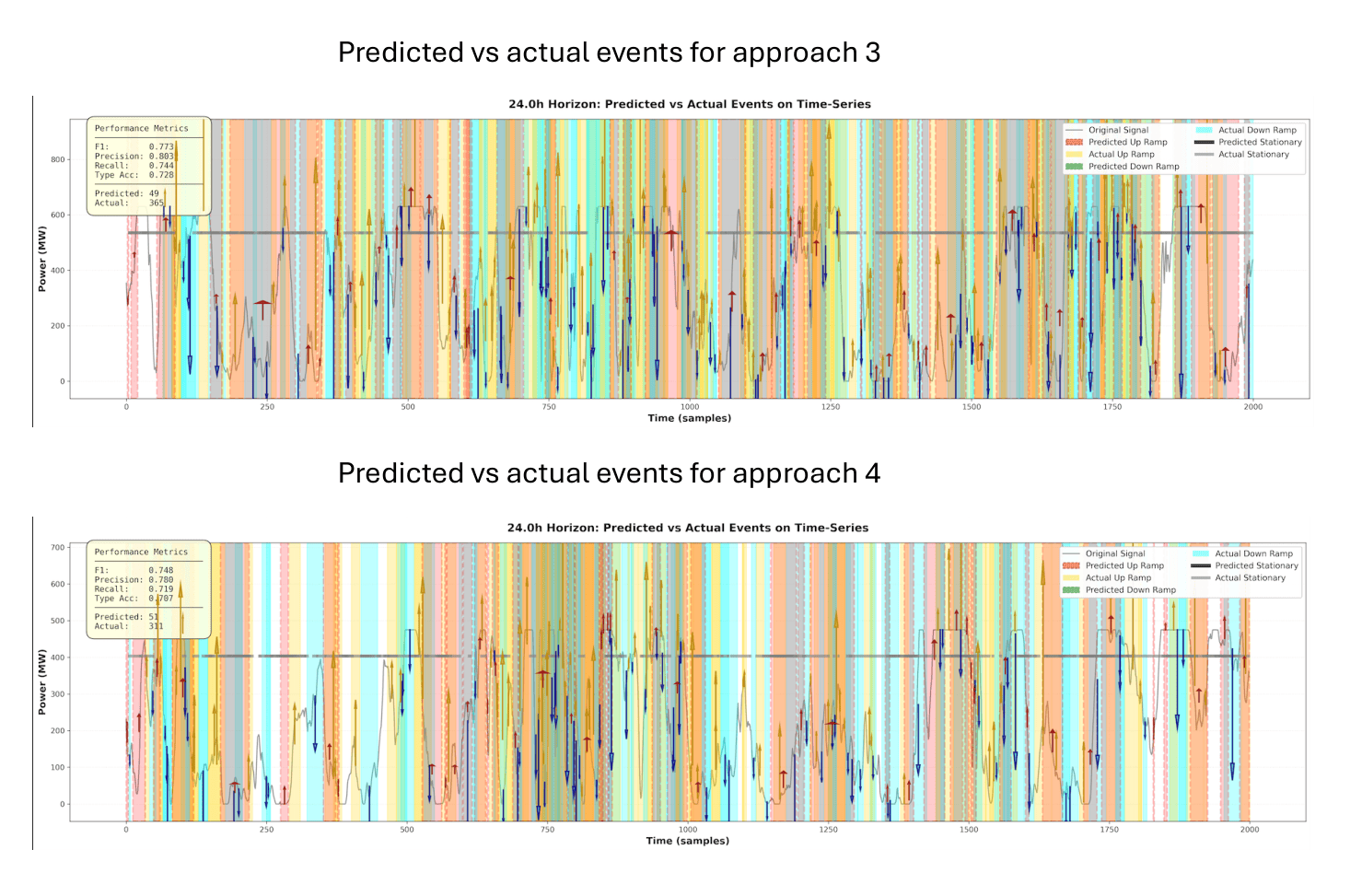

The system’s predictive power stems from an adaptive methodology that combines the capabilities of several forecasting models. Rather than relying on a single, potentially limited approach, it selects the most suitable model, be it an LSTM or a Transformer, based on the characteristics of incoming data. This flexibility improves predictive accuracy and resilience under fluctuating conditions, and it underpins the system’s performance on previously unseen datasets without any model fine-tuning, minimizing the need for costly and time-consuming recalibration.

The forecasting system demonstrates zero-shot transfer: it generalizes to entirely new environments without any site-specific training data. Validated on unseen wind farms, it achieves an event prediction precision of approximately 0.60, meaning that roughly six in ten predicted ramp events correspond to events that actually occurred, a notable result given the inherent variability of wind energy production. Because the patterns it learns carry over across sites, the system avoids calibrating an individual model for each wind farm, which points to potential for wide deployment in renewable energy management even where localized historical data are unavailable.
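Precision here is TP / (TP + FP) over predicted events. The summary does not say how a predicted event is matched to an observed one, so the sketch below assumes a common convention: a predicted onset counts as a true positive if an observed onset lies within `tolerance` steps, with each observed event matched at most once (greedy, one-to-one). The onset times in the example are made up.

```python
def event_precision(predicted, actual, tolerance):
    """Precision = TP / (TP + FP) for predicted event onset times.

    A prediction is a true positive if an unmatched actual onset lies within
    `tolerance` steps of it; each actual onset can be matched at most once.
    """
    if not predicted:
        return 0.0
    unmatched = sorted(actual)
    tp = 0
    for p in sorted(predicted):
        hit = next((a for a in unmatched if abs(a - p) <= tolerance), None)
        if hit is not None:
            unmatched.remove(hit)
            tp += 1
    return tp / len(predicted)

print(event_precision([10, 25, 40, 70], [11, 24, 55], tolerance=2))  # → 0.5
```

The tolerance matters: a stricter window lowers precision for forecasts that are right about the event but slightly off in timing, so reported precision figures should always be read together with the matching rule.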

A Systemic View: Modeling Interdependencies

The interconnectedness of modern power grids means that a sudden, large shift in renewable generation, a Ramp Event, often does not occur in isolation. To capture these temporal dependencies, researchers are turning to Hawkes causality, which builds on Hawkes processes: self-exciting point processes in which each event temporarily raises the likelihood of further events, widely used for sequences such as earthquake aftershocks and social media activity. Rather than only forecasting that a Ramp Event will occur, this approach estimates how likely one event is to trigger others, revealing cascade effects. Identifying such triggering mechanisms, for example a rapid drop in solar output followed by increased demand on transmission lines, gives grid operators critical insight into systemic vulnerabilities and supports proactive intervention instead of purely reactive responses to instability.
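A minimal sketch of a multivariate Hawkes intensity with an exponential kernel, assuming the parametrization λ_j(t) = μ_j + Σ_i Σ_{t_k of type i, t_k < t} α_ij · exp(−β(t − t_k)). In this form, one reading of Hawkes causality is that event type i influences type j exactly when α_ij > 0. The two event types, the parameter values, and the shared decay `beta` are hypothetical; fitting the parameters (e.g. by maximum likelihood) is not shown.

```python
import math

def hawkes_intensity(t, events, mu, alpha, beta):
    """Conditional intensity of each event type at time t (exponential kernel).

    events: (time, type) pairs; only events strictly before t contribute.
    mu[j]: baseline rate of type j.
    alpha[i][j]: jump in the type-j intensity caused by one type-i event.
    beta: decay rate shared by all type pairs (a simplifying assumption).
    """
    lam = list(mu)
    for s, i in events:
        if s < t:
            decay = math.exp(-beta * (t - s))
            for j in range(len(mu)):
                lam[j] += alpha[i][j] * decay
    return lam

# Hypothetical types: 0 = up-ramp, 1 = down-ramp. Up-ramps excite down-ramps
# (alpha[0][1] > 0) but down-ramps do not excite up-ramps (alpha[1][0] == 0).
mu = [0.10, 0.05]
alpha = [[0.30, 0.40],
         [0.00, 0.20]]
print(hawkes_intensity(2.0, [(1.0, 0)], mu, alpha, beta=1.0))
```

After an up-ramp at t = 1, both intensities rise and then decay back toward their baselines; a down-ramp leaves the up-ramp intensity untouched, which is the asymmetric triggering structure a causal analysis would try to recover from data.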

Modeling the temporal dependencies between ramp events with Hawkes causality does more than improve forecasts. By revealing how one event can trigger a cascade of instability, it exposes systemic vulnerabilities: identifying causal links can point to previously hidden contributors to grid stress, such as specific generator responses or transmission line constraints. That understanding supports proactive mitigation, including changes to grid operations or infrastructure that prevent widespread disruptions before they occur. The focus shifts from predicting when a ramp event will happen to understanding why it happens, and ultimately how to build a more robust and adaptive power system.

A truly robust power grid demands more than predicting failures; it requires a proactive, intelligent response to evolving conditions. Recent work brings together event-centric forecasting, which estimates the likelihood of specific disturbances, and intelligent orchestration, which dynamically selects the forecasting workflow best suited to current conditions. Underpinning both with causal modeling lets operators understand why certain events occur and how they influence one another. Together these capabilities move beyond reactive measures toward a grid that anticipates, adapts, and maintains stability despite growing complexity and unforeseen challenges, a fundamental shift toward a more resilient, future-proof energy infrastructure.

The pursuit of predictive accuracy, as demonstrated by this agentic workflow, often leads to intricate systems built on the assumption that comprehensive modeling will yield perfect foresight. Such constructions, however, invariably trade flexibility for optimization. A remark often attributed to Paul Erdős captures the tension: “A mathematician knows how to solve any problem; he just doesn’t know if he has enough time.” The framework’s dynamic model selection, while elegant, is still a temporary solution. The inherent unpredictability of wind power, embodied in the very ramp events it aims to forecast, suggests that even the most sophisticated agentic system will eventually encounter scenarios outside its predictive capacity. Scalability, in this context, is not about handling more data but about accepting the inevitability of unforeseen circumstances.

The Horizon of Prediction

This work, focused on anticipating ramp events in wind power, inevitably reveals the limits of even the most sophisticated forecasting. The agentic system, dynamically selecting models, is not a solution to uncertainty – it is an acknowledgment of it. Each chosen model represents a temporary respite from the inevitable divergence between prediction and reality, a narrowing of the cone of possibility that will, in time, widen once more. The system does not conquer chaos; it navigates it, delaying the moment when the inherent unpredictability of the system overwhelms the forecast.

The emphasis on frequency-aware modeling, while promising, merely refines the granularity of the failure. One can model the shape of uncertainty with increasing precision, but the fundamental problem remains: systems connected to the real world are, by definition, subject to external forces. To believe that improved modeling will eliminate error is to mistake a local minimum for a global solution. The architecture encourages dependency – dependency on data, on the chosen models, on the very notion of a stable relationship between past and future.

Future work will undoubtedly explore more complex agentic architectures, perhaps even attempting to model the confidence of the models themselves. But the true horizon lies not in better prediction, but in better adaptation. The eventual task is not to foresee the fall, but to build systems resilient enough to withstand it – systems that understand that every connection is a potential point of failure, and that the most elegant architecture is often the simplest, most readily disassembled one.

Original article: https://arxiv.org/pdf/2602.06097.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-09 12:21