Author: Denis Avetisyan

Researchers have developed a statistically rigorous method for modeling dependencies within network data, offering improvements over existing approaches.

This paper introduces a flexible network propagation regression framework for analyzing relationships in graph data, demonstrating superior performance in simulations and a real-world social media study.

Existing network regression models often rely on restrictive assumptions or complex estimation procedures, limiting their broad applicability. This paper introduces ‘A Propagation Framework for Network Regression’, a unified and computationally efficient approach that models outcomes as functions of covariates propagating through network connections, capturing both direct and indirect effects. Demonstrating superior performance in simulations and a real-world social media analysis, this framework offers a statistically sound and interpretable alternative to established methods. Could this propagation approach unlock more robust insights from increasingly complex relational data across diverse fields?

The Illusion of Independence: Why Networks Break Regression

Traditional regression analysis assumes that prediction errors are independent of one another, a condition rarely met in networked data. Individuals in a network influence one another, and those dependencies ripple through the data. If one person’s outcome affects another’s, their errors are no longer isolated: a positive error for one individual is likely to be mirrored in the other, violating the independence assumption. Standard regression can then produce biased parameter estimates (when the peer influence itself is left out of the model) and inflated measures of statistical significance, leading to inaccurate conclusions about the relationships within the network. Ignoring these dependencies undermines analyses in fields ranging from the spread of innovations to the prediction of health outcomes.

The reliability of statistical inference hinges on this independence assumption, and it falters whenever individuals influence each other, as is common in social networks, epidemiological studies, and economic models. Correlated errors break the derivation behind the usual standard-error formulas, so those formulas understate the true uncertainty: confidence intervals come out too narrow and p-values too small. Failing to address these dependencies therefore distorts our understanding of phenomena driven by interconnectedness and hinders accurate prediction and effective intervention.
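A quick simulation makes the understated uncertainty concrete. It is not taken from the paper: the ring network, the neighborhood radius, and the sample sizes are arbitrary assumptions chosen for illustration. Both the covariate and the error are neighborhood averages on the ring, so nearby nodes are correlated, and the script compares the actual spread of the OLS slope across replications with the standard error the iid formula reports.

```python
import random
import statistics


def neighborhood_average(values, radius):
    """Average each node's value over its closed neighborhood on a ring network.

    Node i is linked to nodes i - radius, ..., i + radius (indices wrap around),
    so the averaged values are positively correlated between nearby nodes.
    """
    n = len(values)
    width = 2 * radius + 1
    return [sum(values[(i + d) % n] for d in range(-radius, radius + 1)) / width
            for i in range(n)]


def ols_slope_and_naive_se(x, y):
    """OLS slope of y on x (with intercept) and its textbook SE, which assumes iid errors."""
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return slope, (rss / (n - 2) / sxx) ** 0.5


def simulate(n=300, radius=5, beta=1.0, reps=200, seed=0):
    """Return (actual SD of the slope across replications, average naive SE)."""
    rng = random.Random(seed)
    slopes, naive_ses = [], []
    for _ in range(reps):
        # Covariate and error are both smoothed over the network.
        x = neighborhood_average([rng.gauss(0.0, 1.0) for _ in range(n)], radius)
        e = neighborhood_average([rng.gauss(0.0, 1.0) for _ in range(n)], radius)
        y = [beta * xi + ei for xi, ei in zip(x, e)]
        slope, se = ols_slope_and_naive_se(x, y)
        slopes.append(slope)
        naive_ses.append(se)
    return statistics.stdev(slopes), statistics.fmean(naive_ses)


actual_sd, naive_se = simulate()
print(f"actual SD of slope: {actual_sd:.3f}  average naive SE: {naive_se:.3f}")
```

With these settings the actual standard deviation of the slope comes out well above the average naive SE. Setting `radius=0` removes the network links, and the two numbers then roughly agree.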

The growing prevalence of networked data, from social interactions to biological systems, calls for a shift away from these traditional techniques. Ordinary least squares regression assumes independent errors, yet interconnected observations share influence, contagion, or common environments, which induces exactly the correlations that assumption rules out. Researchers have therefore developed models designed to incorporate network effects, such as spatial autoregressive models and stochastic actor-oriented models. These techniques explicitly account for how an individual’s outcome depends on the characteristics and behaviors of their connections, giving a more accurate picture of complex phenomena and more reliable inference in interconnected systems.
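Spatial autoregression is a useful reference point. In its standard textbook form, y = ρWy + Xβ + ε, each outcome depends on the weighted average of neighboring outcomes. The sketch below is that textbook model, not the paper’s method; it assumes a row-normalized weight matrix W and treats Xβ + ε as a given per-node `signal`. Solving by fixed-point iteration shows that the reduced form is a sum of propagated terms, with k-hop neighbors weighted by ρ^k.

```python
def row_normalize(adj):
    """Neighbor-averaging weights W: each nonzero row of the adjacency matrix sums to 1."""
    weights = []
    for row in adj:
        degree = sum(row)
        weights.append([a / degree if degree else 0.0 for a in row])
    return weights


def sar_outcome(adj, signal, rho, tol=1e-12, max_iter=10_000):
    """Solve y = rho * W y + signal by fixed-point iteration.

    `signal` stands for X beta + error at each node. For row-normalized W and
    |rho| < 1 the iteration converges to (I - rho W)^(-1) signal, which equals
    the series sum_k rho^k W^k signal: k-hop neighbors contribute with weight rho^k.
    """
    if not abs(rho) < 1:
        raise ValueError("need |rho| < 1 for convergence")
    w = row_normalize(adj)
    y = list(signal)
    for _ in range(max_iter):
        new_y = [rho * sum(wij * yj for wij, yj in zip(row, y)) + s
                 for row, s in zip(w, signal)]
        if max(abs(a - b) for a, b in zip(new_y, y)) < tol:
            return new_y
        y = new_y
    raise RuntimeError("fixed-point iteration did not converge")


# Path network 0 - 1 - 2 with a unit shock at node 0.
path_adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
y = sar_outcome(path_adj, [1.0, 0.0, 0.0], rho=0.5)
print([round(v, 4) for v in y])  # [1.1667, 0.3333, 0.1667]
```

The shock at node 0 reaches node 2 only through node 1, and its effect shrinks with each hop.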

Failing to account for network effects therefore compromises models of complex social and biological processes. The spread of ideas, purchasing behavior, and infectious diseases are not the sum of isolated individual decisions; they emerge from webs of interaction in which individuals affect one another through social pressure, direct contact, or shared information. Models that ignore this structure lose both accuracy and predictive power, which is why an understanding of network dynamics is essential in fields ranging from public health and marketing to political science and sociology.

Propagating Influence: A Framework for Dependency

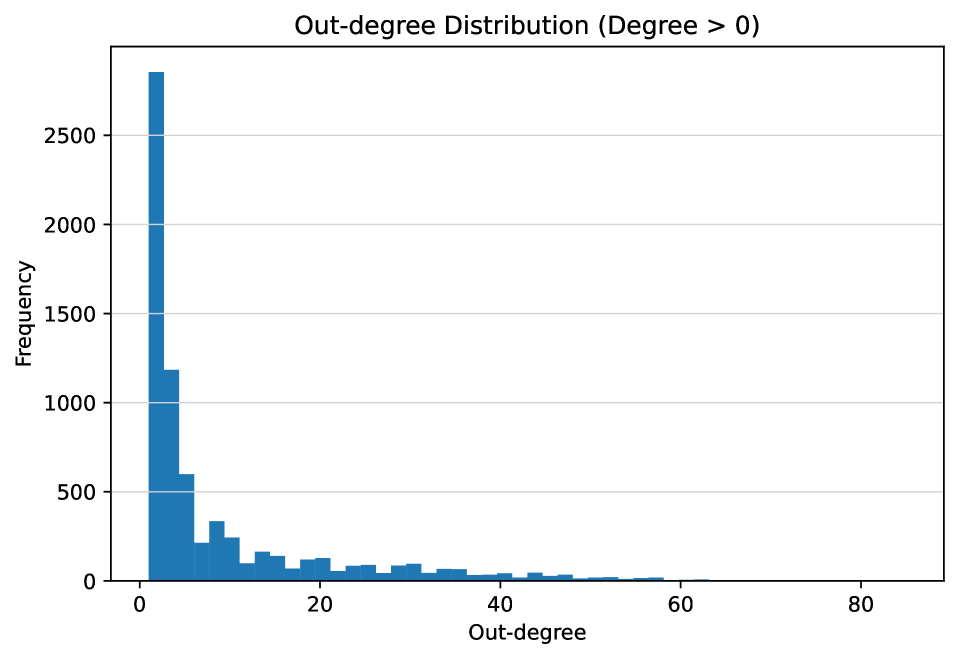

Network Propagation Regression (NPR) builds upon standard regression models by incorporating information about the relationships between data points, represented by an AdjacencyMatrix. This matrix defines the network structure, indicating connections or dependencies between individuals or nodes. Instead of treating each data point as independent, NPR leverages these connections to propagate covariate information across the network. Specifically, the value of a covariate for a given data point is adjusted based on the values of its neighbors, as defined by the AdjacencyMatrix. This process effectively diffuses information throughout the network, allowing the regression model to account for the influence of interconnected data points and potentially improve predictive accuracy in scenarios where independence assumptions are violated.

The PropagationOrder parameter in Network Propagation Regression controls the depth of information diffusion across the network. A value of 0 indicates no propagation, equivalent to standard regression. Values greater than 0 specify the number of ‘hops’ or network connections through which covariate information is propagated; for example, PropagationOrder = 1 propagates information from immediate neighbors, while PropagationOrder = 2 includes information from neighbors of neighbors. Each propagation step involves averaging the covariate values of connected nodes, weighted by the AdjacencyMatrix, and adding this average to the target node’s predicted value, thus incorporating the influence of increasingly distant network peers based on the specified order.
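One way to read the PropagationOrder description is as building propagated copies of each covariate, one per hop. The sketch below assumes that neighbor averaging uses the row-normalized adjacency matrix W = D⁻¹A (each row sums to one); the function names are hypothetical, and the paper may normalize or weight the matrix differently.

```python
def row_normalize(adj):
    """Neighbor-averaging weights: each nonzero row of the adjacency matrix sums to 1.
    Isolated nodes (all-zero rows) keep a row of zeros."""
    weights = []
    for row in adj:
        degree = sum(row)
        weights.append([a / degree if degree else 0.0 for a in row])
    return weights


def matvec(matrix, vector):
    """Plain matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, vector)) for row in matrix]


def propagate_covariate(adj, x, order):
    """Return [x, W x, W^2 x, ..., W^order x] for one covariate column x.

    order = 0 returns only x itself, i.e. standard regression features.
    """
    w = row_normalize(adj)
    blocks = [list(x)]
    for _ in range(order):
        blocks.append(matvec(w, blocks[-1]))  # one more hop of neighbor averaging
    return blocks


# Path network 0 - 1 - 2; the covariate is nonzero only at node 0.
path_adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(propagate_covariate(path_adj, [1.0, 0.0, 0.0], order=2))
# [[1.0, 0.0, 0.0], [0.0, 0.5, 0.0], [0.5, 0.0, 0.5]]
```

After one hop the value at node 0 has reached its neighbor (node 1); after two hops it has reached the neighbor of that neighbor (node 2), and also flowed back to node 0.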

Information diffusion in Network Propagation Regression works by iteratively updating each node’s value using the values of its directly connected neighbors, as defined by the AdjacencyMatrix. In effect, each node ‘borrows’ information: its initial covariate value is combined with a weighted average of its neighbors’ values, where the weights come from the network structure and the PropagationOrder parameter sets how many such iterations are performed. Each iteration carries information one hop further across the network, so the influence of increasingly distant peers enters the prediction for each node. The final predicted value is a composite of the node’s original covariate and the information diffused to it from the network.
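Written as a formula, one natural reading of this composite prediction for a single covariate x is the following. The row normalization and the separate coefficient for each propagation order are assumptions, not details confirmed by the summary; here A is the AdjacencyMatrix, K is the PropagationOrder, and W⁰ is the identity.

```latex
\hat{y}_i \;=\; \sum_{k=0}^{K} \beta_k \,\bigl(W^{k} x\bigr)_i,
\qquad W = D^{-1} A, \qquad D = \operatorname{diag}(A\mathbf{1})
```

With K = 0 the sum collapses to \hat{y}_i = \beta_0 x_i, which is standard regression; each additional order adds the contribution of peers one hop further away.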

Traditional regression models often assume statistical independence between observations, a limitation when analyzing networked data where individuals are influenced by their connections. Network Propagation Regression addresses this by building the network’s AdjacencyMatrix directly into the regression model. This relaxes the independence assumption: a node’s outcome is modeled as depending not only on its own covariates but also on the characteristics of its neighbors. As a result, the framework can deliver more accurate estimates and better predictions on data with inherent network dependencies, such as social networks, biological systems, and communication networks.

Extending the Reach: Modeling Diverse Outcomes

Network Propagation Regression can be adapted to predict both binary and time-to-event outcomes. NetworkLogisticRegression extends the framework to model binary variables by incorporating a logistic link function, enabling prediction of probabilities for categorical outcomes. For time-to-event data, NetworkCoxRegression utilizes the Cox proportional hazards model, allowing for prediction of time until an event occurs while accounting for censoring. Both extensions retain the core network propagation mechanism for feature aggregation but modify the link function to align with the requirements of their respective outcome types, maintaining computational efficiency.

In both extensions, only the final transformation of the linear predictor changes. NetworkLogisticRegression passes the propagated linear predictor through the logistic function to obtain a probability for the binary outcome. NetworkCoxRegression models the hazard as an unspecified baseline hazard multiplied by the exponential of the linear predictor, so covariate effects act multiplicatively on the risk of the event. Because the propagation step itself is unchanged, the framework accommodates these outcome types while preserving its computational efficiency and scalability.
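The two outcome-specific pieces can be sketched in isolation. This is an illustration under stated assumptions, not the paper’s implementation: `eta` stands for a linear predictor already built from propagated covariates, and the Cox function assumes no tied event times (tie corrections such as Breslow or Efron are omitted).

```python
import math


def logistic_probability(eta):
    """Inverse logit: map a linear predictor to a probability in (0, 1)."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    z = math.exp(eta)  # avoids overflow in exp(-eta) for large negative eta
    return z / (1.0 + z)


def cox_partial_log_likelihood(eta, times, events):
    """Cox partial log-likelihood, assuming no tied event times.

    eta[i]    : linear predictor of subject i (hazard = h0(t) * exp(eta[i]))
    times[i]  : observed time, either an event time or a censoring time
    events[i] : 1 if the event was observed, 0 if the subject was censored
    Censored subjects add no term of their own but stay in the risk sets of
    events that happen before they leave the study.
    """
    total = 0.0
    for i, (t_i, d_i) in enumerate(zip(times, events)):
        if not d_i:
            continue
        risk = sum(math.exp(eta_j) for eta_j, t_j in zip(eta, times) if t_j >= t_i)
        total += eta[i] - math.log(risk)
    return total


print(logistic_probability(0.0))  # 0.5
print(round(cox_partial_log_likelihood([0.0, 0.0], [1.0, 2.0], [1, 0]), 4))  # -0.6931
```

The baseline hazard h0(t) cancels out of the partial likelihood, which is why the Cox extension only needs the linear predictor and the risk sets.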

Parameter estimation in the Network Propagation Regression framework uses two primary methods: maximum likelihood estimation (MLE) and the generalized method of moments (GMM). MLE chooses the parameter values that maximize the likelihood of the observed data under an assumed probability distribution. GMM instead chooses parameters that minimize the distance between moments implied by the model and the corresponding sample moments computed from the observed data, which requires fewer distributional assumptions. Both methods are computationally efficient, allowing rapid model calibration even on large network datasets, and both provide statistically sound parameter estimates. The choice between them typically depends on the characteristics of the data and on how much one is willing to assume about the error distribution.
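For a continuous outcome with independent Gaussian errors, the maximum-likelihood estimate of the coefficients on the propagated covariates is ordinary least squares. The sketch below works under that assumption only (the GMM route is not shown); it solves the normal equations in pure Python, and the function name and toy data are hypothetical.

```python
def fit_least_squares(columns, y):
    """Fit y ~ sum_k beta_k * columns[k] by solving (Z^T Z) beta = Z^T y.

    Uses Gaussian elimination with partial pivoting. Under iid Gaussian errors
    this least-squares solution is also the maximum-likelihood estimate of beta.
    """
    p = len(columns)
    # Augmented system [Z^T Z | Z^T y], one row per coefficient.
    a = [[sum(ci * cj for ci, cj in zip(columns[r], columns[c])) for c in range(p)]
         + [sum(ci * yi for ci, yi in zip(columns[r], y))]
         for r in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, p):
            factor = a[r][col] / a[col][col]
            for c in range(col, p + 1):
                a[r][c] -= factor * a[col][c]
    beta = [0.0] * p
    for r in reversed(range(p)):  # back substitution
        beta[r] = (a[r][p] - sum(a[r][c] * beta[c] for c in range(r + 1, p))) / a[r][r]
    return beta


# Toy data: x and its one-hop neighbor average W x on the path 0 - 1 - 2 - 3,
# with an outcome generated exactly as y = 2 x + 3 W x (no noise).
x = [1.0, 2.0, 0.0, 5.0]
wx = [2.0, 0.5, 3.5, 0.0]
y = [2 * a + 3 * b for a, b in zip(x, wx)]
beta = fit_least_squares([x, wx], y)
print([round(b, 6) for b in beta])  # [2.0, 3.0]
```

Because the data are noise-free, the fit recovers the generating coefficients exactly, one for the node’s own covariate and one for its neighbors’ average.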

Evaluated on a social media sentiment analysis task, the model reached an area under the ROC curve (AUC) of 93.50%, indicating strong discrimination between positive and negative sentiment classes. This is higher than the scores of the alternative machine learning approaches applied to the same dataset, suggesting that the network propagation mechanism captures relationships that shape how sentiment is expressed on social media.
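AUC has a direct probabilistic reading: it is the chance that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The helper below is a minimal illustration of that definition on toy scores, not the evaluation code used in the study.

```python
def auc(scores, labels):
    """Area under the ROC curve as the probability that a random positive
    example is scored above a random negative one (ties count as half).

    Compares every positive/negative pair, so it is O(n_pos * n_neg):
    fine for small checks, too slow for large datasets.
    """
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([0.9, 0.8, 0.3, 0.2], [1, 0, 1, 0]))  # 0.75
```

Here three of the four positive/negative pairs are ranked correctly, giving 0.75. An AUC of 93.50% means roughly 93.5% of such pairs are ordered correctly.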

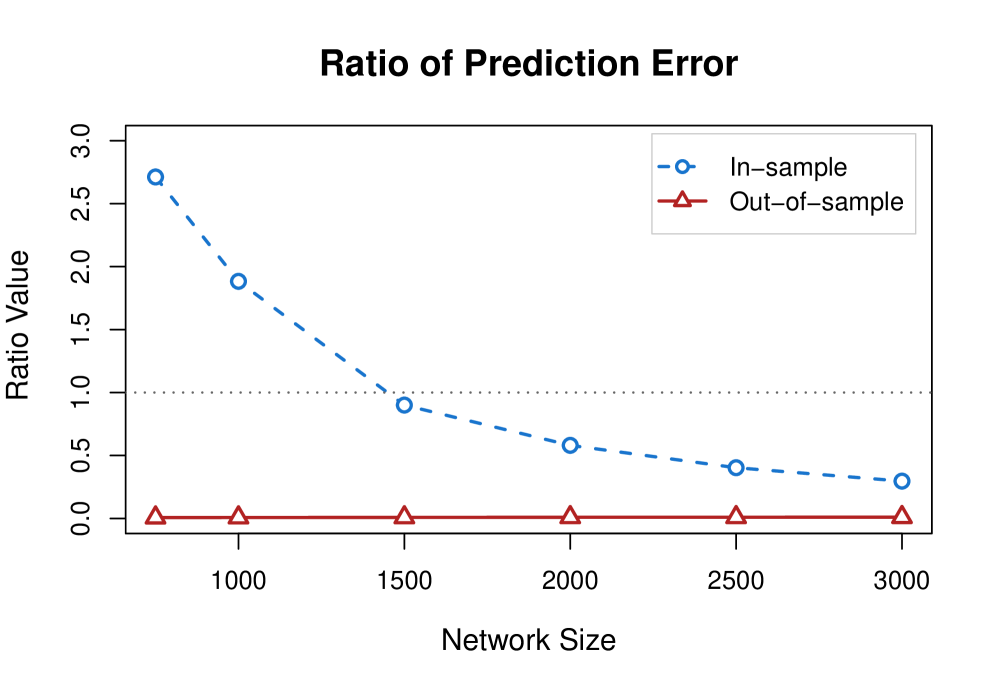

Beyond Benchmarks: A Superior Predictive Edge

Network Propagation Regression also differs from simpler benchmark models such as LinearInMeansModel and NetworkCohesionModel in how it uses the network. A linear-in-means model works with the averages of a node’s immediate neighbors, and a network cohesion model encourages connected nodes to have similar individual effects. Network Propagation Regression instead propagates covariate information through the network structure over several hops. This lets it account for both direct and indirect influences between nodes, giving a more detailed representation of dependencies. The model therefore does not just register that two nodes are connected; it quantifies how those connections contribute to each node’s outcome, which is an advantage whenever relationships are central to understanding the data.

In social media sentiment analysis, the framework also outperforms several established models, including graph-based ones. It achieves an Area Under the Curve (AUC) of 93.50%, compared with 92.71% for regression with network cohesion (RNC), 92.40% for Simple Graph Convolution (SGC), and 89.82% for logistic regression (LR). This suggests the framework captures relationships within social network data more effectively, which translates into more accurate sentiment prediction than these alternatives. The advantage points to better modeling of the dependencies inherent in social media interactions, making the framework a valuable tool for understanding public opinion and trends.

The Network Propagation Regression framework does more than match existing performance standards. In the social media sentiment analysis, its AUC is 0.79 percentage points above the strongest competitor, RNC (a relative improvement of about 0.85%), and 3.68 percentage points above plain logistic regression. The margin over RNC is modest, while the gain over logistic regression is substantial; together they are consistent with the framework capturing dependencies and patterns that less sophisticated models miss, making it a more reliable tool for analysis and decision-making on social network data.
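Absolute and relative AUC gains are easy to mix up, so here is the arithmetic spelled out. The AUC values are the ones reported for the sentiment task; the dictionary key `NPR` and the helper name are just labels chosen for this sketch.

```python
# AUC values (%) reported for the social media sentiment task.
reported_auc = {"NPR": 93.50, "RNC": 92.71, "SGC": 92.40, "LR": 89.82}


def auc_gain(model, baseline, results):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    diff = results[model] - results[baseline]
    return diff, 100.0 * diff / results[baseline]


for name in ("RNC", "SGC", "LR"):
    absolute, relative = auc_gain("NPR", name, reported_auc)
    print(f"NPR vs {name}: +{absolute:.2f} points ({relative:.2f}% relative)")
```

The output makes clear that 3.68 is the absolute gap to logistic regression, while the gap to RNC is under one percentage point.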

The model’s reliability is reported through a standard error (SE) of 0.52 for the AUC estimate, a measure of how much the AUC varies across repeated evaluations, together with a 95% confidence interval lower bound of 93.40%. A low SE indicates that the observed AUC of 93.50% is not a fluke of one particular evaluation but reflects consistently strong performance. Strictly, the interval does not mean there is a 95% probability that the true AUC exceeds 93.40%; it means that intervals constructed this way would cover the true AUC in about 95% of repeated experiments. Taken at face value on the same percentage-point scale, the two reported figures also do not quite agree: a normal-approximation bound of 93.50 - 1.96 × 0.52 would be about 92.48%, whereas 93.40% corresponds to an SE of roughly 0.05 points. Either way, the estimate is stable enough to support the framework as a dependable tool for sentiment analysis and predictive modeling on network-based datasets.
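The relation between an AUC estimate, its SE, and a confidence bound can be checked directly. This sketch assumes a symmetric normal-approximation (Wald) interval; the study may have used a different construction, such as a bootstrap interval, in which case these formulas do not apply.

```python
from statistics import NormalDist


def normal_ci(estimate, se, level=0.95):
    """Two-sided normal-approximation (Wald) interval: estimate +/- z * se."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se


def implied_se(estimate, lower, level=0.95):
    """SE implied by a reported two-sided lower bound under the same approximation."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return (estimate - lower) / z


low, high = normal_ci(93.50, 0.52)
print(f"95% interval: ({low:.2f}, {high:.2f})")
print(f"SE implied by a 93.40 lower bound: {implied_se(93.50, 93.40):.3f}")
```

The second helper simply inverts the first, which is a quick way to see which SE a reported bound corresponds to.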

The pursuit of elegant frameworks invariably collides with the messy reality of production. This paper attempts to build a statistically sound network propagation regression framework, a noble effort. It’s a system designed to model dependencies within graph data, and the simulations suggest it outperforms existing methods. But the true test, as always, will be the inevitable accumulation of edge cases and unforeseen interactions. One suspects the bug tracker will become a detailed account of those failures. As John Dewey observed, “Education is not preparation for life; education is life itself.” Similarly, this framework isn’t a solution to network regression; it’s a continually evolving system responding to the ongoing challenges of real-world data. The framework will not prevent problems; it will only offer a more structured way to document them.

Sooner or Later, It All Breaks

This propagation framework, while statistically neat, merely shifts the burden of proof. Dependencies are modeled, yes, but the inherent messiness of real networks (the data quality issues, the unobserved confounders, the sheer volume of noise) will inevitably expose its limits. The simulations are encouraging; the social media analysis…well, social media is always a good place to hide a multitude of sins. The question isn’t if it will fail in production, but when, and more importantly, how spectacularly.

Future work will undoubtedly focus on extending this to more complex regression types and to dynamic networks; survival outcomes, at least, are already within reach through the Cox regression component. But a truly significant advance won’t come from algorithmic elegance. It will arrive when someone finally builds a system that can reliably flag, and then ignore, the 80% of network features that contribute nothing but headaches.

The cycle continues. Everything new is old again, just renamed and still broken. This framework is no exception. It’s a useful tool, certainly, until it becomes tomorrow’s tech debt. Production, as always, will be the final, and most unforgiving, arbiter of its worth.

Original article: https://arxiv.org/pdf/2601.10533.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-18 06:32