Author: Denis Avetisyan

A new forecasting model uses spatial awareness and transformer networks to predict traffic patterns with greater accuracy.

This paper introduces GATTF, a geographically-aware transformer model leveraging mutual information to capture spatio-temporal dependencies for improved urban motorway traffic forecasting and digital twin development.

Accurate traffic prediction remains a significant challenge despite advancements in deep learning, particularly within the context of increasingly data-rich urban environments. This paper introduces a novel approach, ‘Geographically-aware Transformer-based Traffic Forecasting for Urban Motorway Digital Twins’, which combines Transformer networks with a geographically informed methodology. By incorporating spatial dependencies between motorway sensors using mutual information, the proposed model, GATTF, demonstrates improved forecasting accuracy compared to standard Transformer architectures without increasing model complexity. Could this geographically-aware approach unlock more robust and reliable digital twins for proactive traffic management and, ultimately, smarter, more connected cities?

Unveiling the Complexity of Modern Traffic Flow

Effective urban planning and congestion mitigation hinge on the ability to accurately predict traffic flow, a task increasingly complicated by the dynamics of modern motorway systems. Traditional forecasting methods, often reliant on linear models and historical averages, frequently fall short when confronted with the nonlinear relationships governing traffic behavior. These systems are inherently sensitive to a multitude of interacting factors – from the number of vehicles and driver behavior to unexpected incidents and even weather conditions – creating a level of complexity that overwhelms simpler predictive approaches. Consequently, even slight deviations from expected patterns can propagate rapidly, leading to significant errors in forecasts and undermining efforts to optimize traffic management and reduce delays for commuters.

Predicting traffic flow isn’t simply about extrapolating past trends; motorway systems exhibit complex, nonlinear dynamics where small changes can trigger disproportionately large effects. Standard time series forecasting, effective for relatively stable phenomena, struggles with this sensitivity to initial conditions and the emergence of chaotic patterns. Moreover, traffic isn’t isolated; external factors – such as weather events, accidents, or even scheduled events – introduce further complexity. These influences aren’t random noise, but rather systemic disruptions that alter the fundamental relationships within the traffic stream, rendering historical data less reliable for future predictions. Consequently, approaches relying on linear assumptions often fail to capture the full range of possible traffic states, leading to inaccurate forecasts and hindering effective traffic management strategies.

Conventional traffic prediction models frequently underestimate the interconnectedness of road networks, treating traffic flow on one segment as largely independent of its surroundings. This simplification overlooks the critical spatio-temporal dependencies inherent in motorway systems – the way congestion propagates as a wave, influenced by both location and time. A slowdown on one stretch inevitably impacts adjacent areas, and this effect is compounded by the time it takes for the disturbance to travel. Consequently, models relying on isolated data points or simplistic temporal analyses struggle to accurately forecast traffic conditions, particularly during peak hours or in response to unexpected events. Capturing these complex relationships requires advanced techniques capable of analyzing traffic as a dynamic, interconnected system, rather than a collection of static points, to unlock truly predictive capabilities.

Harnessing the Power of Transformer Architectures

Traffic data exhibits strong temporal dependencies, meaning current conditions are heavily influenced by past states; sequence-based deep learning models are well-suited to exploit this characteristic. Traditional recurrent neural networks (RNNs) were initially applied to traffic prediction, but the Transformer architecture, originally developed for natural language processing, has demonstrated superior performance. Unlike RNNs, Transformers process entire input sequences in parallel, enabling faster training and capturing long-range dependencies more effectively. This is achieved through self-attention mechanisms that weigh the importance of different time steps when predicting future traffic conditions. Consequently, the Transformer’s ability to model complex temporal relationships makes it a powerful framework for accurate traffic forecasting, outperforming many earlier approaches based on statistical methods or simpler machine learning models.

The Transformer architecture utilizes a self-attention mechanism to weigh the importance of different historical data points when generating traffic predictions. Unlike recurrent neural networks which process data sequentially, the attention mechanism allows the model to directly assess the relationships between all input time steps. This is achieved by calculating attention weights based on the similarity between each pair of time steps, effectively allowing the model to prioritize information from periods that are most indicative of future traffic conditions. Specifically, the attention weights are computed using scaled dot-product attention, where queries, keys, and values are derived from the input sequence, and the resulting weighted sum of values represents the contextually relevant historical information used for forecasting. This direct assessment of temporal relationships improves the model’s ability to capture complex dependencies and enhances forecasting accuracy, particularly for long-range dependencies where traditional recurrent models may struggle with vanishing gradients.
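
To make the scaled dot-product step concrete, here is a minimal sketch in plain Python. It is an illustration rather than the paper's implementation: a real Transformer derives queries, keys, and values from learned linear projections, whereas this toy example feeds the same made-up embeddings in for all three.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of equal-length float vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Context vector: attention-weighted sum of the value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights

# Toy input: three time steps with 2-dimensional embeddings (made-up numbers).
# Assumption: identity projections, so queries = keys = values = x.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(x, x, x)
```

Each row of `weights` sums to one and shows how strongly a given time step attends to every other step; the first step here attends equally to itself and the third step, and less to the second.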

Adapting the Transformer architecture for traffic prediction necessitates specific strategies for spatial data integration and scalability. Traditional Transformers process sequential data; therefore, representing road networks and their interdependencies requires encoding spatial relationships, often through graph neural networks or by treating spatial locations as additional sequence dimensions. Furthermore, traffic datasets are typically large-scale and high-dimensional, demanding techniques like data aggregation, dimensionality reduction, or model parallelism to manage computational complexity and memory requirements during training and inference. Efficient attention mechanisms, such as sparse attention or linear attention, are also crucial for reducing the quadratic computational cost associated with the standard Transformer attention mechanism when dealing with extensive spatial and temporal data.

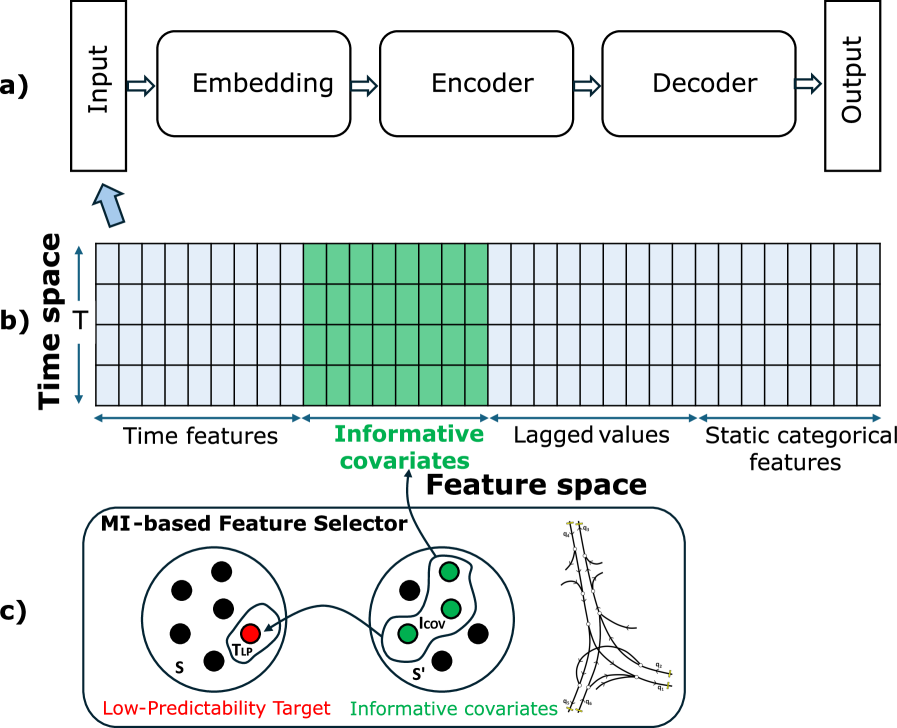

GATTF: A Geographically-Aware System for Enhanced Prediction

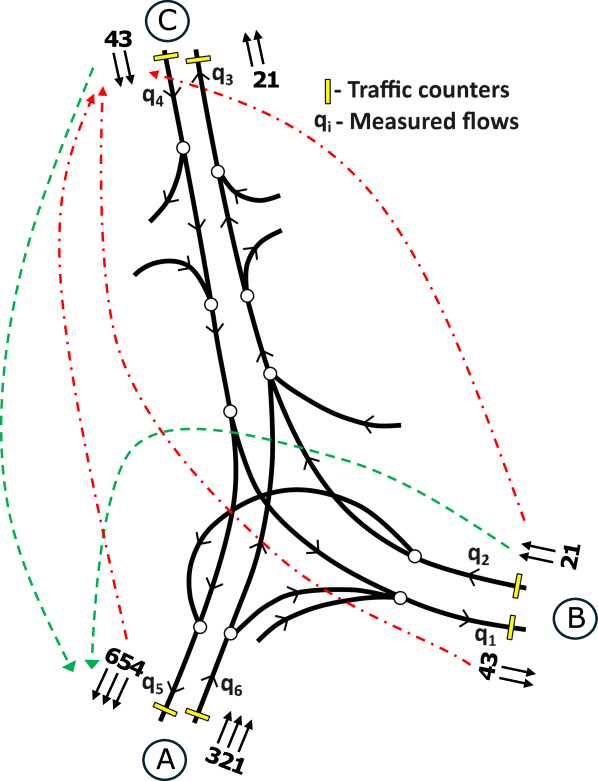

The Geographically-Aware Transformer (GATTF) model integrates the established Transformer architecture – known for its capacity to model sequential data and capture long-range dependencies – with a data-driven sensor selection process. This combination addresses limitations of traditional traffic forecasting methods which often rely on all available sensor data, leading to increased computational cost and potential noise. By leveraging statistical dependencies identified through Mutual Information analysis, GATTF selectively incorporates the most relevant sensors into the Transformer network. This targeted approach reduces model complexity while maintaining, and in many cases improving, the accuracy of traffic flow predictions by focusing on the sensors that contribute the most significant information for forecasting.

Mutual Information (MI) serves as the primary metric for assessing the statistical relationship between individual traffic sensors within the GATTF model. MI quantifies how much knowing one sensor's readings reduces uncertainty about another's, and unlike linear correlation it also captures nonlinear dependence. A higher MI value indicates a stronger dependence, suggesting that the sensor's data is more valuable as an input when forecasting the other. The calculation of MI, based on the probability distributions of sensor readings, enables the identification of uninformative sensors (those with low MI relative to the target), which can then be excluded from the model to reduce computational load without significantly impacting forecast accuracy. This data-driven sensor selection process rests on the principle that only sensors statistically dependent on the target contribute useful information for traffic flow prediction.
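
As a rough illustration of how MI between two sensors can be estimated from their readings, the sketch below uses a simple histogram (plug-in) estimator. The estimator, the equal-width binning, the bin count, and the sample readings are all assumptions made for this example; the article does not specify how the paper computes MI.

```python
import math
from collections import Counter

def discretize(series, n_bins):
    """Map each reading to an equal-width bin index in [0, n_bins - 1]."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0] * len(series)
    width = (hi - lo) / n_bins
    return [min(int((x - lo) / width), n_bins - 1) for x in series]

def mutual_information(xs, ys, n_bins=4):
    """Plug-in MI estimate (in bits) between two equal-length series."""
    bx, by = discretize(xs, n_bins), discretize(ys, n_bins)
    n = len(bx)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        # p_ij / (p_i * p_j) written with raw counts.
        mi += p_ij * math.log2(count * n / (px[i] * py[j]))
    return mi

# Made-up flow readings: `neighbour` tracks `target`, `flat` never changes.
target = [10, 20, 30, 40, 10, 20, 30, 40]
neighbour = [12, 22, 32, 42, 12, 22, 32, 42]
flat = [5, 5, 5, 5, 5, 5, 5, 5]

mi_neighbour = mutual_information(target, neighbour)  # strongly dependent
mi_flat = mutual_information(target, flat)            # carries no information
```

Histogram estimators are sensitive to the bin count and to sample size, so on real sensor data a nearest-neighbour estimator or a bias correction may be preferable.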

GATTF achieves reductions in computational complexity and improvements in forecasting performance through a selective sensor incorporation process. Rather than utilizing data from all available traffic sensors, the model leverages Mutual Information (MI) to identify the most statistically dependent and informative sensors for a given location. This targeted approach diminishes the dimensionality of the input data, thereby decreasing the number of parameters and operations required for processing. By focusing on sensors exhibiting strong spatio-temporal relationships, GATTF effectively captures dependencies in traffic flow, leading to more accurate predictions without the computational burden of processing redundant or weakly correlated data.
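
The selection step can be sketched as a ranking over per-sensor MI scores. The top-k rule, the sensor identifiers, and the scores below are hypothetical; the article does not say whether the paper uses a fixed count, a threshold, or another criterion.

```python
def select_sensors(mi_scores, k):
    """Return the k sensor ids with the highest MI to the target sensor."""
    ranked = sorted(mi_scores.items(), key=lambda item: item[1], reverse=True)
    return [sensor_id for sensor_id, _ in ranked[:k]]

# Hypothetical MI scores (in bits) between candidate sensors and one target.
mi_scores = {"S1": 0.82, "S2": 0.10, "S3": 0.55, "S4": 0.03}

# Keep only the two most informative sensors as covariates for the target.
selected = select_sensors(mi_scores, k=2)
```

Only the readings from the `selected` sensors would then be passed to the forecasting model as covariates, which keeps the input width fixed regardless of how many sensors the network contains.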

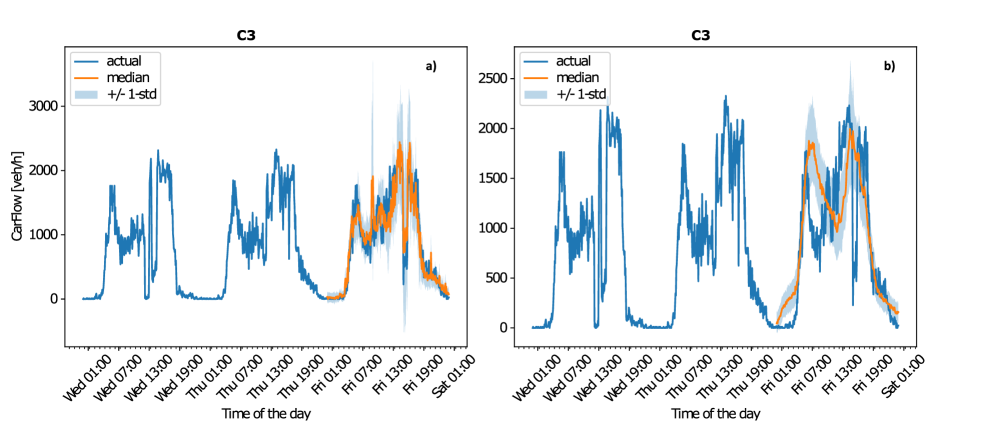

Evaluation of the GATTF model was conducted using the Geneva Motorway Network, demonstrating its capacity for accurate traffic flow prediction. Specifically, at location C3, GATTF achieved an 85.63% improvement in Mean Absolute Scaled Error (MASE) when benchmarked against a standard Transformer model lacking covariate inputs. This performance gain indicates the efficacy of the geographically-aware sensor selection process implemented in GATTF, highlighting its ability to leverage spatial dependencies for enhanced forecasting accuracy. The MASE metric provides a normalized measure of error, allowing for a direct comparison of predictive performance across different time series and scales.
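
For readers unfamiliar with MASE, the following sketch shows the standard definition: the forecast's mean absolute error divided by the in-sample mean absolute error of a naive forecast. The numbers are invented, and the percentage-improvement formula is an assumption about how such a figure is typically derived, not a statement of the paper's exact procedure.

```python
def mase(actual, forecast, training, season=1):
    """Mean Absolute Scaled Error.

    Forecast MAE divided by the in-sample MAE of a (seasonal) naive
    forecast that repeats the value observed `season` steps earlier.
    """
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    naive_errors = [abs(training[t] - training[t - season])
                    for t in range(season, len(training))]
    scale = sum(naive_errors) / len(naive_errors)
    return mae / scale

def relative_improvement(baseline, candidate):
    """Percentage reduction in error relative to the baseline."""
    return 100.0 * (baseline - candidate) / baseline

# Invented data: a short training history and a two-step test horizon.
training = [10, 12, 11, 13, 12]
actual = [14, 13]
baseline_forecast = [11, 16]   # stand-in for a model without covariates
candidate_forecast = [13, 13]  # stand-in for a model with selected covariates

baseline_mase = mase(actual, baseline_forecast, training)
candidate_mase = mase(actual, candidate_forecast, training)
gain = relative_improvement(baseline_mase, candidate_mase)
```

A MASE below 1 means the model beats the naive forecast on average, and a MASE above 1 means it does worse.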

Expanding the Predictive Horizon: Advanced Transformer Variants

The Transformer architecture, initially prominent in natural language processing, has proven remarkably adaptable to the complexities of traffic prediction, and its use in this domain extends well beyond the Geographically-Aware Transformer (GATTF). Models such as Trafficformer and those utilizing a Decoder-Only Transformer structure showcase this versatility by effectively capturing spatiotemporal dependencies within traffic networks. Trafficformer, for example, focuses on enhancing the model’s ability to discern relationships between different roadways, while Decoder-Only Transformers leverage their generative capabilities to forecast traffic conditions over extended horizons. These diverse implementations highlight that the core Transformer mechanism, attention, provides a robust foundation for representing and predicting the dynamic patterns inherent in traffic flow, supporting innovation alongside the GATTF framework and paving the way for increasingly accurate and reliable traffic management systems.

Recognizing that traffic patterns are inherently spatial, recent advancements in Transformer-based forecasting models increasingly integrate Graph Convolutional Neural Networks (GCNNs). These hybrid architectures leverage the strengths of both approaches: Transformers excel at capturing temporal dependencies within traffic flow, while GCNNs effectively model the complex relationships between different locations on a road network. By representing roads as nodes and their connections as edges, GCNNs allow the model to understand how congestion on one street might influence traffic on others. This spatial awareness is crucial for accurate predictions, particularly in scenarios where localized incidents or bottlenecks can have cascading effects. The combination facilitates a more holistic understanding of traffic dynamics, going beyond simple time-series analysis to incorporate the geographical context of the problem and offering improved forecasting performance compared to models that treat each sensor in isolation.
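
The way a graph convolution spreads information between neighbouring road segments can be sketched with a single propagation step. This is a simplified illustration under stated assumptions: it uses the common symmetric normalization with self-loops, omits the learned weight matrix and nonlinearity of a full layer, and runs on a made-up three-segment road.

```python
import math

def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a square 0/1 adjacency matrix."""
    n = len(adj)
    # Add self-loops so each node keeps part of its own signal.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]

def propagate(adj_norm, features):
    """One propagation step: each node aggregates its neighbours' features."""
    n, d = len(features), len(features[0])
    return [[sum(adj_norm[i][j] * features[j][c] for j in range(n))
             for c in range(d)]
            for i in range(n)]

# Toy topology: three road segments in a line, 0 - 1 - 2.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
# One feature per segment: congestion level, with a jam only on segment 0.
features = [[1.0], [0.0], [0.0]]

smoothed = propagate(normalized_adjacency(adj), features)
```

After one step the congestion signal reaches the adjacent segment 1 but not segment 2, which is two hops away; stacking further steps widens the neighbourhood each segment can see.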

Recent advancements in traffic prediction leverage Temporal Cluster Blocks to significantly enhance the adaptability of Transformer models. This technique groups similar time periods – such as rush hours or weekend patterns – across geographically distinct cities, allowing the model to learn transferable representations of traffic dynamics. By identifying and clustering these temporal patterns, the model isn’t required to relearn basic traffic behaviors for each new location. Instead, it can effectively transfer knowledge gained from one city’s sensor network to another, even with variations in infrastructure or traffic volume. This approach not only accelerates the training process for new locations but also improves prediction accuracy, particularly in scenarios where historical data is limited, making it a valuable tool for real-world deployment and scalable traffic management systems.

Traditional traffic prediction often focuses on single-point estimates of future conditions, but probabilistic forecasting acknowledges the inherent unpredictability of complex systems like road networks. Instead of predicting a single value for traffic volume or speed, this approach generates a probability distribution, outlining the range of likely outcomes and their associated likelihoods. This is particularly valuable because traffic flow is influenced by a multitude of factors – from weather and special events to individual driver behavior – creating considerable uncertainty. By providing a distribution, rather than a fixed prediction, decision-makers can better assess risk and implement more robust traffic management strategies, accounting for the possibility of unexpected congestion or incidents. This nuanced perspective allows for improved resource allocation, optimized route guidance, and ultimately, a more resilient transportation system.
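
One common way to score a probabilistic forecast expressed as quantiles is the pinball (quantile) loss, sketched below. The article does not say which probabilistic method or metric is meant, so this is an illustrative choice, and the traffic volumes are invented.

```python
def pinball_loss(actual, predicted, quantile):
    """Average pinball loss for one quantile level in (0, 1)."""
    total = 0.0
    for y, q in zip(actual, predicted):
        diff = y - q
        # Under-prediction is weighted by `quantile`,
        # over-prediction by `1 - quantile`.
        total += quantile * diff if diff >= 0 else (quantile - 1) * diff
    return total / len(actual)

# Invented hourly volumes and a forecast of their 90th percentile.
actual = [100, 120, 90]
p90_forecast = [110, 125, 100]

loss_p90 = pinball_loss(actual, p90_forecast, quantile=0.9)
```

Because a 90th-percentile forecast is meant to sit above most observations, the loss penalizes falling below the observed value nine times more heavily than overshooting it.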

The pursuit of accurate traffic forecasting, as demonstrated by GATTF, necessitates a holistic understanding of interconnected systems. The model’s innovative use of mutual information to capture spatio-temporal dependencies highlights the importance of recognizing that individual components do not operate in isolation. This aligns with a fundamental principle of robust design: if a system feels overly complex, it is likely fragile. Vinton Cerf aptly observed, “Technology is meant to make life easier, not more complicated.” The elegance of GATTF lies in its ability to simplify the representation of complex traffic patterns, thereby enhancing predictability and resilience – a testament to the power of streamlined, interconnected design.

Roads Ahead

The pursuit of accurate traffic forecasting, as exemplified by models like GATTF, often feels like attempting to redesign a city block by block. Each incremental improvement in capturing spatio-temporal dependencies – a clever use of mutual information here – is, in truth, a temporary fix. The underlying challenge isn’t simply predicting vehicle movements, but modeling the emergent behavior of a complex, adaptive system. A truly robust system must evolve, not require constant reconstruction.

Future work should focus less on architectural novelty and more on foundational principles. Exploring methods to integrate causal inference – understanding why traffic patterns change, not just that they change – is crucial. Furthermore, the anticipated proliferation of connected and automated vehicles presents both an opportunity and a complication. While offering a richer data stream, these vehicles also introduce new behavioral patterns that current models, trained on human-driven traffic, may struggle to accommodate.

The ideal trajectory isn’t a relentless chase for marginal gains in prediction accuracy. Instead, the field should prioritize building models that are inherently adaptable, capable of learning from unforeseen events, and robust to the inevitable shifts in urban mobility landscapes. The goal is not a perfect prediction, but a system that anticipates its own limitations and gracefully adjusts to the unpredictable currents of city life.

Original article: https://arxiv.org/pdf/2602.05983.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-08 14:14