Author: Denis Avetisyan

As machine learning models become increasingly integral to critical infrastructure, a proactive and holistic approach to security is essential throughout the entire development process.

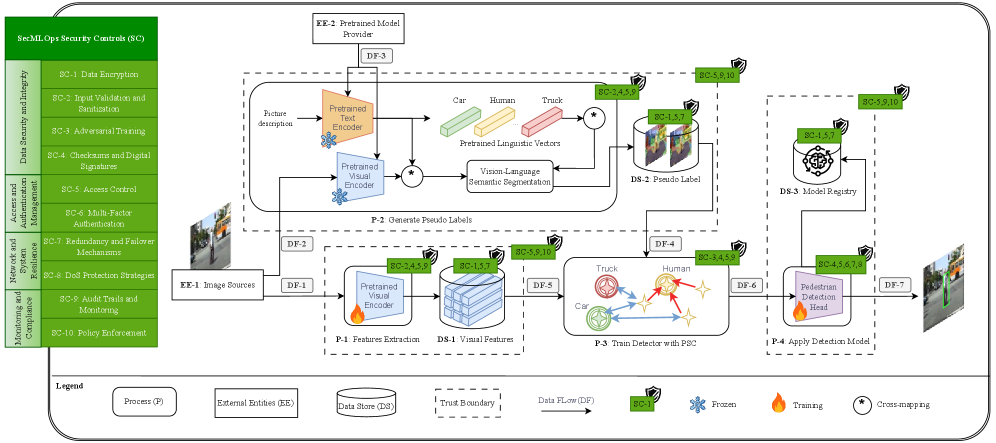

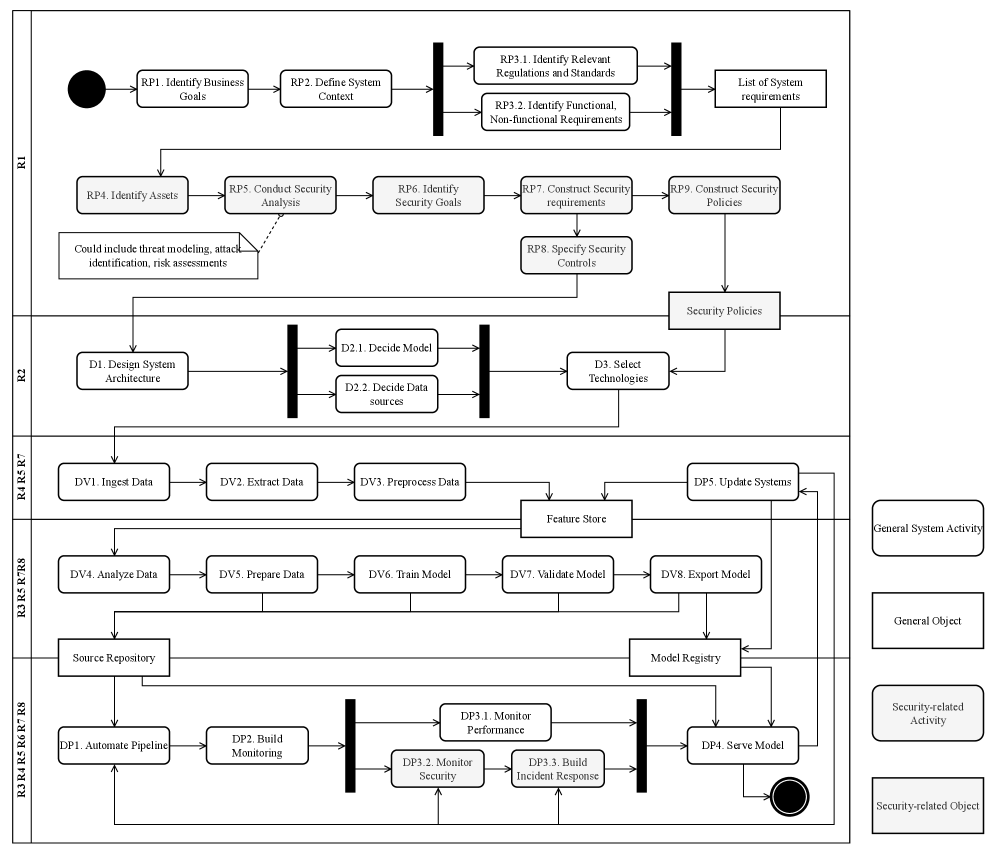

This review introduces SecMLOps, a comprehensive framework for integrating security practices into every stage of the MLOps lifecycle, addressing threats from data poisoning to adversarial attacks and ensuring regulatory compliance.

While machine learning increasingly powers critical infrastructure, its deployment introduces vulnerabilities to sophisticated attacks that threaten system integrity and reliability. This paper introduces SecMLOps: A Comprehensive Framework for Integrating Security Throughout the MLOps Lifecycle, addressing this challenge by systematically embedding security measures across the entire machine learning operations pipeline. Our framework enhances robustness against threats like data poisoning and adversarial examples, ultimately improving the trustworthiness and compliance of deployed models. How can practitioners effectively balance enhanced security with operational efficiency in diverse machine learning applications?

The Illusion of Robustness: Why Vision-Language Systems Are Ripe for Exploitation

Modern vision-language systems, integral to autonomous vehicles and robotic assistants, are surprisingly vulnerable to cleverly disguised adversarial attacks. These are not crude manipulations such as covering a stop sign; instead, subtle alterations (a nearly imperceptible sticker on a road sign, a carefully crafted pattern on clothing, or minor changes to image lighting) can reliably mislead the system. Such manipulations exploit the complex, high-dimensional feature spaces in which these models operate, causing misclassifications with potentially catastrophic consequences. The deeper concern is not merely that the algorithm can be fooled, but that these attacks are often designed to be imperceptible to human observers, so operators may trust a system that is in fact misinterpreting the real world. This exposes a critical gap between high accuracy on benchmark datasets and robust real-world performance under malicious or unintended distortions.

Conventional Machine Learning Operations (MLOps) pipelines, designed for model deployment and monitoring, are inadequate against the nuanced security threats facing modern vision-language systems. These systems, with their intricate architectures and reliance on vast datasets, are vulnerable to adversarial attacks that bypass typical anomaly detection. Standard MLOps focuses on performance metrics such as accuracy and latency and often overlooks subtle input perturbations crafted to mislead the model deliberately. Unlike traditional software vulnerabilities, attacks on vision-language models manifest as misclassifications with real-world consequences, particularly in safety-critical applications such as autonomous driving or medical diagnosis. The complexity of these models, combined with the high dimensionality of image and text data, calls for security measures that span the entire machine learning lifecycle, from data curation and model training to deployment and ongoing monitoring, which goes beyond the scope of conventional MLOps practice.

Ensuring the dependable operation of vision-language systems now demands a fundamental shift toward proactive security integrated throughout the machine learning lifecycle. Traditional reactive approaches, focused on post-deployment monitoring, are proving inadequate against increasingly sophisticated adversarial attacks that subtly manipulate model outputs. Security considerations must therefore be embedded from initial data collection and model training, through rigorous validation, to continuous monitoring after deployment. A holistic strategy includes robust data sanitization, adversarial training to improve model resilience, and, where feasible, formal verification methods that establish guarantees on model behavior within defined safety bounds. This proactive stance is no longer simply best practice; it is becoming essential for building trustworthy artificial intelligence and for mitigating the risks of deploying these systems in real-world applications.

Beyond MLOps: A Paradigm Shift Towards Secure Machine Learning

SecMLOps represents an evolution of standard MLOps practices by systematically incorporating security evaluations and controls throughout the entire machine learning lifecycle. This integration begins with data ingestion, where validation and sanitization processes mitigate risks associated with compromised or malicious datasets. Security considerations continue through model training, encompassing code review, dependency management, and protection against adversarial attacks. During deployment, SecMLOps emphasizes secure infrastructure, access controls, and model integrity monitoring. Finally, continuous monitoring in production focuses on detecting anomalies, data drift, and potential security breaches, enabling rapid response and remediation. This comprehensive, lifecycle-based approach aims to proactively identify and address vulnerabilities, rather than reacting to incidents post-deployment.
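Two of the controls named above, model integrity monitoring and data-drift detection, can be sketched in a few lines of Python. The sketch is illustrative and not taken from the paper: it assumes a trusted SHA-256 digest is recorded for each model artifact at build time, and it uses the population stability index (PSI) as one common, but by no means the only, drift statistic. The function names `verify_artifact` and `population_stability_index` are hypothetical.

```python
import hashlib
import math
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the artifact on disk matches the recorded digest."""
    return sha256_of(path) == expected_sha256.lower()


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a production sample of one feature.

    Bin edges come from the reference sample's range; production values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # eps avoids log(0) for empty bins
        return [max(c / total, eps) for c in counts]

    p, q = histogram(expected), histogram(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A PSI above roughly 0.2 is a commonly cited rule of thumb for a significant shift, but any alerting threshold should be calibrated per feature. In practice the trusted digests themselves also need protection, for example by signing the manifest that stores them.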

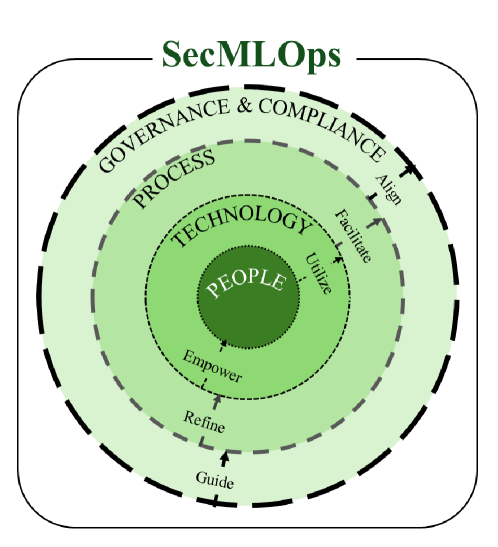

The PTPGC Framework offers a systematic methodology for SecMLOps implementation built on five core pillars:

- People: skill development and awareness of machine learning security best practices across all teams.
- Technology: the secure tools and infrastructure required for data handling, model training, and deployment, including vulnerability scanning and threat detection systems.
- Processes: secure coding practices, data validation procedures, and model validation pipelines integrated into the MLOps lifecycle.
- Governance: clear ownership, accountability, and risk management policies for machine learning systems.
- Compliance: adherence to relevant regulatory requirements and industry standards for data privacy, security, and model fairness.

Proactive security risk mitigation within SecMLOps directly enhances the trust and reliability of machine learning systems by reducing the potential for adversarial attacks, data breaches, and model manipulation. This is achieved through continuous security assessments, vulnerability scanning, and the implementation of robust access controls throughout the entire machine learning lifecycle. By identifying and addressing threats early, organizations minimize the likelihood of system failures, maintain data integrity, and ensure consistent, predictable model performance. This, in turn, fosters user confidence and facilitates the responsible deployment of machine learning applications in critical infrastructure and sensitive domains.

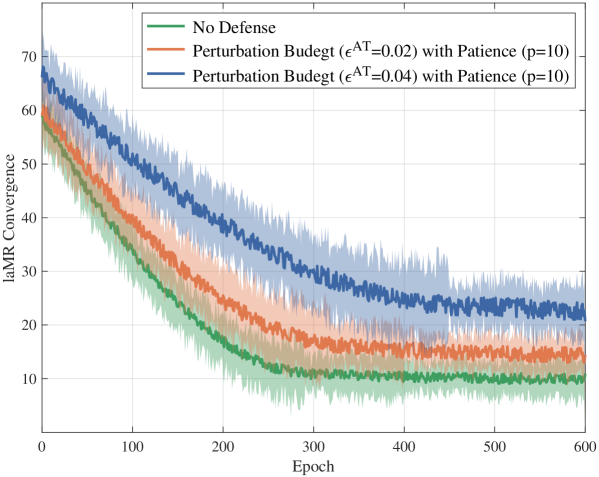

Fortifying the Foundation: Techniques for Robustness and Privacy

Adversarial training improves the robustness of machine learning models against intentionally crafted malicious inputs, known as adversarial examples. The training dataset is augmented with adversarial examples, generated by applying small, carefully designed perturbations to existing data points. Trained on both clean and adversarial examples, the model learns to classify inputs correctly even when they are perturbed in this way. The result is a model less susceptible to attacks that exploit weaknesses near its decision boundaries, and therefore more reliable in real-world deployments where malicious or unexpected inputs may occur. The effectiveness of adversarial training depends heavily on the method used to generate the adversarial examples; a common choice is the Fast Gradient Sign Method (FGSM), which shifts each input by a fixed step in the direction of the sign of the loss gradient with respect to that input.
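To make the FGSM step concrete, here is a minimal NumPy sketch of adversarial training. It assumes a binary logistic-regression classifier rather than the deep pedestrian detectors the paper evaluates, and the function names and hyperparameters (`epsilon`, `lr`, `epochs`) are illustrative assumptions. FGSM sets x_adv = x + epsilon * sign(∇x L), and the model is then trained on clean and perturbed copies together.

```python
import numpy as np


def sigmoid(z):
    """Logistic function mapping logits to probabilities."""
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, epsilon):
    """Fast Gradient Sign Method for a logistic-regression model.

    For binary cross-entropy, dL/dx = (p - y) * w, so each example is moved
    by epsilon in the direction of the sign of that gradient.
    """
    p = sigmoid(x @ w + b)
    grad_x = np.outer(p - y, w)  # shape (n_samples, n_features)
    return x + epsilon * np.sign(grad_x)


def adversarial_train(x, y, epsilon=0.1, lr=0.1, epochs=200, seed=0):
    """Gradient descent on clean plus FGSM examples regenerated every epoch."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.01, size=x.shape[1])
    b = 0.0
    for _ in range(epochs):
        # Craft adversarial examples against the *current* model.
        x_adv = fgsm(x, y, w, b, epsilon)
        xb = np.vstack([x, x_adv])
        yb = np.concatenate([y, y])
        # Full-batch gradient step on the mean binary cross-entropy.
        err = sigmoid(xb @ w + b) - yb
        w = w - lr * xb.T @ err / len(yb)
        b = b - lr * err.mean()
    return w, b
```

Regenerating the adversarial examples every epoch is the important design choice: examples crafted once against an untrained model quickly stop being adversarial as the weights change.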

Privacy-preserving methods address the challenge of training machine learning models on sensitive, and often distributed, data. Differential Privacy adds carefully calibrated noise to the training process or to model outputs so that the contribution of any single data point is statistically obscured. Federated Learning, by contrast, enables collaborative training without exchanging raw data: each participating client trains on its own data and sends only model updates to a central server, which aggregates them into an improved global model. The two are complementary and are often combined, since shared model updates can themselves leak information about the underlying data. Both aim to reduce the risk of data breaches and protect user privacy while still allowing effective model development and deployment.
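The two ideas can be combined, as in differentially private federated averaging (DP-FedAvg). The sketch below shows only the server-side aggregation step in NumPy; it assumes clients have already trained locally and sent flat weight-update vectors, and the names `clip_update`, `dp_fedavg_round`, `clip_norm`, and `noise_multiplier` are illustrative rather than drawn from the paper or from any specific federated-learning library.

```python
import numpy as np


def clip_update(update, clip_norm):
    """Rescale a client update so its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(update)
    return update * min(1.0, clip_norm / (norm + 1e-12))


def dp_fedavg_round(global_weights, client_updates, clip_norm=1.0,
                    noise_multiplier=1.0, rng=None):
    """One round of federated averaging with clipping and Gaussian noise.

    Clients send only weight deltas, never raw data. Clipping bounds each
    client's influence on the sum to clip_norm, so Gaussian noise with
    std noise_multiplier * clip_norm on the sum (divided by the number of
    clients for the mean) masks any single client's contribution.
    """
    if rng is None:
        rng = np.random.default_rng()
    clipped = np.stack([clip_update(u, clip_norm) for u in client_updates])
    mean_update = clipped.mean(axis=0)
    sigma = noise_multiplier * clip_norm / len(client_updates)
    noise = rng.normal(0.0, sigma, size=mean_update.shape)
    return global_weights + mean_update + noise
```

Turning `noise_multiplier` into a formal (ε, δ) privacy guarantee requires a privacy accountant, which this sketch omits; real deployments also typically sample a random subset of clients in each round.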

Two further techniques, CutMix data augmentation and model distillation, address limitations in model generalization and robustness, particularly when training data is limited. CutMix cuts a rectangular patch from one training image, pastes it into another, and mixes the two labels in proportion to the patch area, creating composite examples that encourage the model to attend to more diverse features. Model distillation, a training strategy rather than an augmentation, transfers knowledge from a larger, typically more accurate “teacher” model to a smaller “student” model by training the student to match the teacher’s softened output distribution, improving the student’s performance and generalization. In data-scarce scenarios, these techniques increase the effective diversity of the training signal and regularize learning, which can improve performance on unseen data and resilience to adversarial attacks or shifts in input conditions.
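Both techniques are easy to state in code. In the NumPy sketch below, which is illustrative rather than the paper’s implementation, `cutmix` follows the usual recipe of sampling a mixing ratio from a Beta distribution and pasting a box of matching area from a shuffled batch, and `distillation_loss` follows the temperature-scaled formulation of Hinton and colleagues. The parameter defaults are assumptions (note that `alpha` means something different in each function), and in practice both would live inside a deep-learning framework so gradients can flow.

```python
import numpy as np


def cutmix(images, labels, alpha=1.0, rng=None):
    """CutMix on a batch: images (N, H, W, C), one-hot labels (N, K)."""
    if rng is None:
        rng = np.random.default_rng()
    n, h, w = images.shape[:3]
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(n)
    # Box covering roughly (1 - lam) of the image, centred at a random point.
    cut_h, cut_w = int(h * np.sqrt(1 - lam)), int(w * np.sqrt(1 - lam))
    cy, cx = rng.integers(h), rng.integers(w)
    y1, y2 = np.clip([cy - cut_h // 2, cy + cut_h // 2], 0, h)
    x1, x2 = np.clip([cx - cut_w // 2, cx + cut_w // 2], 0, w)
    mixed = images.copy()
    mixed[:, y1:y2, x1:x2] = images[perm, y1:y2, x1:x2]
    # Recompute lam from the clipped box so labels match the pasted area.
    lam = 1 - (y2 - y1) * (x2 - x1) / (h * w)
    return mixed, lam * labels + (1 - lam) * labels[perm]


def softmax(z, temperature=1.0):
    """Numerically stable softmax over the last axis, with temperature."""
    z = z / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=4.0, alpha=0.5):
    """Knowledge-distillation loss (forward value only).

    alpha weights the soft-target KL(teacher || student) at temperature T,
    scaled by T**2 to keep gradient magnitudes comparable; (1 - alpha)
    weights ordinary cross-entropy against the one-hot labels.
    """
    eps = 1e-12
    p_teacher = softmax(teacher_logits, temperature)
    p_student_t = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher + eps)
                             - np.log(p_student_t + eps)), axis=-1).mean()
    ce = -np.sum(labels * np.log(softmax(student_logits) + eps), axis=-1).mean()
    return alpha * temperature**2 * kl + (1 - alpha) * ce
```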

Evaluation of the proposed SecMLOps framework on pedestrian detection systems shows a substantial reduction in miss rate under the Fast Gradient Sign Method (FGSM) attack. Systems using the SecMLOps framework achieved a miss rate of approximately 14.9% under FGSM, compared with 35.6% for undefended baseline systems. These results indicate that the framework mitigates the impact of adversarial attacks on pedestrian detection, suggesting improved reliability in safety-critical applications.
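Assuming both miss rates were measured on the same test set and attack configuration, the reported figures translate into the following absolute and relative improvements:

```latex
\begin{aligned}
\Delta_{\text{abs}} &= 35.6\% - 14.9\% = 20.7 \text{ percentage points},\\
\Delta_{\text{rel}} &= \frac{35.6 - 14.9}{35.6} = \frac{20.7}{35.6} \approx 0.581,
\end{aligned}
```

that is, under FGSM the defended system misses roughly 58% fewer pedestrians than the undefended baseline.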

The Path Forward: Ethical Alignment and Future Directions

Constitutional AI represents a notable advance in responsible machine learning, offering a structured way to instill ethical guidelines in models during training. Rather than relying solely on human-labeled feedback or post-hoc evaluation, the technique defines a ‘constitution’ (a set of core principles) against which the model critiques and revises its own outputs; these AI-generated judgments then supply the preference signal for reinforcement learning. Through this loop, the model iteratively refines its behavior to adhere to human values such as helpfulness, honesty, and harmlessness. Integrating this methodology into Secure Machine Learning Operations (SecMLOps) adds a proactive safety net: models are not only hardened against malicious inputs but also checked for alignment with desired ethical standards throughout their lifecycle, from development and deployment to ongoing monitoring and updates. Combined, the two approaches point toward AI systems that are both robust to attack and better aligned with human values.

The increasing deployment of vision-language pedestrian detection (VLPD) models in autonomous systems, from self-driving vehicles to robotic assistants, calls for a robust approach to safety and reliability, which SecMLOps aims to provide. These models, while powerful, are susceptible to adversarial attacks and data biases that could lead to unpredictable and potentially harmful behavior in real-world applications. SecMLOps extends MLOps, itself an adaptation of DevOps principles, by integrating security considerations throughout the machine learning lifecycle, enabling continuous monitoring, validation, and retraining. This proactive approach is intended to keep VLPD-powered systems functioning as intended and to preserve their integrity and trustworthiness as threats and environmental conditions evolve, ultimately fostering public confidence in these increasingly prevalent technologies.

The development of robust and equitable vision-language pedestrian detection (VLPD) systems depends heavily on the quality and inclusivity of training data, as the CityPersons dataset illustrates. CityPersons is not merely a collection of images; it was built to offer greater diversity than earlier pedestrian benchmarks. Its variety in pedestrian appearance, pose, clothing, and environmental conditions helps reduce the risk that detection systems perform unevenly across different groups of people or real-world scenarios. A dataset lacking such representation can lead to inaccurate detections, and potentially dangerous outcomes, for underrepresented populations; fair and unbiased performance is therefore not simply a technical goal but an ethical imperative in the design of autonomous technologies.

The implementation of SecMLOps, while bolstering the security and reliability of machine learning systems, introduces a quantifiable, yet manageable, performance overhead. Rigorous input validation and sanitization processes, essential for preventing malicious inputs, currently add approximately 5 to 8 milliseconds to processing time. Furthermore, adversarial training – a technique used to fortify models against targeted attacks – contributes an additional 15 to 20 percent to overall inference time. This modest increase, however, represents a worthwhile trade-off, balancing computational efficiency with a substantially improved security posture and increased trust in deployed autonomous technologies, particularly as systems become more complex and integrated into critical infrastructure.
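The overhead figures quoted above combine differently: validation adds a fixed cost per input, while the adversarial-training overhead is stated as a percentage of inference time. The small helper below is a sketch with hypothetical names; it assumes exactly that additive-plus-proportional model, treats the two costs as independent, and checks the worst case against a real-time budget.

```python
def secured_latency_ms(baseline_ms, validation_ms, adv_overhead_frac):
    """Per-input latency after adding a fixed validation cost and a
    proportional inference overhead (both assumed independent)."""
    return baseline_ms * (1 + adv_overhead_frac) + validation_ms


def latency_range_ms(baseline_ms):
    """Best and worst case using the ranges quoted in the text (5-8 ms, 15-20 %)."""
    best = secured_latency_ms(baseline_ms, 5.0, 0.15)
    worst = secured_latency_ms(baseline_ms, 8.0, 0.20)
    return best, worst


def fits_budget(baseline_ms, budget_ms):
    """True if even the worst case stays within a hypothetical latency budget."""
    return latency_range_ms(baseline_ms)[1] <= budget_ms
```

For example, a hypothetical detector with a 50 ms baseline would land at roughly 62.5 ms in the best case (50 × 1.15 + 5) and 68 ms in the worst case (50 × 1.20 + 8); whether that is acceptable depends on the frame-rate budget of the target system.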

The pursuit of SecMLOps, as outlined in the paper, reads less like a breakthrough than a careful record of what was always going to be needed. The framework tries to address threats such as data poisoning and adversarial attacks proactively, yet production environments will likely uncover novel failure modes regardless. It is a valiant effort to build resilient machine learning systems, but the very nature of complex systems guarantees unforeseen vulnerabilities. As a line often attributed to Bertrand Russell puts it, ‘The problem with the world is that everyone is an expert in everything.’ This certainly applies to security: everyone believes they have considered every attack vector, until something breaks. The cycle continues: elegant theory, messy implementation, and eventual technical debt. One can almost predict it.

What’s Next?

The proposal of ‘SecMLOps’ feels less like innovation and more like acknowledging the inevitable. For years, the field chased scalability and speed, building systems that were, at best, politely ignoring security concerns. Now, the logs are filling with adversarial examples and data poisoning attempts, so naturally, someone proposes a framework to add security later. It’s a familiar pattern. The elegance of the theoretical model always seems to fray at the edges of production.

The true test won’t be the framework itself, but the willingness to actually use it. Threat modeling is a fine exercise, until it conflicts with the quarterly release schedule. Compliance is a paperwork problem, easily solved by a dedicated team… who will inevitably be overruled by someone concerned with ‘time to market.’ The researchers correctly identify the attack vectors, but the real challenge lies in convincing those who build the systems that anything labeled ‘robust’ probably hasn’t been stressed enough.

One suspects the next iteration of this problem won’t be about integrating security, but about accepting that perfect security is an illusion. A well-maintained monolith, rigorously tested, might prove more resilient than a hundred microservices each claiming to be invulnerable. The field will learn – as it always does – that sometimes, the simplest solutions are the ones that survive.

Original article: https://arxiv.org/pdf/2601.10848.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-20 15:56