Author: Denis Avetisyan

This research details a new science gateway that streamlines post-disaster analysis by combining photogrammetry and machine learning techniques.

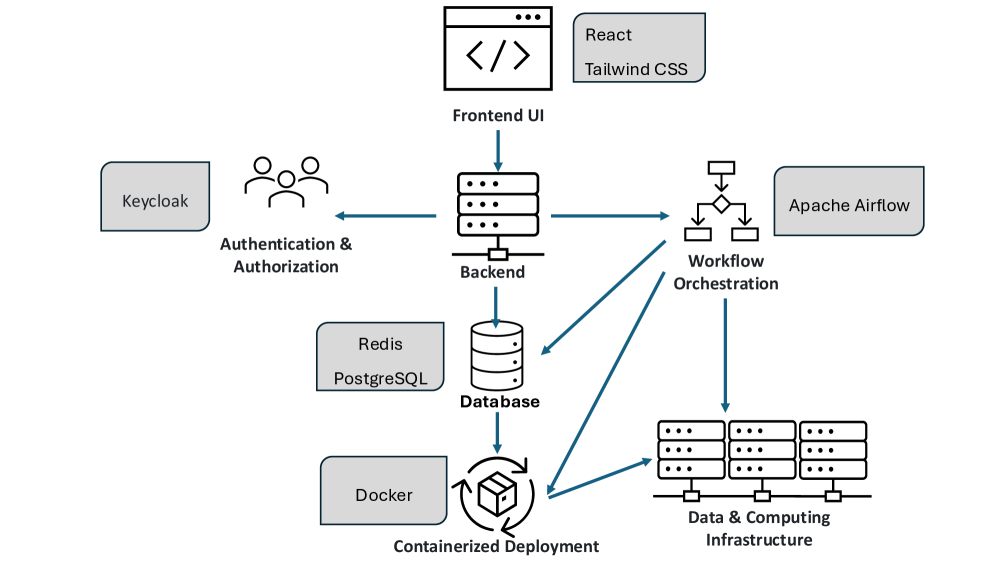

A portable, open-source workflow leveraging Airflow and cyberinfrastructure enables rapid, automated damage assessment for improved hazard management.

Increasingly frequent extreme weather events and seismic activity demand more rapid and effective damage assessment capabilities. This need is addressed in ‘Developing a Portable Solution for Post-Event Analysis Pipelines’, which presents a science gateway framework integrating photogrammetry, data visualization, and artificial intelligence for automated post-disaster analysis of aerial imagery. The resulting portable workflow enables faster evaluation of impacts on risk-exposed assets by streamlining traditionally complex analytical pipelines. Could such a system ultimately facilitate more proactive disaster response and resilience planning in vulnerable regions?

From Catastrophe to Calculation: The Imperative of Rapid Damage Assessment

In the immediate aftermath of a natural disaster, the speed with which damage is assessed directly impacts the efficacy of rescue efforts and the distribution of vital resources. Delays in understanding the scope of destruction can hinder search and rescue teams, impede the delivery of medical supplies, and exacerbate the suffering of affected populations. A rapid, accurate damage assessment allows relief organizations and government agencies to prioritize areas most in need, efficiently allocate personnel and funding, and ultimately save lives. This process isn’t merely about quantifying destruction; it’s about translating impacted areas into actionable intelligence, enabling a targeted and effective response that minimizes long-term consequences and facilitates a quicker path to recovery for communities facing devastation.

Post-disaster damage assessment has historically relied on ground-based teams physically surveying affected areas, a process inherently limited by accessibility and speed. These conventional methods are not only incredibly labor-intensive, demanding significant time and resources, but also struggle to provide the detailed, geographically precise data necessary for effective aid distribution. Consequently, response efforts often lack the spatial granularity needed to reach the most vulnerable populations or prioritize critical infrastructure repairs, leading to delays and inefficient resource allocation. The resulting broad-stroke understanding of damage hinders targeted assistance and prolongs the recovery period for communities impacted by natural disasters.

The advent of remote sensing technologies, especially aerial imagery captured by drones and aircraft, presents a transformative opportunity for post-disaster response. However, the sheer volume of data generated necessitates automated analytical techniques to be truly useful. Manually interpreting countless images is impractical given the urgent need for situational awareness; therefore, researchers are developing sophisticated algorithms, including machine learning models, capable of identifying damaged infrastructure, flooded areas, and displaced populations directly from aerial data. These automated systems not only accelerate the assessment process but also provide a level of spatial detail previously unattainable, enabling aid organizations to precisely target resources and maximize the impact of relief efforts. Ultimately, the full potential of aerial imagery lies not simply in data collection, but in the ability to rapidly and accurately translate that data into actionable intelligence.

Automated Damage Mapping: A Logical Progression

Deep Learning techniques facilitate automated damage assessment from aerial imagery by leveraging algorithms capable of pattern recognition and classification. These models are trained on extensive datasets of labeled images, enabling them to identify specific damage types – such as building collapse, flooding, or road blockage – directly from visual data. This contrasts with traditional manual analysis, which is time-consuming and resource-intensive. The automation provided by Deep Learning allows for rapid damage mapping following disaster events, supporting quicker and more effective response efforts. Current implementations utilize Convolutional Neural Networks (CNNs) to extract features from imagery and classify damage levels with increasing precision, often exceeding human performance in terms of speed and consistency.

Semantic segmentation is a pixel-level image analysis technique utilized in deep learning to categorize each pixel within an image. Unlike image classification, which assigns a single label to an entire image, or object detection which identifies and localizes objects with bounding boxes, semantic segmentation assigns a class label to every pixel. This results in a dense, per-pixel classification map, enabling the creation of detailed damage maps where each pixel is labeled with a specific damage type (e.g., no damage, minor damage, destroyed). The output is essentially a classification map overlaid on the original image, providing a granular understanding of the affected areas and facilitating precise damage assessment.
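
The per-pixel labeling described above can be illustrated with a minimal sketch. It assumes a model has already produced one score per class for every pixel; the class names come from the examples in the text, and the toy scores are invented for illustration rather than taken from any real model.

```python
# Hypothetical class list, borrowed from the examples in the text.
CLASSES = ["no damage", "minor damage", "destroyed"]


def segment(scores):
    """Turn per-pixel class scores into a per-pixel label map.

    scores[r][c] is a list holding one score per class for pixel (r, c).
    The label of a pixel is the index of its highest-scoring class.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]


# A toy 2x2 "image": every pixel carries three class scores (made-up values).
toy_scores = [
    [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1]],
    [[0.1, 0.2, 0.7], [0.6, 0.3, 0.1]],
]

label_map = segment(toy_scores)
named_map = [[CLASSES[i] for i in row] for row in label_map]
```

The result has the same height and width as the input, which is what distinguishes segmentation from whole-image classification: `named_map` holds one damage label per pixel instead of one label for the entire image.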

The 100 Layers Tiramisu model is a deep convolutional neural network architecture utilized for high-resolution semantic segmentation in damage mapping applications. Its design, featuring a dense connectivity pattern, facilitates efficient feature extraction and propagation, crucial for identifying subtle damage indicators in aerial or satellite imagery. Performance is significantly enhanced through training on datasets specifically curated for disaster response, such as FloodNet, which focuses on flood extent mapping, and RescueNet, containing imagery of various disaster types including building damage assessment. These datasets provide the necessary labeled examples for the model to learn and generalize, ultimately improving the accuracy of automated damage classification and mapping.
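
To make the dense connectivity pattern more concrete, the following sketch tracks only the number of feature maps flowing through a single dense block, where each layer receives the concatenation of everything produced before it. The function name and the numeric values are illustrative assumptions, not the configuration reported for the model.

```python
def dense_block_channels(in_channels, num_layers, growth_rate):
    """Return the input width seen by each layer and the block's output width.

    In a densely connected block, layer i reads the block input concatenated
    with the outputs of layers 0..i-1, then contributes growth_rate new maps.
    """
    widths = []
    channels = in_channels
    for _ in range(num_layers):
        widths.append(channels)   # this layer reads everything produced so far
        channels += growth_rate   # and appends growth_rate new feature maps
    return widths, channels


# Illustrative values only.
widths, out_channels = dense_block_channels(
    in_channels=48, num_layers=4, growth_rate=16
)
```

Because every layer can read the earlier feature maps directly, features are reused rather than recomputed, which is one reason such architectures can be deep while keeping each layer narrow.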

Effective training of deep learning models for damage mapping is fundamentally reliant on the availability of substantial, accurately labeled datasets. These datasets, comprising aerial or satellite imagery paired with precise annotations identifying damage types and extents, are computationally expensive to create and require significant human effort. The scale of labeling needed – often requiring pixel-level annotations for semantic segmentation – necessitates dedicated data curation pipelines and often benefits from community contributions. Publicly available datasets such as FloodNet and RescueNet are critical resources, facilitating model development and benchmarking, and enabling rapid deployment of damage assessment tools following disaster events. The performance of these models is directly correlated with the size and quality of the training data, emphasizing the need for continued investment in data collection and annotation efforts within disaster response frameworks.
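
Benchmarking against labeled data requires a score. The text does not name a metric, so the sketch below assumes per-class intersection-over-union (IoU), a common choice for semantic segmentation; the label maps are toy values.

```python
def iou_per_class(pred, truth, num_classes):
    """Compute intersection-over-union for each class over two label maps.

    Returns None for a class that appears in neither map.
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == t:
                inter[p] += 1
                union[p] += 1
            else:
                # A mismatched pixel enlarges the union of both classes.
                union[p] += 1
                union[t] += 1
    return [i / u if u else None for i, u in zip(inter, union)]


predicted = [[0, 1], [2, 0]]   # toy model output
annotated = [[0, 1], [2, 2]]   # toy ground-truth annotation
scores = iou_per_class(predicted, annotated, num_classes=3)
```

A single mislabeled pixel lowers the score of both the class that was predicted and the class that should have been, which is why annotation quality matters as much as dataset size.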

Orchestrating the Analytical Pipeline: A Systematic Approach

Effective post-event analysis relies on a holistic workflow extending beyond a solely trained machine learning model. Reproducibility is achieved through version control of all processing steps, dependencies, and data, enabling consistent results over time and across different execution environments. Scalability requires the workflow to be designed for parallel processing and distribution across computational resources, handling increasing data volumes and analysis demands. This necessitates infrastructure capable of dynamically allocating resources and managing task dependencies, ensuring timely insights are generated even during large-scale events. A robust workflow incorporates automated testing and validation procedures to maintain data quality and model accuracy throughout the entire pipeline.

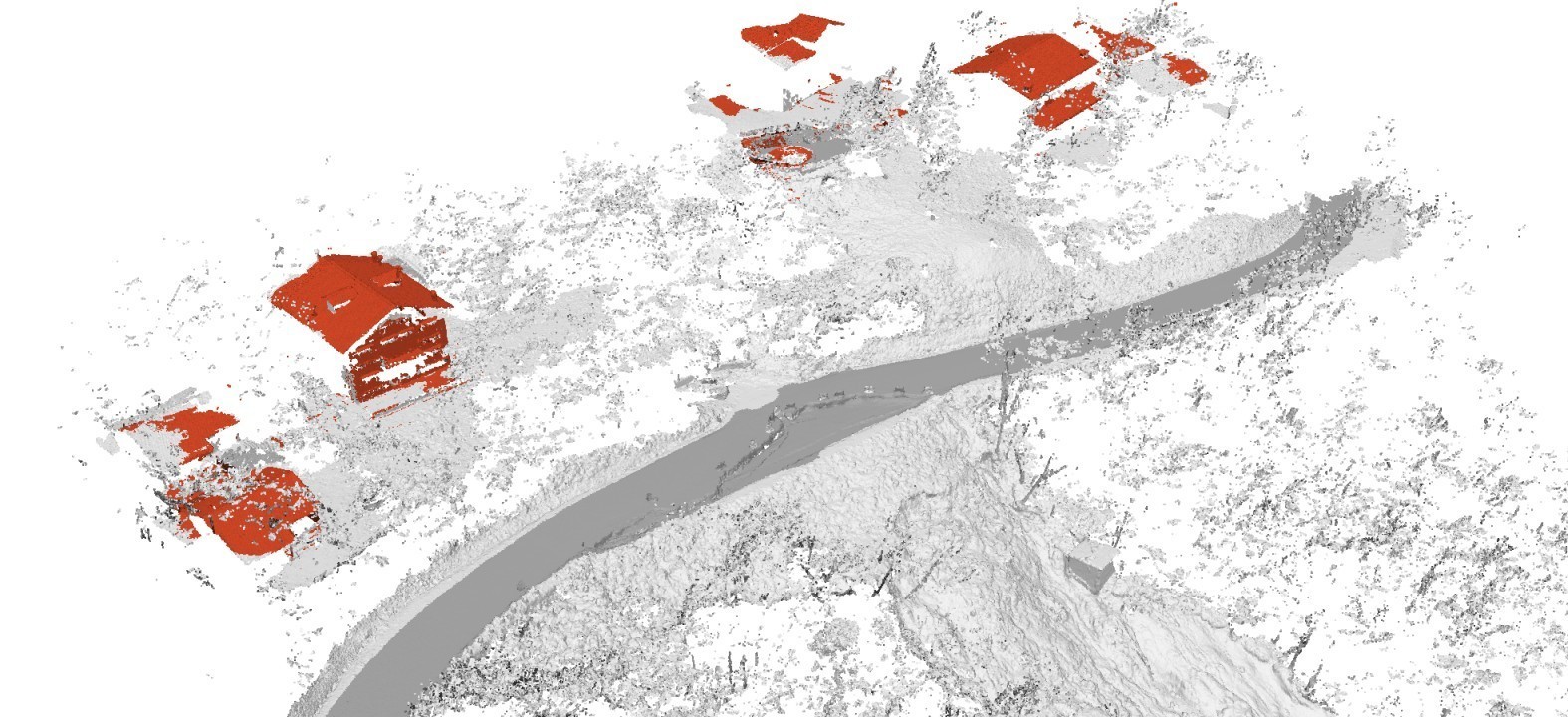

Photogrammetry, specifically employing Aerial Structure-from-Motion (ASfM) techniques, creates three-dimensional models from overlapping two-dimensional images captured aerially. This process involves identifying common points across multiple images and solving for the camera positions and 3D structure of the scene. The resulting 3D models provide a detailed contextual base for damage assessment by enabling accurate measurements of affected areas, volumetric calculations of debris, and visualization of structural changes. ASfM-derived models are particularly valuable in post-event analysis as they offer a geometrically accurate representation of the impacted environment, exceeding the information available from traditional two-dimensional imagery or manual surveys.
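
The geometric core of this step can be sketched with a simplified two-view triangulation. A full Structure-from-Motion solver also estimates the camera poses; here the camera centers and viewing rays are assumed to be known, and the 3D point is taken as the midpoint of the shortest segment between the two rays. All coordinates are invented for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two viewing rays.

    Ray k starts at camera center ck and points along direction dk.
    The estimate is the midpoint of the closest points on the two rays.
    """
    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom   # parameter along ray 1
    t = (a * e - b * d) / denom   # parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two hypothetical camera positions observing the same ground feature.
target = [2.0, 3.0, 10.0]
cam1, cam2 = [0.0, 0.0, 0.0], [5.0, 0.0, 0.0]
ray1 = [t - c for t, c in zip(target, cam1)]
ray2 = [t - c for t, c in zip(target, cam2)]

point = triangulate_midpoint(cam1, ray1, cam2, ray2)
```

Repeating this for thousands of matched features yields the point cloud from which widths, lengths, and volumes can be measured.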

Apache Airflow functions as a workflow management platform utilizing Directed Acyclic Graphs (DAGs) to define and execute complex sequences of tasks. These DAGs represent the entire post-event analysis pipeline, beginning with data ingestion from various sources – including imagery and metadata – and progressing through pre-processing, model execution, and ultimately, the delivery of insights. Each node within the DAG represents a specific task, and dependencies between nodes define the execution order. Airflow monitors task status, handles retries upon failure, and provides a centralized interface for managing and scaling the entire process, ensuring a reproducible and automated workflow from initial data input to final model output.
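
The scheduling idea can be sketched without Airflow itself. The example below uses only the Python standard library (`graphlib`) to run tasks in dependency order and retry a failed task; it is not Airflow's API, and the task names are hypothetical stand-ins for the pipeline stages described above.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each maps to the set of tasks it depends on.
PIPELINE = {
    "ingest_imagery": set(),
    "photogrammetry": {"ingest_imagery"},
    "damage_segmentation": {"ingest_imagery"},
    "publish_results": {"photogrammetry", "damage_segmentation"},
}


def run_pipeline(dag, actions, max_retries=1):
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    completed = []
    for task in TopologicalSorter(dag).static_order():
        for attempt in range(max_retries + 1):
            try:
                actions[task]()
                completed.append(task)
                break
            except Exception:
                if attempt == max_retries:
                    raise   # retries exhausted: surface the failure
    return completed


# Simulate one transient failure to exercise the retry path.
attempts = {"photogrammetry": 0}


def flaky_photogrammetry():
    attempts["photogrammetry"] += 1
    if attempts["photogrammetry"] == 1:
        raise RuntimeError("transient failure")


actions = {name: (lambda: None) for name in PIPELINE}
actions["photogrammetry"] = flaky_photogrammetry

order = run_pipeline(PIPELINE, actions)
```

Declaring dependencies as data rather than as call order is the design choice that matters here: the scheduler, not the author of each task, decides what may run next and what must be retried.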

Docker containers are utilized to package the post-event analysis workflow, including all dependencies – libraries, tools, and the trained machine learning model – into a standardized unit for deployment. This encapsulation guarantees consistent execution across diverse computing environments, eliminating discrepancies caused by differing system configurations. Complementing this, a Science Gateway serves as a centralized platform for accessing and managing the Dockerized workflow. This gateway provides user authentication, authorization, and resource allocation, enabling controlled access to the analysis pipeline and facilitating collaborative research or operational deployment. It abstracts the underlying infrastructure complexity, allowing users to focus on data input and result interpretation without needing to manage container orchestration or server configurations.

PLEIADI Infrastructure: Computational Foundation for Rapid Response

The PLEIADI Infrastructure, operated by the National Institute for Astrophysics (INAF), serves as the computational backbone for rapidly processing data following a disaster event. This high-performance computing facility doesn’t simply offer raw power; it’s specifically configured to handle the demands of complex post-event analysis workflows. These workflows often involve processing massive datasets – including aerial and satellite imagery – to identify damage, assess risks, and support emergency response. PLEIADI’s architecture allows for the parallel processing of these datasets, dramatically reducing the time needed to generate critical information for decision-makers. By providing the necessary computational muscle, the infrastructure transforms raw data into actionable intelligence, enabling a more effective and timely response to natural disasters and other crises.

The sheer volume of aerial imagery generated during rapid disaster assessment demands a storage solution capable of both capacity and speed, and the PLEIADI infrastructure utilizes the BeeGFS parallel file system to meet this challenge. Unlike traditional storage methods, BeeGFS distributes data across multiple servers, enabling simultaneous access and dramatically increasing throughput. This architecture is crucial for processing extensive datasets – often terabytes in size – allowing researchers to quickly analyze imagery for damage assessment and identify areas requiring immediate attention. By minimizing data access bottlenecks, BeeGFS ensures that computational resources are fully utilized, accelerating the entire post-disaster analysis workflow and facilitating timely response efforts.
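
The idea of distributing one file across several servers can be illustrated with a toy striping scheme. This is a simplified model of striping in general, not the file system's actual implementation; the chunk size and target count are arbitrary illustrative values.

```python
def stripe(data, num_targets, chunk_size):
    """Split data into fixed-size chunks and assign them round-robin to targets."""
    targets = [[] for _ in range(num_targets)]
    for offset in range(0, len(data), chunk_size):
        index = (offset // chunk_size) % num_targets
        targets[index].append(data[offset:offset + chunk_size])
    return targets


def reassemble(targets):
    """Read chunks back in round-robin order to recover the original bytes."""
    out = []
    depth = max(len(t) for t in targets)
    for level in range(depth):
        for target in targets:
            if level < len(target):
                out.append(target[level])
    return b"".join(out)


payload = bytes(range(10))   # stand-in for a large image file
layout = stripe(payload, num_targets=3, chunk_size=2)
restored = reassemble(layout)
```

Because consecutive chunks live on different targets, a large sequential read can be served by several servers at once, which is the source of the throughput gain described above.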

The efficient processing of extensive aerial imagery following a disaster demands a sophisticated workload management system, and the PLEIADI infrastructure relies on SLURM to deliver this capability. SLURM – the Simple Linux Utility for Resource Management – dynamically allocates computational resources, prioritizing and scheduling tasks to maximize the utilization of PLEIADI’s high-performance computing facility. This ensures that processing requests, such as those involved in damage assessment or landslide analysis, are handled swiftly and effectively, even when multiple analyses are initiated concurrently. By intelligently distributing the computational burden, SLURM prevents bottlenecks and maintains optimal performance, ultimately enabling a more rapid and comprehensive understanding of the post-disaster situation.
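
As a rough illustration of how such work might be handed to the scheduler, the sketch below generates the text of a batch script that runs one array task per image tile. The `#SBATCH` options are standard SLURM directives, but the job name, the `segment_tile.py` command, its `--tile` flag, and the resource values are hypothetical and not taken from the paper.

```python
def make_sbatch_script(job_name, command, num_tiles,
                       cpus_per_task=4, time_limit="01:00:00"):
    """Build the text of a SLURM batch script with one array task per tile."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --array=0-{num_tiles - 1}",        # one task per tile
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        f"#SBATCH --time={time_limit}",
        "#SBATCH --output=%x_%A_%a.log",             # job name, job ID, task index
        "",
        # Each array task learns its tile index from the environment.
        f'{command} --tile "$SLURM_ARRAY_TASK_ID"',
    ]
    return "\n".join(lines) + "\n"


script = make_sbatch_script(
    "damage-map", "python segment_tile.py", num_tiles=8
)
```

Writing the returned text to a file and passing it to `sbatch` would queue the eight tasks; the scheduler then decides when and where each one runs.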

The PLEIADI infrastructure was validated through its application to the Tredozio Survey, a real-world test of the entire post-disaster analysis workflow. This validation exercise successfully processed aerial imagery to facilitate a detailed assessment of a significant landslide event. Measurements derived from the analysis revealed the landslide to be approximately 30 meters wide and 110 meters deep, demonstrating the system’s capacity not only to function operationally, but also to provide quantitative data crucial for understanding and responding to natural disasters. The Tredozio Survey therefore supports PLEIADI’s readiness for rapid and detailed analysis following catastrophic events, bridging the gap between data acquisition and actionable insights.

The pursuit of a portable solution for post-event analysis, as detailed in this work, echoes a fundamental principle of computational correctness. One might recall a maxim attributed to Alan Turing: “There is no substitute for a good algorithm.” This holds true as the described science gateway integrates photogrammetry and machine learning, both of which rely on algorithms demanding rigorous validation. The system isn’t merely designed to ‘work on tests’ but to provide a provable, repeatable pipeline for hazard management. The emphasis on automated damage assessment, facilitated by Airflow and cyberinfrastructure, reflects a commitment to precision: a solution built upon a mathematically sound foundation, rather than empirical observation alone. Such a focus on algorithmic purity ensures the reliability demanded by critical applications.

The Horizon of Automated Insight

The presented confluence of photogrammetry, machine learning, and cyberinfrastructure represents a functional, if not entirely elegant, step toward automated post-event analysis. However, the true challenge does not lie in merely producing damage assessments, but in their inherent verifiability. Current methods, reliant as they are on trained algorithms, offer correlation, not necessarily truth. The field must now grapple with establishing formal proofs of accuracy, moving beyond empirical validation on test datasets toward provable reliability, a metric far more difficult to achieve, but infinitely more valuable.

A critical limitation remains the dependence on sufficiently high-resolution imagery. The elegance of any algorithm is diminished when shackled to imperfect data. Future work should explore methods for robust reconstruction from sparse or noisy inputs, perhaps leveraging techniques from information theory to quantify and minimize uncertainty. Furthermore, the current pipeline, while portable, lacks inherent adaptability. A truly robust system must not only assess damage but also learn from each event, refining its models and improving its predictive capabilities.

Ultimately, the pursuit of automated insight is not merely a technological endeavor, but a philosophical one. The goal is not to replace human judgment entirely, but to augment it with a system capable of providing demonstrably accurate and verifiable information: a system built on the foundations of mathematical rigor, not simply empirical success. The path forward demands a commitment to provability, even (and especially) when it proves inconvenient.

Original article: https://arxiv.org/pdf/2602.01798.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-03 23:08