Author: Denis Avetisyan

New research reveals how data analysis can pinpoint the key individuals and automated accounts driving the proliferation of misinformation on X.

This study identifies and compares the influence metrics of human and bot accounts spreading conspiracy theories, utilizing an adapted H-index to reveal distinct behavioral patterns.

Despite growing concern over the societal impact of misinformation, effectively identifying the key drivers of online conspiracy theories remains a significant challenge. This research, ‘Unmasking Superspreaders: Data-Driven Approaches for Identifying and Comparing Key Influencers of Conspiracy Theories on X.com’, addresses this gap by analyzing over seven million tweets related to the COVID-19 pandemic to differentiate between human ‘superspreaders’ and automated bots. The analysis reveals distinct communication strategies: humans favor complex language and substantive content, while bots prioritize accessibility through simpler language and hashtag usage. The study also introduces 27 novel metrics, including an adapted H-index, for quantifying and identifying these actors. Could these data-driven insights provide a foundation for more effective platform moderation and public awareness campaigns aimed at mitigating the spread of harmful misinformation?

The Infodemic: How Fear and Algorithms Fuel Conspiracy

The COVID-19 pandemic dramatically accelerated the spread of conspiracy theories across social media platforms, creating an environment where misinformation flourished. Existing anxieties surrounding health, economic stability, and governmental responses provided a receptive audience for unsubstantiated claims. Social media’s algorithmic structures, designed to maximize engagement, inadvertently amplified these narratives, prioritizing sensational content over verified facts. This confluence of factors – widespread fear, pre-existing distrust, and platform mechanics – transformed isolated pockets of conspiratorial thought into rapidly disseminating online movements, demonstrating how a public health crisis could simultaneously become an infodemic of false and misleading information. The speed and scale of this proliferation presented unprecedented challenges for fact-checkers, public health officials, and social media companies alike, highlighting the vulnerability of online information ecosystems to manipulation during times of crisis.

During the recent pandemic, unsubstantiated theories gained remarkable traction, circulating widely despite a clear absence of supporting evidence. This rapid dissemination wasn’t simply random; it was powerfully propelled by pre-existing negative emotions and a pervasive lack of trust in established institutions. Analyses reveal that content evoking fear, anger, or anxiety – often paired with accusations against authorities or experts – consistently outperformed factual reporting in terms of shares and engagement. This suggests that individuals already predisposed to distrust were particularly susceptible to these narratives, and that emotional resonance functioned as a key driver in their spread, effectively bypassing critical evaluation and reinforcing existing biases. The result was a self-perpetuating cycle where unsubstantiated claims, amplified by negative sentiment, gained an outsized presence in the digital information landscape.

Identifying the individuals and groups most responsible for disseminating disinformation during crises proves remarkably difficult for conventional analytical techniques. Existing approaches, often reliant on keyword analysis or broad network mapping, struggle to differentiate between genuine grassroots movements and coordinated disinformation campaigns, or to pinpoint the original sources of false narratives. This limitation hinders effective intervention, as simply removing content fails to address the underlying network of actors and motivations driving the spread of falsehoods. Furthermore, the anonymity afforded by online platforms and the increasing sophistication of ‘bot’ networks complicate attribution, allowing malicious actors to evade detection and continue propagating misleading information. Consequently, researchers are exploring advanced computational methods – including natural language processing and machine learning – to better understand the dynamics of disinformation and identify key influencers before false narratives take root and impact public health or societal stability.

Pinpointing the Spreaders: Humans and Bots in the Echo Chamber

Human Superspreaders were identified through the calculation of Engagement Score and Normalized Engagement Score. Engagement Score represents the total number of likes, retweets, and replies a user’s content receives, providing a raw measure of interaction. However, to account for varying follower counts, Normalized Engagement Score was calculated by dividing the Engagement Score by the number of followers, yielding a proportional metric. This normalization process allowed for the identification of users with high engagement relative to their audience size, effectively pinpointing individuals whose content disproportionately influenced network-wide conversation, even with comparatively fewer followers than other prominent accounts. Both metrics were used in conjunction to validate and refine the identification of key human disseminators of information within the network.
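A minimal Python sketch of the two engagement metrics follows, assuming the Engagement Score is the plain sum of likes, retweets, and replies and that the normalized score divides it by follower count, as described above. The `Account` type, the example handles, and the zero-follower guard are hypothetical illustrations, not the study's code.

```python
from dataclasses import dataclass


@dataclass
class Account:
    handle: str
    followers: int
    likes: int
    retweets: int
    replies: int


def engagement_score(acct: Account) -> int:
    """Raw interaction count: likes + retweets + replies received."""
    return acct.likes + acct.retweets + acct.replies


def normalized_engagement_score(acct: Account) -> float:
    """Engagement Score per follower.

    Assumption: max(1, followers) guards against division by zero; the
    article does not say how zero-follower accounts were handled.
    """
    return engagement_score(acct) / max(1, acct.followers)


def top_accounts(accounts, metric, n):
    """Handles of the n accounts with the highest value of `metric`."""
    return [a.handle for a in sorted(accounts, key=metric, reverse=True)[:n]]


# Hypothetical accounts for illustration only.
accounts = [
    Account("big_broadcaster", followers=1_000_000, likes=40_000, retweets=8_000, replies=2_000),
    Account("small_amplifier", followers=5_000, likes=6_000, retweets=3_000, replies=1_000),
    Account("quiet_account", followers=20_000, likes=500, retweets=100, replies=50),
]

print(top_accounts(accounts, engagement_score, 1))             # ['big_broadcaster']
print(top_accounts(accounts, normalized_engagement_score, 1))  # ['small_amplifier']
```

With these made-up numbers, the raw score ranks the large broadcaster first, while the normalized score surfaces the small account whose engagement far exceeds its audience size, which is the kind of disproportionate influence the normalization is meant to reveal.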

Botometer, a widely used bot-detection tool, was employed to identify accounts whose behavior indicates automation. The analysis focused on “Bot Spreaders”: automated accounts that actively and strategically amplify conspiracy-related content. Botometer assesses account characteristics and activity patterns, including account age, posting frequency, network characteristics, and content duplication, to generate a bot score. Accounts exceeding a predetermined threshold were flagged as potential bots, which made it possible to identify coordinated amplification efforts and the disproportionate spread of misinformation by non-human actors within the observed network.
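The flagging step can be sketched as a simple filter over precomputed scores. This sketch does not call Botometer: it assumes bot scores have already been retrieved and expressed on a 0 to 1 scale, and both the 0.5 threshold and the minimum number of conspiracy-related tweets used to stand in for "actively amplifying" are hypothetical placeholders, since the article does not report the values used.

```python
# Hypothetical parameters: the article does not state the threshold it used.
BOT_THRESHOLD = 0.5          # flag accounts whose bot score exceeds this value
MIN_CONSPIRACY_TWEETS = 10   # assumed proxy for "actively" amplifying content


def flag_bot_spreaders(bot_scores, conspiracy_counts,
                       threshold=BOT_THRESHOLD, min_tweets=MIN_CONSPIRACY_TWEETS):
    """Return sorted handles that look automated AND post conspiracy content.

    bot_scores: {handle: score in [0, 1]}, assumed precomputed (e.g. via Botometer)
    conspiracy_counts: {handle: number of conspiracy-related tweets}
    """
    return sorted(
        handle
        for handle, score in bot_scores.items()
        if score > threshold and conspiracy_counts.get(handle, 0) >= min_tweets
    )


# Made-up example data.
bot_scores = {"acct_a": 0.92, "acct_b": 0.31, "acct_c": 0.77}
conspiracy_counts = {"acct_a": 140, "acct_b": 300, "acct_c": 3}

print(flag_bot_spreaders(bot_scores, conspiracy_counts))  # ['acct_a']
```

Requiring both conditions separates automated accounts in general from those that actually push conspiracy-related content; lowering `min_tweets` to 1 would also flag `acct_c`.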

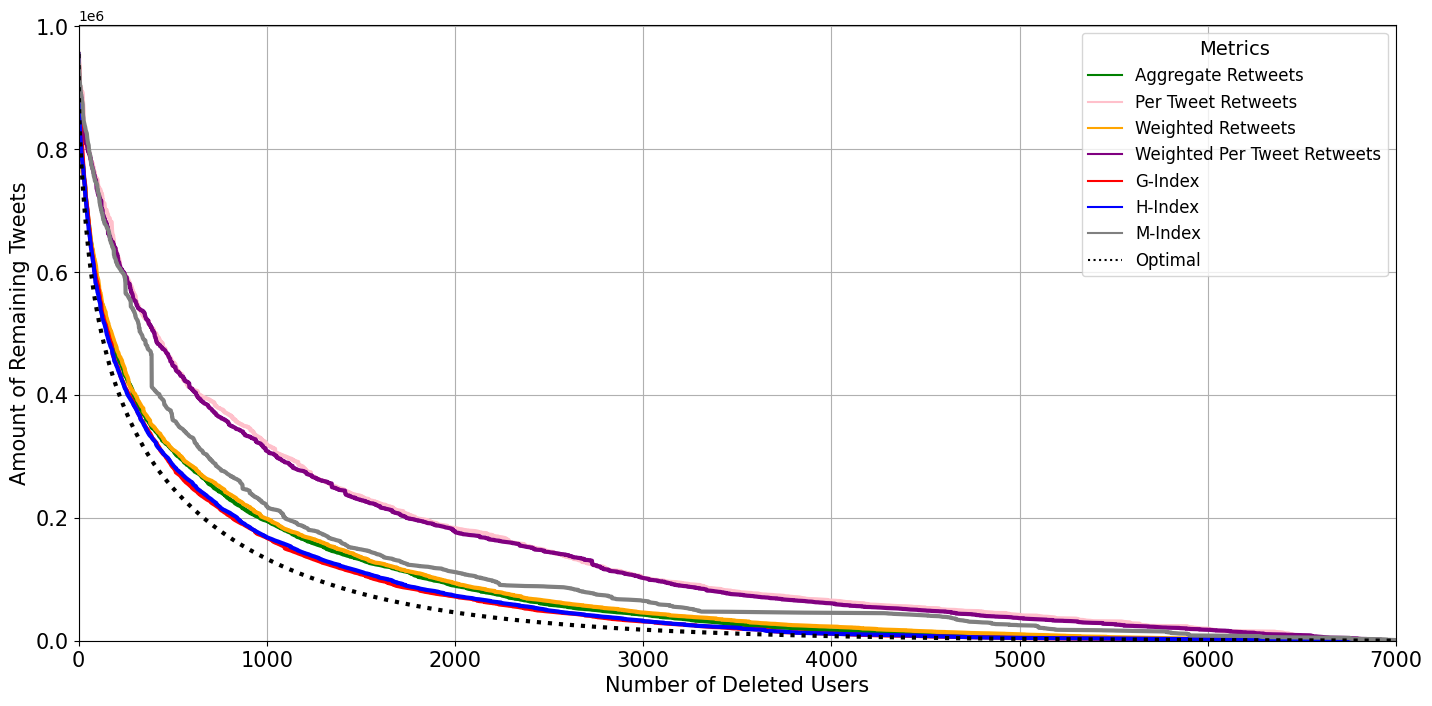

The H-index proved highly effective at identifying key disseminators of conspiracy-related content, enabling the removal of 12.35% of such tweets through the suspension of only 0.1% of user accounts. Together with the G-index, it offers a more robust alternative to relying solely on follower counts for identifying influential spreaders. The H-index is borrowed from bibliometrics, with tweets playing the role of publications and the engagements they receive (retweets, likes, replies) playing the role of citations. In the standard form that this adaptation builds on, an account has index h if h of its tweets have each received at least h engagements; the G-index instead gives extra weight to highly engaged tweets, being the largest g such that the account's top g tweets together received at least g² engagements. Because a high H-index requires many well-engaged tweets, the metric favors accounts with consistently high engagement over those with large but inactive followings. This allows targeted intervention, minimizing collateral impact on legitimate users while maximizing the removal of harmful content.
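The sketch below computes both indices from per-tweet engagement counts, using the standard bibliometric definitions with engagements standing in for citations. The study's adapted H-index may differ in detail (for example, in which interactions it counts), and the `removal_share` helper, which estimates the share of tweets removed by suspending the top-ranked accounts, is a hypothetical illustration of the suspension experiment rather than the authors' procedure.

```python
import math


def h_index(engagements):
    """Largest h such that h tweets each received at least h engagements."""
    h = 0
    for rank, count in enumerate(sorted(engagements, reverse=True), start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def g_index(engagements):
    """Largest g (capped at the number of tweets) such that the top g tweets
    together received at least g**2 engagements."""
    g, total = 0, 0
    for rank, count in enumerate(sorted(engagements, reverse=True), start=1):
        total += count
        if total >= rank * rank:
            g = rank
    return g


def removal_share(tweets_by_account, fraction):
    """Share of all tweets removed if the top `fraction` of accounts,
    ranked by h-index, were suspended (always at least one account)."""
    ranked = sorted(tweets_by_account,
                    key=lambda acct: h_index(tweets_by_account[acct]),
                    reverse=True)
    k = max(1, math.ceil(fraction * len(ranked)))
    removed = sum(len(tweets_by_account[acct]) for acct in ranked[:k])
    total = sum(len(tweets) for tweets in tweets_by_account.values())
    return removed / total


# Hypothetical per-tweet engagement counts for four accounts.
tweets_by_account = {
    "consistent": [10, 8, 5, 4, 3],
    "one_hit": [500],
    "low": [1, 0, 0, 2],
    "quiet": [0, 0],
}

print(h_index(tweets_by_account["consistent"]))         # 4
print(g_index(tweets_by_account["consistent"]))         # 5
print(h_index(tweets_by_account["one_hit"]))            # 1
print(round(removal_share(tweets_by_account, 0.25), 4))  # 0.4167
```

The single viral tweet of `one_hit` yields an h-index of only 1, while `consistent` scores 4; this is the property the text relies on when it says the metric rewards sustained engagement rather than one-off reach or audience size.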

Decoding the Message: Sentiment, Toxicity, and the Roots of Division

Negative sentiment in the dataset was quantified with VADER (Valence Aware Dictionary and sEntiment Reasoner), a lexicon- and rule-based sentiment analysis tool, supplemented by Ekman’s Basic Emotions (anger, disgust, fear, happiness, sadness, and surprise) for a more nuanced reading of the emotional content. VADER assigns each message a compound sentiment score ranging from -1 (most negative) to +1 (most positive), enabling a standardized comparison of human-authored and bot-generated content. The tool weights sentiment-laden words and phrases and adjusts for contextual cues such as capitalization, degree modifiers (e.g., ‘extremely’), negation, and punctuation to determine the overall emotional valence of each message.
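To make the -1 to +1 range concrete, the sketch below reproduces the normalization VADER applies to its summed word valences, x / sqrt(x² + α) with α = 15 (the default in the `vaderSentiment` source), together with the ±0.05 cutoffs that the VADER documentation suggests for labeling a compound score positive, neutral, or negative. It omits VADER's lexicon and contextual rules, and the article does not say which cutoffs the study applied, so treat the thresholds as an assumption.

```python
import math


def vader_normalize(raw_sum: float, alpha: float = 15.0) -> float:
    """Squash a summed valence score into [-1, 1] as VADER's compound score does."""
    norm = raw_sum / math.sqrt(raw_sum * raw_sum + alpha)
    return max(-1.0, min(1.0, norm))


def sentiment_label(compound: float, cutoff: float = 0.05) -> str:
    """Label a compound score using the commonly recommended +/-0.05 cutoffs (assumed)."""
    if compound >= cutoff:
        return "positive"
    if compound <= -cutoff:
        return "negative"
    return "neutral"


for raw in (-3.1, 0.0, 1.0):
    score = vader_normalize(raw)
    print(raw, round(score, 3), sentiment_label(score))
# -3.1 -0.625 negative
# 0.0 0.0 neutral
# 1.0 0.25 positive
```

Because the denominator grows with the raw sum, long strings of strongly negative words push the compound score toward -1 without ever exceeding it, which is what makes scores comparable across short and long messages.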

Content shared by the identified Human Superspreaders was mostly negative: 58% of it was classified as expressing negative sentiment, as quantified by VADER and interpreted with reference to Ekman’s Basic Emotions. This proportion exceeds the levels observed for other account types in the dataset and indicates a strong tendency among these influential human actors to share content characterized by anger, fear, sadness, or other negative affective states. The metric was used to characterize the overall emotional tone of content originating from these accounts and contributed to the broader assessment of messaging patterns.
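The 58% figure is a per-group proportion. A minimal sketch of that computation follows, assuming each post has already been reduced to a VADER compound score and tagged with its author's account type, and assuming a -0.05 negative cutoff (the study's exact cutoff is not stated). The example data are invented and do not reproduce the study's numbers.

```python
from collections import defaultdict

NEGATIVE_CUTOFF = -0.05  # assumed cutoff, following the usual VADER convention


def negative_share(posts):
    """posts: iterable of (account_type, compound_score) pairs.

    Returns {account_type: fraction of that type's posts scored as negative}.
    """
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for account_type, compound in posts:
        totals[account_type] += 1
        if compound <= NEGATIVE_CUTOFF:
            negatives[account_type] += 1
    return {t: negatives[t] / totals[t] for t in totals}


# Invented example posts.
posts = [
    ("human", -0.6), ("human", -0.3), ("human", 0.4), ("human", -0.1), ("human", 0.0),
    ("bot", -0.5), ("bot", 0.2), ("bot", 0.1), ("bot", 0.0),
]
print(negative_share(posts))  # {'human': 0.6, 'bot': 0.25}
```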

The analysis also measured the toxicity of content produced by the identified influencers and related it to their political orientation. Bot Spreaders had a mean toxicity score of 0.25, compared with 0.22 for Human Superspreaders, indicating a somewhat higher prevalence of potentially offensive or harmful language among automated accounts. In other words, within the studied dataset, bots were more likely to generate content flagged as toxic by the analysis tools. Correlating these toxicity scores with the influencers’ identified political leanings revealed specific patterns in the types of toxic language used across different political alignments.
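Comparing mean toxicity across account types, and then across account type and political leaning, is a grouped average. The sketch below assumes each post already carries a toxicity score in [0, 1] from some classifier (the article does not name the tool) plus labels for account type and leaning; the field names and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_toxicity(rows, keys):
    """Average the 'toxicity' field of `rows` grouped by the fields in `keys`.

    rows: iterable of dicts, each with a 'toxicity' score plus grouping fields.
    Returns {group_tuple: mean toxicity}.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row["toxicity"])
    return {group: mean(scores) for group, scores in groups.items()}


# Hypothetical scored posts.
rows = [
    {"account_type": "bot", "leaning": "right", "toxicity": 0.30},
    {"account_type": "bot", "leaning": "right", "toxicity": 0.20},
    {"account_type": "bot", "leaning": "left", "toxicity": 0.10},
    {"account_type": "human", "leaning": "left", "toxicity": 0.20},
    {"account_type": "human", "leaning": "right", "toxicity": 0.40},
]

by_type = mean_toxicity(rows, ["account_type"])
by_type_and_leaning = mean_toxicity(rows, ["account_type", "leaning"])
print(by_type)
print(by_type_and_leaning)
```

Breaking the averages down by both fields is what allows a modest overall gap between account types to be traced to particular combinations of account type and political alignment.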

The Echo Chamber Effect: Implications for Discourse and Intervention

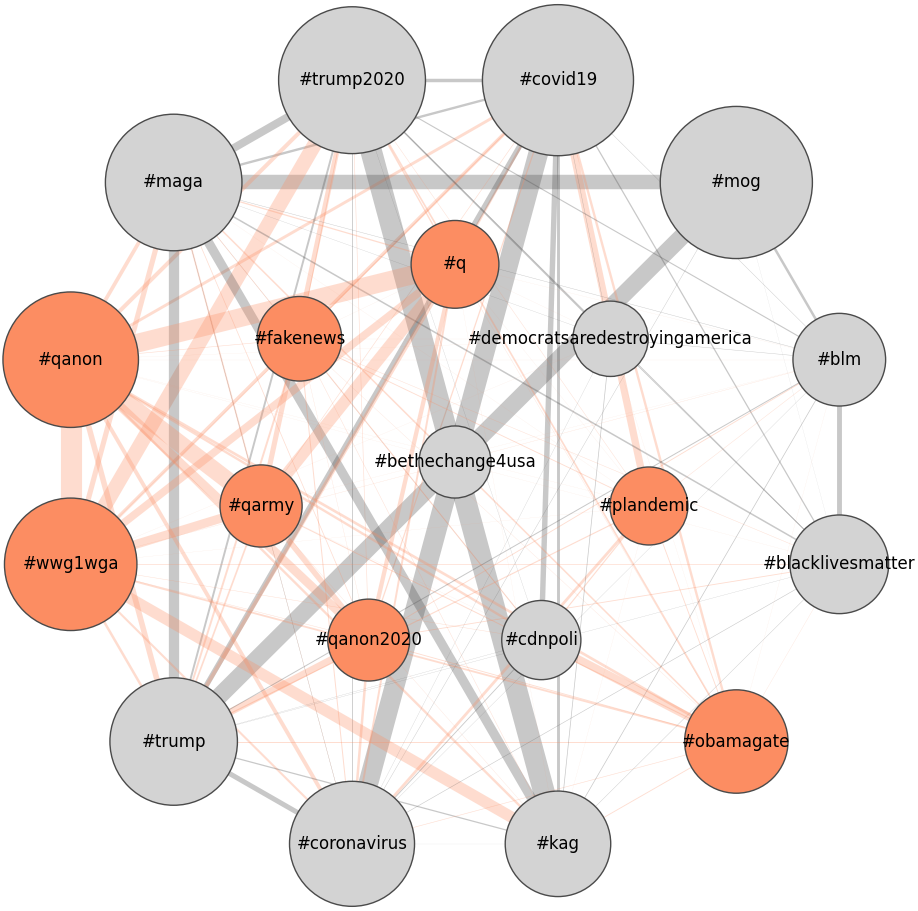

The dissemination of conspiracy theories isn’t simply a matter of individual belief, but rather a dynamic interplay between human users and automated accounts. Research indicates a complex ecosystem where both contribute actively to the propagation of unsubstantiated claims. Human ‘superspreaders’ share narratives with their networks, while automated ‘bot spreaders’ amplify those messages, extending their reach and creating an illusion of widespread support. This synergy significantly accelerates the velocity and volume of disinformation, exceeding what could be achieved through organic means alone. The study highlights how these actors aren’t isolated entities, but interconnected components within a larger network, suggesting that interventions must address both human motivations and the technical mechanisms driving automated amplification to effectively counter the spread of false information.

Beyond false beliefs, the proliferation of conspiracy theories is fueled by the convergence of emotional appeals, partisan positioning, and calculated dissemination strategies. Research indicates that content designed to provoke strong emotional responses, often fear or outrage, gains traction when aligned with pre-existing political ideologies. This combination is rarely purely organic: it is frequently amplified through coordinated efforts, including automated accounts and strategic sharing networks, creating an echo chamber effect. The result is a systematic erosion of public trust in established institutions and vital public health initiatives, as emotionally resonant, politically aligned disinformation overwhelms evidence-based information and hinders effective communication during crises.

Research indicates a notable asymmetry in the spread of conspiracy theories online: automated accounts, or “bot spreaders,” predominantly spread right-leaning content, whereas human “superspreaders” exhibit a more balanced distribution across the political spectrum. Consequently, effective interventions against disinformation require a nuanced approach, and broad-stroke strategies may prove insufficient. Targeted fact-checking initiatives, media literacy programs tailored to specific ideological vulnerabilities, and increased platform accountability, particularly concerning amplification by right-leaning automated accounts, are essential to mitigate the erosion of trust in vital institutions and public health messaging. Addressing this imbalance is crucial for fostering a more informed and resilient public discourse.

The pursuit of quantifying influence, as this research demonstrates with its adapted H-index, feels less like innovation and more like meticulously documenting the inevitable. It’s a grim exercise in measuring decay. The study carefully delineates the behavioral differences between human ‘superspreaders’ and automated bot accounts, yet the underlying principle remains constant: information, true or false, finds its vectors. As Edsger W. Dijkstra observed, “It’s always possible to do things wrong.” This research simply provides a slightly more detailed map of how things go wrong, identifying the actors, human or machine, who accelerate the process. One can build sophisticated metrics, but the system will always yield to the chaotic force of flawed information and its dedicated purveyors.

Sooner or Later, It All Breaks

This exercise in quantifying the spread of unsubstantiated claims (identifying “superspreaders,” as if that term hadn’t already seen enough use) feels predictably Sisyphean. The adapted H-index offers a neat way to categorize accounts, distinguishing between human-driven amplification and the relentless churn of bots. But let’s be honest, the bots will adapt. They always do. The metrics will require constant recalibration, a never-ending game of whack-a-mole against increasingly sophisticated automation. It’s a bit like building a sandcastle knowing the tide is coming in.

The truly interesting question isn’t who spreads the theories, but why. This work, like so much data science, focuses on the ‘what’ and largely ignores the ‘why.’ Understanding the underlying psychological and sociological vulnerabilities that make individuals susceptible to misinformation remains the real, messy, and likely intractable problem. A perfectly accurate algorithm identifying superspreaders won’t magically inoculate against irrationality.

One imagines that future archaeologists, sifting through the digital ruins of X.com, will marvel at the sheer volume of nonsense and the ingenuity with which it was propagated. They’ll likely conclude that, despite all the clever tooling, humanity spent a lot of effort perfecting ways to shout into the void. And if a system crashes consistently, at least it’s predictable. We don’t write code; we leave notes for digital archaeologists.

Original article: https://arxiv.org/pdf/2602.04546.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-06 00:04