Author: Denis Avetisyan

Researchers have developed a generative model capable of producing remarkably realistic magnetoencephalography (MEG) signals, opening new avenues for understanding and simulating brain activity.

FlatGPT, a Transformer-based model paired with a specialized tokenizer, generates MEG data that stays stable over long horizons and remains specific to the context it is conditioned on.

Generating realistic, temporally extended brain signals remains a significant challenge, not least because neuroimaging data are so complex. ‘Scaling Next-Brain-Token Prediction for MEG’ addresses this with FlatGPT, a generative model that tokenizes magnetoencephalography (MEG) data and uses a Transformer architecture for long-horizon prediction. The model stably generates minutes of MEG activity from limited context, and its output is both temporally consistent and neurophysiologically plausible across datasets. Could this approach open new avenues for understanding brain dynamics and for building more robust brain-computer interfaces?

Decoding the Neural Symphony: From Signal to Insight

Magnetoencephalography (MEG) charts brain activity with exceptional temporal precision, detecting changes in the magnetic fields generated by neural currents at millisecond resolution. That strength comes with a significant challenge: raw MEG data are complex and noisy. The brain’s magnetic signals are extremely faint and easily obscured by physiological artifacts, such as those from the heartbeat and eye movements, and by external electromagnetic interference. Substantial processing is therefore needed to isolate meaningful neural activity. Filtering and artifact-removal algorithms raise the signal-to-noise ratio, and source-reconstruction methods estimate where in the brain the signals originate, turning a noisy stream of sensor readings into an interpretable map of neural activity.

Conventional analysis techniques often fall short when deciphering the intricate patterns within MEG signals, largely because they treat brain activity as isolated events rather than interconnected processes. The brain, however, functions through complex, distributed networks in which seemingly disparate regions collaborate in real time. Traditional methods, such as averaging signals across trials or focusing on specific frequency bands, can obscure these relationships and yield an incomplete or inaccurate picture of cognitive function. This limits the development of reliable brain-computer interfaces and the modeling of neurological disorders; capturing the full dynamic range and interdependence of neural activity in MEG data calls for a more holistic approach.

BrainTokMix: A Tokenized Representation of Neural Dynamics

BrainTokMix is a novel tokenizer engineered for magnetoencephalography (MEG) data represented in source space. It employs a causal channel-mixing approach within a Residual Vector Quantization (RVQ) framework. This design prioritizes the preservation of temporal dependencies inherent in brain signals during the compression process. By discretizing continuous MEG data into a sequence of tokens, BrainTokMix facilitates the application of large language model architectures, traditionally used for natural language processing, to the analysis and generative modeling of brain activity. The method is specifically tailored to handle the high dimensionality and complex relationships present in source-space MEG recordings.
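Residual vector quantization, the framework BrainTokMix builds on, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not BrainTokMix itself: it leaves out the causal channel-mixing and the learned encoder, it uses fixed toy codebooks where a trained tokenizer would learn them, and the names `rvq_encode` and `rvq_decode` are hypothetical. Each stage quantizes the residual left by the stages before it, so later stages only have to encode what earlier stages missed.

```python
import numpy as np

def rvq_encode(x, codebooks):
    """Greedy residual vector quantization (illustrative, fixed codebooks).

    x: (T, D) array of frames; codebooks: list of (K, D) arrays, one per stage.
    Returns integer codes of shape (T, num_stages).
    """
    residual = x.astype(float).copy()
    codes = []
    for cb in codebooks:
        # Squared Euclidean distance from every residual frame to every codeword.
        dists = ((residual[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        idx = dists.argmin(axis=1)
        codes.append(idx)
        residual = residual - cb[idx]  # pass the leftover error to the next stage
    return np.stack(codes, axis=1)

def rvq_decode(codes, codebooks):
    """Reconstruct frames by summing the selected codeword from every stage."""
    return sum(cb[codes[:, s]] for s, cb in enumerate(codebooks))

# Toy example: two hypothetical 2-D codebooks and two frames.
codebooks = [np.array([[0.0, 0.0], [1.0, 1.0]]),
             np.array([[0.0, 0.0], [0.5, 0.0]])]
x = np.array([[1.5, 1.0], [0.0, 0.0]])
codes = rvq_encode(x, codebooks)      # [[1, 1], [0, 0]]
x_hat = rvq_decode(codes, codebooks)  # [[1.5, 1.0], [0.0, 0.0]]
```

In the toy example the first stage captures the coarse offset `[1, 1]` and the second stage captures the remaining `[0.5, 0]`, which is the coarse-to-fine division of labor RVQ is designed for.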

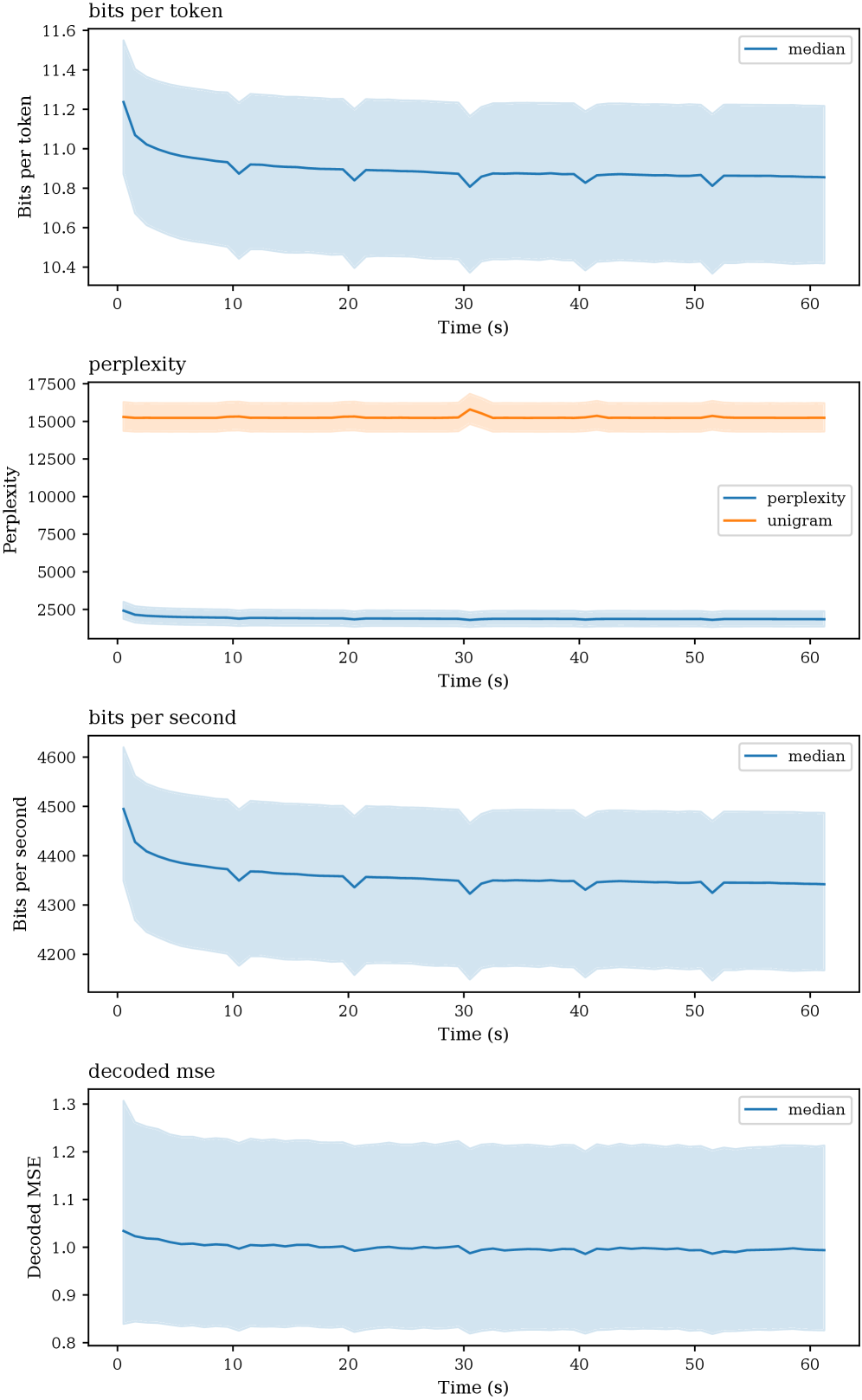

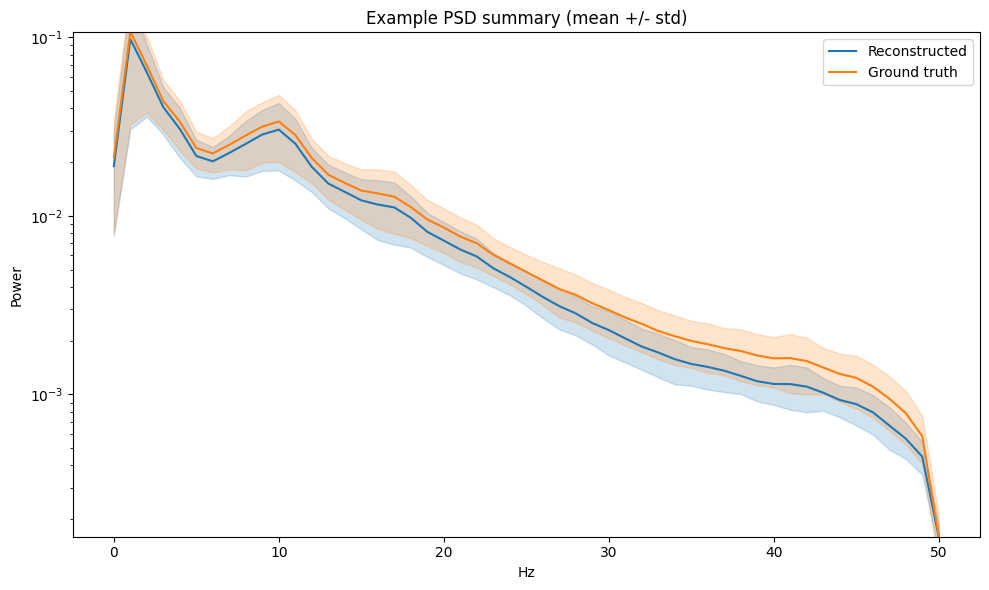

BrainTokMix compresses high-resolution magnetoencephalography (MEG) signals into a sequence of discrete tokens. The compression is designed to retain the temporal, causal structure of brain activity rather than merely to shrink the data. On held-out data from MOUS, a large MEG dataset of language processing, the Pearson correlation coefficient (PCC) between the original and reconstructed MEG signals is 0.944, indicating high-fidelity tokenization and reconstruction. A correlation this high suggests that little information is lost when the neural data are compressed into tokens.
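The article reports a single PCC value without saying how it is aggregated across channels. A common choice, assumed here, is to compute Pearson's r for each channel over time and then average across channels; `channelwise_pcc` is a hypothetical helper, not code from the paper.

```python
import numpy as np

def channelwise_pcc(original, reconstructed):
    """Pearson correlation per channel, averaged over channels.

    Both inputs have shape (channels, time).
    """
    o = original - original.mean(axis=1, keepdims=True)  # center each channel
    r = reconstructed - reconstructed.mean(axis=1, keepdims=True)
    num = (o * r).sum(axis=1)
    den = np.sqrt((o ** 2).sum(axis=1) * (r ** 2).sum(axis=1))
    return float((num / den).mean())

rng = np.random.default_rng(0)
meg = rng.standard_normal((4, 1000))  # random stand-in for 4 channels of MEG
print(channelwise_pcc(meg, 2.0 * meg + 3.0))  # 1.0 up to rounding
```

Because Pearson's r ignores offsets and overall scale, a reconstruction can score highly while still being off in amplitude, so the metric is worth pairing with an amplitude-sensitive error such as mean squared error.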

The BrainTokMix tokenization process converts continuous, high-dimensional magnetoencephalography (MEG) data into a discrete, categorical representation – a sequence of tokens. This transformation is critical for interfacing MEG signals with large language models (LLMs), which are designed to process discrete inputs. By representing brain activity as tokens, LLMs can be leveraged to learn the underlying patterns and dependencies within the data, and subsequently generate new, synthetic MEG signals or predict future brain states. This capability opens avenues for applying generative modeling techniques, such as those employed in natural language processing, to the analysis and simulation of brain activity, effectively treating MEG data as a form of sequential information analogous to text.
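One way to turn multi-stage RVQ codes into the single token stream a language model expects is to interleave the stages time step by time step and give every stage its own slice of the vocabulary, so codes from different stages never collide. This is an assumed scheme for illustration only; the article does not spell out BrainTokMix's exact token layout, and `flatten_codes` and `unflatten_tokens` are hypothetical names.

```python
import numpy as np

def flatten_codes(codes, codebook_size):
    """Interleave RVQ codes into one 1-D token stream (assumed layout).

    codes: (T, S) integer array with S quantizer stages per time step.
    Stage s is mapped to token ids [s*K, (s+1)*K), where K = codebook_size.
    Order: t0s0, t0s1, ..., t0s(S-1), t1s0, ...
    """
    num_stages = codes.shape[1]
    offsets = np.arange(num_stages) * codebook_size
    return (codes + offsets[None, :]).reshape(-1)

def unflatten_tokens(tokens, num_stages, codebook_size):
    """Invert flatten_codes: recover the (T, S) code grid."""
    grid = np.asarray(tokens).reshape(-1, num_stages)
    return grid - (np.arange(num_stages) * codebook_size)[None, :]

codes = np.array([[1, 2], [0, 3]])         # 2 time steps, 2 stages
tokens = flatten_codes(codes, codebook_size=4)
print(tokens)                               # [1 6 0 7]
```

With this layout the vocabulary grows to S × K entries, and a model reading the stream always sees a time step's coarse code before its finer ones.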

FlatGPT: Synthesizing Brain Signals with a Transformer Architecture

FlatGPT is a generative model built on a decoder-only Transformer architecture, specifically the Qwen-2.5-VL backbone. It is trained on BrainTokMix tokens, the discrete representation of magnetoencephalography (MEG) signals described above, which lets it learn the statistical dependencies within brain activity and then generate new MEG signals. The Qwen-2.5-VL base model, pre-trained for vision-language tasks, is adapted to the temporal dynamics of MEG data by training on BrainTokMix token sequences. FlatGPT can thus predict and synthesize brain signals directly from the learned token distribution.
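What makes a decoder-only Transformer suitable for next-token prediction is the causal attention mask: each position can attend only to itself and to earlier positions. The single-head NumPy sketch below shows that mechanism in isolation. It is a generic illustration, not Qwen-2.5-VL's implementation, which adds multiple heads, positional encodings, and other components; the weight matrices are random placeholders.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with a causal mask.

    x: (T, d_model); w_q, w_k, w_v: (d_model, d_head).
    Returns the attended values (T, d_head) and the attention weights (T, T).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    T = x.shape[0]
    future = np.triu(np.ones((T, T), dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)          # block attention to the future
    scores -= scores.max(axis=-1, keepdims=True)        # numerical stability
    weights = np.exp(scores)                            # exp(-inf) == 0
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 8))  # 5 token embeddings of width 8
w_q, w_k, w_v = (rng.standard_normal((8, 4)) for _ in range(3))
out, attn = causal_self_attention(x, w_q, w_k, w_v)
```

Because the mask zeroes every weight above the diagonal, changing a later token cannot change the outputs at earlier positions, which is what allows all next-token predictions in a sequence to be trained in parallel.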

FlatGPT employs autoregressive modeling: each prediction of a future brain state is conditioned on the preceding sequence of brain activity. Treating brain signals as a temporal sequence lets the model learn the statistical dependencies between successive moments in time. Specifically, the model predicts the next brain state $\hat{x}_t$ from the previous states $x_{t-1}, x_{t-2}, \dots, x_1$. By iterating this prediction and feeding each output back in as context, FlatGPT captures the dynamic, sequential nature of brain function and generates plausible, temporally coherent brain signals.
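Autoregressive generation follows from the chain rule, $p(x_1, \dots, x_T) = \prod_t p(x_t \mid x_{<t})$: sample one token, append it, and condition the next prediction on everything generated so far. The loop below is a minimal sketch of that open-loop procedure. The `make_bigram_model` helper is a hypothetical toy stand-in that conditions only on the previous token; FlatGPT instead uses a Transformer over a long context, so only the sampling loop carries over.

```python
import numpy as np

def generate(next_token_probs, prompt, n_new, rng):
    """Open-loop autoregressive sampling.

    next_token_probs(seq) returns a probability vector over the vocabulary.
    Each sampled token is appended and fed back as context; no ground-truth
    data is used after the prompt.
    """
    seq = list(prompt)
    for _ in range(n_new):
        p = next_token_probs(seq)
        seq.append(int(rng.choice(len(p), p=p)))
    return seq

def make_bigram_model(tokens, vocab_size, alpha=1.0):
    """Toy stand-in for a Transformer: smoothed bigram estimate of P(x_t | x_{t-1})."""
    counts = np.full((vocab_size, vocab_size), alpha)  # additive smoothing
    for a, b in zip(tokens[:-1], tokens[1:]):
        counts[a, b] += 1
    table = counts / counts.sum(axis=1, keepdims=True)
    return lambda seq: table[seq[-1]]

rng = np.random.default_rng(0)
model = make_bigram_model([0, 1, 2, 0, 1, 2, 0, 1], vocab_size=3)
out = generate(model, prompt=[0, 1], n_new=10, rng=rng)
```

Because every step consumes the model's own previous outputs, small errors can compound over long horizons, which is why stability over minutes of generated signal is a meaningful result.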

The LUPI (Learning Universal Prior for Individuals) framework improves the cross-subject generalization capability of FlatGPT by introducing a subject-agnostic prior during training. This is achieved through the use of a learned embedding space that captures shared features across individuals, allowing the model to effectively transfer knowledge gained from one subject to others. Specifically, LUPI employs a variational autoencoder to map subject-specific data into a latent space, encouraging the model to learn representations that are invariant to individual differences while preserving the core characteristics of brain activity. This approach minimizes the need for extensive subject-specific fine-tuning, enabling FlatGPT to accurately model brain signals from previously unseen individuals with limited data.

FlatGPT can generate magnetoencephalography (MEG) signals open-loop, producing up to 4 minutes of synthetic data from a 1-minute conditioning context. The Out-of-envelope rate (OER) assesses realism as the proportion of generated samples that fall outside the typical amplitude range of natural MEG data, and for the generated signals it stays comparable to the values observed in real recordings. Because each new step is conditioned only on the model’s own previous outputs, with no ground-truth signal fed back after the context window, this indicates that the model can sustain realistic, plausible brain activity on its own.
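The article defines OER only informally. The sketch below assumes one concrete reading: for each channel, the envelope is a percentile range of a real reference recording, and OER is the fraction of generated samples falling outside it. The 0.5th and 99.5th percentile bounds and the function name `out_of_envelope_rate` are illustrative assumptions, not the paper's settings.

```python
import numpy as np

def out_of_envelope_rate(generated, reference, lower_pct=0.5, upper_pct=99.5):
    """Fraction of generated samples outside a per-channel amplitude envelope.

    generated, reference: arrays of shape (channels, time).
    The envelope for each channel is the [lower_pct, upper_pct] percentile
    range of the reference (real) recording on that channel.
    """
    lo = np.percentile(reference, lower_pct, axis=1, keepdims=True)
    hi = np.percentile(reference, upper_pct, axis=1, keepdims=True)
    outside = (generated < lo) | (generated > hi)
    return float(outside.mean())

rng = np.random.default_rng(0)
ref = rng.standard_normal((3, 10000))  # random stand-in for a real recording
print(out_of_envelope_rate(ref, ref))  # about 0.01 with these bounds
```

Under this definition a well-behaved generator should score close to what the reference scores against its own envelope (about 1% with these bounds), while a run that drifts or explodes pushes OER towards 1.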

Extending Insights: Multi-Subject Generalization and Validation

FlatGPT’s development prioritized real-world applicability through rigorous training and validation against established, publicly accessible datasets – notably CamCAN, OMEGA, and MOUS. This strategy moves beyond isolated performance metrics, ensuring the model isn’t simply memorizing training examples but genuinely learning underlying principles of brain activity. By evaluating performance across diverse datasets, researchers demonstrated FlatGPT’s capacity to generalize to new, unseen subjects and varying experimental conditions. This robust performance is critical for translating the model from a research tool into a potentially valuable asset for personalized brain modeling, diagnostic applications, and ultimately, a deeper understanding of the human brain.

FlatGPT’s capacity for multi-subject modeling is a step towards individualized neurological insight. Rather than learning only a population average from pooled data, the model aims to capture the characteristics of each individual brain, which could pave the way for personalized diagnostic tools and predictive analyses. Learning from a diverse range of individuals lets it model general patterns of brain activity as well as the subtler differences between one person’s neural processes and another’s. If this holds up, it could help characterize atypical brain function, detect early, individual-specific signs of neurological disorders, and eventually tailor treatment plans. Beyond diagnostics, the same framework might be used to predict an individual’s response to interventions and to optimize therapeutic strategies around their particular brain signature.

FlatGPT also stands out for long-context modeling, which is crucial for understanding the temporally extended dynamics of brain activity. Whereas models with short effective memory lose track of earlier activity, FlatGPT captures dependencies spanning several minutes of neural data, as shown by its ability to generate a plausible four-minute continuation from one minute of input. Prefix Divergence metrics support this quantitatively, showing that the generated output remains dependent on its context over extended sequences. By retaining information over longer timescales, FlatGPT moves beyond immediate next-step prediction and can model the evolving states of complex brain systems with greater fidelity, making it a potentially powerful tool for investigating neural computation and forecasting brain states.
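The article does not define its Prefix Divergence metric, so the sketch below is a hypothetical stand-in built on the same idea: generate continuations from two different prefixes, then measure, window by window, how different the resulting token distributions are using the Jensen-Shannon divergence. A curve that stays above zero far into the continuation suggests the output still depends on which prefix it started from; a curve that collapses to zero suggests the model has forgotten its context. The function names are illustrative.

```python
import numpy as np

def js_divergence(p, q, eps=1e-12):
    """Jensen-Shannon divergence (natural log) between two count or probability vectors."""
    p = np.asarray(p, dtype=float) + eps
    q = np.asarray(q, dtype=float) + eps
    p, q = p / p.sum(), q / q.sum()
    m = 0.5 * (p + q)

    def _kl(a, b):
        return float(np.sum(a * np.log(a / b)))

    return 0.5 * _kl(p, m) + 0.5 * _kl(q, m)

def windowed_prefix_divergence(cont_a, cont_b, vocab_size, window):
    """JS divergence between token histograms of two continuations, per window.

    cont_a, cont_b: token sequences generated from two different prefixes.
    """
    n = min(len(cont_a), len(cont_b)) // window
    curve = []
    for i in range(n):
        seg_a = np.asarray(cont_a[i * window:(i + 1) * window])
        seg_b = np.asarray(cont_b[i * window:(i + 1) * window])
        ha = np.bincount(seg_a, minlength=vocab_size)
        hb = np.bincount(seg_b, minlength=vocab_size)
        curve.append(js_divergence(ha, hb))
    return curve

a = [0, 1] * 50  # continuation after prefix A
b = [2, 3] * 50  # continuation after prefix B, using disjoint tokens
curve = windowed_prefix_divergence(a, b, vocab_size=4, window=10)
```

With natural logarithms the divergence is bounded by ln 2, reached here because the two continuations share no tokens; identical continuations give zero.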

FlatGPT’s predictive capabilities stem from an integration of causal prediction and the Free-Energy Principle, which lets it move beyond simple correlation and model the generative processes underlying brain activity. The Free-Energy Principle posits that the brain constantly minimizes ‘free energy’, an upper bound on surprise, by accurately predicting incoming sensory information and its own internal states. By learning a generative model of this kind, FlatGPT does not merely recognize patterns in brain data but anticipates future states from learned causal relationships. This enables it to forecast brain activity in a way that loosely mirrors how the brain is thought to generate predictions and maintain internal consistency, offering a new framework for studying neurological function and dysfunction.
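For reference, the standard variational free energy from the Free-Energy Principle literature makes precise the sense in which it bounds surprise. Here $o$ denotes observations, $s$ hidden states, $p$ the generative model, and $q$ an approximate posterior; these symbols are introduced for this derivation and are not taken from the article.

$$
\begin{aligned}
F[q] &= \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] \\
     &= \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(s \mid o)\big] - \ln p(o) \\
     &= D_{\mathrm{KL}}\big[q(s) \,\|\, p(s \mid o)\big] - \ln p(o) \;\ge\; -\ln p(o).
\end{aligned}
$$

Since the KL divergence is non-negative, minimizing $F$ pushes down an upper bound on the surprise $-\ln p(o)$. Assuming FlatGPT is trained with the usual next-token cross-entropy, its objective is the average surprise $-\ln p(x_t \mid x_{<t})$ itself, so the parallel with the Free-Energy Principle lies in minimizing surprise about incoming data.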

The pursuit of long-context modeling, as demonstrated by FlatGPT’s generation of realistic MEG signals, reveals a fundamental truth about complex systems. If the system looks clever, it’s probably fragile. The architecture prioritizes stable, long-horizon generation, suggesting a deliberate simplification, an art of choosing what to sacrifice, in favor of robustness. Vinton Cerf aptly observed, “It’s not enough to be busy; you must look at the results.” FlatGPT doesn’t merely generate signals; it generates stable signals, a testament to architectural choices focused on foundational reliability rather than superficial complexity. This aligns with the notion that structure dictates behavior, and a well-defined structure is crucial for predictable, long-term performance.

Beyond the Horizon

The pursuit of generative models for magnetoencephalography, as exemplified by FlatGPT, reveals a familiar pattern. The initial infrastructure – the tokenization, the Transformer architecture – functions as a provisional city plan. It allows for growth, certainly, but continued prosperity demands more than simply adding new buildings. The current system excels at stable generation and conditional specificity, yet the inherent limitations of fixed-length context windows remain a central constraint. Future iterations must prioritize structural evolution, not just incremental improvements.

The challenge isn’t simply extending the context length; it’s designing an infrastructure that inherently handles long-range dependencies without collapsing under computational burden. This necessitates exploration beyond the standard Transformer – perhaps hierarchical architectures, or novel attention mechanisms that mimic the brain’s own capacity for abstraction and efficient information routing.

Ultimately, the goal isn’t to perfectly simulate neural activity, but to create a generative framework capable of revealing underlying principles. The true test of success will be the model’s capacity to generate not merely plausible signals, but novel insights – predictive capabilities exceeding those currently available, and a deeper understanding of the complex choreography of brain function.

Original article: https://arxiv.org/pdf/2601.20138.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 15:12