Author: Denis Avetisyan

Researchers are leveraging the formal rigor of Petri nets to gain deeper insight into the behavior of binary neural networks, paving the way for improved verification and understanding.

This work presents a novel method for modeling binary neural networks as 1-safe Petri nets, enabling formal verification, causal reasoning, and scalable system validation.

Despite their potential for energy-efficient computation, Binary Neural Networks (BNNs) remain difficult to interpret and formally verify due to their discrete, non-linear behavior. This work, ‘Eventizing Traditionally Opaque Binary Neural Networks as 1-safe Petri net Models’, addresses this limitation by introducing a novel framework that models BNN operations as event-driven processes using Petri nets. By representing BNN components – including activation, gradient computation, and weight updates – as modular Petri net blueprints, we enable rigorous analysis of concurrency, causal dependencies, and system-level behavior. Can this approach unlock the full potential of BNNs in safety-critical applications requiring both performance and verifiable guarantees?

The Elegant Simplicity of Binary Neural Networks

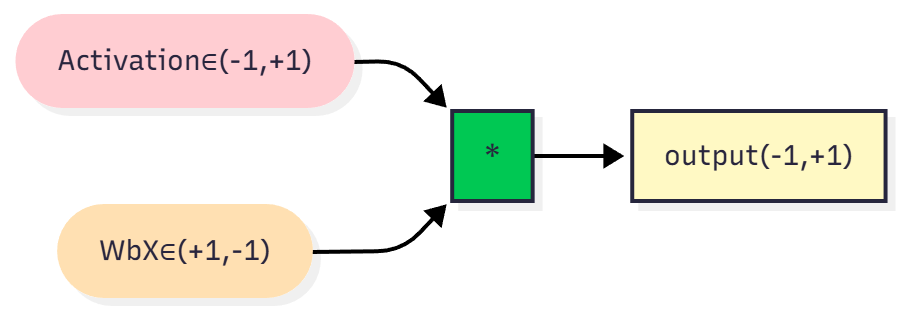

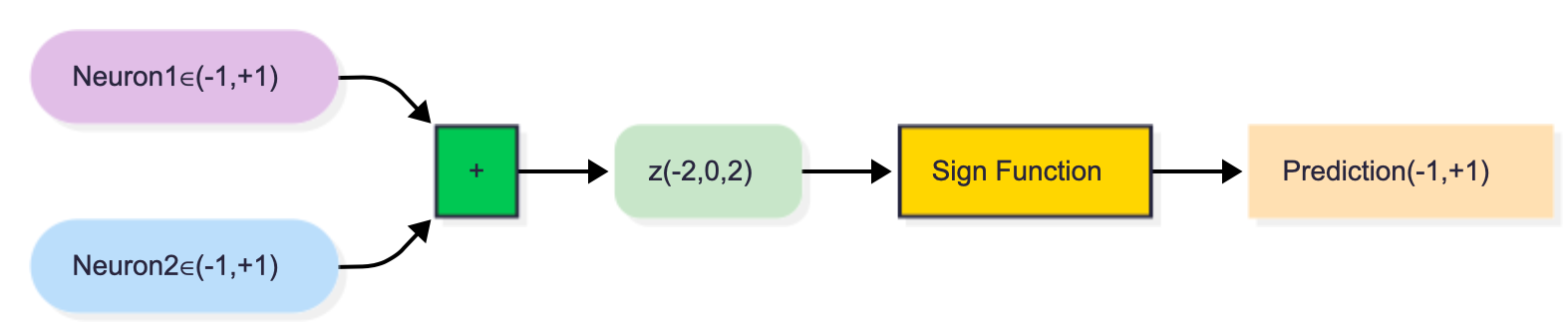

Binary Neural Networks (BNNs) present a compelling path toward dramatically reduced computational cost and energy consumption in deep learning. Unlike conventional neural networks, which represent weights and activations as 32- or 16-bit floating-point numbers, BNNs restrict these values to one of two states, typically +1 or -1. This extreme quantization offers substantial advantages in memory footprint and processing speed, particularly on hardware with limited resources. However, the simplification introduces a significant challenge: the binarization process, which converts continuous values into discrete binary values, is non-differentiable. Standard gradient-based optimization algorithms, the workhorses of neural network training, rely on continuous gradients to adjust network parameters. The abrupt transition inherent in the sign function, the core of binarization, breaks this continuity, preventing effective backpropagation and hindering the network’s ability to learn from data. Consequently, developing training strategies that overcome this non-differentiability is crucial to realizing the promise of highly efficient deep learning with BNNs.

The core difficulty in training Binary Neural Networks lies in the discontinuous nature of the binarization process, specifically the use of the sign function. Unlike standard neural networks, which use continuous values and benefit from smooth gradient descent, BNNs restrict weights and activations to just +1 or -1. This creates a problem because the sign function, which outputs the sign of a number, has a derivative of zero almost everywhere: $\frac{d}{dx}\,\operatorname{sign}(x) = 0$ for $x \neq 0$, and the derivative is undefined at $x = 0$. Consequently, during backpropagation, gradients effectively vanish, preventing meaningful weight updates. This ‘vanishing gradient’ issue hinders the network’s ability to learn and converge, demanding specialized training techniques to overcome the limitations imposed by this fundamental binary constraint.
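The zero-derivative behavior is easy to check numerically. The following is a minimal sketch, not code from the paper: the helper names `sign` and `numerical_derivative` are hypothetical, and mapping 0 to +1 is just one common convention.

```python
def sign(x: float) -> int:
    """Binarize a real value to +1 or -1 (0 is mapped to +1 by convention here)."""
    return 1 if x >= 0 else -1


def numerical_derivative(f, x: float, h: float = 1e-6) -> float:
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2 * h)


# Away from zero, sign(x) is locally constant, so the estimated slope is zero
# and backpropagation receives no learning signal through it.
sample_points = (-2.0, -0.5, 0.5, 2.0)
grads = [numerical_derivative(sign, x) for x in sample_points]
print(grads)
```

Every sampled point sits on a flat segment of the function, which is why a plain gradient step through `sign` never moves the underlying weight.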

The pursuit of efficient deep learning has led to binary neural networks (BNNs), but realizing their potential hinges on overcoming a fundamental training challenge. Conventional gradient-based methods, the workhorses of neural network optimization, falter when applied to BNNs because the binarization process – reducing weights and activations to just +1 or -1 – introduces a non-differentiable step. This creates an obstacle for backpropagation, the algorithm that adjusts network parameters during learning. Researchers are therefore actively exploring strategies to approximate gradients or develop alternative optimization techniques that can effectively navigate this binary landscape. The goal isn’t simply to enable training, but to do so while preserving, and ideally enhancing, both the accuracy and computational speed that make BNNs so promising – a delicate balance that defines the cutting edge of this research area.
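One widely used way to approximate the missing gradient is the straight-through estimator (STE). The article does not say which technique the paper models, so STE is an assumption here, and every name below (`ste_grad`, `train_step`, the clipping threshold) is illustrative. The sketch trains a single latent real-valued weight whose binarized value is used in the forward pass.

```python
def sign(x: float) -> float:
    """Binarize to +1.0 or -1.0 (0 maps to +1.0 by convention)."""
    return 1.0 if x >= 0 else -1.0


def ste_grad(x: float, upstream: float, clip: float = 1.0) -> float:
    """Straight-through backward pass: treat sign as the identity where
    |x| <= clip and block the gradient elsewhere."""
    return upstream if abs(x) <= clip else 0.0


def train_step(w_latent: float, x: float, target: float, lr: float = 0.1):
    """One gradient step on a single binarized weight with squared-error loss."""
    w_bin = sign(w_latent)                 # forward pass uses the binary weight
    y = w_bin * x
    loss = 0.5 * (y - target) ** 2
    dloss_dwbin = (y - target) * x         # exact gradient w.r.t. the binary weight
    dloss_dwlatent = ste_grad(w_latent, dloss_dwbin)  # approximated gradient
    return w_latent - lr * dloss_dwlatent, loss


w = 0.05                                   # latent weight, binarizes to +1
w, first_loss = train_step(w, x=1.0, target=-1.0)
w_next, second_loss = train_step(w, x=1.0, target=-1.0)
print(first_loss, second_loss, sign(w_next))
```

The latent weight accumulates small updates until its sign flips, at which point the binary weight changes and the loss drops. The true derivative of `sign` would never allow that.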

Formalizing Efficiency: Modeling with Petri Nets

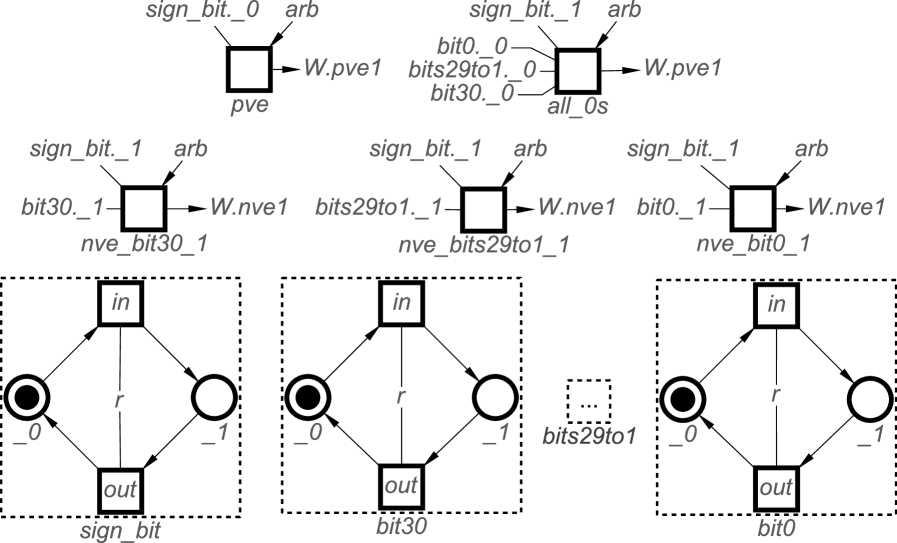

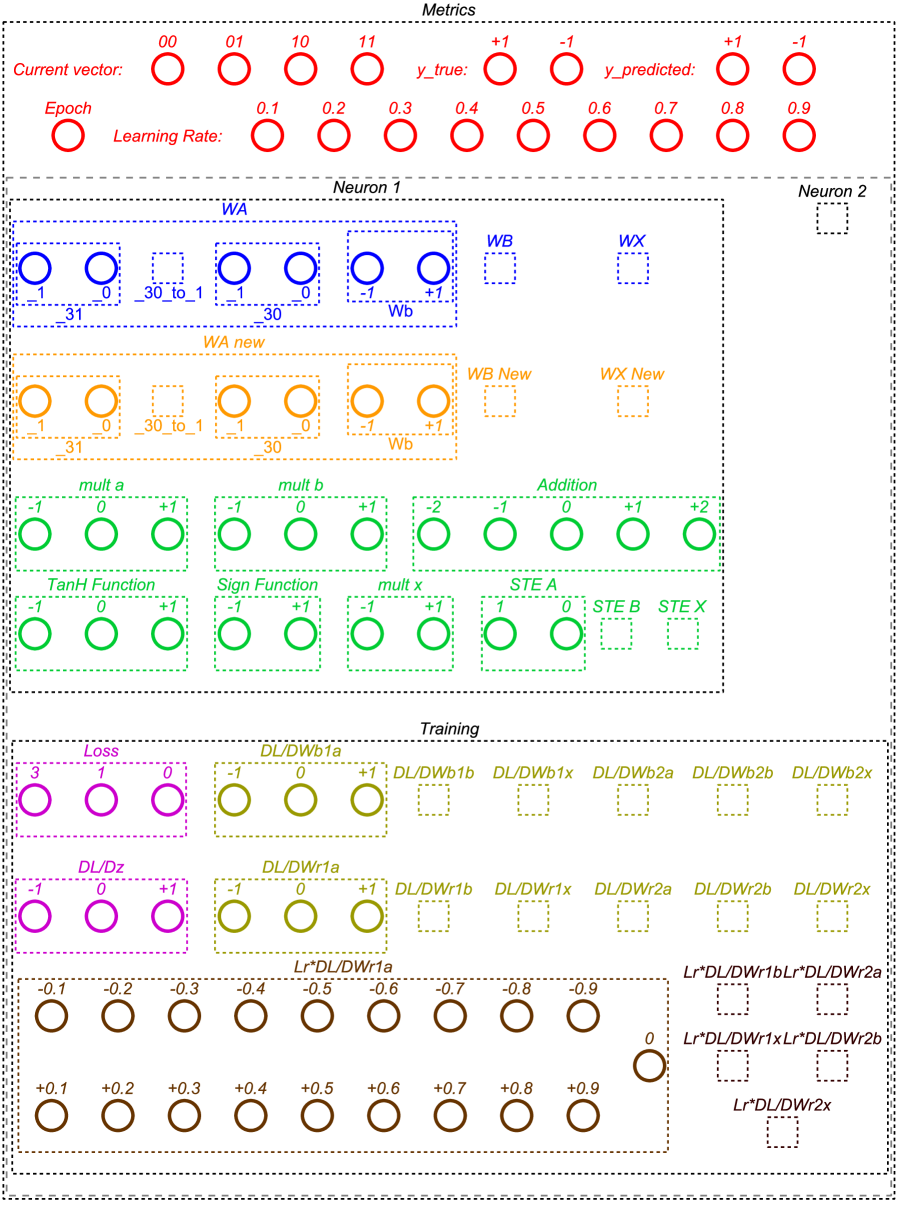

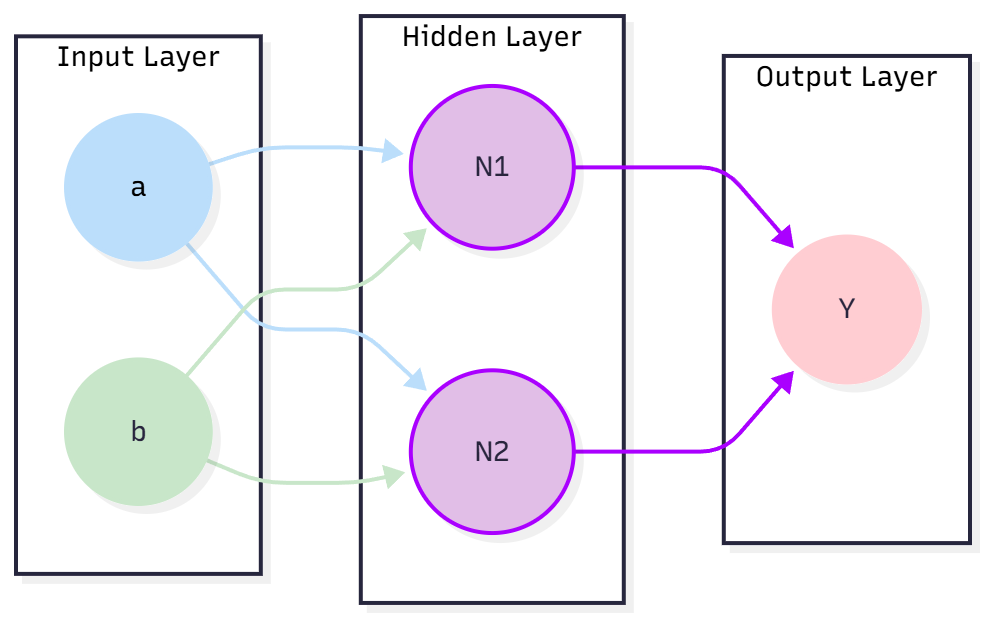

Binary Neural Networks (BNNs) are modeled using Petri Nets, a formalized mathematical language specifically designed to represent systems exhibiting concurrency, causality, and state transitions. Petri Nets consist of places, transitions, and tokens, allowing the depiction of conditions and events within a BNN as discrete states. This representation enables the formal verification of network behavior by mapping BNN components – weights, activations, and operations – directly to Petri Net elements. The use of Petri Nets facilitates the analysis of data flow and control dependencies within the BNN, providing a rigorous framework for assessing network properties and identifying potential issues related to its operational logic.
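To make the vocabulary of places, transitions, and tokens concrete, here is a minimal sketch of the standard Petri net firing rule. It assumes every arc has weight 1, and the place and transition names are hypothetical; it is not the paper's model or the API of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    name: str
    inputs: tuple    # places that must each hold a token (arc weight 1 assumed)
    outputs: tuple   # places that each receive a token when the transition fires


def enabled(marking: dict, t: Transition) -> bool:
    """A transition is enabled when every input place holds at least one token."""
    return all(marking.get(p, 0) >= 1 for p in t.inputs)


def fire(marking: dict, t: Transition) -> dict:
    """Consume one token per input place and produce one per output place."""
    if not enabled(marking, t):
        raise ValueError(f"transition {t.name!r} is not enabled")
    new_marking = dict(marking)
    for p in t.inputs:
        new_marking[p] -= 1
    for p in t.outputs:
        new_marking[p] = new_marking.get(p, 0) + 1
    return new_marking


# Hypothetical three-place pipeline: an input becomes available, is processed,
# and an output becomes available.
start = Transition("start", inputs=("input_ready",), outputs=("computing",))
finish = Transition("finish", inputs=("computing",), outputs=("output_ready",))

initial = {"input_ready": 1}
final = fire(fire(initial, start), finish)
print(final)
```

The marking (the token count per place) is the entire system state, which is what makes exhaustive analysis of the net possible.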

Formal analysis of the Binary Neural Network (BNN) model, achieved through Petri Net representation, enables verification of critical operational characteristics. Specifically, deadlock-freedom (the guarantee that the network never reaches a state in which no further transition can fire) can be established through state space analysis of the corresponding Petri Net. Similarly, safety properties (that the network consistently adheres to defined constraints during operation) can be demonstrated through reachability analysis, which confirms that undesirable states are unreachable under valid input conditions. This approach moves beyond empirical testing and provides a rigorous, mathematical guarantee of network behavior, which is crucial for safety-critical applications.
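State space analysis of a small net can be sketched as a breadth-first search over markings. The code below is illustrative only: it assumes the net is already known to be 1-safe, so a marking can be stored as the set of marked places, and the two example nets are hypothetical rather than taken from the paper.

```python
from collections import deque


def reachable(initial: frozenset, transitions) -> set:
    """Breadth-first exploration of all markings reachable from `initial`.
    Each transition is a (name, pre, post) triple of frozensets of places."""
    seen = {initial}
    queue = deque([initial])
    while queue:
        marking = queue.popleft()
        for _name, pre, post in transitions:
            if pre <= marking:                      # all input places are marked
                successor = (marking - pre) | post  # move the tokens
                if successor not in seen:
                    seen.add(successor)
                    queue.append(successor)
    return seen


def deadlocks(markings, transitions) -> list:
    """Markings in which no transition is enabled."""
    return [m for m in markings if not any(pre <= m for _n, pre, _p in transitions)]


idle, busy, done = frozenset({"idle"}), frozenset({"busy"}), frozenset({"done"})

# A cyclic net never gets stuck; a linear one halts in the "done" marking.
cyclic_net = [("start", idle, busy), ("finish", busy, idle)]
linear_net = [("start", idle, busy), ("finish", busy, done)]

cyclic_states = reachable(idle, cyclic_net)
linear_states = reachable(idle, linear_net)
print(deadlocks(cyclic_states, cyclic_net), deadlocks(linear_states, linear_net))
```

Whether a halting marking such as `done` counts as a genuine deadlock or as intended termination is a modeling decision. Explicit enumeration like this also grows quickly with net size, which is why dedicated verification tools are used in practice.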

The conversion of Binary Neural Network (BNN) components into Petri Net elements establishes a formal, verifiable model of network behavior. Specifically, BNN weights are represented as transitions within the Petri Net, defining allowable state changes. Activations are modeled as tokens, representing the flow of data and the current network state. BNN operations, such as matrix multiplication and activation functions, are also mapped to transitions, dictating how tokens move between places – which represent memory locations or data storage within the network. This mapping allows for the application of Petri Net analysis techniques to formally verify properties of the BNN, such as freedom from deadlock and the satisfaction of safety constraints.
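As a rough illustration of this kind of mapping, the sketch below encodes a single binary multiplication (input times weight) as a tiny 1-safe net. The encoding is hypothetical and is not the paper's blueprint: the sign of a value is represented by which of two places holds the token, and the weight determines which transitions exist.

```python
def build_multiply_net(weight: int) -> list:
    """Return (name, pre, post) transitions for y = weight * x with x, weight in {+1, -1}.
    A weight of +1 passes the sign through; a weight of -1 flips it."""
    if weight not in (1, -1):
        raise ValueError("weight must be +1 or -1")
    if weight == 1:
        return [("pass_pos", {"x_pos"}, {"y_pos"}),
                ("pass_neg", {"x_neg"}, {"y_neg"})]
    return [("flip_pos", {"x_pos"}, {"y_neg"}),
            ("flip_neg", {"x_neg"}, {"y_pos"})]


def run_neuron(x: int, weight: int) -> int:
    """Place the input token, fire enabled transitions until none remain,
    then read the output sign from the marked output place."""
    if x not in (1, -1):
        raise ValueError("x must be +1 or -1")
    marking = {"x_pos"} if x == 1 else {"x_neg"}
    transitions = build_multiply_net(weight)
    fired = True
    while fired:
        fired = False
        for _name, pre, post in transitions:
            if pre <= marking:
                marking = (marking - pre) | post
                fired = True
                break
    return 1 if "y_pos" in marking else -1


results = {(x, w): run_neuron(x, w) for x in (1, -1) for w in (1, -1)}
print(results)
```

Because exactly one input place is ever marked, at most one transition can fire, and the token lands in exactly one output place. That is the kind of structural property a 1-safe model makes checkable.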

The resulting Petri Net models, generated from Binary Neural Networks (BNNs) based on network architecture, grew in size across the evaluated datasets. Specifically, the KWS6 dataset, representing keyword spotting, yielded a model size of 118,125 elements. The CIFAR2 dataset, consisting of 60,000 32×32 color images, corresponded to a model of 236,250 elements. The largest model, comprising 472,500 elements, was generated from the MNIST dataset of handwritten digits, containing 70,000 grayscale images. Each reported model is exactly twice the size of the previous one.
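The growth between the three reported model sizes can be checked with a few lines of arithmetic. The snippet uses only the element counts quoted above and makes no claim about why the sizes relate this way.

```python
# Element counts as reported for each dataset's Petri net model.
sizes = {"KWS6": 118_125, "CIFAR2": 236_250, "MNIST": 472_500}

names = list(sizes)
# Ratio of each model's size to the size of the one listed before it.
ratios = {f"{b}/{a}": sizes[b] / sizes[a] for a, b in zip(names, names[1:])}
print(ratios)
```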

Verifying Operational Integrity Through Formal Analysis

The Workcraft Toolset facilitates automated construction and analysis of Petri Net models representing Binary Neural Networks (BNNs). This automation includes translating the BNN architecture into a corresponding Petri Net, performing state-space exploration, and evaluating model properties. Automating these steps makes verification considerably faster than manual methods, allowing potential issues to be identified and desired behaviors to be confirmed efficiently. The toolset supports formal verification techniques, enabling rigorous analysis of the modeled BNN’s properties and its adherence to specified safety constraints.

Metric instrumentation is utilized to quantitatively assess the correspondence between the Petri Net model and the original Binary Neural Network (BNN). This process involves defining and tracking key performance indicators (KPIs) within both systems, such as activation rates, weight distributions, and layer outputs. By comparing these metrics across the Petri Net and the reference BNN during simulated operation, we establish a measure of model fidelity. Discrepancies exceeding a predefined threshold trigger further investigation and model refinement, ensuring the Petri Net accurately reflects the behavior of the BNN it represents. This comparative analysis is crucial for validating the abstraction and ensuring the model’s suitability for formal verification and analysis.
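A threshold-based comparison of this kind can be sketched as follows. The metric names, values, and threshold are made up for illustration, and the function is not part of any toolset mentioned in the article.

```python
def compare_metrics(reference: dict, model: dict, threshold: float) -> dict:
    """For each metric in the reference BNN, report the absolute difference to
    the Petri net model and whether it exceeds the allowed threshold."""
    report = {}
    for name, ref_value in reference.items():
        diff = abs(ref_value - model[name])
        report[name] = {"diff": diff, "flagged": diff > threshold}
    return report


# Hypothetical measurements collected from both systems on the same inputs.
reference_bnn = {"activation_rate": 0.5, "positive_weight_fraction": 0.25}
petri_model = {"activation_rate": 0.5, "positive_weight_fraction": 0.75}

report = compare_metrics(reference_bnn, petri_model, threshold=0.1)
flagged = [name for name, entry in report.items() if entry["flagged"]]
print(flagged)
```

Any flagged metric would then prompt a closer look at the corresponding part of the model.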

Analysis of the constructed Petri Net model demonstrates its functional equivalence to the reference Binary Neural Network (BNN). This confirmation was achieved through a comparative assessment of state transitions and behavioral outputs under identical input conditions. Specifically, the model replicates the core computational dynamics of the BNN, including activation, gradient computation, and weight updates. This fidelity is critical for establishing the model as a reliable and representative abstraction of the original BNN, allowing its properties to be formally verified and analyzed without direct experimentation on the neural network itself.

Verification of the Petri Net model specifically confirmed adherence to the defined Safety Property, which establishes constraints preventing the model from reaching invalid or unsafe states. This verification process involved formal analysis techniques to demonstrate that all possible state transitions within the model satisfy the pre-defined safety criteria. The Safety Property was implemented as a set of logical conditions enforced throughout the model’s execution, ensuring that critical system parameters remain within acceptable bounds and that predefined error conditions are avoided. Successful validation against this property confirms the model’s ability to operate without entering configurations that could lead to incorrect or hazardous behavior.
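A safety check of this kind amounts to evaluating a predicate on every reachable marking and returning a counterexample if one fails. The sketch below is a simplified illustration with hypothetical nets and predicates, not the paper's property or tooling. It keeps token counts so that 1-safeness itself can be checked, and it caps the number of explored states.

```python
from collections import deque


def fire(marking: frozenset, pre, post) -> frozenset:
    """Fire a transition on a marking stored as a frozenset of (place, count) pairs."""
    counts = dict(marking)
    for p in pre:
        counts[p] -= 1
    for p in post:
        counts[p] = counts.get(p, 0) + 1
    return frozenset((p, n) for p, n in counts.items() if n > 0)


def check_safety(initial: dict, transitions, predicate, max_states: int = 10_000):
    """Return None if `predicate` holds in every reachable marking,
    otherwise return the first violating marking found (as a dict)."""
    start = frozenset((p, n) for p, n in initial.items() if n > 0)
    seen, queue = {start}, deque([start])
    while queue:
        marking = queue.popleft()
        counts = dict(marking)
        if not predicate(counts):
            return counts
        for _name, pre, post in transitions:
            if all(counts.get(p, 0) >= 1 for p in pre):
                successor = fire(marking, pre, post)
                if successor not in seen:
                    if len(seen) >= max_states:
                        raise RuntimeError("state limit reached; result inconclusive")
                    seen.add(successor)
                    queue.append(successor)
    return None


def one_safe(counts: dict) -> bool:
    """No place ever holds more than one token."""
    return all(n <= 1 for n in counts.values())


def output_exclusive(counts: dict) -> bool:
    """The output is never simultaneously positive and negative."""
    return not (counts.get("y_pos", 0) and counts.get("y_neg", 0))


good_net = [("pass_pos", ("x_pos",), ("y_pos",))]
bad_net = [("duplicate", ("x_pos",), ("y_pos", "y_neg"))]

good_result = check_safety({"x_pos": 1}, good_net, output_exclusive)
bad_result = check_safety({"x_pos": 1}, bad_net, output_exclusive)
print(good_result, bad_result)
```

A `None` result means no violation was found within the explored state space. A returned marking is a concrete counterexample that can be traced back through the net.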

Experimental results indicate that the Petri Net model of the Binary Neural Network (BNN) exhibits improved performance compared to the reference BNN. Specifically, after 100 training epochs, the modeled BNN achieved a loss approximately 10% lower than that of the original BNN. This reduction in loss was measured using the same dataset and optimization parameters for both networks, ensuring a valid comparative analysis. The observed improvement suggests that the model accurately represents the BNN’s behavior and potentially offers enhanced learning efficiency.

Toward Robust and Verifiable Intelligence

This research delivers a crucial advancement in the development of neuromorphic computing, specifically focusing on Binary Neural Networks (BNNs). Current machine learning systems, while powerful, often lack the robustness and efficiency needed for deployment in safety-critical applications or resource-constrained environments. This work addresses these limitations by establishing a formal methodology for verifying the correctness and reliability of BNNs, ensuring predictable behavior and mitigating potential hazards. The demonstrated approach not only enhances the safety profile of these networks, but also paves the way for designing more energy-efficient hardware implementations, bringing the promise of truly intelligent and sustainable computing systems closer to reality. By rigorously analyzing network properties, this study provides a foundation for building trust in BNNs and accelerating their adoption across a wide range of applications.

Conventional deep learning systems, while powerful, often lack the guarantees of predictable and reliable operation crucial for safety-critical applications. This research addresses this limitation by integrating formal modeling – a rigorous mathematical approach to system verification – with the capabilities of deep learning. Through this synthesis, researchers can move beyond empirical testing and instead prove the correctness of neural network behavior under specific conditions. This methodology allows for the identification of potential vulnerabilities and ensures robustness against adversarial inputs or unexpected scenarios, something traditional approaches struggle to achieve. The resulting framework not only enhances the dependability of neuromorphic computing but also opens avenues for creating certified AI systems with demonstrably safe and predictable performance.

Investigations are now directed toward broadening the applicability of this formal verification framework to encompass the intricacies of more advanced neural network designs, moving beyond current simplified models. This expansion aims to address the challenges posed by deeper, wider, and more recurrent architectures commonly found in state-of-the-art machine learning. Simultaneously, researchers are actively exploring the potential of this methodology across diverse application domains, including autonomous robotics, medical diagnostics, and financial modeling, where safety and reliability are paramount. The anticipated outcome is a robust suite of tools capable of guaranteeing the dependable operation of neuromorphic systems in real-world scenarios, fostering trust and accelerating the adoption of this promising computing paradigm.

The developed formal verification methodology demonstrates a crucial advantage beyond binary neural networks – its scalability and broad applicability to the wider landscape of graph-based machine learning models. This isn’t simply a solution tailored for one specific architecture; the underlying principles allow for analysis of diverse models where data and relationships are represented as graphs, including graph convolutional networks and other emerging paradigms. By rigorously establishing properties like robustness and safety through formal methods, researchers can move beyond empirical testing and guarantee model behavior, even as these models grow in complexity. This adaptability promises a future where formal verification becomes a standard practice in ensuring the reliability and trustworthiness of increasingly sophisticated machine learning systems, fostering confidence in their deployment across critical applications.

The transformation of a Binary Neural Network into a Petri Net model, as detailed in the study, highlights a fundamental principle of systems architecture: understanding behavior requires modeling the interactions, not just the components. This echoes Alan Turing’s sentiment: “There is no longer any need for me to explain the nature of computation.” The researchers effectively translate the network’s opaque functionality into a transparent, event-driven framework, facilitating formal verification and causal reasoning. By representing the network as a 1-safe Petri Net, they expose the underlying logic, making it amenable to rigorous analysis and ultimately revealing the system’s true operational characteristics. Good architecture is invisible until it breaks, and only then is the true cost of decisions visible.

What Lies Ahead?

The endeavor to ‘eventize’ binary neural networks through Petri net modeling reveals a familiar truth: optimization invariably shifts, rather than eliminates, systemic tension. This work successfully translates the opaque computations of BNNs into a framework amenable to formal verification – a considerable achievement. However, the very act of modeling introduces a new layer of abstraction, and thus, a new surface for potential failure. The scalability of Petri net analysis, even with the constraints imposed by binary networks, remains a central challenge. A model that accurately reflects the nuanced behavior of a large-scale BNN may quickly become analytically intractable.

Future investigation should not focus solely on refining the mapping between neural network layers and Petri net components. A more fruitful approach lies in considering the implications of this formalization for causal reasoning. The architecture isn’t simply a diagram; it’s the system’s behavior over time. Understanding how specific activations propagate through the network, as modeled by the Petri net, could illuminate the emergence of unexpected behaviors and, crucially, allow for targeted interventions beyond mere weight adjustments.

Ultimately, the value of this work will be determined not by the fidelity of the model, but by its ability to reveal previously hidden systemic properties. The goal is not to create a perfect representation, but a useful simplification – one that clarifies the fundamental constraints and trade-offs inherent in these increasingly complex systems.

Original article: https://arxiv.org/pdf/2602.13128.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 14:10