Author: Denis Avetisyan

New research demonstrates how explainable AI techniques can illuminate the decision-making of deep learning models used to detect faults in complex chemical processes.

This review explores the application of SHAP and Integrated Gradients to interpret deep learning-based fault detection systems for time series data in chemical engineering.

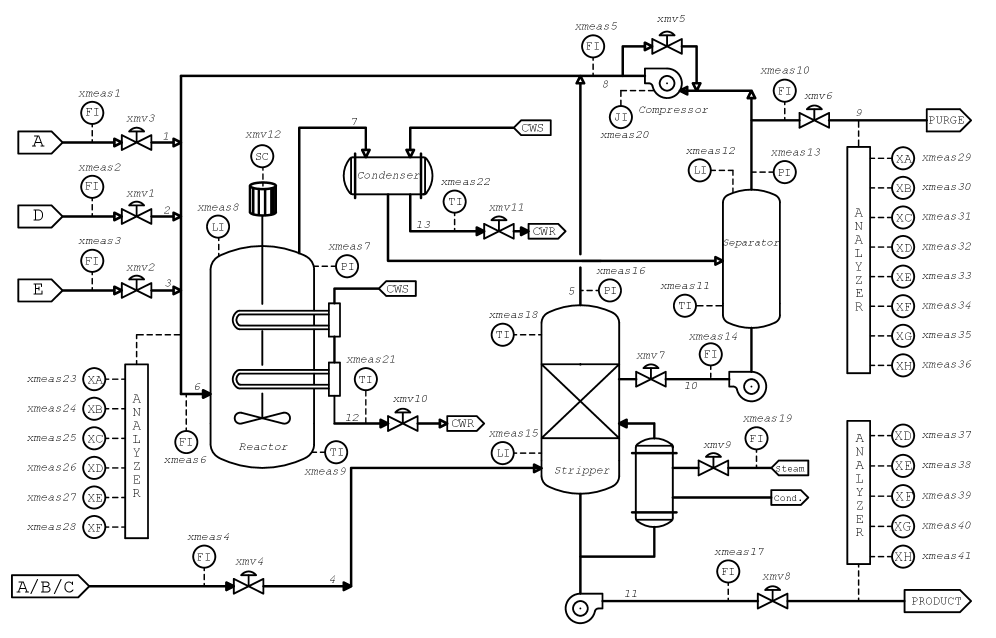

Despite advances in deep learning for industrial fault detection, understanding why these systems make specific diagnoses remains a significant challenge. This work, ‘Explainability for Fault Detection System in Chemical Processes’, investigates the application of post-hoc explainable AI (XAI) techniques, specifically Integrated Gradients and SHAP values, to interpret the decisions of a Long Short-Term Memory (LSTM) classifier trained on the Tennessee Eastman Process. Results demonstrate that these methods can consistently identify key process variables driving fault predictions, offering insights into the underlying causes of detected anomalies. Could wider adoption of such XAI approaches foster greater trust and enable more effective human-machine collaboration in critical process control applications?

Decoding the Black Box: Towards Trustworthy Deep Learning

Deep learning models have achieved remarkable success in diverse fields, yet their inner workings often remain obscure, earning them the label “black boxes”. This opacity isn’t merely a philosophical concern; it fundamentally challenges trust and reliable decision-making, particularly when these systems are deployed in high-stakes scenarios. While a model might consistently predict outcomes with high accuracy, the lack of transparency regarding how those predictions are reached inhibits effective validation and debugging. Consequently, stakeholders are left with limited ability to identify biases, understand potential failure modes, or confidently rely on the system’s reasoning, creating a critical barrier to widespread adoption in areas demanding accountability and human oversight.

The inscrutable nature of deep learning presents substantial hurdles in high-stakes fields such as fault detection and medical diagnosis. Unlike traditional algorithms where the reasoning behind a conclusion is readily apparent, these complex models often arrive at predictions without revealing how those conclusions were reached. This lack of transparency isn’t merely an inconvenience; in critical applications, understanding the rationale is paramount for both trust and accountability. A system identifying a potential mechanical failure, for instance, must not only flag the issue but also pinpoint the specific indicators driving that assessment, allowing for informed maintenance decisions. Similarly, in healthcare, a diagnosis generated by a deep learning model requires accompanying justification to ensure clinical validity and patient safety; simply knowing what is wrong isn’t enough – understanding why is essential for effective treatment.

Historically, attempts to render artificial intelligence systems more understandable have often come at the cost of predictive performance. Inherently interpretable models, such as rule-based systems or linear models, are transparent but frequently lack the capacity to represent complex real-world phenomena, which costs accuracy. This trade-off between interpretability and efficacy is a substantial hurdle, particularly in domains where both reliable predictions and insightful reasoning are crucial. Consequently, a growing emphasis is being placed on interpretable AI: methods that achieve high accuracy while also providing clear, human-understandable explanations for their decisions, bridging the gap between powerful machine learning and trustworthy application.

Illuminating Decision Pathways: The Promise of Explainable AI

Explainable AI (XAI) encompasses a range of techniques aimed at increasing the transparency and interpretability of Deep Learning models. These methods address the “black box” nature of many deep neural networks, which often deliver accurate predictions without revealing how those predictions are reached. XAI techniques can be broadly categorized as either intrinsic or post-hoc. Intrinsic methods build transparency directly into the model architecture, such as using attention mechanisms or rule-based systems. Post-hoc methods, conversely, analyze already-trained models to understand their behavior, often by approximating the relationship between inputs and outputs. The goal of XAI is not simply to understand the model, but also to build trust, ensure fairness, and facilitate debugging and improvement of these complex systems.

Post-hoc explainability techniques operate on already-trained machine learning models to determine the relative importance of each input feature in influencing the model’s predictions. SHAP (SHapley Additive exPlanations) builds on Shapley values from cooperative game theory, assigning each feature a value that represents its average contribution to the prediction across coalitions of features (all coalitions in the exact definition; in practice, usually a sampled subset). Integrated Gradients is a gradient-based method: it integrates the gradient of the model’s output with respect to each input feature along a straight-line path from a baseline input to the actual input, then scales the result by that feature’s difference from the baseline. Both techniques provide per-prediction feature importance scores without retraining the model. They differ in the access they need: model-agnostic SHAP variants such as KernelSHAP only query the model’s outputs, whereas Integrated Gradients requires the model’s gradients and therefore a differentiable model whose internals can be accessed.
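To make the Integrated Gradients definition concrete, here is a minimal NumPy sketch. It assumes a hypothetical differentiable "fault score" (a logistic model with made-up weights `w` and bias `b`) whose input gradient is known in closed form; for an LSTM, the gradients would come from an autodiff framework instead. The path integral is approximated with a midpoint Riemann sum over `steps` points, and the final comparison illustrates completeness: the attributions sum to the change in output between the baseline and the input.

```python
import numpy as np

def fault_score(x, w, b):
    """Hypothetical differentiable model: logistic score sigmoid(w . x + b)."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def fault_score_grad(x, w, b):
    """Closed-form gradient of the logistic score with respect to the input x."""
    p = fault_score(x, w, b)
    return p * (1.0 - p) * w

def integrated_gradients(x, baseline, w, b, steps=200):
    """Approximate IG with a midpoint Riemann sum along the straight path baseline -> x."""
    alphas = (np.arange(steps) + 0.5) / steps            # midpoints in (0, 1)
    grads = [fault_score_grad(baseline + a * (x - baseline), w, b) for a in alphas]
    avg_grad = np.mean(grads, axis=0)                    # average gradient along the path
    return (x - baseline) * avg_grad                     # scale by distance from baseline

w = np.array([2.0, -1.0, 0.5])      # made-up weights
b = 0.0
x = np.array([1.0, 0.5, -0.2])      # input to explain
baseline = np.zeros_like(x)         # all-zero reference input

attr = integrated_gradients(x, baseline, w, b)
gap = fault_score(x, w, b) - fault_score(baseline, w, b)
print(attr, attr.sum(), gap)        # completeness: attr.sum() is approximately gap
```

The choice of baseline matters: an all-zero input is a convenient assumption here, but for standardized process data a baseline representing normal operation may be more meaningful.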

SHAP and Integrated Gradients both rest on explicit mathematical principles. A Shapley value is the weighted average of a feature’s marginal contribution over all possible coalitions of the other features. This gives a fair allocation of the prediction among features, and the attributions sum to the difference between the model’s output and a reference output. Integrated Gradients instead belongs to the family of gradient-based sensitivity methods, which measure how small changes in an input feature affect the model’s output. By accumulating these sensitivities along the path from baseline to input, it satisfies a similar completeness property. Both approaches yield quantitative importance values that identify the inputs driving a model’s decision. These values are what make the methods useful for understanding model behavior and building trust in AI systems.
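For a handful of features, exact Shapley values can be computed by brute force. The sketch below assumes a simple "baseline replacement" value function, in which features outside a coalition are set to a reference value. SHAP implementations such as KernelSHAP use a background dataset and sampling instead, so this illustrates the definition rather than any library. The toy model `toy_model` is hypothetical: it has one additive feature and one pairwise interaction, and the Shapley values split the interaction evenly.

```python
from itertools import combinations
from math import factorial
import numpy as np

def exact_shapley(f, x, baseline):
    """Brute-force Shapley values; features outside a coalition take their baseline value.
    Makes 2 * n * 2**(n-1) model calls (no caching), so only usable for a few features."""
    n = len(x)

    def value(coalition):
        z = baseline.copy()
        idx = list(coalition)
        z[idx] = x[idx]            # switch coalition members to their actual values
        return f(z)

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):      # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for S in combinations(others, size):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi

def toy_model(z):
    """Hypothetical model: additive effect of feature 0, interaction of features 1 and 2."""
    return 3.0 * z[0] + 2.0 * z[1] * z[2]

x = np.array([1.0, 1.0, 1.0])
baseline = np.zeros(3)

phi = exact_shapley(toy_model, x, baseline)
print(phi)  # the interaction term is shared equally by features 1 and 2
```

The sum of `phi` equals `toy_model(x) - toy_model(baseline)`, which is the efficiency property described above.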

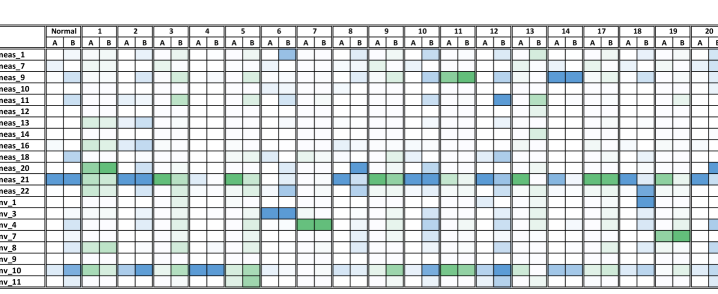

Applied to fault detection on the Tennessee Eastman Process, a complex chemical manufacturing benchmark, the approach is reported to reach 99% accuracy. That figure describes the fault-detection model itself. Post-hoc methods such as SHAP and Integrated Gradients explain a trained model’s predictions without changing them. What they add is a quantified contribution for each input feature, which points to the process variables behind a detected deviation and can support timely corrective action. This pairing of an accurate detector with interpretable attributions underscores the potential of XAI to improve reliability and decision-making in industrial applications where accurate fault diagnosis is paramount.
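For a time-series classifier such as an LSTM, SHAP or Integrated Gradients returns one attribution per (time step, process variable) pair. One simple way to turn that into a variable-level ranking, sketched below, is to sum absolute attributions over the time axis. The function name, the aggregation rule, and the TEP-style `XMEAS`/`XMV` labels are assumptions for illustration, and the attribution values are synthetic rather than results from the study.

```python
import numpy as np

def rank_process_variables(attributions, names, top_k=3):
    """Rank variables by total absolute attribution over the time axis.

    attributions: array of shape (time_steps, n_variables) for one input window,
    e.g. the output of SHAP or Integrated Gradients for an LSTM classifier.
    """
    scores = np.abs(attributions).sum(axis=0)        # one score per variable
    order = np.argsort(scores)[::-1][:top_k]         # largest first
    return [(names[i], float(scores[i])) for i in order]

# Synthetic attributions for a 10-step window over four TEP-style variables.
names = ["XMEAS(1)", "XMEAS(7)", "XMEAS(9)", "XMV(10)"]
attributions = np.zeros((10, 4))
attributions[:, 0] = -0.2
attributions[:, 1] = 0.05
attributions[:, 2] = 0.5

print(rank_process_variables(attributions, names))
```

Taking absolute values ranks variables by magnitude and discards direction. Keeping signed sums instead would show whether a variable pushes the prediction toward or away from the detected fault class.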

Mapping Complexity: Leveraging Graph-Based Networks for Enhanced Understanding

Graph Convolutional Networks (GCNs) extend traditional deep learning architectures to data represented as graphs, allowing relationships between data points to be built into the model. A standard feed-forward network treats its input as a flat vector with no explicit relational structure. A GCN, by contrast, operates on nodes connected by edges, and information propagates between connected nodes during learning. Each convolutional layer aggregates feature information from a node’s neighbors, so the learned representations reflect both node attributes and graph topology. The aggregation typically weights neighboring nodes’ contributions by edge weights or, in attention-based variants, by learned attention coefficients. This capability is particularly valuable in domains with inherently relational data, such as social networks, knowledge graphs, and, as here, complex system modeling and fault detection.

The Process Topology Convolutional Network (PTCN) utilizes Graph Convolutional Networks (GCNs) to analyze complex systems by representing process data as a graph, where nodes represent process variables and edges define relationships between them. This graph-based approach allows the PTCN to move beyond traditional methods that treat variables in isolation, instead leveraging relational information to improve classification accuracy. By applying convolutional operations directly on the process graph, the PTCN learns node embeddings that capture both the individual characteristics of each variable and its context within the larger system. This facilitates more robust and interpretable models suitable for tasks such as fault detection and process monitoring in complex industrial environments.
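As a rough illustration of graph convolution over process variables, the sketch below applies one layer of the widely used propagation rule from Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), to a small hypothetical process graph. The four variable names, the edges, the 5-reading window, and the random weights are all assumptions, and the actual PTCN layer may differ. Each node’s features are the recent readings of one process variable.

```python
import numpy as np

def normalized_adjacency(A):
    """Add self-loops, then apply symmetric degree normalization D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A_norm, H, W):
    """One graph convolution: mix each node with its neighbours, project with W, apply ReLU."""
    return np.maximum(A_norm @ H @ W, 0.0)

# Hypothetical process graph: a chain of four variables linked by process flow.
variables = ["feed_flow", "reactor_pressure", "reactor_temp", "product_flow"]
edges = [(0, 1), (1, 2), (2, 3)]
A = np.zeros((4, 4))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0   # undirected edges

rng = np.random.default_rng(0)
H = rng.normal(size=(4, 5))   # node features: last 5 readings of each variable
W = rng.normal(size=(5, 8))   # layer weights (random here, learned in practice)

A_norm = normalized_adjacency(A)
out = gcn_layer(A_norm, H, W)
print(out.shape)  # (4, 8): one 8-dimensional embedding per process variable
```

Stacking k such layers lets each embedding reflect variables up to k edges away. With a single layer, changing the feed_flow readings affects only its own embedding and that of reactor_pressure.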

Combining GCNs with autoencoders and Layer-wise Relevance Propagation (LRP) is reported to reach 99% accuracy in identifying faults in both the IDV 11 and IDV 8 test cases. The components play different roles. The autoencoder performs dimensionality reduction and feature extraction on the graph data, while LRP, an explanation method, decomposes the model’s prediction backward through the network to identify the features most influential in fault detection. Accuracy therefore comes from the GCN-autoencoder model, and LRP adds interpretability, giving a more detailed understanding of the factors contributing to fault identification.
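The backward decomposition that LRP performs can be sketched for a plain dense ReLU network using the epsilon rule, one of the standard LRP propagation rules. This is not the GCN-autoencoder model from the text. The two-layer network, its hand-picked weights, and the input are hypothetical, and the biases are zero so that relevance is conserved from layer to layer, up to the small stabilizer `eps`.

```python
import numpy as np

def forward(x, layers):
    """Dense network with ReLU on hidden layers and a linear output layer.
    Returns the input to every layer plus the final output."""
    acts = [x]
    for k, (W, b) in enumerate(layers):
        z = acts[-1] @ W + b
        acts.append(z if k == len(layers) - 1 else np.maximum(z, 0.0))
    return acts

def lrp_epsilon(x, layers, target, eps=1e-6):
    """Epsilon-rule LRP: redistribute the target output score back to the input features."""
    acts = forward(x, layers)
    R = np.zeros_like(acts[-1])
    R[target] = acts[-1][target]                       # start from the explained class score
    for k in range(len(layers) - 1, -1, -1):
        W, b = layers[k]
        a = acts[k]                                     # activations entering layer k
        z = a @ W + b                                   # pre-activations of layer k
        z_stab = z + eps * np.where(z >= 0, 1.0, -1.0)  # avoid division by zero
        s = R / z_stab
        R = a * (W @ s)                                 # relevance of each input unit
    return R

# Hypothetical 3-input, 2-hidden-unit, 2-class network with zero biases.
x = np.array([1.0, 2.0, -1.0])
layers = [
    (np.array([[1.0, 0.5], [0.5, -1.0], [0.0, 1.0]]), np.zeros(2)),
    (np.array([[1.5, -1.0], [1.0, 1.0]]), np.zeros(2)),
]
R = lrp_epsilon(x, layers, target=0)
print(R, R.sum(), forward(x, layers)[-1][0])  # input relevances sum to the class score
```

Here the second hidden unit is inactive after ReLU, so it passes no relevance back, and the third input receives none.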

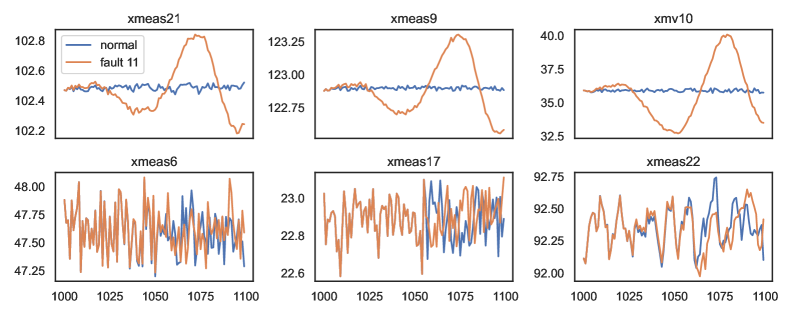

Analysis with SHAP (SHapley Additive exPlanations) revealed greater variation in the contributions of key variables to model outputs than analysis with Integrated Gradients. This suggests that SHAP gives a more granular view of feature importance, capturing cases where the same feature has different impacts depending on the input data. Because SHAP evaluates contributions against many feature coalitions and background values, it can expose a wider range of behavioral patterns in the model. Integrated Gradients, which averages gradients along a single path from one baseline, may smooth over some of these variations. Part of the difference may also reflect the two methods’ different choices of baseline.

Expanding the Horizon: Towards a Future of Trustworthy and Collaborative Intelligence

The analytical framework extends well beyond process optimization and industrial control. Its core principle, attributing a complex model’s predictions to interpretable input features, adapts readily to other fields, notably image classification. SHAP and Integrated Gradients operate on generic model inputs. The techniques used here for process variables can therefore be repurposed to attribute an image classifier’s decision to individual pixels or regions, explaining why an image is classified in a specific way rather than simply that it is. This cross-domain applicability highlights the versatility of the approach and suggests a path toward more transparent and reliable AI systems. More broadly, decomposing a model’s decision into understandable contributions benefits any domain grappling with high-dimensional data and opaque decision-making, from medical diagnostics to financial modeling.

The Tennessee Eastman Process, a benchmark in process control, is challenging because of its complex, non-linear dynamics and high dimensionality. Architectures that feed Long Short-Term Memory (LSTM) layers into fully connected layers offer a robust approach to the time-series data this process generates. LSTMs capture long-term dependencies in sequential data, which matters for the delayed effects inherent in chemical processes. The fully connected layers then map the learned temporal features to outputs. Such models can be used to predict process variables and to detect and classify anomalies, and their outputs can in turn inform control strategies. Compared with traditional monitoring methods, this combination can offer improved accuracy and a deeper view of the underlying process dynamics, making it a promising foundation for advanced process monitoring and control.
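A minimal sketch of the LSTM-plus-dense architecture follows. It is written as a NumPy forward pass so that the gate equations are visible. The sizes are assumptions: 52 input variables (the set commonly used in Tennessee Eastman benchmarks), a 20-step window, 32 hidden units, and 21 output classes (an assumed count of normal operation plus 20 fault types). The weights are random and untrained, so the printed probabilities are meaningless. In practice the model would be trained in a framework such as PyTorch or Keras.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class LSTMClassifier:
    """Forward pass only: one LSTM layer over a (time, variables) window, then a dense softmax head."""

    def __init__(self, n_vars, n_hidden, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_hidden)
        # Gate weights stacked as [input, forget, cell candidate, output].
        self.W = rng.normal(0.0, scale, (n_vars, 4 * n_hidden))
        self.U = rng.normal(0.0, scale, (n_hidden, 4 * n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.W_out = rng.normal(0.0, scale, (n_hidden, n_classes))
        self.b_out = np.zeros(n_classes)
        self.n_hidden = n_hidden

    def predict_proba(self, window):
        H = self.n_hidden
        h = np.zeros(H)                      # hidden state
        c = np.zeros(H)                      # cell state carries long-term memory
        for x_t in window:                   # iterate over time steps
            gates = x_t @ self.W + h @ self.U + self.b
            i = sigmoid(gates[:H])           # input gate
            f = sigmoid(gates[H:2 * H])      # forget gate
            g = np.tanh(gates[2 * H:3 * H])  # candidate cell update
            o = sigmoid(gates[3 * H:])       # output gate
            c = f * c + i * g
            h = o * np.tanh(c)
        logits = h @ self.W_out + self.b_out  # dense layer on the final hidden state
        e = np.exp(logits - logits.max())     # numerically stable softmax
        return e / e.sum()

model = LSTMClassifier(n_vars=52, n_hidden=32, n_classes=21)
window = np.random.default_rng(1).normal(size=(20, 52))  # synthetic standardized readings
probs = model.predict_proba(window)
print(probs.argmax(), probs.max())
```

Integrated Gradients, for example, would attribute the predicted class probability back to each of the 20 × 52 entries of the input window.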

Continued development of Explainable Artificial Intelligence (XAI) methods remains crucial as systems grow in complexity, demanding techniques that move beyond simple feature importance to provide truly insightful and actionable explanations. Current XAI approaches often struggle with scalability, becoming computationally prohibitive when applied to high-dimensional data or intricate models; future research must prioritize methods capable of handling these challenges without sacrificing fidelity or comprehensibility. A key focus should be on developing XAI techniques that are not merely post-hoc interpretations, but are intrinsically woven into the model’s architecture, allowing for real-time explanations and fostering greater trust in automated decision-making processes. Furthermore, evaluating the quality of explanations – ensuring they are both accurate and understandable to diverse audiences – will be paramount to unlocking the full potential of XAI in critical applications.
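The scalability concern can be made concrete for exact Shapley values. With a feature set N of size n and a value function v, each feature’s Shapley value is a weighted sum over every coalition S of the other features:

$$\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n-|S|-1)!}{n!}\,\bigl[v(S \cup \{i\}) - v(S)\bigr]$$

Computing all of them exactly requires v on each of the 2^n coalitions. Taking, as an assumption, the 52 process variables commonly used in Tennessee Eastman studies gives

$$2^{52} \approx 4.5 \times 10^{15}$$

coalitions. Treating each entry of an assumed 10-step window as a separate feature (n = 520) gives

$$2^{520} \approx 3.4 \times 10^{156}$$

This is why practical SHAP estimators sample coalitions or exploit model structure instead of enumerating them.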

The convergence of advanced process monitoring techniques with Natural Language Processing (NLP) presents a compelling pathway towards more intuitive and effective human-machine collaboration. By enabling systems to not only detect anomalies but also to articulate their reasoning in natural language, operators gain a deeper understanding of complex processes, fostering trust and facilitating informed decision-making. This integration transcends simple alert systems; it envisions a future where machines can explain why a particular state is flagged, suggest potential corrective actions, and even engage in a dialogue to refine strategies. Such capabilities are particularly valuable in high-stakes environments, like chemical plants or energy grids, where nuanced understanding and rapid response are critical, ultimately moving beyond automation towards genuine collaborative intelligence.

The pursuit of understanding why a deep learning model flags a fault in a chemical process matters beyond mere accuracy. This study highlights the value of techniques like SHAP and Integrated Gradients not simply as ‘black box’ openers, but as tools that reveal the system’s internal logic. Fault detection isn’t just about identifying anomalies. It is also about understanding how the model arrived at its conclusion, which illuminates the underlying process behavior and enables informed intervention. The careful application of explainable AI, as demonstrated, reveals the delicate balance between model complexity and interpretability, mirroring a well-designed system in which each component’s role is clearly defined.

The Road Ahead

The pursuit of explainability, as demonstrated in this work, often feels like illuminating a black box with a flickering candle. While methods like SHAP and Integrated Gradients offer glimpses into model reasoning, the fundamental challenge remains: these are post-hoc justifications, not inherent properties of the system itself. The focus on interpreting decisions after they’ve been made obscures a more crucial point, namely that the architecture should ideally facilitate transparency from the outset. A reliance on complex deep learning models, even with explainability tools, simply shifts the opacity; it does not eliminate it.

Future work must address the trade-off between model complexity and interpretability. Scaling these explanation techniques to genuinely high-dimensional, dynamic processes will prove difficult; the computational cost of explaining a decision can quickly exceed the cost of making it. The true metric of success won’t be the volume of explanations produced, but the degree to which those explanations engender trust and facilitate meaningful intervention.

Ultimately, the field needs to move beyond explaining what a model has done and focus on why it chose that course of action, considering the broader process context. A model divorced from the underlying physics and chemistry is a brittle thing, no matter how elegantly it’s explained. The goal should not be to make complex systems understandable, but to design systems that are, fundamentally, simple.

Original article: https://arxiv.org/pdf/2602.16341.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-20 05:45