Cleaning Up Code Training Data for Smarter AI

A new framework dynamically filters out noisy labels during training, boosting the performance and reliability of language models used for code-related tasks.

A new approach to clinical decision support uses interconnected AI agents to more accurately identify secondary headaches in primary care settings.

A new theoretical framework explores how federated learning can overcome the unique challenges of terahertz wireless communication.

A new decision support system uses fuzzy logic to analyze real-time performance data and suggest optimal player substitutions, potentially offering a more nuanced approach than traditional methods.

New research reveals that deep learning models consistently operate within surprisingly constrained spaces, offering pathways to more efficient AI.

New research shows that smaller artificial intelligence models, enhanced with reasoning abilities, can match the performance of much larger systems in identifying critical risks to children.

A new study explores how well traditional machine learning techniques can identify sarcastic comments on Reddit, focusing solely on the text of replies.

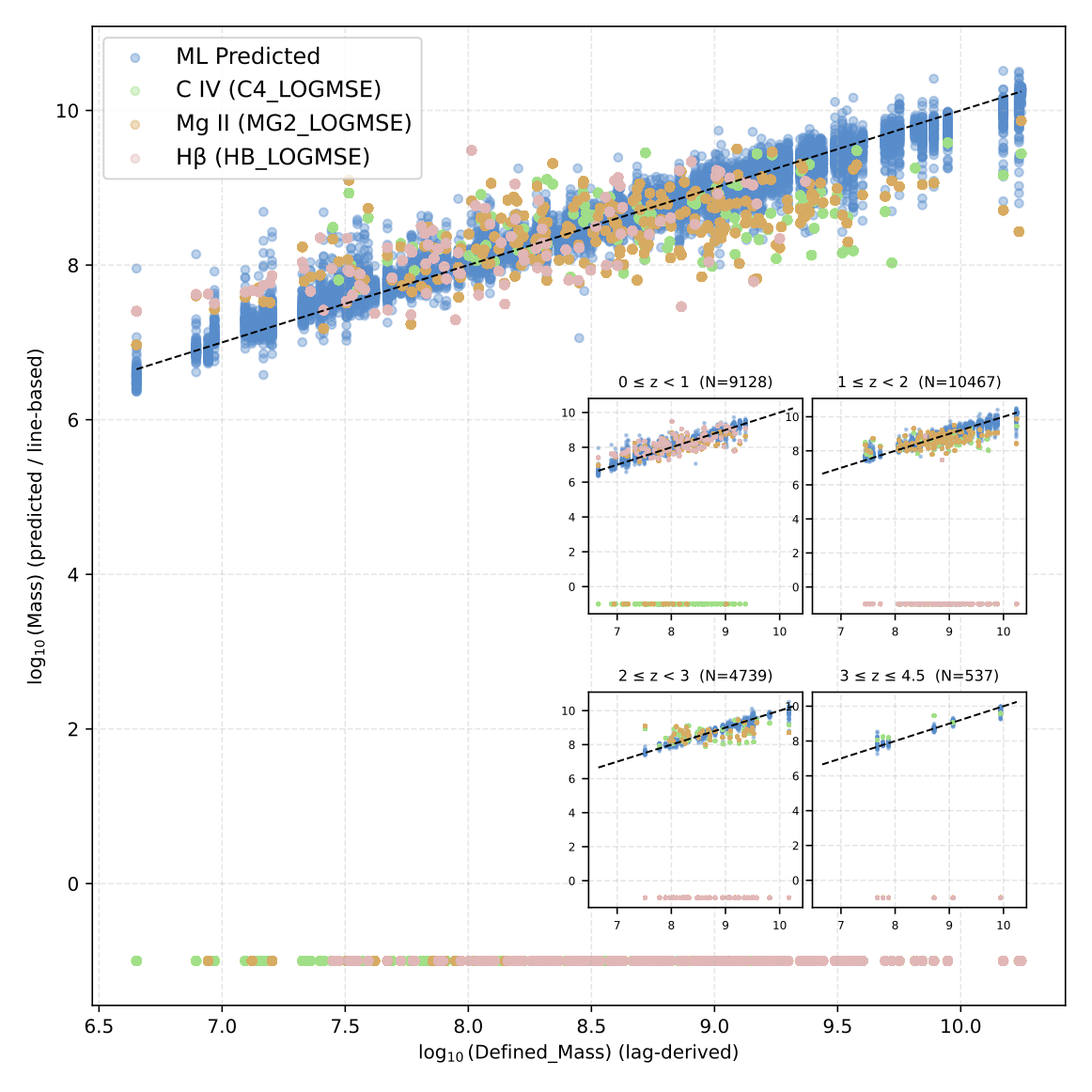

A new deep learning model delivers precise black hole mass estimates for an unprecedented sample of quasars, offering a comprehensive view of these cosmic behemoths.

Researchers have developed a theoretical framework to optimize how large AI models distribute work, ensuring efficient use of specialized components.

New research reveals that the effectiveness of optimization algorithms hinges on the rank of neural network activations, explaining why some methods excel in certain scenarios.