Sifting Signal from Noise: A Data Efficiency Framework

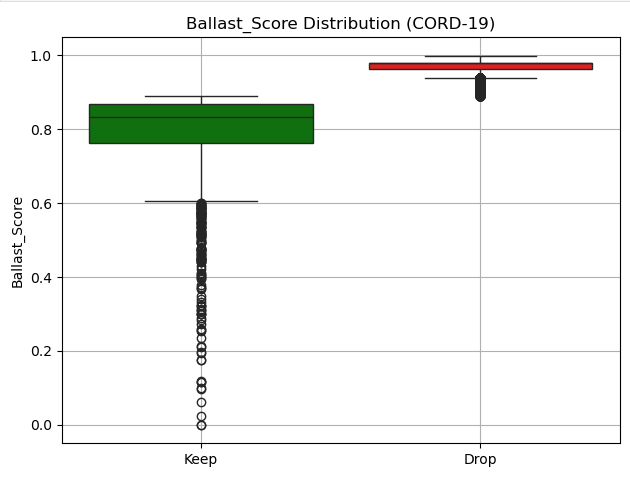

New research introduces a method for identifying and eliminating redundant information in multi-modal datasets, boosting analytical performance and reducing storage costs.

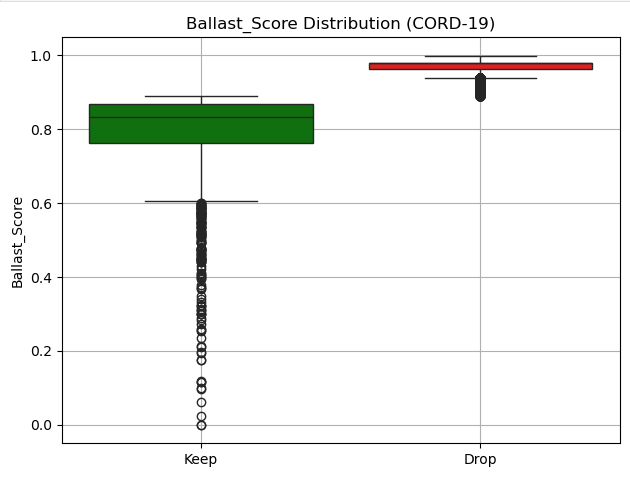

A new framework uses speech recognition and artificial intelligence to turn unstructured emergency communications into actionable data for coordinating UAVs.

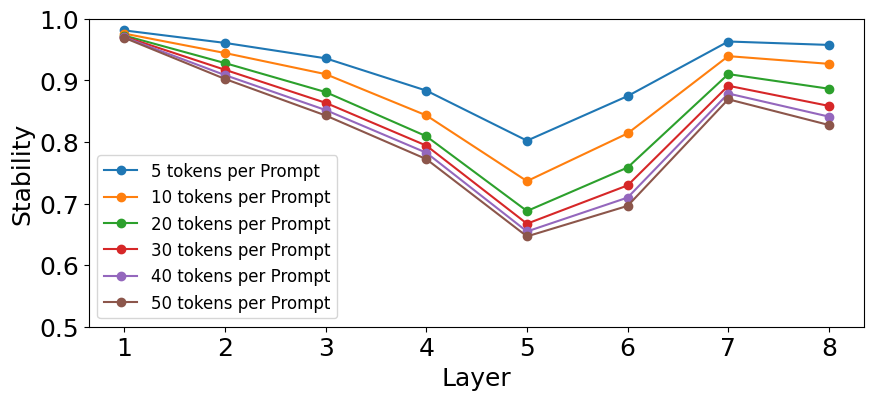

New research reveals that the core components of large language models exhibit surprising instability, challenging assumptions about the consistency of their learned representations.

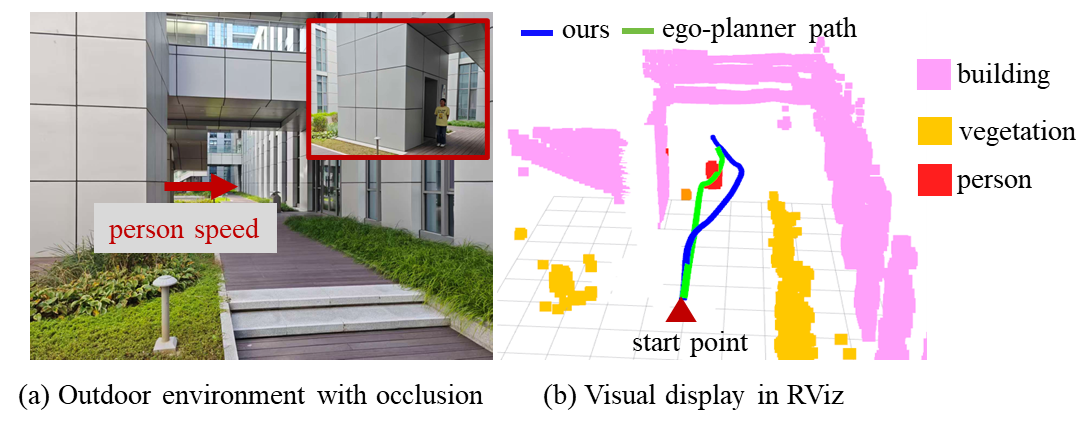

A new system leverages semantic understanding of surroundings to enable aerial robots to proactively avoid hazards and navigate complex, unpredictable environments.

![The study assesses the constraining power of a C3NN model by phase-randomizing its training maps in Fourier space: a Fast Fourier Transform (FFT), replacement of the phases with values drawn uniformly between 0 and 2π, and an inverse FFT. This tests how much the model relies on subtle, potentially illusory, correlations within the cosmological data.](https://arxiv.org/html/2602.16768v1/x8.png)

A new framework uses convolutional neural networks to extract richer information from weak lensing data, potentially unlocking more precise measurements of cosmological parameters.
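The phase-randomization test in the figure caption is concrete enough to sketch. The NumPy version below is a minimal illustration under our own assumptions, not the paper's code. The function name `phase_randomize` is hypothetical. The new phases come from the FFT of real white noise, a choice we made: this gives phases that are uniform on [0, 2π) for generic modes while keeping the Hermitian symmetry a real-valued map requires. The zero-frequency phase is also kept so the map's mean is unchanged.

```python
import numpy as np


def phase_randomize(field, rng=None):
    """Hypothetical helper: keep the Fourier amplitudes of a 2D map, randomize its phases.

    Assumption: random phases are taken from the FFT of real white noise, so they
    are uniform on [0, 2*pi) for generic modes and antisymmetric under k -> -k,
    which keeps the inverse FFT real.
    """
    rng = np.random.default_rng(rng)
    field = np.asarray(field, dtype=float)

    # Forward FFT of the input map; its amplitudes define the power spectrum.
    spectrum = np.fft.fft2(field)
    amplitude = np.abs(spectrum)

    # Phases drawn from the FFT of real Gaussian white noise (Hermitian by construction).
    random_phase = np.angle(np.fft.fft2(rng.standard_normal(field.shape)))

    # Keep the zero-frequency (mean) mode unchanged.
    random_phase[0, 0] = np.angle(spectrum[0, 0])

    # Recombine the original amplitudes with the random phases, then invert.
    randomized = amplitude * np.exp(1j * random_phase)
    # The imaginary part is only floating-point residue, so discard it.
    return np.fft.ifft2(randomized).real
```

Only the phases change, so the map's power spectrum is preserved. If a model loses constraining power on such maps, that would suggest it draws on information beyond two-point statistics.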

New research introduces a dynamic approach to prevent performance degradation and maintain safety standards as large language models are refined for specific tasks.

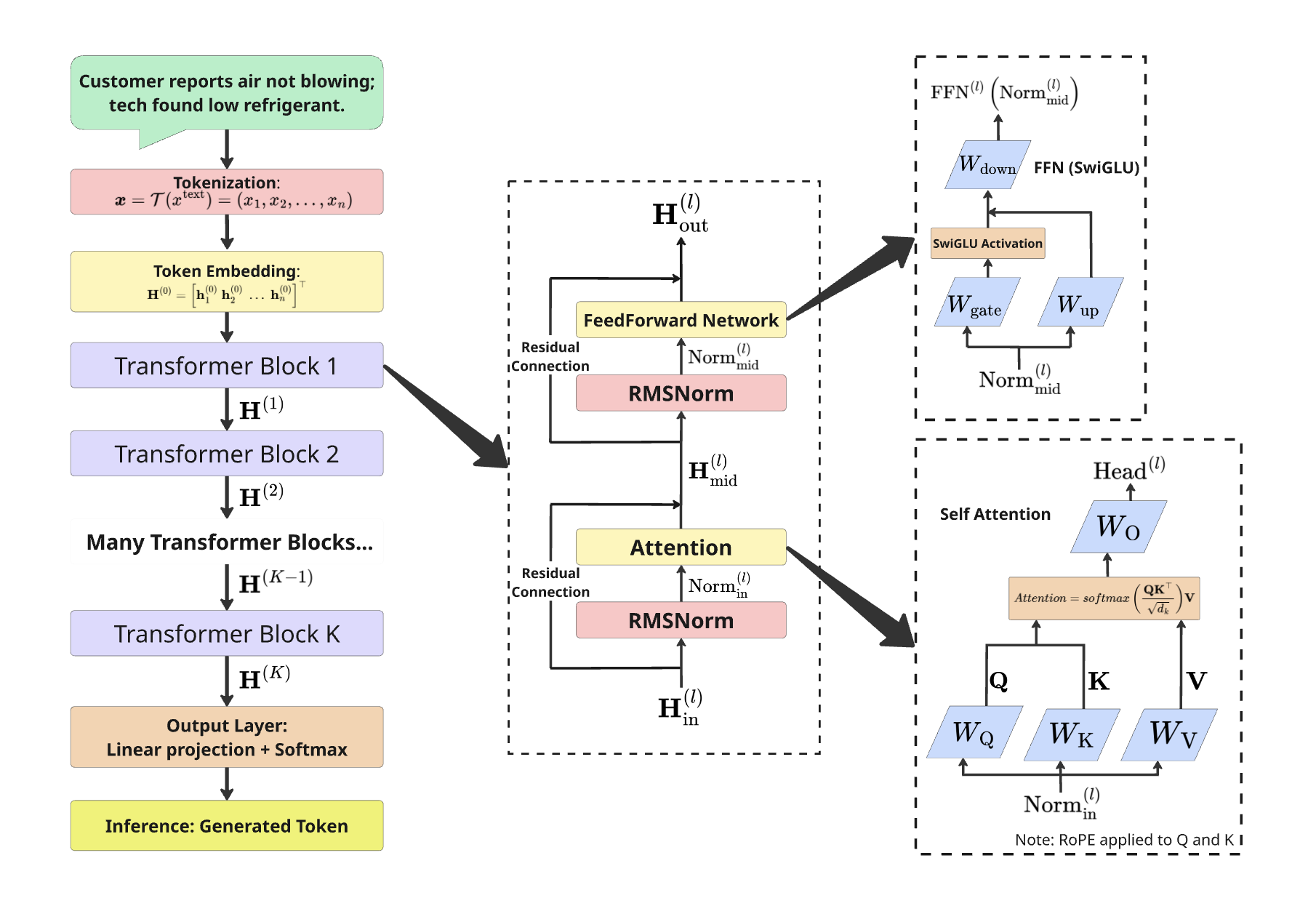

New research demonstrates that fine-tuned artificial intelligence models can accurately forecast corrective actions for warranty claims, paving the way for truly automated processing.

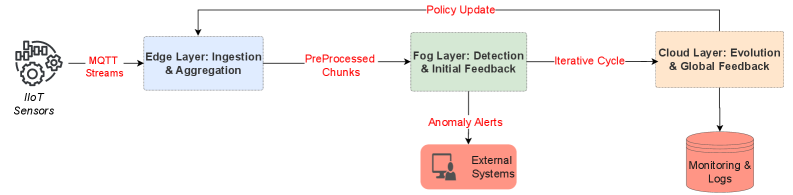

A new approach to predictive maintenance leverages self-evolving multi-agent systems to enhance reliability and minimize downtime in industrial IoT environments.

Researchers are combining distributed learning with advanced neural networks to improve the accuracy of lung disease diagnosis while safeguarding patient data.

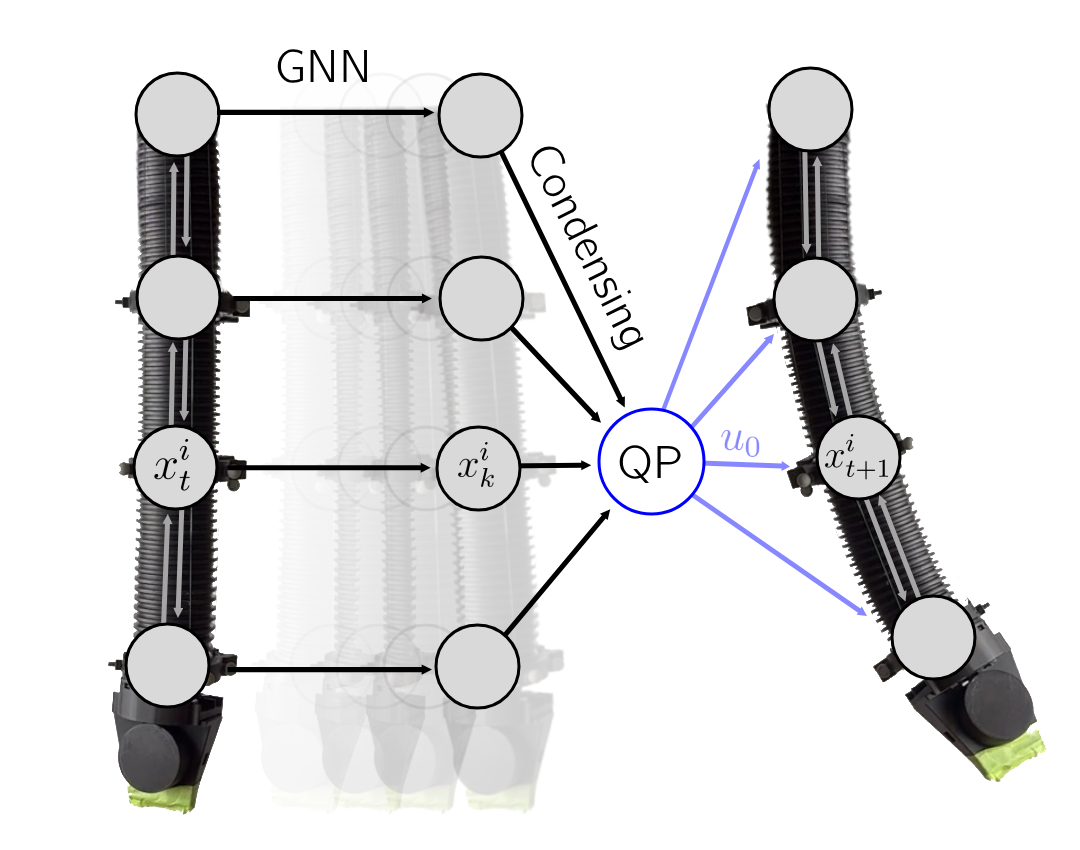

A new approach combines graph neural networks and model predictive control to enable real-time command of complex systems like soft robots.