Riding the Chaos: Accurately Predicting System Responses to Complex Forces

![State space trajectories, captured at intervals of approximately 10 seconds, compare the predictions of a Gaussian State Space model (red) and an autoencoder-LSTM network (green) against a periodically forced system (cyan) and its true response (black). The trajectories are projected onto the phase space coordinates [latex]x_{10}, \dot{x}_{10}, x_{11}[/latex] and [latex]x_1, \dot{x}_1, x_{20}[/latex], showing how well each model approximates the system behavior across varying states.](https://arxiv.org/html/2602.16848v1/x16.png)

A new computational approach offers a faster and more precise method for determining the stable states of mechanical systems under unpredictable external influences.
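For context on what "determining the stable state" of a periodically forced mechanical system involves, the sketch below shows the brute-force baseline: integrate the equations of motion from rest until the transient dies out, then confirm that the state repeats once per forcing period. This is not the article's method. The oscillator (a damped, hardening Duffing equation), its parameter values, and the names `rhs`, `rk4_step`, and `steady_state` are all illustrative assumptions.

```python
import math

# Assumed demo system: damped, hardening Duffing oscillator
#   x'' + C x' + K x + ALPHA x^3 = F cos(OMEGA t)
# Parameter values are hypothetical, chosen only for illustration.
C, K, ALPHA, F, OMEGA = 0.3, 1.0, 0.2, 0.5, 1.2


def rhs(t, x, v):
    """Return (dx/dt, dv/dt) for the forced oscillator."""
    return v, F * math.cos(OMEGA * t) - C * v - K * x - ALPHA * x ** 3


def rk4_step(t, x, v, h):
    """Advance (x, v) by one classical fourth-order Runge-Kutta step of size h."""
    k1x, k1v = rhs(t, x, v)
    k2x, k2v = rhs(t + h / 2, x + h / 2 * k1x, v + h / 2 * k1v)
    k3x, k3v = rhs(t + h / 2, x + h / 2 * k2x, v + h / 2 * k2v)
    k4x, k4v = rhs(t + h, x + h * k3x, v + h * k3v)
    x_new = x + h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
    v_new = v + h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return x_new, v_new


def steady_state(transient_periods=200, steps_per_period=200):
    """Integrate from rest, discard the transient, and return the stroboscopic
    samples (x, v) taken at the end of the last two forcing periods, plus the
    peak |x| observed during the final period."""
    period = 2 * math.pi / OMEGA
    h = period / steps_per_period
    t, x, v = 0.0, 0.0, 0.0
    samples, peak = [], 0.0
    for p in range(transient_periods + 2):
        for _ in range(steps_per_period):
            x, v = rk4_step(t, x, v, h)
            t += h
            if p == transient_periods + 1:
                peak = max(peak, abs(x))
        if p >= transient_periods:
            samples.append((x, v))
    return samples, peak


samples, peak = steady_state()
print("stroboscopic samples:", samples)
print("steady-state amplitude ~", round(peak, 3))
```

If the two stroboscopic samples agree, the response has settled onto a period-1 steady state. The cost of this approach grows with how slowly the transient decays (here set by the damping `C`), which is one motivation for faster ways of locating steady states.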


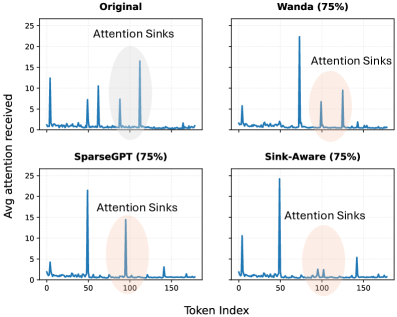

A new pruning strategy targets unstable attention patterns in diffusion models to significantly improve computational efficiency without sacrificing performance.

A novel framework combines established physical models with data-driven techniques to achieve more accurate and efficient identification of complex nonlinear systems.

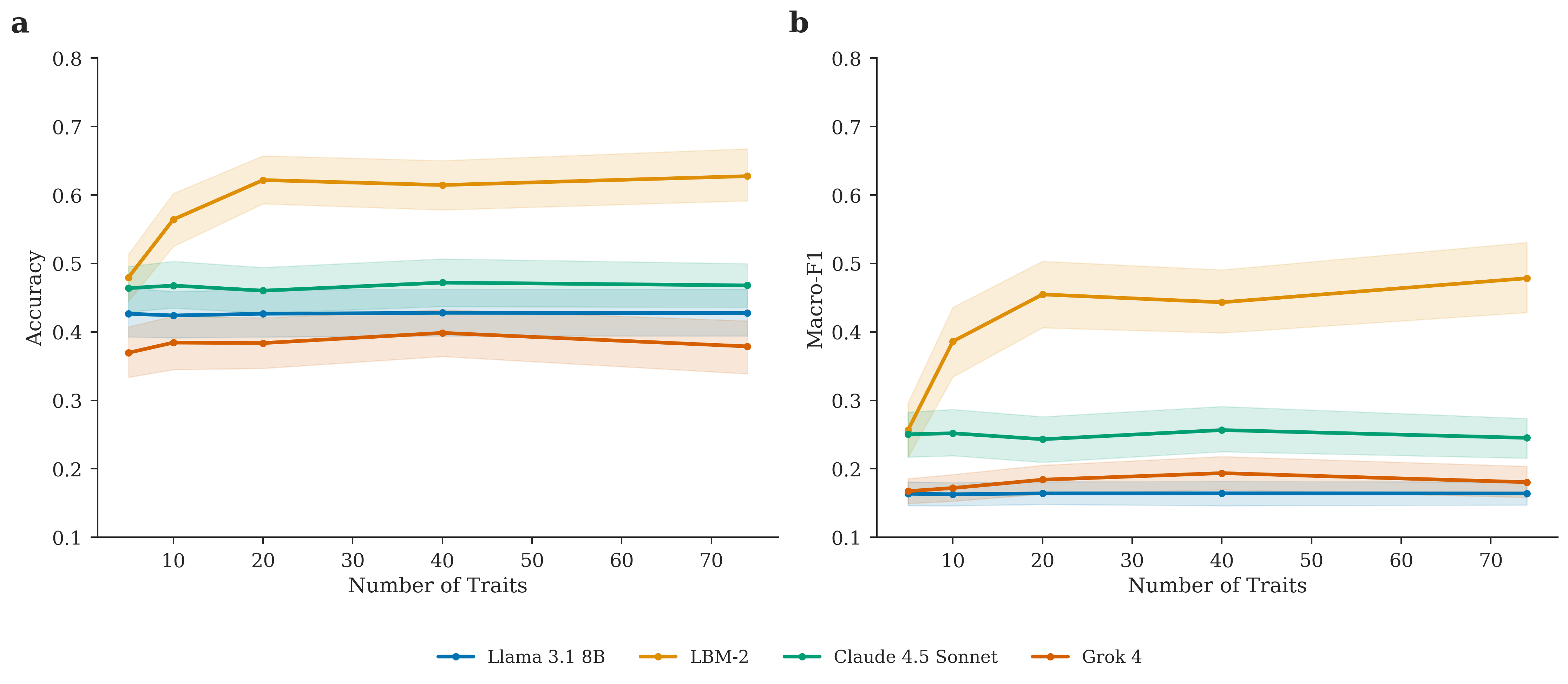

Researchers have developed a novel approach to forecasting individual actions by integrating psychological traits with the power of large language models.

A novel data-driven framework offers a robust method for detecting subtle structural changes that signal critical transitions in high-dimensional dynamical systems.

A new approach utilizes advanced 3D reconstruction techniques to create detailed digital twins of civil infrastructure, enabling precise damage assessment and long-term monitoring.

A new study reveals how neural machine translation systems can lose representational diversity, and demonstrates a method to preserve translation quality by maximizing the angular separation of decoder embeddings.

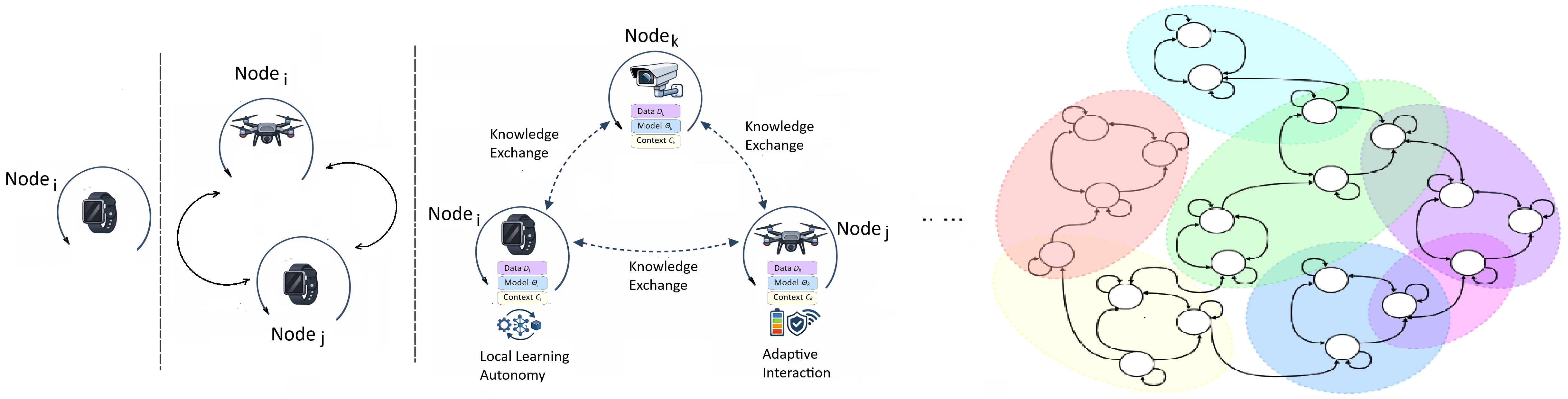

A new paradigm shifts intelligence away from centralized servers and onto individual devices, enabling continuous learning and real-time adaptation.

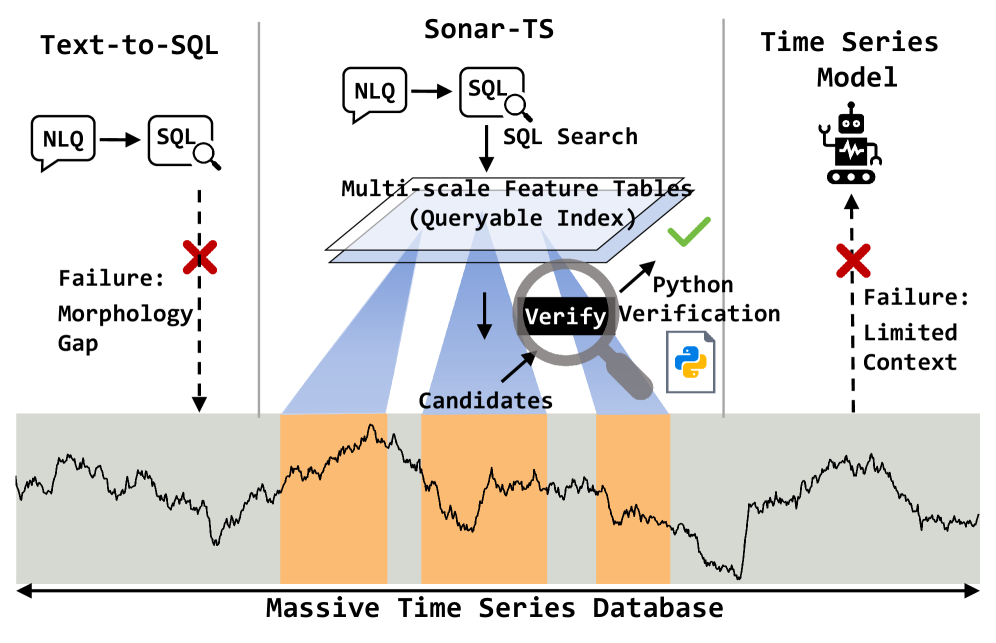

Researchers have developed a new framework that allows users to query time series databases using plain English, overcoming the limitations of traditional methods.

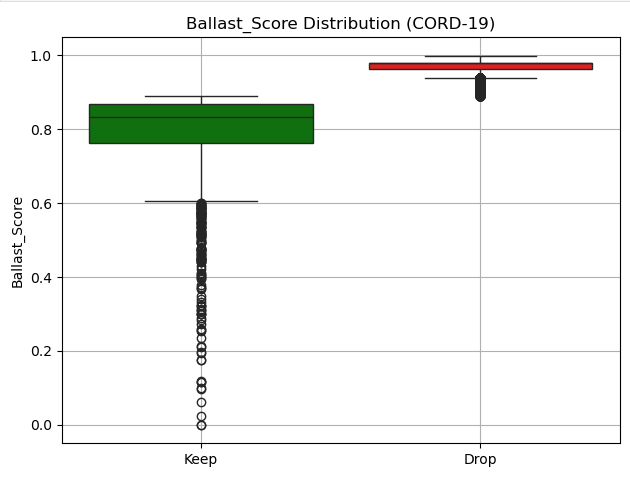

New research introduces a method for identifying and eliminating redundant information in multi-modal datasets, boosting analytical performance and reducing storage costs.