Author: Denis Avetisyan

New research explores how to make large language models more reliable at identifying vulnerabilities in code.

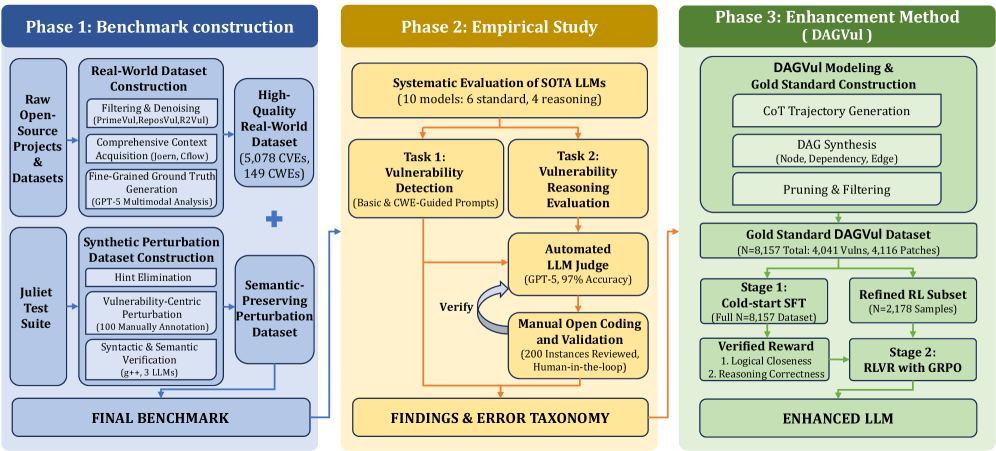

A novel framework leveraging Directed Acyclic Graphs significantly enhances the reasoning fidelity of large language models for vulnerability detection and security auditing.

Despite advances in vulnerability detection, Large Language Models (LLMs) often arrive at correct answers through flawed reasoning, obscuring a critical reliability gap in AI-assisted security auditing. This work, ‘Evaluating and Enhancing the Vulnerability Reasoning Capabilities of Large Language Models’, introduces DAGVul, a novel framework that models vulnerability reasoning as a Directed Acyclic Graph (DAG) generation task, enforcing structural consistency and aligning model traces with program logic. Empirical results demonstrate that DAGVul achieves an average improvement of 18.9% in reasoning F1-score, surpassing existing models (including those with significantly larger parameter counts) and even approaching the performance of state-of-the-art systems like Claude-Sonnet-4.5. Could this approach unlock a new paradigm for trustworthy and explainable AI in critical security applications?
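To make the idea of a reasoning trace as a DAG more concrete, here is a minimal sketch in Python. It is not the paper's implementation: the step names, the `trace` dictionary, and the `order_reasoning_steps` helper are all hypothetical, and the only property checked is that every step depends on defined steps and that no dependency cycle exists.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical reasoning trace: each step maps to the steps it depends on.
# The step names are illustrative and are not taken from the paper.
trace = {
    "source: user-controlled length": [],
    "propagation: length passed to copy routine": [
        "source: user-controlled length",
    ],
    "missing check: no bounds validation": [
        "propagation: length passed to copy routine",
    ],
    "sink: write past buffer end": [
        "propagation: length passed to copy routine",
        "missing check: no bounds validation",
    ],
}


def order_reasoning_steps(steps):
    """Return the steps in dependency order, or None if the trace is not a valid DAG."""
    # Reject references to steps that were never defined.
    for deps in steps.values():
        for dep in deps:
            if dep not in steps:
                return None
    try:
        # static_order() yields each step only after all of its dependencies.
        return list(TopologicalSorter(steps).static_order())
    except CycleError:
        # Circular justification: a step ends up depending on itself.
        return None


ordered = order_reasoning_steps(trace)
circular = order_reasoning_steps({"a": ["b"], "b": ["a"]})  # None
```

A trace that passes this check can be read from source to sink; a trace that fails it contains circular or dangling justification, which is the kind of structural inconsistency a graph representation makes easy to detect.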

The Inherent Limitations of Contemporary Vulnerability Detection

Traditional vulnerability detection often begins with static analysis, a technique examining source code without actually executing it. While valuable for identifying obvious flaws, this approach increasingly falters when confronted with the intricacies of modern codebases. Complex software, characterized by extensive interdependencies, dynamic behavior, and obfuscation techniques, presents a significant challenge to static analyzers. These tools struggle to trace the flow of data and control through convoluted code paths, leading to incomplete analyses and a high rate of false negatives. The sheer scale of many applications further exacerbates the problem: the number of paths a thorough analysis must consider can grow exponentially with the number of branches, which limits its practical application in many real-world scenarios.

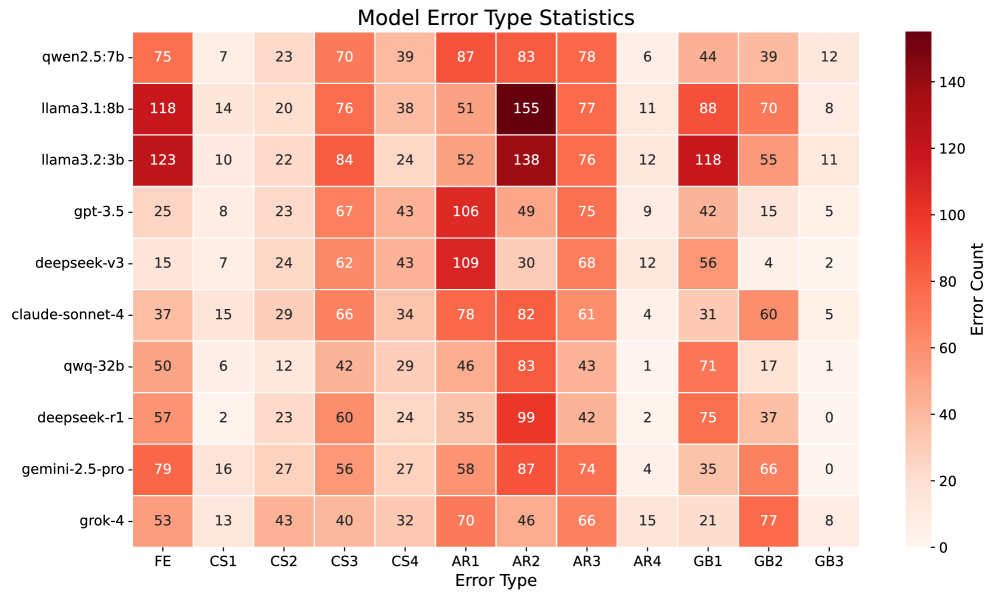

Traditional vulnerability detection systems, while valuable, frequently stumble due to their reliance on predefined rules. These systems operate by identifying code patterns that match known vulnerabilities, but this approach struggles with the ever-evolving landscape of software exploits. Consequently, they often flag benign code as problematic – generating false positives that overwhelm security teams. More critically, this rigid methodology frequently misses subtle or novel vulnerabilities that don’t neatly fit established patterns. These nuanced weaknesses, often arising from complex interactions within the code, remain hidden because the system lacks the capacity to understand the intent of the code, rather than simply its structure. This limitation highlights a core challenge: detecting vulnerabilities requires more than pattern matching; it demands a degree of reasoning about the code’s behavior.
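The weakness of pure pattern matching can be shown with a toy example. The sketch below assumes a single hypothetical signature rule (flag every call to `strcpy`) and two made-up C snippets; real scanners use far richer rule sets, so this only illustrates the failure mode described above.

```python
import re

# Hypothetical signature-style rule: flag any call to strcpy.
RULE = re.compile(r"\bstrcpy\s*\(")


def flags(snippet):
    """Return True if the rule matches anywhere in the snippet."""
    return bool(RULE.search(snippet))


# Benign: the destination is large enough for the fixed literal,
# yet the rule still fires (a false positive).
benign = 'char buf[16]; strcpy(buf, "ok");'

# Flawed: an unchecked manual copy loop with no strcpy call,
# so the rule stays silent (a false negative).
flawed = "for (i = 0; i < n; i++) dst[i] = src[i];"

false_positive = flags(benign)       # True
false_negative = not flags(flawed)   # True
```

The rule sees only the text of the code. Deciding that the first snippet is safe and the second is not requires reasoning about buffer sizes and loop bounds, which is exactly what the pattern cannot express.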

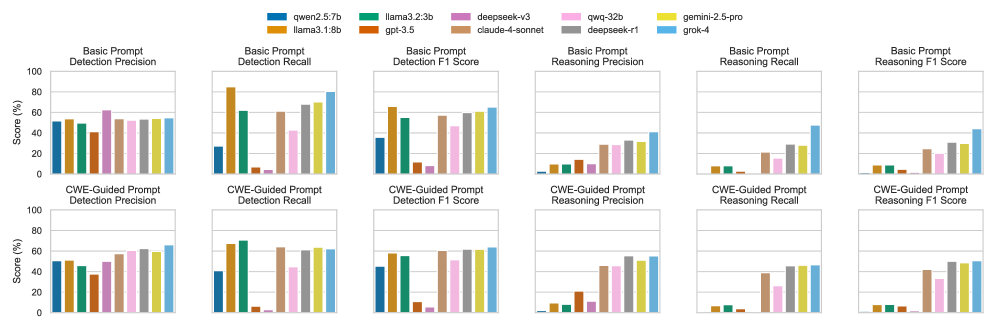

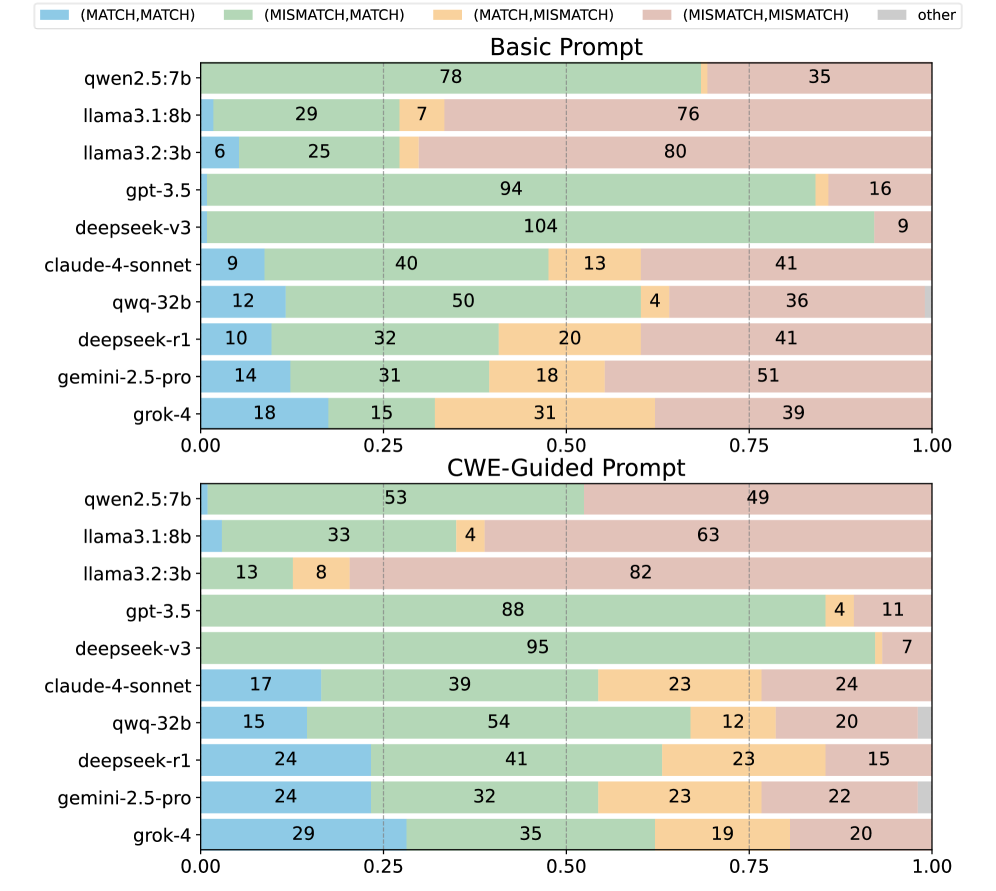

Current vulnerability detection systems frequently fail to grasp the core logic behind successful exploits, focusing instead on pattern matching rather than genuine vulnerability reasoning. This limitation results in a surprisingly low accuracy rate – around 63.5% – in correctly identifying the ‘why’ of a security flaw. Consequently, these tools often miss subtle yet critical vulnerabilities that a human analyst might recognize by understanding the attacker’s intent and the underlying code behavior. The inability to accurately deduce the reasoning behind an exploit significantly hinders proactive security measures and leaves systems vulnerable to attacks that bypass conventional detection methods, highlighting a crucial need for more sophisticated analytical approaches.

Beyond Static Inspection: The Power of Dynamic Analysis

Dynamic analysis complements static analysis techniques by actively running the software under observation and examining its behavior for deviations from expected norms. This approach involves executing the code with various inputs and monitoring system calls, memory access patterns, network traffic, and other runtime characteristics. Anomalies detected during execution – such as buffer overflows, memory leaks, or unexpected control flow – are flagged as potential vulnerabilities. Unlike static analysis, which relies on examining the code without executing it, dynamic analysis provides insights into the actual execution paths taken and the program’s real-world behavior, enabling the identification of vulnerabilities that might not be apparent through code inspection alone.

Dynamic analysis facilitates vulnerability reasoning by observing a program’s execution and identifying the specific sequence of instructions, or execution path, that leads to a flaw. Unlike static analysis which infers potential vulnerabilities, dynamic analysis demonstrates actual exploitation paths. By monitoring variables, memory access, and control flow during runtime, analysts can pinpoint the precise conditions under which a vulnerability is triggered. This direct observation provides concrete evidence supporting vulnerability assessments and allows for a more accurate understanding of the root cause, going beyond theoretical possibilities to confirm exploitable behavior.
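As a rough illustration of observing an execution path, the following Python sketch runs a small function under `sys.settrace` and records which lines executed before a failure. The `parse_record` target and the `run_traced` helper are hypothetical; production dynamic analysis tools instrument native code and track much more state.

```python
import sys


def parse_record(data):
    # Hypothetical target: the first byte declares the payload length.
    declared = data[0]
    payload = data[1:]
    out = []
    for i in range(declared):
        out.append(payload[i])  # IndexError when declared > len(payload)
    return out


def run_traced(func, arg):
    """Run func(arg); return (executed line offsets within func, exception or None)."""
    path = []
    code = func.__code__

    def tracer(frame, event, _arg):
        # Record only line events that belong to the function under test.
        if frame.f_code is code and event == "line":
            path.append(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(arg)
        error = None
    except Exception as exc:
        error = exc
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return path, error


ok_path, ok_error = run_traced(parse_record, bytes([2, 65, 66]))
bad_path, bad_error = run_traced(parse_record, bytes([5, 65]))
```

Here the second input declares five payload bytes but supplies one, so the run ends in an `IndexError`, and `bad_path` holds the sequence of line offsets that led to it. That recorded sequence is a concrete, reproducible trigger condition rather than an inferred possibility.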

Effective dynamic analysis, while valuable for identifying vulnerabilities, presents practical challenges regarding resource utilization and test coverage. Achieving comprehensive analysis necessitates substantial computational power to execute code and monitor its behavior across a wide range of inputs. Furthermore, the generation of effective test cases is critical; insufficient or poorly designed tests can limit the scope of analysis and lead to incomplete results. Current dynamic analysis methods, despite advancements, demonstrate a flawed reasoning rate averaging approximately 36.4%, indicating a significant proportion of potential vulnerabilities may remain undetected due to limitations in test case design or computational constraints.

Complexity as a Harbinger of Vulnerability

Code complexity, as quantified by metrics such as cyclomatic complexity and token count, exhibits a demonstrable correlation with an increased incidence of software vulnerabilities. Cyclomatic complexity measures the number of linearly independent paths through a program’s source code, with higher values indicating greater decision complexity and, consequently, a larger potential attack surface. Token count, representing the total number of lexical elements in the code, serves as a proxy for code size and overall structural intricacy. Empirical analysis consistently reveals that code modules exceeding established complexity thresholds are disproportionately likely to contain security flaws, attributable to increased cognitive load on developers and a heightened probability of introducing errors during development and maintenance. These metrics, while not definitive predictors, provide a valuable initial signal for prioritizing security review and static analysis efforts.
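Both metrics are straightforward to approximate. The sketch below computes them for Python source using only the standard library; the choice of which syntax nodes count as decision points is a simplifying assumption, and the `SAMPLE` function is invented for the example.

```python
import ast
import io
import tokenize

# Syntax nodes treated as decision points (a simplifying assumption).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                ast.IfExp, ast.comprehension)


def cyclomatic_complexity(source):
    """Approximate McCabe complexity: 1 + number of decision points."""
    score = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of and/or adds a short-circuit branch.
            score += len(node.values) - 1
    return score


def token_count(source):
    """Count lexical tokens, ignoring layout-only tokens and comments."""
    skip = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT,
            tokenize.DEDENT, tokenize.COMMENT, tokenize.ENDMARKER}
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return sum(1 for tok in tokens if tok.type not in skip)


SAMPLE = """
def check(x, y):
    if x > 0 and y > 0:
        return x
    for i in range(y):
        if i == x:
            return i
    return 0
"""

complexity = cyclomatic_complexity(SAMPLE)  # 5: base 1 + if + and + for + if
size = token_count(SAMPLE)
```

Scores like these are cheap to compute across a whole repository, which is why they work as a first-pass signal for where to spend review time rather than as a verdict on any single function.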

Increased code complexity directly impacts comprehensibility for developers, leading to a higher incidence of errors during both development and maintenance. This is because complex code requires greater cognitive load to understand the control flow, data dependencies, and potential side effects of any given operation. Consequently, developers are more likely to introduce bugs, overlook edge cases, or implement incorrect logic. Hidden flaws are also more prevalent in complex codebases as the intricate interactions between different code sections obscure potential vulnerabilities, making them difficult to detect through code review or testing. The difficulty in understanding complex code extends beyond the initial author, impacting future maintainers and increasing the long-term risk of security issues.

Analysis combining code complexity metrics – such as cyclomatic complexity and token count – with the detection of semantic perturbations significantly improves vulnerability identification. Semantic perturbations refer to minor alterations in code structure that may indicate underlying logical flaws. Our framework, utilizing this combined approach, achieved a 73.25% success rate in identifying code samples exhibiting logical closeness – meaning samples with similar intended functionality but potentially differing implementations – thereby demonstrating an enhanced capacity for reasoning about code behavior and pinpointing areas susceptible to exploitation. This suggests that evaluating code not only for inherent complexity but also for subtle structural changes is crucial for effective vulnerability assessment.

Towards a Proactive and Reasoned Vulnerability Posture

A robust vulnerability assessment necessitates moving beyond static code review to encompass runtime behavior and inherent code characteristics. Integrating dynamic analysis – observing software in operation – with complexity metrics, which quantify the intricacy of the codebase, and semantic analysis, which understands the code’s intended meaning, offers a significantly more comprehensive approach. This multifaceted technique allows security professionals to identify vulnerabilities that might remain hidden through traditional methods; dynamic analysis reveals flaws triggered by specific inputs or conditions, while complexity metrics highlight areas prone to errors, and semantic analysis confirms whether the code behaves as expected. By correlating these insights, assessments move beyond simply finding vulnerabilities to understanding their potential impact and prioritizing remediation efforts, ultimately strengthening the software’s security posture.

Effective vulnerability assessment increasingly relies on a dual understanding of software: its runtime behavior and its structural complexity. Security professionals can significantly refine their efforts by moving beyond static code analysis to incorporate dynamic analysis, which reveals how code actually functions under various conditions. Simultaneously, assessing inherent complexity – factors like code size, cyclomatic complexity, and data dependencies – highlights areas prone to errors and potential exploits. This combined approach enables prioritization; resources are focused on complex, actively-used code segments rather than being spread thinly across the entire system. Consequently, remediation becomes more efficient, reducing the attack surface and strengthening the overall security posture by addressing the most critical vulnerabilities first.
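One simple way to express this prioritization is to rank modules by a combined score. The sketch below is only an illustration: the `Module` fields, the example numbers, and the choice to multiply complexity by observed call count are all assumptions, not a method described in the source.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    cyclomatic: int  # structural complexity metric
    call_count: int  # observed runtime usage, e.g. from profiling


def review_order(modules):
    """Rank modules so complex, heavily exercised code is reviewed first."""
    # Assumed scoring rule: complexity multiplied by usage.
    return sorted(modules, key=lambda m: m.cyclomatic * m.call_count, reverse=True)


# Hypothetical measurements for three modules.
modules = [
    Module("config", cyclomatic=30, call_count=5),
    Module("parser", cyclomatic=25, call_count=1000),
    Module("logger", cyclomatic=3, call_count=5000),
]

ranked = [m.name for m in review_order(modules)]
```

With these made-up numbers the parser comes first, because it is both complex and heavily used, while the complex but rarely executed config module drops to the end of the queue.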

Shifting from reactive patching to proactive vulnerability assessment demonstrably enhances software security, minimizing opportunities for exploitation. Recent advancements, such as the DagVul framework, exemplify this progress through improved reasoning capabilities in vulnerability detection. Evaluations reveal an 18.9% increase in reasoning quality (reaching an F1-score of 70.97% when tested on the Qwen3-8B model) and a reasoning accuracy of 76.11%, performance that closely parallels the capabilities of the Claude-Sonnet-4.5 system. This heightened analytical precision allows security teams to anticipate and address weaknesses before they can be leveraged by malicious actors, ultimately strengthening the overall security posture and resilience of software systems.
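For readers unfamiliar with the metric, F1 is the harmonic mean of precision and recall. The sketch below shows the standard formula with invented counts; how the paper decides whether an individual reasoning step counts as correct is not described here, so the inputs are purely illustrative.

```python
def f1_score(true_pos, false_pos, false_neg):
    """Standard F1: harmonic mean of precision and recall."""
    predicted = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Invented counts: 8 correct findings, 2 spurious, 2 missed.
example = f1_score(true_pos=8, false_pos=2, false_neg=2)  # precision = recall = 0.8
```

Because it is a harmonic mean, F1 stays low unless precision and recall are both high, so a model cannot improve the score simply by flagging everything or by flagging almost nothing.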

The pursuit of demonstrable correctness in automated vulnerability detection, as highlighted in the article’s introduction of DagVul, aligns with a fundamental principle of computational elegance. Grace Hopper famously stated, “It’s easier to ask forgiveness than it is to get permission.” While seemingly a paradox, this resonates with the article’s focus on reasoning fidelity. DagVul doesn’t simply offer a probabilistic answer; it constructs a Directed Acyclic Graph, a provable representation of the program’s logic, offering a clear and justifiable path to identifying vulnerabilities. This emphasis on a logically sound, demonstrable approach, rather than a reliance on heuristics, is paramount to building trustworthy AI-assisted security auditing tools. The framework’s reliance on a provable graph is not about seeking permission to flag a vulnerability, but about offering irrefutable evidence of its existence.

Where Do We Go From Here?

The construction of DagVul represents a tentative step toward imbuing Large Language Models with something resembling verifiable reasoning. It is, however, crucial to acknowledge that correlation, even demonstrably improved performance on vulnerability detection benchmarks, does not equate to causation, nor does it constitute a proof of genuine understanding. The framework addresses the superficial appearance of reasoning (the output), but the underlying cognitive processes remain opaque. The question persists: is the model truly reasoning about vulnerabilities, or simply pattern-matching with greater sophistication?

Future work must move beyond empirical evaluation and embrace formal verification. The creation of a demonstrably correct algorithm, provably capable of identifying vulnerabilities under specific conditions, remains the gold standard. The current reliance on datasets, however extensive, introduces inherent biases and limits generalizability. A truly robust system would not simply detect vulnerabilities, but prove their existence based on first principles.

Ultimately, the field faces a fundamental challenge: can a system built on probabilistic approximations ever achieve the rigor demanded by security-critical applications? The pursuit of AI-assisted security auditing is not merely an engineering problem; it is a philosophical inquiry into the nature of intelligence and the limits of computation. Until that question is addressed, even the most elegant framework will remain, at best, a clever approximation.

Original article: https://arxiv.org/pdf/2602.06687.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-09 15:49