Author: Denis Avetisyan

New research introduces a framework for aligning artificial intelligence with real-world experience, enabling more robust planning and physical interaction.

WorldMind leverages predictive coding and a knowledge repository to improve the physical grounding of language-model-based agents through experiential learning.

Large language models excel at semantic reasoning yet often struggle to translate knowledge into physically plausible action, creating a disconnect between understanding and execution. This limitation motivates the work ‘Aligning Agentic World Models via Knowledgeable Experience Learning’, which introduces WorldMind, a framework enabling agents to build a symbolic World Knowledge Repository through the synthesis of environmental feedback. By unifying process and goal experiences to correct prediction errors and distill successful trajectories, WorldMind demonstrably improves physical grounding and planning capabilities. Could this approach unlock truly robust and adaptable agentic systems capable of navigating complex, real-world environments?

Breaking the Prediction Barrier

Despite their impressive capacity to generate human-quality text and perform complex tasks, current Large Language Model (LLM) agents frequently exhibit a surprising lack of grounding in real-world understanding. This deficiency manifests as brittle behavior – unexpected failures when faced with even slight deviations from their training data or novel situations. The agents excel at identifying patterns and predicting the next word or action, but struggle to build a cohesive “world model” – an internal representation of how things work, including cause and effect, physical constraints, and common sense. Consequently, these agents can generate plausible-sounding but ultimately nonsensical or impractical responses, demonstrating a performance gap between statistical proficiency and genuine intelligence. The limitations highlight the need for LLM agents to move beyond simple prediction and develop a deeper, more robust understanding of the world they are operating within.

True intelligence extends beyond the capacity to anticipate subsequent events; it requires a grasp of causal relationships. Current AI systems often predict what will happen from patterns observed in vast datasets, but falter when confronted with scenarios outside those patterns or when asked to explain why something occurred. An agent that merely predicts lacks the underlying model needed to adapt to novelty, reason counterfactually, or generalize its knowledge. Closing that gap means moving beyond correlation toward causation.

Constructing Reality: The WorldMind Framework

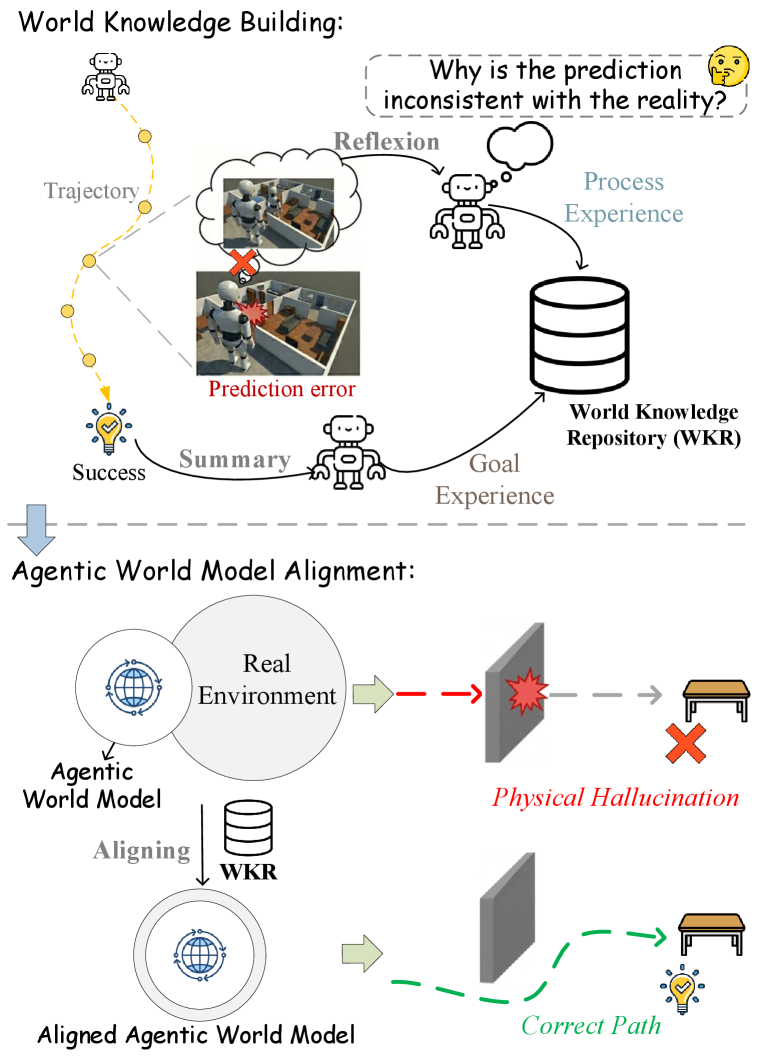

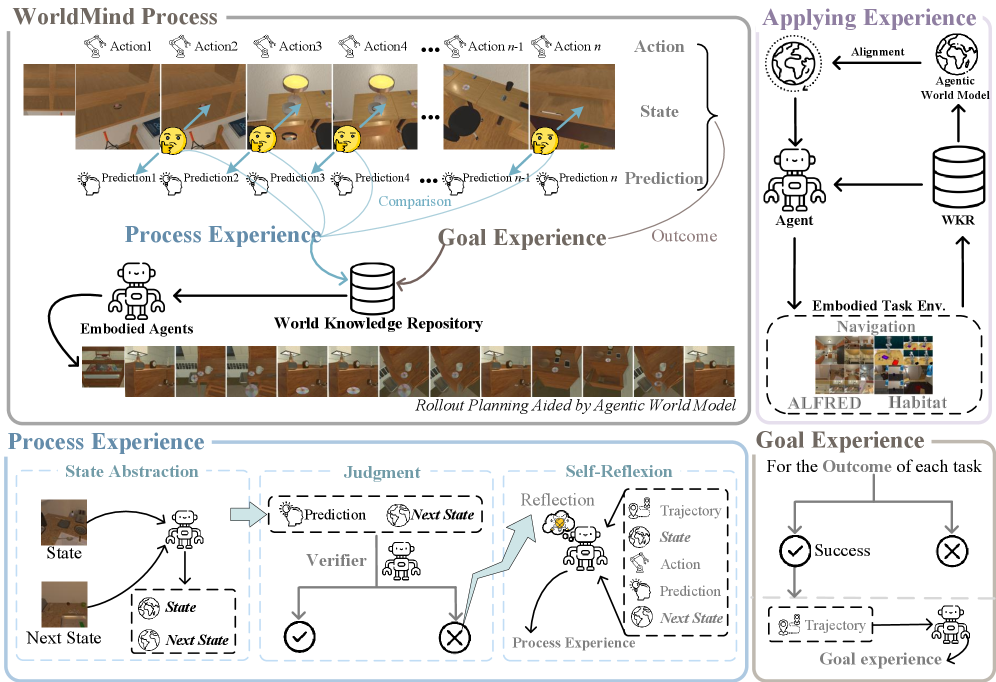

WorldMind establishes a framework for building agentic world models through experiential learning, prioritizing alignment with observed reality. This is achieved by constructing internal simulations that are both physically grounded – meaning they adhere to the laws of physics and spatial relationships – and semantically plausible, ensuring the simulated entities and events are logically consistent with established knowledge. The framework differs from traditional approaches by focusing on continuous refinement of these models through interaction with the environment, effectively learning by doing and iteratively reducing discrepancies between prediction and perception. This emphasis on experiential data allows agents to develop robust and accurate understandings of their surroundings, facilitating effective planning and decision-making in complex scenarios.

WorldMind’s operational principle centers on minimizing prediction error, a process directly informed by Predictive Coding theory. The system maintains an internal simulation of the environment and continuously compares its predictions of sensory input to actual perceived data. Discrepancies between predicted and received signals generate error signals which are then used to adjust the internal model’s parameters. This iterative refinement process, driven by the minimization of prediction error, allows WorldMind to improve the accuracy and fidelity of its simulated environment, enhancing its ability to anticipate and react to real-world stimuli. The magnitude of the error signal informs the degree to which the model is updated, prioritizing adjustments that resolve the most significant discrepancies between simulation and reality.
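The predict, compare, update cycle described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not WorldMind's implementation: the scalar state, the one-parameter model `gain`, the learning rate, and the function name are all hypothetical, and since the source describes a symbolic repository, the numeric update should be read as an analogy only.

```python
def fit_by_prediction_error(observations, lr=0.1, epochs=200):
    """Fit a one-parameter model `next = gain * current` from prediction errors.

    Hypothetical toy model: `gain` stands in for whatever the internal
    simulation adjusts when its predictions disagree with observations.
    """
    gain = 0.0
    for _ in range(epochs):
        for current, actual_next in observations:
            predicted_next = gain * current
            error = actual_next - predicted_next  # prediction error
            gain += lr * error * current          # larger error -> larger update
    return gain


# Toy environment: the true dynamics double the state at each step.
observations = [(1.0, 2.0), (2.0, 4.0), (0.5, 1.0)]
learned_gain = fit_by_prediction_error(observations)
print(round(learned_gain, 3))
```

The update is proportional to the error, which mirrors the idea that the largest mismatches between simulation and observation drive the largest corrections.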

The World Knowledge Repository functions as a persistent, structured database within the WorldMind framework, designed to accumulate and organize information derived from the agent’s interactions with its environment. This repository doesn’t simply store raw sensory data; instead, it abstracts experiences into reusable, semantic representations of objects, relationships, and physical laws. Data is stored as probabilistic assertions, allowing the system to handle uncertainty and incomplete information. The repository utilizes a graph-based structure to facilitate efficient retrieval and reasoning, enabling the agent to leverage past experiences to inform current predictions and actions. Updates to the repository occur continuously through a process of inference and consolidation, with new information either confirming existing knowledge or triggering refinement of existing assertions based on prediction error minimization.
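A graph-shaped store of probabilistic assertions could look roughly like the sketch below. Everything here is an assumption made for illustration: the class names, the subject/relation/object triple layout, and the smoothed confidence estimate are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Assertion:
    subject: str
    relation: str
    obj: str
    confirmations: int = 0   # observations that agreed with the assertion
    contradictions: int = 0  # observations that disagreed

    @property
    def confidence(self) -> float:
        # Laplace-smoothed agreement rate (an assumed scoring rule).
        total = self.confirmations + self.contradictions
        return (self.confirmations + 1) / (total + 2)


class KnowledgeRepository:
    """Hypothetical adjacency-map graph: subject -> {(relation, obj): Assertion}."""

    def __init__(self):
        self._edges = {}

    def observe(self, subject, relation, obj, holds=True):
        edges = self._edges.setdefault(subject, {})
        edge = edges.setdefault((relation, obj), Assertion(subject, relation, obj))
        if holds:
            edge.confirmations += 1
        else:
            edge.contradictions += 1
        return edge

    def query(self, subject, min_confidence=0.5):
        edges = self._edges.get(subject, {})
        return [a for a in edges.values() if a.confidence >= min_confidence]


repo = KnowledgeRepository()
repo.observe("mug", "can_be_placed_on", "table")
repo.observe("mug", "can_be_placed_on", "table")
repo.observe("mug", "fits_inside", "drawer", holds=False)
trusted = repo.query("mug")
```

New observations either reinforce an existing edge or lower its confidence, which is one simple way to model the confirm-or-refine behavior described above.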

Learning Through Action and Outcome

Process Experience within the WorldMind architecture is generated through the analysis of prediction errors occurring during simulated actions. These errors, representing discrepancies between predicted and actual outcomes, are used to refine the simulation’s internal model of physics and dynamics. Specifically, the system adjusts parameters and constraints to minimize future prediction errors, thereby enforcing physical feasibility. This iterative refinement ensures that simulated trajectories adhere to established real-world laws, preventing actions that are physically impossible, such as objects passing through each other or violating conservation of momentum. The resulting constraint system effectively bounds the solution space, limiting exploration to physically plausible states and actions.
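As a rough sketch of how prediction errors might become feasibility constraints, the Python below records a rule whenever the predicted outcome of an action differs from what was observed. The step format, the rule dictionary, and both function names are hypothetical; a real system would presumably abstract the state into a more general rule than this literal copy.

```python
def extract_process_experience(steps):
    """Turn prediction errors into feasibility rules.

    Each step is (state, action, predicted_outcome, observed_outcome).
    A mismatch is treated as evidence that `action` fails in `state`.
    """
    rules = []
    for state, action, predicted, observed in steps:
        if predicted != observed:  # prediction error
            rule = {"action": action, "blocked_when": dict(state)}
            if rule not in rules:
                rules.append(rule)
    return rules


def is_feasible(state, action, rules):
    """An action is infeasible if any rule's blocking conditions all hold."""
    for rule in rules:
        if rule["action"] != action:
            continue
        if all(state.get(k) == v for k, v in rule["blocked_when"].items()):
            return False
    return True


steps = [
    ({"drawer": "closed"}, "take mug from drawer", "holding mug", "nothing happens"),
    ({"drawer": "open"}, "take mug from drawer", "holding mug", "holding mug"),
]
rules = extract_process_experience(steps)
```

Here only the first step produces a rule, so taking the mug is rejected while the drawer is closed and allowed once it is open.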

Goal Experience within the WorldMind architecture is generated by analyzing completed, successful task trajectories. This analysis doesn’t simply record the final outcome, but rather extracts the sequence of actions that reliably led to success. These sequences are then distilled into procedural heuristics – essentially, learned ‘rules of thumb’ – which are used to bias subsequent simulations. By prioritizing action paths similar to those observed in successful trajectories, the system increases the probability of finding effective solutions to new, related tasks. This approach allows WorldMind to leverage past successes as a form of inductive reasoning, effectively transferring knowledge and improving planning efficiency without requiring explicit programming of task-specific strategies.
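One simple way to picture the distillation step is to count which action tends to follow which in successful runs, then use those counts to rank candidates. The sketch below assumes that reading; the transition-count heuristic, the `<start>` marker, and the function names are hypothetical stand-ins, not the method reported in the paper.

```python
from collections import Counter, defaultdict


def distill_goal_experience(trajectories):
    """Count action-to-action transitions in successful trajectories only.

    Each trajectory is (list_of_actions, succeeded).
    """
    transitions = defaultdict(Counter)
    for actions, succeeded in trajectories:
        if not succeeded:
            continue
        # Pair each action with its predecessor; "<start>" precedes the first.
        for prev, nxt in zip(["<start>"] + actions, actions):
            transitions[prev][nxt] += 1
    return transitions


def rank_actions(prev_action, candidates, transitions):
    """Order candidates by how often they followed prev_action in successes."""
    counts = transitions.get(prev_action, Counter())
    return sorted(candidates, key=lambda a: -counts[a])


trajectories = [
    (["open fridge", "take milk", "close fridge"], True),
    (["open fridge", "take milk", "pour milk"], True),
    (["take milk", "open fridge"], False),
]
transitions = distill_goal_experience(trajectories)
ranking = rank_actions("open fridge", ["close fridge", "take milk"], transitions)
```

The failed trajectory contributes nothing, so after opening the fridge the ranking favors taking the milk over closing the fridge again.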

Constrained Simulation leverages both Process and Goal Experience to enhance the reliability of planning by actively preventing the execution of actions deemed physically impossible or semantically implausible. Process Experience, gained from identifying and correcting prediction errors, establishes boundaries based on the laws of physics and real-world constraints. Simultaneously, Goal Experience, derived from successful task completions, provides procedural knowledge that guides the simulation toward feasible solutions. This dual-constraint approach effectively narrows the search space, reducing the likelihood of generating plans that are either physically unrealistic or logically inconsistent, ultimately leading to more robust and dependable outcomes.
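The dual-constraint idea can be illustrated as a two-stage selection: first discard actions that violate learned feasibility rules, then prefer whichever remaining action successful experience favors. The sketch is a simplification under assumptions; the single-precondition format, the score table, and the function name are hypothetical.

```python
def constrained_choice(state, candidates, preconditions, goal_scores):
    """Pick an action using both kinds of experience.

    preconditions: {action: (state_key, required_value)}, standing in for
                   rules learned from prediction errors (process experience).
    goal_scores:   {action: score}, standing in for heuristics distilled
                   from successful trajectories (goal experience).
    """
    feasible = []
    for action in candidates:
        requirement = preconditions.get(action)
        if requirement is None or state.get(requirement[0]) == requirement[1]:
            feasible.append(action)
    if not feasible:
        return None  # nothing physically plausible to try
    return max(feasible, key=lambda a: goal_scores.get(a, 0.0))


candidates = ["take milk", "open fridge", "wander"]
preconditions = {"take milk": ("fridge", "open")}
goal_scores = {"take milk": 0.9, "open fridge": 0.6}

first = constrained_choice({"fridge": "closed"}, candidates, preconditions, goal_scores)
second = constrained_choice({"fridge": "open"}, candidates, preconditions, goal_scores)
```

With the fridge closed, the highest-scoring action is filtered out as infeasible and the agent opens the fridge instead; once it is open, taking the milk wins.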

WorldMind’s robustness is fundamentally achieved through a bidirectional learning process that integrates feedback from both unsuccessful and successful simulation trajectories. Analysis of prediction errors – representing failures to adhere to physical laws or established constraints – provides ‘Process Experience’, which refines the simulation’s adherence to reality. Concurrently, distillation of successful trajectories yields ‘Goal Experience’, offering procedural guidance towards task completion. This cyclical process of learning from both failure and success allows WorldMind to iteratively improve its planning capabilities, avoiding physically implausible actions and increasing the likelihood of achieving desired outcomes in complex environments.

Beyond Prediction: Towards True Agency

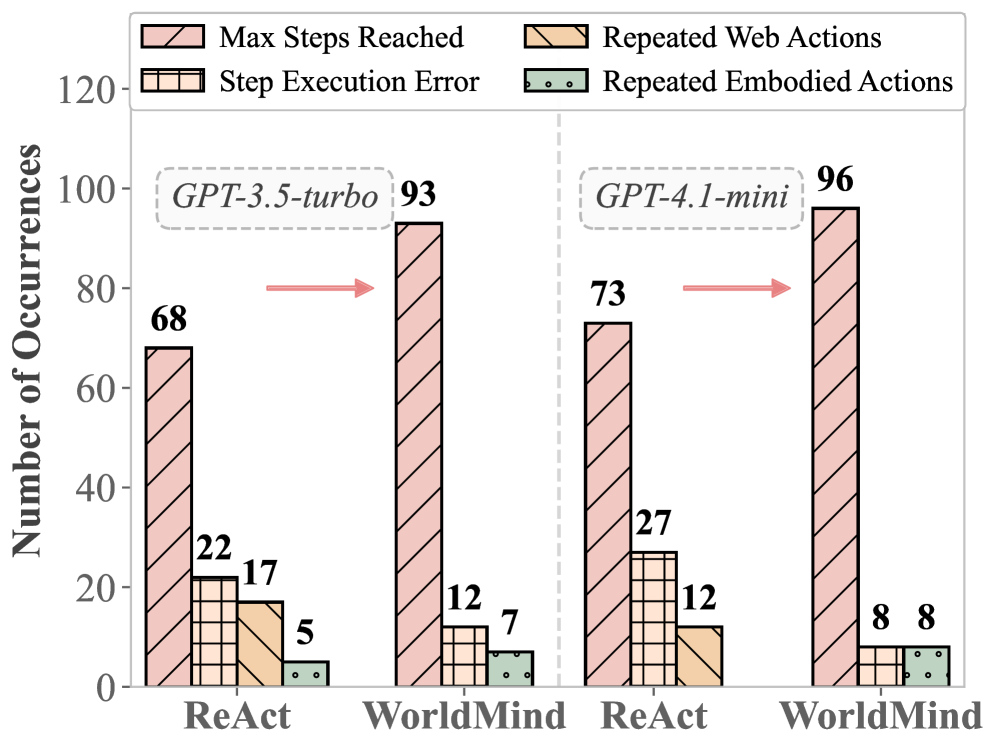

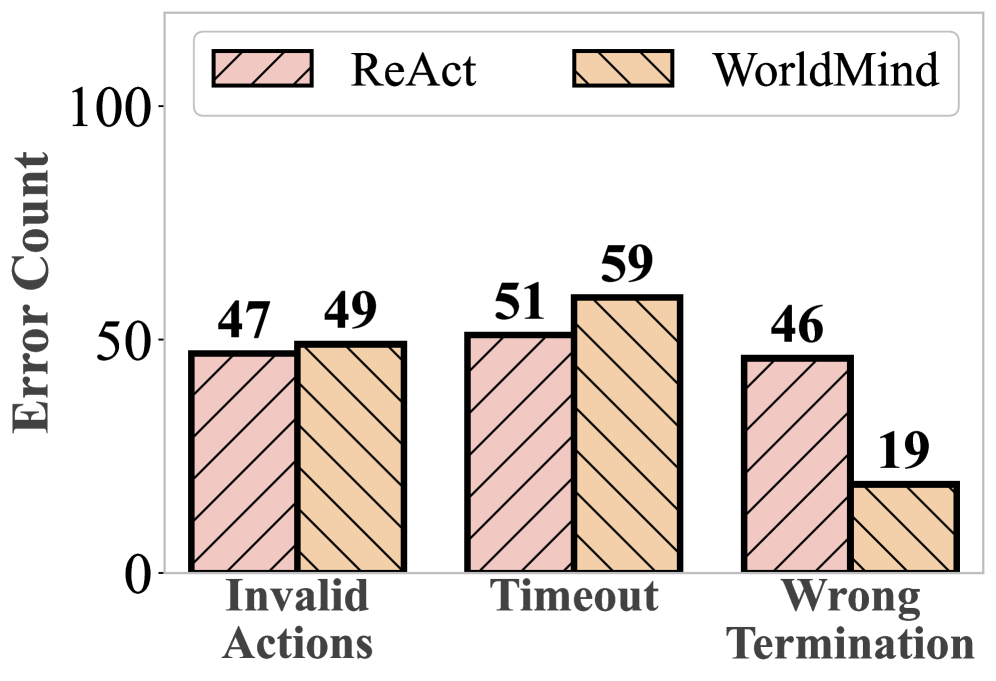

Evaluations of WorldMind across demanding embodied AI environments – including the simulated homes of EB-Habitat and the interactive tasks of EB-ALFRED – reveal substantial gains in performance. Notably, the system achieved a 48.0% success rate on EB-ALFRED, marking a 3.6% improvement compared to existing baseline models. This indicates an enhanced capacity for completing complex, real-world-inspired objectives within simulated spaces, suggesting the framework’s potential for robust and adaptable performance in embodied artificial intelligence applications. The demonstrated advancements position WorldMind as a promising step toward creating AI agents capable of navigating and interacting with environments more effectively.

Evaluations utilizing the EB-ALFRED benchmark reveal a substantial advancement in goal-oriented task completion with the framework achieving a 63.0% Goal-Conditioned Success rate. This represents a significant leap forward when contrasted with the 50.4% attained by the baseline model, demonstrating an improved capacity to not only understand task objectives but also to consistently execute the necessary steps for successful completion. The enhanced performance suggests a more robust internal representation of task goals, allowing the system to navigate complex scenarios and adapt its actions accordingly, ultimately leading to a markedly higher rate of achieving designated objectives within the simulated environment.
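To put the two Goal-Conditioned Success figures side by side, the short calculation below separates the absolute gap (in percentage points) from the relative gain. Only the two rates come from the text above; the helper name is hypothetical.

```python
def compare_rates(new_pct, baseline_pct):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new_pct - baseline_pct
    relative = 100.0 * absolute / baseline_pct
    return round(absolute, 1), round(relative, 1)


# Figures quoted above: 63.0% for WorldMind versus 50.4% for the baseline.
points, relative = compare_rates(63.0, 50.4)
print(points, relative)  # 12.6 25.0
```

In other words, the reported gap is 12.6 percentage points, which corresponds to a 25% relative increase over the baseline.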

Evaluations within the EB-Habitat simulation environment reveal that WorldMind consistently surpasses the established ReAct baseline, with a 9.2% increase in overall Success Rate. The improvement extends to Goal-Conditioned Success, where WorldMind also leads by a substantial margin, indicating a heightened ability not only to navigate environments but also to pursue and complete specific objectives. These results suggest that the framework’s approach to environmental understanding and task execution provides a significant advantage in complex, simulated scenarios, paving the way for more robust and adaptable embodied AI systems.

The WorldMind framework exhibits a remarkable capacity for knowledge generalization, enabling effective cross-model transfer. This means insights and learned behaviors developed within one simulated environment can be successfully applied to entirely new and unseen environments, dramatically accelerating the learning process. Rather than requiring extensive retraining from scratch in each novel setting, the system leverages previously acquired understanding, allowing it to adapt and perform effectively with significantly less data and computational effort. This ability to transfer knowledge is a crucial step towards building adaptable and robust AI systems capable of navigating real-world complexity, where environments are constantly changing and unpredictable.

The development of robust world models represents a pivotal advancement in artificial intelligence, moving beyond simple reaction to stimuli towards genuine understanding and foresight. These models, built and continually refined through interaction with an environment, allow systems to anticipate the consequences of actions, plan complex sequences, and reason about abstract goals. Unlike systems reliant on immediate sensory input, an AI equipped with an accurate world model can internally simulate scenarios, evaluate potential outcomes, and select the most effective course of action – a capacity crucial for tackling intricate, real-world challenges. This ability to construct and manipulate internal representations of the external world isn’t merely about predicting what will happen, but about understanding why, fostering adaptability and enabling AI to navigate unforeseen circumstances with increasing autonomy and efficiency.

The pursuit within this research mirrors a fundamental tenet of intellectual exploration: understanding through deconstruction and reconstruction. WorldMind, with its iterative process of prediction error correction and successful trajectory distillation, embodies this principle. It doesn’t simply accept a pre-defined reality; it actively tests it, seeking discrepancies between expectation and observation. As Ada Lovelace noted, “The Analytical Engine has no pretensions whatever to originate anything.” This framework doesn’t create knowledge ex nihilo, but refines it through experience – aligning agentic world models not by invention, but by rigorous testing against physical reality and building a World Knowledge Repository as a result. The system’s strength lies not in its initial assumptions, but in its capacity to learn from – and correct – its own mistakes.

What Breaks Down From Here?

The construction of a “World Knowledge Repository” – a neatly organized accumulation of predicted and corrected experience – feels, predictably, like a bid for complete control. But what happens when the corrections themselves become suspect? The framework rightly addresses physical grounding, but grounding isn’t about perfectly matching prediction to sensation; it’s about useful mismatch. A slight error in trajectory, a miscalculation of force – these aren’t bugs, they’re features of adaptation. The next iteration must deliberately introduce controlled inconsistencies, exploring the boundary between reliable knowledge and generative novelty.

Currently, successful trajectory distillation rewards efficiency. But efficiency, divorced from curiosity, is a local optimum. What if the system were rewarded for unexpected successes? For finding solutions that defy initial predictions, even if those solutions are needlessly complex? Such a shift would demand a re-evaluation of the “error” signal itself – transforming it from a corrective force into an exploratory beacon.

Ultimately, WorldMind, or its successors, will be judged not by its ability to predict, but by its capacity to be surprised. The true test isn’t building a perfect map of the world, but creating an agent that actively seeks out the edges of its own understanding, and cheerfully dismantles its assumptions when confronted with the genuinely new.

Original article: https://arxiv.org/pdf/2601.13247.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-21 08:18