Author: Denis Avetisyan

Researchers have developed a novel framework that combines geometric modeling with in-context learning to achieve more accurate and adaptable predictions for complex time series data.

Laplacian In-context Spectral Analysis (LISA) leverages nonparametric Gaussian process latent models and diffusion maps for improved forecasting in both stationary and nonstationary time series.

Adapting time-series models to evolving dynamics remains a persistent challenge in forecasting and dynamical systems analysis. This paper introduces ‘LISA: Laplacian In-context Spectral Analysis’, a novel framework that leverages nonparametric geometric representations and in-context learning to address this limitation. LISA constructs diffusion-coordinate state representations via Laplacian spectral learning, coupled with lightweight latent-space adapters for inference-time adaptation. By linking in-context learning to spectral methods, can we unlock more robust and adaptable time-series models capable of handling nonstationary data with minimal retraining?

The Inherent Instability of Dynamic Systems

Conventional time-series forecasting techniques, frequently built upon autoregressive models, encounter significant limitations when applied to chaotic systems characterized by nonstationary behavior. These methods operate under the assumption of relative stability – that past patterns will reasonably predict future values – an assumption quickly invalidated by the sensitive dependence on initial conditions inherent in chaotic dynamics. Consequently, predictions rapidly diverge from actual trajectories, rendering long-term forecasting unreliable. The core of the problem lies in the inability of these models to effectively capture and adapt to continually shifting statistical properties, such as changing means or variances, that define nonstationary processes. While effective for stable systems, their fixed parameter structures prove inadequate for tracking the evolving complexities of chaotic phenomena, leading to systematic errors and a loss of predictive power as the system drifts from its initial state.

Traditional forecasting techniques often falter when applied to complex dynamical systems because they overlook the inherent geometric organization within the data. Systems like the Lorenz and Rössler attractors, while appearing chaotic, are not random; rather, their trajectories unfold on low-dimensional manifolds embedded within a higher-dimensional state space. These manifolds represent the system’s ‘habits’ – the constrained regions where its evolution is most likely to occur. Standard methods, focused on statistical correlations rather than geometric relationships, struggle to identify and track these crucial structures. Consequently, their predictions rapidly degrade as the system explores new regions of the attractor, unable to leverage the underlying order governing its behavior. Capturing this manifold structure is therefore paramount for achieving accurate and robust forecasting in truly complex systems.
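The two properties described above, sensitive dependence on initial conditions and confinement to a bounded attractor, can be seen in a few lines. The following is a minimal sketch, assuming the standard Lorenz parameters (σ = 10, ρ = 28, β = 8/3) and a hand-written fourth-order Runge-Kutta integrator. The function names, step size, and perturbation size are illustrative choices and are not taken from the paper.

```python
import numpy as np

def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations (standard parameter values assumed)."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])

def rk4_trajectory(rhs, x0, dt, n_steps):
    """Integrate dx/dt = rhs(x) with classical fourth-order Runge-Kutta steps."""
    traj = np.empty((n_steps + 1, len(x0)))
    traj[0] = x0
    for k in range(n_steps):
        x = traj[k]
        k1 = rhs(x)
        k2 = rhs(x + 0.5 * dt * k1)
        k3 = rhs(x + 0.5 * dt * k2)
        k4 = rhs(x + dt * k3)
        traj[k + 1] = x + (dt / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return traj

# Two initial conditions that differ by 1e-8 in the first coordinate.
x0 = np.array([1.0, 1.0, 1.0])
traj_a = rk4_trajectory(lorenz_rhs, x0, dt=0.01, n_steps=4000)
traj_b = rk4_trajectory(lorenz_rhs, x0 + np.array([1e-8, 0.0, 0.0]), dt=0.01, n_steps=4000)

# Distance between the two trajectories at every time step.
separation = np.linalg.norm(traj_a - traj_b, axis=1)
print("initial separation:", separation[0])
print("largest separation:", separation.max())
print("largest coordinate magnitude:", np.abs(traj_a).max())
```

The separation grows by many orders of magnitude while the coordinates themselves stay bounded, which is why pointwise forecasts degrade even though the geometry of the attractor remains a stable target for learning.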

A fundamental limitation of conventional time-series analysis arises from its inflexibility when confronted with evolving systems; models trained on past data often falter as the underlying dynamics shift. The problem isn’t merely a matter of inaccurate initial parameters, but a deeper challenge in computational efficiency. Traditional approaches demand complete retraining – or at least substantial parameter adjustments – each time the system’s behavior deviates, a process that is both time-consuming and computationally expensive. This requirement for frequent, large-scale updates becomes particularly problematic in real-time applications or when dealing with exceptionally long time horizons where continuous adaptation is paramount. Consequently, the inability to learn and adjust incrementally – to track subtle changes without discarding previously acquired knowledge – severely restricts the predictive power of these methods in truly nonstationary environments.

The limitations of conventional forecasting techniques in nonstationary systems demand a shift toward adaptive methodologies. Current approaches often require substantial computational resources for recalibration whenever dynamics evolve, which is impractical for real-time applications or systems with rapid shifts. A new paradigm prioritizes lightweight adaptation – the ability to continuously refine predictive models with minimal parameter updates and computational overhead. This calls for algorithms that can identify and track changes in underlying system behavior without complete retraining. Robust performance of this kind, even amid unpredictable change, promises not only improved forecasting accuracy but also predictive capabilities in a wide range of complex systems, from climate modeling to financial markets. The focus is shifting from static models to continually learning systems that maintain accuracy despite the inherent dynamism of the world.

Unveiling Hidden Geometry: Nonlinear Spectral Analysis

Nonlinear Laplacian Spectral Analysis addresses the challenge of extracting meaningful structure from high-dimensional time-series data by identifying the underlying low-dimensional manifold on which the data resides. This technique assumes that complex, high-dimensional observations are often generated by dynamics occurring on a lower-dimensional, potentially nonlinear, space. The method leverages the Laplacian operator, a measure of local connectivity, to define a diffusion process on the data. Analyzing the spectrum of the Laplacian allows for the identification of the intrinsic dimensionality and the principal modes of variation within the data, effectively reducing dimensionality while preserving essential geometric relationships. This approach differs from linear dimensionality reduction techniques, such as Principal Component Analysis, by being able to uncover and represent curved or nonlinearly embedded manifolds that would be poorly approximated by linear subspaces.

Diffusion Maps establish a low-dimensional coordinate system by modeling data as a diffusion process on a graph constructed from nearest neighbors. This technique calculates a Markov transition matrix P representing the probability of transitioning between data points, and then computes the eigenvectors and eigenvalues of P. The eigenvectors corresponding to the largest nontrivial eigenvalues define the embedding coordinates; the leading eigenvector is constant, with eigenvalue 1, and carries no geometric information. These coordinates capture the data’s intrinsic geometry and the relationships between points regardless of ambient space distortions. Compared with methods that rely on global Euclidean distance or linear assumptions, this diffusion-based embedding supports more faithful data representation, dimensionality reduction, and predictive modeling.
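The construction can be sketched as follows. This is a minimal illustration, assuming a dense Gaussian kernel rather than a sparse nearest-neighbor graph, and a noisy circle as toy data. The function name `diffusion_map`, the bandwidth `epsilon`, and the sample size are hypothetical choices, not the paper's settings.

```python
import numpy as np

def diffusion_map(X, epsilon, n_coords=2):
    """Basic diffusion-map embedding from a dense Gaussian kernel (illustrative sketch)."""
    # Pairwise squared Euclidean distances and Gaussian affinities.
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-sq_dists / epsilon)
    d = K.sum(axis=1)

    # Row-stochastic Markov transition matrix P = D^{-1} K.
    P = K / d[:, None]

    # P is similar to the symmetric matrix S = D^{-1/2} K D^{-1/2},
    # so a symmetric eigensolver can be used for numerical stability.
    S = K / np.sqrt(np.outer(d, d))
    evals, evecs = np.linalg.eigh(S)
    order = np.argsort(evals)[::-1]
    evals, evecs = evals[order], evecs[:, order]

    # Right eigenvectors of P are D^{-1/2} times the eigenvectors of S.
    psi = evecs / np.sqrt(d)[:, None]

    # Skip the trivial constant eigenvector (eigenvalue 1) and scale by eigenvalue.
    coords = psi[:, 1:n_coords + 1] * evals[1:n_coords + 1]
    return P, evals, coords

# Toy data: noisy samples from a circle, an intrinsically one-dimensional manifold.
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=200)
X = np.column_stack([np.cos(theta), np.sin(theta)]) + 0.01 * rng.normal(size=(200, 2))

P, evals, coords = diffusion_map(X, epsilon=0.1, n_coords=2)
print("leading eigenvalues:", evals[:4])
print("embedding shape:", coords.shape)
```

Working with the symmetric matrix S instead of P directly is a common design choice: it guarantees real eigenvalues and lets the sketch rely on `numpy.linalg.eigh`.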

The Coifman-Lafon construction addresses challenges in manifold learning related to data density variations. Traditional methods can be biased by uneven sampling, leading to unstable mappings. This construction employs a density-corrected softmax operator to weight the influence of each neighboring point during the embedding process. Specifically, the transition probabilities between data points are calculated using a kernel function, and then normalized by a local density estimate – the sum of all kernel weights for a given point. This density correction effectively mitigates the impact of crowded regions and ensures that points in sparser areas contribute proportionally to the overall manifold structure, resulting in a more robust and stable embedding. The resulting transition matrix is then used in an eigendecomposition to determine the intrinsic coordinates of the data on the manifold.
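A hedged sketch of the density correction described above: kernel weights are divided by a power α of the density estimates at both endpoints, and the result is row-normalized. With α = 0 the operator reduces to plain row normalization, which is a softmax over negative scaled squared distances. The helper names, the non-uniform toy sampling, and the choice α = 1 are assumptions made for illustration.

```python
import numpy as np

def gaussian_kernel(X, epsilon):
    """Dense Gaussian affinity matrix exp(-||x_i - x_j||^2 / epsilon)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / epsilon)

def density_corrected_markov(K, alpha=1.0):
    """Row-stochastic matrix built from a density-normalized kernel (illustrative sketch)."""
    # Local density estimate: the sum of kernel weights for each point.
    q = K.sum(axis=1)
    # Divide each weight by the density at both endpoints, raised to alpha.
    K_alpha = K / np.outer(q, q) ** alpha
    # Row-normalize so that each row is a probability distribution.
    return K_alpha / K_alpha.sum(axis=1, keepdims=True)

# Toy data: a circle sampled densely near angle 0 and sparsely elsewhere.
rng = np.random.default_rng(0)
theta = np.concatenate([rng.normal(0.0, 0.5, size=150),
                        rng.uniform(0.0, 2.0 * np.pi, size=50)])
X = np.column_stack([np.cos(theta), np.sin(theta)])

K = gaussian_kernel(X, epsilon=0.1)
P_plain = density_corrected_markov(K, alpha=0.0)      # ordinary softmax-style normalization
P_corrected = density_corrected_markov(K, alpha=1.0)  # sampling density divided out

q = K.sum(axis=1)
print("density estimate range:", q.min(), q.max())
print("largest change in transition probability:", np.abs(P_plain - P_corrected).max())
```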

Traditional time-series analysis often relies on linear models which assume relationships between variables can be adequately represented by straight lines or planes. However, many real-world dynamical systems exhibit nonlinear behavior, characterized by complex interactions and feedback loops that deviate from these linear assumptions. Nonlinear Laplacian Spectral Analysis overcomes the limitations of linear approximations by employing techniques like Diffusion Maps to identify and model the intrinsic, potentially nonlinear, geometry of the data. This allows for the representation and analysis of intricate dynamics – such as those found in chaotic systems or high-dimensional datasets – that were previously obscured or inaccurately captured by methods constrained to linear representations. The ability to model these previously inaccessible dynamics improves predictive accuracy and enables a more comprehensive understanding of complex system behavior.

Adaptation Without Parameters: In-Context Learning

The Laplacian In-context Spectral Analysis framework builds upon spectral representations of data by incorporating an In-Context Mechanism designed for rapid adaptation to non-stationary dynamics. This mechanism operates by analyzing the spectral characteristics of recent data to adjust predictive modeling without modifying the underlying model parameters. Specifically, it leverages the spectral representation to identify changes in the data’s underlying distribution and recalibrates predictions accordingly. This approach facilitates lightweight adaptation because it avoids the computational expense of full parameter updates, enabling the model to respond efficiently to evolving data patterns. The framework’s ability to adapt ‘in-context’ is achieved through this spectral analysis of recent history, allowing for effective performance in dynamic environments without the need for retraining.

The In-Context Mechanism employs a Markov Operator to dynamically adjust predictions based on the most recent data sequence, achieving adaptation without modifying model parameters. This is accomplished by defining a transition probability that weights recent observations more heavily than those further in the past, effectively creating a context-dependent prediction. The Markov Operator allows the model to shift its predictive distribution based solely on the input history, functioning as a non-parametric update rule. Consequently, the model’s behavior is determined by the input context, rather than learned weights, which is characteristic of in-context learning approaches and avoids the need for traditional gradient-based optimization or retraining.
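To make the idea of a parameter-free, context-driven update concrete, here is a minimal sketch. It substitutes a simple kernel-weighted (Nadaraya-Watson style) predictor for the paper's Markov operator, whose exact form is not given here. The function names, the bandwidth, and the optional `recency_decay` term are all assumptions. The same fixed function forecasts two different dynamics purely because the context it receives differs.

```python
import numpy as np

def in_context_predict(query, ctx_states, ctx_next, bandwidth, recency_decay=0.0):
    """Predict the successor of `query` as a weighted average of context successors.

    Weights form one row of a Markov (row-stochastic) operator: a softmax over
    negative scaled squared distances, optionally penalizing older context pairs.
    """
    sq_dists = np.sum((ctx_states - query) ** 2, axis=1)
    ages = np.arange(len(ctx_states))[::-1]  # the most recent pair has age 0
    logits = -sq_dists / bandwidth - recency_decay * ages
    logits = logits - logits.max()           # subtract the max for numerical stability
    weights = np.exp(logits)
    weights /= weights.sum()
    return weights @ ctx_next, weights

def rotation_context(angle_step, n_pairs=20):
    """Context of (state, next state) pairs from a rotation on the unit circle."""
    angles = angle_step * np.arange(n_pairs + 1)
    states = np.column_stack([np.cos(angles), np.sin(angles)])
    return states[:-1], states[1:]

# Two regimes with different rotation speeds; no parameters are fitted for either.
slow_states, slow_next = rotation_context(0.10)
fast_states, fast_next = rotation_context(0.30)

query = np.array([1.0, 0.0])
pred_slow, w_slow = in_context_predict(query, slow_states, slow_next, bandwidth=1e-3)
pred_fast, w_fast = in_context_predict(query, fast_states, fast_next, bandwidth=1e-3)
print("prediction under slow context:", pred_slow)
print("prediction under fast context:", pred_fast)
```

Setting `recency_decay` above zero shifts weight toward the newest context pairs, one simple way to favor recent observations when the dynamics drift.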

The Gaussian Process Latent Model functions as a decoder within the framework by utilizing Kernel Ridge Regression to map coordinates from a latent diffusion space back to the original ambient space. This mapping enables reconstruction of observed data and, crucially, forecasting of future states. Kernel Ridge Regression is a computationally convenient way to obtain the Gaussian Process point prediction: with a matching kernel and noise level, its solution coincides with the Gaussian Process posterior mean. The model learns a mapping function that transforms latent representations – derived from the diffusion process – into the observed data domain, facilitating both data recovery and predictive capabilities without requiring explicit parameter updates.
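A minimal sketch of such a decoder, assuming a Gaussian (RBF) kernel and a toy setup in which two latent coordinates on a circle are mapped to a three-dimensional curve. The function names, length scale, ridge value, and data are illustrative assumptions rather than the paper's configuration.

```python
import numpy as np

def rbf_kernel(A, B, lengthscale):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq_dists = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * lengthscale ** 2))

def fit_krr_decoder(Z_train, X_train, lengthscale=0.5, ridge=1e-6):
    """Fit kernel ridge regression from latent coordinates Z to ambient observations X."""
    K = rbf_kernel(Z_train, Z_train, lengthscale)
    # Solve (K + ridge * I) coef = X; this matches the GP posterior-mean formula
    # when `ridge` plays the role of the observation-noise variance.
    coef = np.linalg.solve(K + ridge * np.eye(len(Z_train)), X_train)

    def decode(Z_new):
        return rbf_kernel(Z_new, Z_train, lengthscale) @ coef

    return decode

# Toy latent coordinates on a circle and a 3-D ambient curve that depends on them.
theta_train = np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False)
Z_train = np.column_stack([np.cos(theta_train), np.sin(theta_train)])
X_train = np.column_stack([np.cos(theta_train), np.sin(theta_train), np.cos(2.0 * theta_train)])

decode = fit_krr_decoder(Z_train, X_train)

# Decode latent points that were not part of the training set.
theta_test = theta_train + 0.03
Z_test = np.column_stack([np.cos(theta_test), np.sin(theta_test)])
X_true = np.column_stack([np.cos(theta_test), np.sin(theta_test), np.cos(2.0 * theta_test)])
X_hat = decode(Z_test)
max_err = np.max(np.abs(X_hat - X_true))
print("max decoding error on held-out latent points:", max_err)
```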

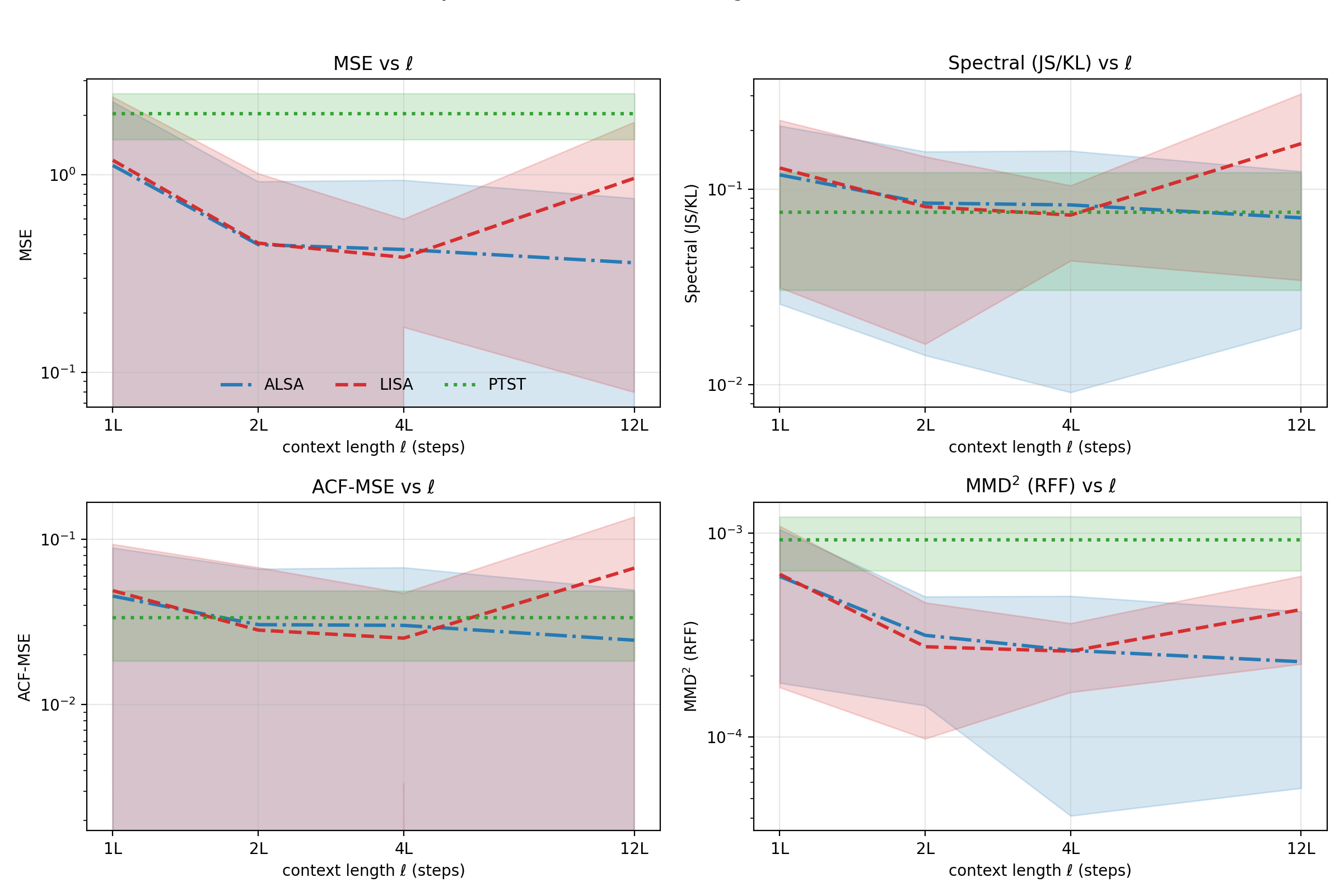

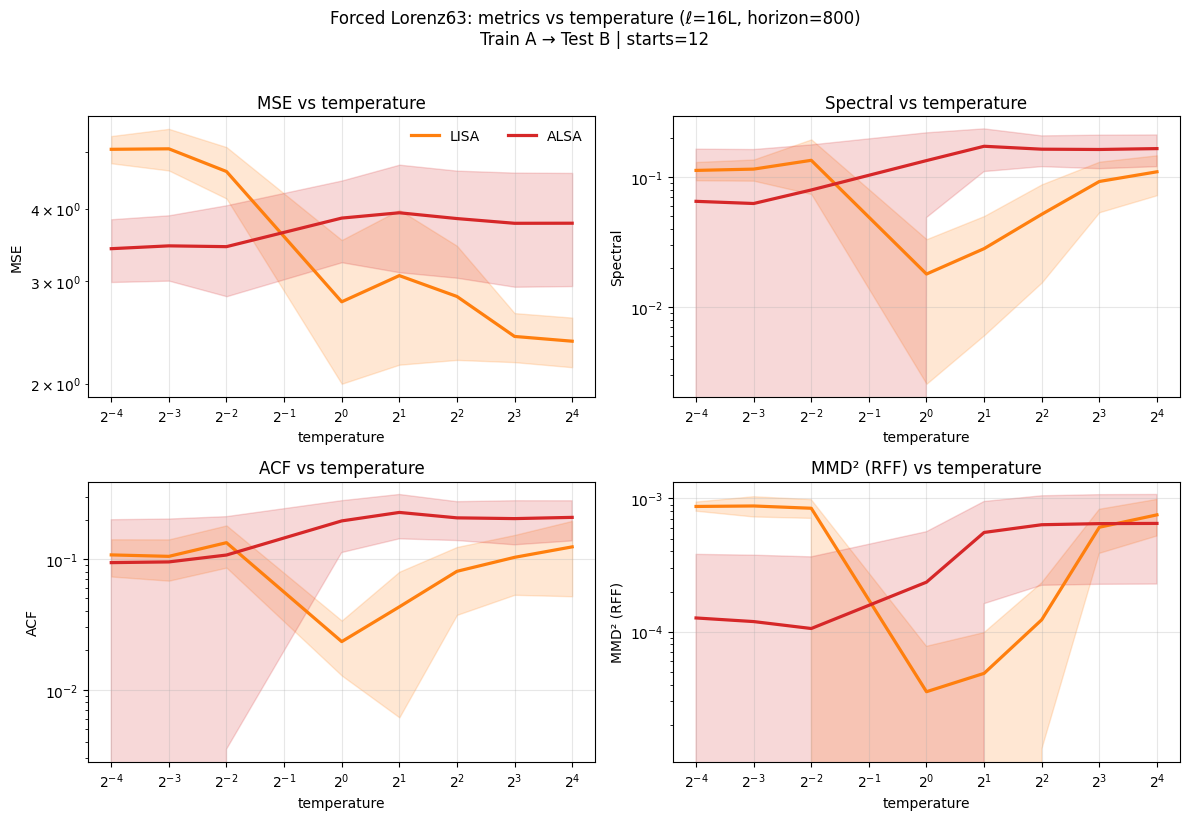

The model’s predictive performance in nonstationary environments is enhanced by its ability to leverage recent data context without parameter updates. Empirical evaluation, using metrics such as Mean Squared Error (MSE), spectral divergence, Autocorrelation Function Mean Squared Error (ACF-MSE), and Maximum Mean Discrepancy with Random Fourier Features (MMD²(RFF)), demonstrates a consistent trend of improvement as the length of the considered context increases. Specifically, reductions in these error metrics indicate that the model effectively adapts to changing dynamics by incorporating information from the immediate history of data points, thereby improving forecast accuracy in time-varying systems.
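Three of these metrics can be sketched compactly. The definitions below are common textbook forms and may differ in detail from those used in the paper. The maximum lag, number of random features, and kernel length scale are assumed values, and spectral divergence is omitted because its exact definition is not given here.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def autocorrelation(x, max_lag):
    """Sample autocorrelation of a 1-D series for lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[:len(x) - k], x[k:]) / denom for k in range(max_lag + 1)])

def acf_mse(y_true, y_pred, max_lag=20):
    """MSE between the autocorrelation functions of two 1-D series."""
    return mse(autocorrelation(y_true, max_lag), autocorrelation(y_pred, max_lag))

def mmd2_rff(X, Y, n_features=256, lengthscale=1.0, seed=0):
    """Biased estimate of squared MMD using random Fourier features of a Gaussian kernel."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=1.0 / lengthscale, size=(X.shape[1], n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)

    def features(A):
        return np.sqrt(2.0 / n_features) * np.cos(A @ W + b)

    # Squared distance between the mean feature embeddings of the two samples.
    diff = features(X).mean(axis=0) - features(Y).mean(axis=0)
    return float(diff @ diff)

# Toy comparison: a sine wave against a phase-shifted copy standing in for a forecast.
t = np.linspace(0.0, 20.0, 400)
truth = np.sin(t)
forecast = np.sin(t + 0.2)
print("MSE:", mse(truth, forecast))
print("ACF-MSE:", acf_mse(truth, forecast))
print("MMD^2 (RFF):", mmd2_rff(truth[:, None], forecast[:, None]))
```

The phase-shifted forecast illustrates why several metrics are reported together: pointwise MSE penalizes the shift, whereas the autocorrelation and distributional metrics compare statistical structure and are far less sensitive to it.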

Validation and Practical Impact

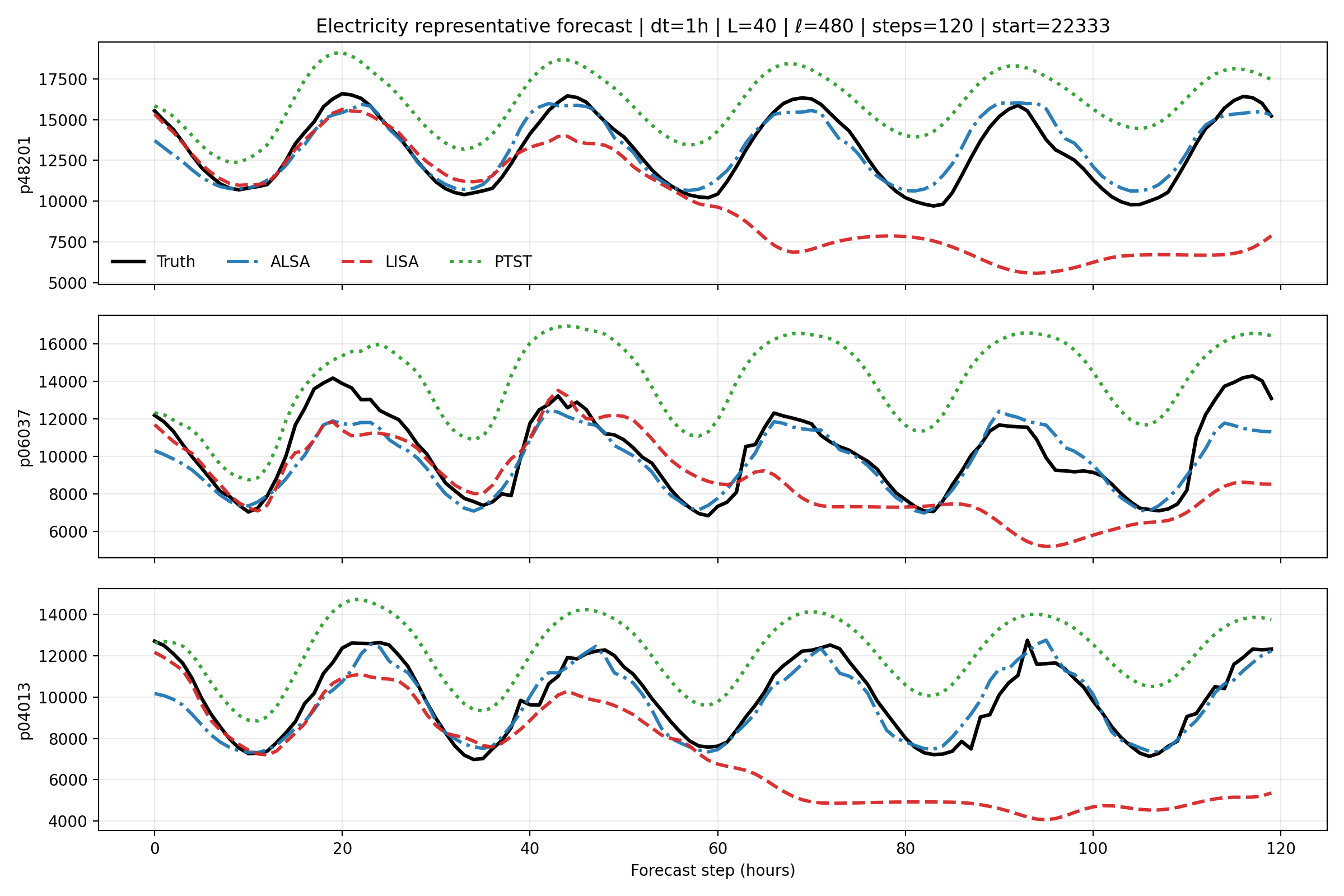

Rigorous testing of the forecasting framework using actual electricity load data reveals a substantial improvement in predictive accuracy when compared to established time-series methodologies. These evaluations, conducted on diverse datasets representing varying consumption patterns, consistently demonstrate the framework’s capability to model complex, real-world dynamics. The system doesn’t simply predict future loads; it accurately captures subtle fluctuations and trends often missed by conventional techniques, leading to more reliable short-term and long-term forecasts. This enhanced precision has significant implications for optimizing energy distribution, reducing waste, and improving the overall efficiency of power grids, offering a practical solution for a critical infrastructure challenge.

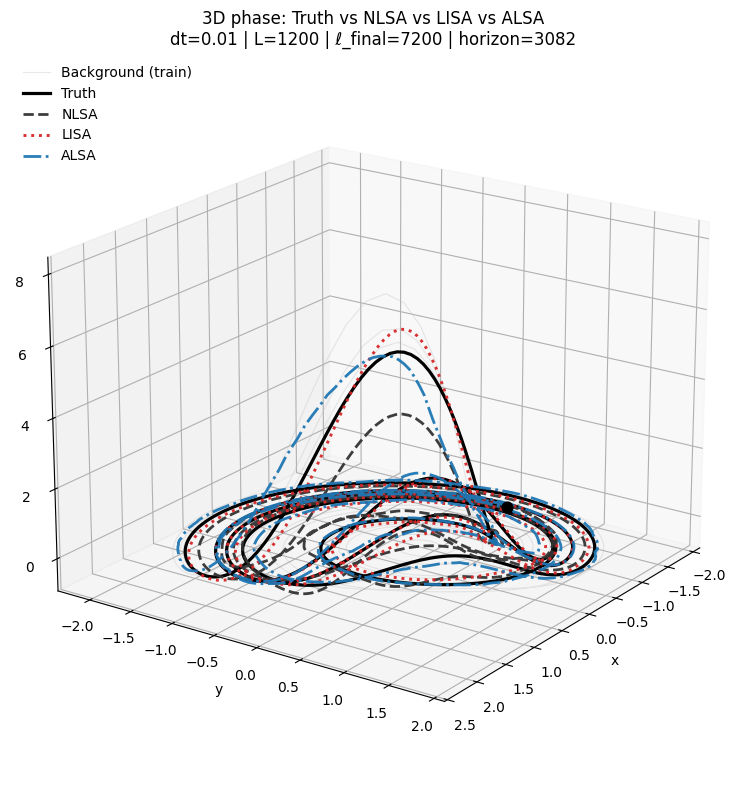

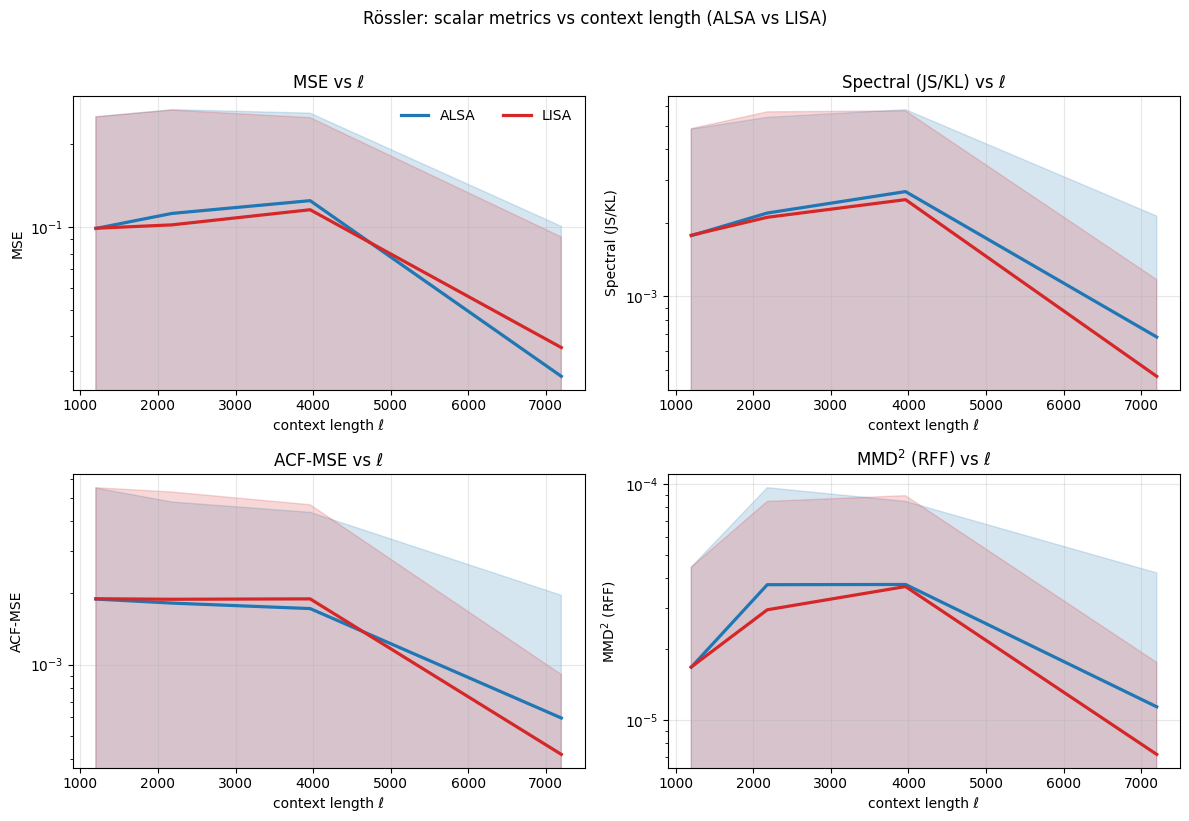

The framework’s capacity to accurately model chaotic systems, specifically the Lorenz and Rössler attractors, demonstrates a remarkable level of robustness and adaptability. These systems, famed for their sensitive dependence on initial conditions and inherently unpredictable behavior, pose a significant challenge to traditional forecasting methods. The ability of this approach to not only generate plausible trajectories within these chaotic regimes, but to maintain accuracy over extended forecasting horizons, highlights its capacity to handle complex, nonlinear dynamics. This isn’t simply about predicting specific values; it’s about capturing the underlying structure of unpredictability itself, suggesting the framework can generalize beyond the limitations of linear models and offer reliable insights even when dealing with highly sensitive and volatile data.

Rigorous testing demonstrates that a novel forecasting approach, leveraging both nonlinear spectral analysis and in-context adaptation, substantially enhances predictive performance and the ability to generalize to unseen data. This improvement isn’t merely theoretical; it’s consistently reflected in key performance indicators across diverse datasets. Specifically, evaluations reveal significantly lower Mean Squared Error (MSE) values, indicating greater accuracy, alongside reduced spectral divergence, which confirms a closer match between predicted and actual spectral characteristics. Further bolstering these findings are consistently improved scores for both Autocorrelation Function Mean Squared Error (ACF-MSE) – demonstrating better capture of temporal dependencies – and MMD²(RFF), a metric measuring the similarity of probability distributions. These combined results suggest that this method offers a robust and reliable advancement over traditional forecasting techniques, promising enhanced predictive power in complex systems.

The developed forecasting framework extends beyond theoretical demonstration, offering tangible benefits to diverse and critical sectors. In energy management, accurate predictions of electricity load enable optimized grid operation, reduced waste, and improved resource allocation – crucial steps towards sustainable energy practices. Financial forecasting stands to gain from this approach through enhanced risk assessment and more reliable market predictions, potentially leading to more informed investment strategies. Furthermore, the framework’s ability to model chaotic systems provides a powerful tool for climate modeling, offering the potential to improve the accuracy of long-term climate predictions and facilitate proactive mitigation efforts. By consistently improving upon existing methods, this technology promises to be a valuable asset in navigating the complexities of these interconnected fields and building more resilient systems.

The pursuit of robust time-series forecasting, as demonstrated by LISA, echoes a fundamental tenet of mathematical elegance: provable correctness. The framework’s integration of nonparametric geometric models with in-context learning isn’t merely about achieving empirical success, but about establishing a system capable of adapting to non-stationary data through spectral analysis. This mirrors the principle that a truly refined algorithm isn’t simply ‘working on tests’, but possesses an inherent logical structure. As a line popularly attributed to Alan Turing puts it, “Sometimes it is the people who no one imagines anything of who do the things that no one can imagine.” LISA, in its novel combination of diffusion maps and Gaussian process latent models, embodies this spirit of unexpected innovation, pushing the boundaries of what is considered possible in time-series analysis.

What Remains?

The introduction of LISA, while demonstrating a practical improvement in time-series forecasting, merely shifts the fundamental question. Let N approach infinity – what remains invariant? The core challenge isn’t simply predicting the next data point, but establishing a provably robust manifold upon which these series reside. Current nonparametric methods, even those incorporating in-context learning, remain susceptible to the curse of dimensionality and the fragility of spectral decomposition when faced with truly chaotic or high-frequency non-stationarity. The ‘in-context’ mechanism, while mimicking human adaptability, lacks the axiomatic grounding of a verifiable geometric principle.

Future work must focus on identifying those invariants. A fruitful avenue lies in exploring connections between Laplacian spectral analysis and information-theoretic bounds on predictability. Can a provable limit be established, beyond which forecasting becomes fundamentally impossible, regardless of model complexity? Moreover, the current reliance on Gaussian Process latent models introduces assumptions that may not hold universally. A more rigorous treatment would necessitate a model-agnostic approach, focusing solely on the intrinsic geometry of the time-series data itself.

Ultimately, the pursuit of accurate forecasting should not be conflated with understanding the underlying generative process. LISA offers a pragmatic solution, yet it is a descriptive, not an explanatory, framework. The true elegance will reside in a formalism that reveals the why, not merely the what: a formalism where predictive power is a natural consequence of provable geometric constraints.

Original article: https://arxiv.org/pdf/2602.04906.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 07:29