Author: Denis Avetisyan

As AI increasingly powers vehicle automation, traditional safety standards are proving insufficient, demanding a broader evaluation of functional behavior beyond simple component failure.

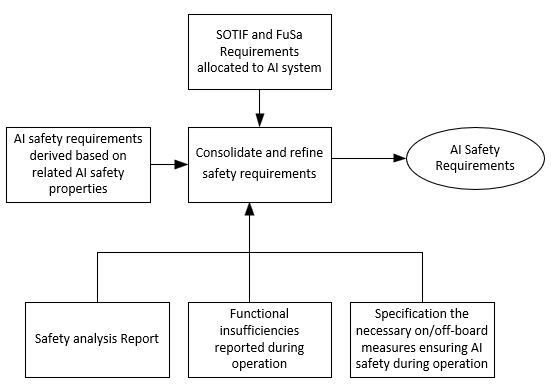

This review argues that even AI components managed through Quality Management processes require rigorous Safety of the Intended Functionality (SOTIF) analysis to mitigate hazards arising from functional limitations.

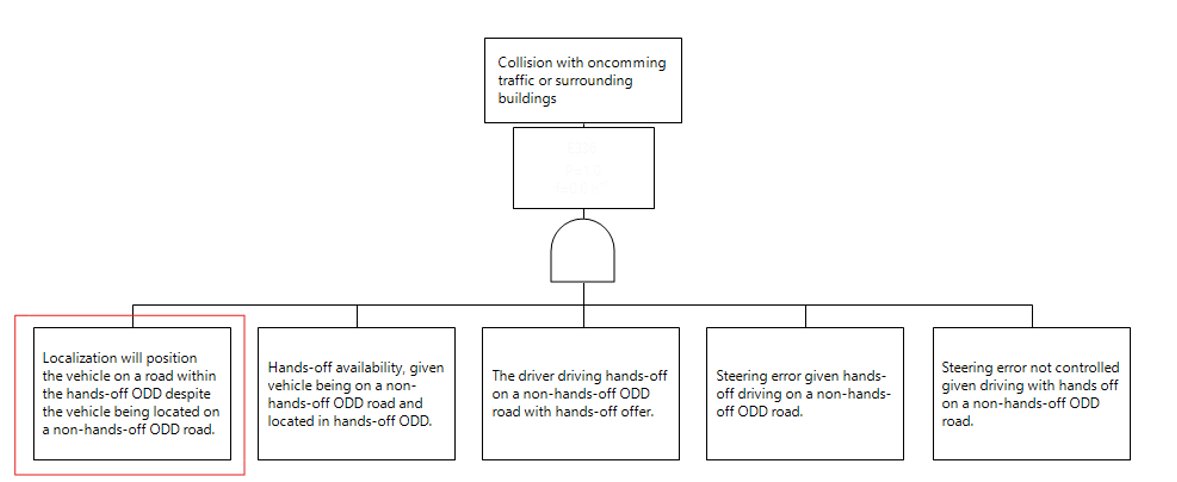

While automotive safety standards traditionally compartmentalize component classifications, often excluding Quality Management (QM)-rated elements from rigorous hazard analysis, the increasing integration of artificial intelligence necessitates a re-evaluation of this approach. This paper, ‘The Necessity of a Holistic Safety Evaluation Framework for AI-Based Automation Features’, argues that even QM-classified AI components within driving automation systems can contribute to Safety of the Intended Functionality (SOTIF)-related risks, demanding comprehensive safety assessment under emerging standards like ISO/PAS 8800. Through case studies of perception algorithms, the paper demonstrates that functional insufficiencies, rather than traditional failures, can lead to critical safety implications, violating established risk acceptance criteria. How can existing safety frameworks be effectively revised to proactively address the unique challenges posed by AI and ensure comprehensive safety assurance across all component classifications?

The Inevitable Shift: Navigating the Evolution of Vehicle Automation

The automotive industry is currently experiencing a dramatic transformation, fueled by relentless innovation in Advanced Driver-Assistance Systems (ADAS) and Artificial Intelligence (AI). Once limited to features like adaptive cruise control and lane departure warnings, ADAS are now evolving into increasingly sophisticated systems capable of handling more complex driving scenarios. Simultaneously, breakthroughs in machine learning, particularly deep neural networks, are enabling vehicles to perceive their surroundings with greater accuracy and make more informed decisions. This convergence isn’t merely incremental; it’s a fundamental shift towards higher levels of automation, ranging from driver-assistance to fully autonomous operation. Consequently, investment in autonomous vehicle technology is surging, with major automakers, tech companies, and startups all vying for leadership in this rapidly expanding landscape. The anticipated benefits extend beyond convenience and efficiency, promising safer roads, reduced congestion, and increased accessibility for those unable to drive.

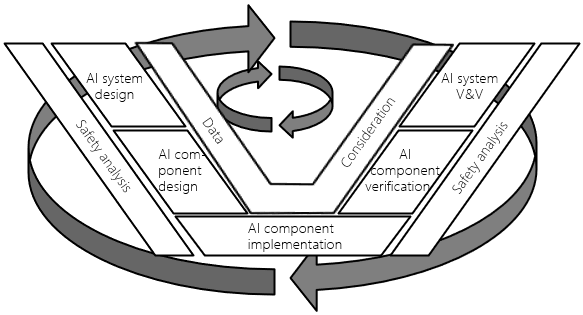

Historically, vehicle safety relied on Functional Safety – a reactive approach identifying known hazards and building systems to mitigate predictable failures. However, the inherent complexity of Artificial Intelligence powering autonomous vehicles presents a significant challenge to this established paradigm. AI systems, particularly those employing deep learning, often operate as ‘black boxes’, making it difficult to anticipate all possible responses to novel or ambiguous situations. This unpredictability means traditional hazard analysis, based on pre-defined scenarios, is insufficient to guarantee safety; the system can encounter and react to conditions it wasn’t specifically programmed to handle. Consequently, a reliance on solely demonstrating the absence of failure – the core of Functional Safety – proves inadequate when dealing with the emergent behaviors and continuous learning capabilities of AI-driven autonomous systems, necessitating a more proactive and comprehensive safety framework.

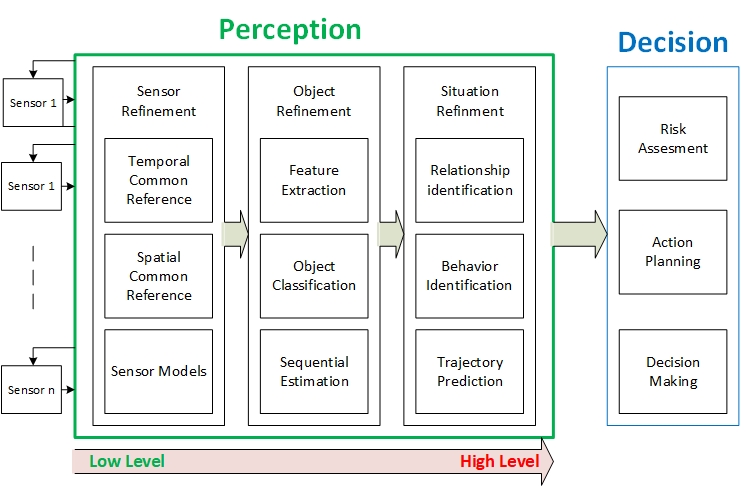

Truly safe autonomous operation demands a fundamental shift from reactive to proactive safety measures. Traditional functional safety, while vital for component reliability, struggles with the unpredictable nature of artificial intelligence and the complex scenarios encountered in real-world driving. Instead of solely focusing on mitigating failures after they occur, current research emphasizes anticipating potential hazards before they materialize. This involves leveraging advanced predictive modeling, utilizing sensor fusion to create a comprehensive understanding of the vehicle’s surroundings, and employing AI algorithms capable of identifying subtle cues indicative of impending danger. Such a proactive approach necessitates continuous risk assessment, real-time adaptation to changing conditions, and the implementation of preventative strategies, ultimately aiming to avoid accidents rather than simply minimizing their consequences. The goal is not merely to build self-driving cars that react well to crises, but to create systems that foresee and circumvent them altogether.

Beyond Simple Failure: Addressing the Nuances of SOTIF

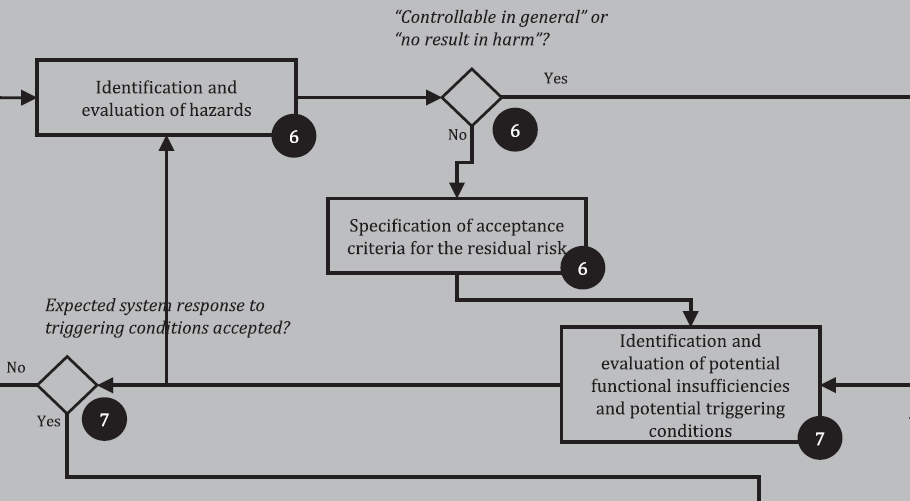

ISO 21448 defines Safety Of The Intended Functionality (SOTIF) as the absence of unreasonable risk from hazards caused by functional insufficiencies of the intended functionality or its implementation, that is, hazards that arise even when the system is functioning as designed. This represents a shift from traditional functional safety, which primarily addresses hazards caused by component failures or random hardware malfunctions. SOTIF focuses on reasonably foreseeable misuse, performance limitations under edge-case scenarios, and uncertainties in perception or prediction that could lead to unsafe behavior, even if no technical fault exists. Consequently, SOTIF necessitates hazard analysis and risk mitigation strategies that consider the complete operational domain and potential interactions between the system, its environment, and other actors, extending beyond the scope of ISO 26262, which concentrates on avoiding or controlling systematic faults and random hardware failures.

Traditional hazard analysis in automotive safety, as defined by standards like ISO 26262, largely concentrates on failures within individual hardware or software components. However, the shift towards SOTIF necessitates a broadened scope, focusing on hazards arising from the intended functionality of the system, even when all components are operating as designed. This means analysis must now encompass scenarios where logical flaws, environmental factors, or reasonably foreseeable misuse lead to unintended, potentially hazardous behavior. This requires identifying situations where the system’s algorithms or control logic, while technically correct, could result in unsafe outcomes due to limitations in sensing, perception, or decision-making under specific, valid operating conditions. Consequently, hazard analysis must move beyond component-level fault injection and embrace system-level scenario analysis to identify and mitigate these risks.

System-Theoretic Process Analysis (STPA) is gaining prominence as a technique to address the expanded scope of safety analysis required by SOTIF, moving beyond traditional failure-based approaches. Unlike hazard analysis focused on component malfunctions, STPA examines the control structure of a system to identify potentially hazardous control actions, considering how interactions between components can lead to unintended consequences. This is particularly relevant in systems incorporating Artificial Intelligence (AI), as components meeting Quality Management (QM) standards – implying adherence to development processes – do not inherently guarantee safety in all operational contexts. AI’s reliance on data and algorithms introduces the potential for unforeseen behavior under specific conditions, making a systemic analysis like STPA crucial for identifying and mitigating SOTIF-related hazards even within seemingly well-managed AI components.
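As a rough illustration of how an STPA worklist is built, the sketch below crosses each control action with the four guide phrases commonly used in STPA to look for unsafe control actions. The controller, the actions, and the contexts are hypothetical examples rather than items from the paper, and the output is only a list of candidates for analysts to accept or reject.

```python
from dataclasses import dataclass
from itertools import product

# The four guide phrases commonly used in STPA for unsafe control actions (UCAs).
UCA_TYPES = (
    "not provided when needed",
    "provided when it causes a hazard",
    "provided too early, too late, or out of order",
    "stopped too soon or applied too long",
)


@dataclass(frozen=True)
class UcaCandidate:
    controller: str
    action: str
    uca_type: str
    context: str


def enumerate_uca_candidates(controller, actions, contexts):
    """Cross every control action with every guide phrase and context.

    The result is a review worklist; it does not decide by itself which
    combinations are actually hazardous.
    """
    return [
        UcaCandidate(controller, action, uca_type, context)
        for action, uca_type, context in product(actions, UCA_TYPES, contexts)
    ]


# Hypothetical control structure for an ODD monitor (illustrative only).
candidates = enumerate_uca_candidates(
    controller="ODD monitor",
    actions=["request safe state", "confirm inside ODD"],
    contexts=["vehicle near geofence boundary", "GPS degraded"],
)
for c in candidates[:3]:
    print(f"{c.controller}: '{c.action}' {c.uca_type} [{c.context}]")
```

Generating the full cross product first and pruning it by hand keeps the analysis systematic: a QM-rated component still appears in the list whenever it issues or informs a control action.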

Beyond Reaction: Tools for Proactive Hazard Analysis

Hazard and Operability (HAZOP) studies and Fault Tree Analysis (FTA) are established techniques for systematically identifying hazards associated with individual components and their immediate interactions within a system. However, these methods struggle to effectively address the emergent behavior and complex interactions characteristic of Artificial Intelligence (AI) systems. AI’s reliance on data-driven algorithms and machine learning introduces hazards not easily captured by pre-defined failure modes or component-level analysis. Specifically, issues like data bias, adversarial attacks, and unpredictable responses to novel inputs require analytical approaches beyond the scope of traditional HAZOP and FTA, necessitating supplementary or alternative hazard analysis techniques for safe AI system deployment.

Bayesian Networks represent a probabilistic graphical model that allows for the depiction of dependencies and causal relationships between variables within a system. Unlike deterministic methods, they quantify uncertainty and allow hazard analysis to move beyond simple “present/absent” assessments. These networks utilize directed acyclic graphs where nodes represent variables (e.g., sensor readings, system states, component failures) and edges represent probabilistic dependencies. Probabilities are assigned to each node given its parent nodes, enabling the calculation of the probability of a hazard occurring given evidence from other variables. This allows analysts to model complex scenarios, propagate uncertainties, and identify critical pathways contributing to system failures, making them particularly well-suited for analyzing the intricate interactions within AI-driven systems where hazards may not be directly attributable to single component failures.
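To make the mechanics concrete, here is a minimal sketch of a four-node network evaluated by exhaustive enumeration. The structure (fog and sensor fault influence a perception miss, which influences a hazard) and every probability value are assumptions chosen for illustration; none of them come from the paper.

```python
from itertools import product

# Hypothetical priors and conditional probability tables (illustrative numbers).
P_FOG = 0.1
P_FAULT = 0.01
# P(miss = True | fog, fault)
P_MISS = {
    (False, False): 0.001,
    (True, False): 0.05,
    (False, True): 0.2,
    (True, True): 0.5,
}
# P(hazard = True | miss)
P_HAZARD = {False: 0.0001, True: 0.1}


def bern(p, value):
    """Probability that a Boolean variable with P(True) = p takes `value`."""
    return p if value else 1.0 - p


def joint(fog, fault, miss, hazard):
    """Joint probability, factored along the edges of the network."""
    return (
        bern(P_FOG, fog)
        * bern(P_FAULT, fault)
        * bern(P_MISS[(fog, fault)], miss)
        * bern(P_HAZARD[miss], hazard)
    )


def prob_hazard(evidence=None):
    """P(hazard = True | evidence), summing over all consistent worlds."""
    evidence = evidence or {}
    numerator = denominator = 0.0
    for fog, fault, miss, hazard in product([False, True], repeat=4):
        world = {"fog": fog, "fault": fault, "miss": miss, "hazard": hazard}
        if any(world[name] != value for name, value in evidence.items()):
            continue
        p = joint(fog, fault, miss, hazard)
        denominator += p
        if hazard:
            numerator += p
    return numerator / denominator


print("P(hazard)       =", prob_hazard())
print("P(hazard | fog) =", prob_hazard({"fog": True}))
```

With these made-up numbers, conditioning on fog raises the hazard probability even though no component has failed, which is the kind of effect a present/absent fault model cannot express. Enumeration is only practical for small networks; larger models would need a dedicated inference method.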

Effective risk assessment for autonomous systems necessitates the application of Automotive Safety Integrity Levels (ASIL) to prioritize mitigation strategies based on potential hazard severity, probability of exposure, and controllability. The resulting classification, ranging from QM (no ASIL assigned) through ASIL A to ASIL D, dictates the rigor of safety requirements and validation activities. Critical performance metrics, such as safe state trigger latency, directly impact system safety; for instance, maintaining a latency of 100 ms or less is crucial for reliable Low-Level Perception (LLP) operational design domain (ODD) detection, ensuring the system can transition to a safe state before potentially hazardous conditions arise. Failure to meet these latency requirements, as determined through ASIL-based hazard analysis, can escalate risk and compromise operational safety.
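The classification step can be sketched in a few lines. The helper below uses a widely cited shortcut that reproduces the ISO 26262 determination table by summing the class indices for severity (S1 to S3), exposure (E1 to E4), and controllability (C1 to C3); the function names are hypothetical, the S0, E0, and C0 classes are deliberately left out, and the latency check simply applies the 100 ms figure quoted above to a list of measured values.

```python
# Sum of class indices -> ASIL; any lower sum maps to QM.
ASIL_BY_SUM = {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}


def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Return the ASIL for S1-S3, E1-E4, C1-C3 using the index-sum shortcut.

    S0, E0 and C0 (which need no ASIL) are not handled by this sketch.
    """
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S in 1..3, E in 1..4, C in 1..3")
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


def latency_within_budget(latencies_ms, budget_ms: float = 100.0) -> bool:
    """True only if the worst measured safe-state trigger latency meets the budget."""
    if not latencies_ms:
        raise ValueError("no latency measurements supplied")
    return max(latencies_ms) <= budget_ms


print(determine_asil(3, 4, 3))
print(determine_asil(2, 3, 2))
print(latency_within_budget([62.0, 88.5, 97.1]))
```

Checking the worst case rather than the mean is a design choice in this sketch: a single late safe-state trigger is what matters for the hazard, not the average behavior.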

The Foundation of Trust: Data Integrity and AI Assurance

The efficacy of advanced driver-assistance systems (ADAS) powered by artificial intelligence fundamentally depends on the caliber of the data fueling their operation. These systems learn to perceive and react to the world through extensive training datasets, meaning inaccuracies, incompleteness, or inconsistencies within this data can directly translate into compromised performance and safety risks. A system trained on biased or poorly labeled data may exhibit discriminatory behavior or fail to recognize critical objects in real-world scenarios. Furthermore, even minor data corruption can introduce subtle errors that accumulate over time, eroding the reliability of the ADAS and potentially leading to incorrect decisions. Consequently, meticulous data curation, validation, and continuous monitoring are not merely best practices, but essential components for ensuring the safe and dependable operation of AI-driven ADAS technologies.

A dependable Dataset Lifecycle management process is paramount to the successful deployment of artificial intelligence, ensuring the data underpinning these systems remains accurate, complete, and consistent throughout its entire journey. This process extends beyond simple data collection, encompassing rigorous validation, careful annotation, and continuous monitoring for drift or anomalies. Without such a structured approach, subtle errors or biases within the dataset can propagate through the AI model, leading to unpredictable behavior and potentially compromising safety-critical applications. Effective lifecycle management includes version control, clear data provenance tracking, and automated quality checks, allowing for swift identification and remediation of data-related issues. Ultimately, prioritizing data integrity throughout the lifecycle isn’t merely a best practice; it’s a foundational requirement for building trustworthy and reliable AI systems.
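A minimal sketch of what version control, provenance tracking, and automated quality checks might look like for a small annotation set follows. The record fields, label set, and manifest layout are hypothetical; a production pipeline would rely on dedicated tooling, and this only shows the shape of the checks.

```python
import hashlib
import json


def record_fingerprint(record: dict) -> str:
    """Stable SHA-256 over a canonical JSON encoding of one record."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_manifest(version: str, source: str, records: list) -> dict:
    """Capture version, provenance, and per-record fingerprints."""
    return {
        "version": version,
        "source": source,
        "count": len(records),
        "fingerprints": [record_fingerprint(r) for r in records],
    }


def quality_issues(records, required_fields, allowed_labels):
    """Return (index, message) pairs for missing fields, unknown labels, duplicates."""
    issues, seen = [], set()
    for index, record in enumerate(records):
        missing = [f for f in required_fields if record.get(f) in (None, "")]
        if missing:
            issues.append((index, "missing fields: " + ", ".join(missing)))
        label = record.get("label")
        if label and label not in allowed_labels:
            issues.append((index, f"unknown label: {label}"))
        fingerprint = record_fingerprint(record)
        if fingerprint in seen:
            issues.append((index, "duplicate record"))
        seen.add(fingerprint)
    return issues


# Hypothetical annotation records (illustrative only).
records = [
    {"id": "f1", "label": "pedestrian", "sensor": "camera"},
    {"id": "f2", "label": "unicorn", "sensor": "camera"},
    {"id": "f1", "label": "pedestrian", "sensor": "camera"},
    {"id": "f4", "label": "", "sensor": "lidar"},
]
manifest = build_manifest("v1", "hypothetical-collection-run", records)
issues = quality_issues(records, ("id", "label", "sensor"), {"pedestrian", "vehicle"})
for index, message in issues:
    print(index, message)
```

Storing fingerprints in the manifest means two dataset versions can be compared record by record, so a change in model behavior can be traced back to the data that changed.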

The reliable performance of AI-driven systems, particularly in safety-critical applications, is increasingly governed by emerging standards like ISO/PAS 8800, which establish crucial assurance practices. These guidelines directly address vulnerabilities stemming from data bias and the potential for adversarial attacks designed to compromise system integrity. Achieving robust performance necessitates strict quality control; for instance, the LLP requires a boundary classification error rate below 1% even when faced with GPS position inaccuracies of up to ±5 meters. Furthermore, consistent and timely data updates are paramount, demanding high-definition map synchronization at least every 24 hours to guarantee the accuracy of geofence data and maintain the overall safety and dependability of the AI system.
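The two numeric requirements above lend themselves to simple automated checks. The sketch below is one possible reading, not the paper's method: it assumes a signed distance to the geofence boundary (positive inside, negative outside), treats the ±5 meter figure as a hard bound on GPS error, and reports positions inside that band as uncertain rather than guessing. All names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

GPS_ERROR_BOUND_M = 5.0            # from the +/-5 m figure in the text
MAX_BOUNDARY_ERROR_RATE = 0.01     # "below 1%"
MAX_MAP_AGE = timedelta(hours=24)  # HD map sync at least every 24 hours


def classify_position(signed_distance_m: float) -> str:
    """Classify a measured position relative to the geofence boundary.

    Assumes the GPS error never exceeds the bound, so a measurement
    farther than the bound from the boundary has an unambiguous side.
    """
    if signed_distance_m > GPS_ERROR_BOUND_M:
        return "inside"
    if signed_distance_m < -GPS_ERROR_BOUND_M:
        return "outside"
    return "uncertain"


def boundary_error_rate(predicted, actual) -> float:
    """Fraction of test samples whose predicted side differs from ground truth."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("need two non-empty label lists of equal length")
    return sum(p != a for p, a in zip(predicted, actual)) / len(actual)


def map_is_fresh(last_sync: datetime, now: datetime) -> bool:
    """True if the last HD map synchronization is at most 24 hours old."""
    return now - last_sync <= MAX_MAP_AGE


# Hypothetical test campaign: 5 misclassifications in 1000 samples.
now = datetime(2026, 2, 7, 12, 0, tzinfo=timezone.utc)
predicted = ["inside"] * 995 + ["outside"] * 5
actual = ["inside"] * 1000
rate = boundary_error_rate(predicted, actual)
print(rate < MAX_BOUNDARY_ERROR_RATE, map_is_fresh(now - timedelta(hours=20), now))
```

How the system should respond to an "uncertain" result (for example, by treating it as outside the ODD) is a safety decision that this sketch leaves open.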

Towards Resilient Systems: The Future of Autonomous Safety

The development of genuinely safe autonomous systems hinges on a synergistic approach to hazard analysis and data management. Traditional methods of identifying potential risks are proving insufficient for the complexity of self-driving technology; therefore, sophisticated techniques – encompassing both predictive modeling and real-time monitoring – are essential. However, the sheer volume of data generated by autonomous vehicles necessitates robust data infrastructure capable of efficient storage, retrieval, and analysis. This requires not only scalable databases but also sophisticated data governance policies to ensure data quality, consistency, and security. By integrating these advanced hazard analysis methods with such robust data management practices, developers can proactively identify and mitigate risks, leading to more reliable and trustworthy autonomous operation. The ability to comprehensively assess potential failures, coupled with the capacity to learn from vast datasets, is paramount to building public confidence and realizing the full potential of autonomous technology.

Advancements in autonomous system safety are increasingly reliant on a shift towards data-centric artificial intelligence and the implementation of explainable AI (XAI) techniques. Traditional AI development often prioritizes model architecture, but a data-centric approach recognizes that the quality and representativeness of training data are paramount for reliable performance, particularly in unpredictable real-world scenarios. By focusing on data curation, augmentation, and validation, developers can build systems that generalize more effectively and exhibit fewer unexpected behaviors. Crucially, XAI methods provide insights into the decision-making processes of these complex algorithms, allowing engineers to identify potential biases, understand failure modes, and ultimately build greater trust in autonomous systems. This enhanced understanding isn’t merely about debugging; it’s about proactively mitigating risks and ensuring that autonomous vehicles operate predictably and safely, even in challenging conditions, thereby facilitating wider public acceptance and deployment.

The trajectory of autonomous vehicle safety is increasingly defined by evolving international standards, notably ISO 21448 and ISO/PAS 8800, which provide a framework for managing functional insufficiencies, AI-specific safety concerns, and operational design domain (ODD) limitations. These standards aren’t merely descriptive; they actively shape development through quantifiable metrics. For example, systems are designed to trigger driver alerts and initiate deceleration if cumulative positional drift exceeds 3 meters over 30 seconds. Rigorous validation, guided by a target residual risk of no more than 1 × 10⁻⁶ adverse events per kilometer traveled, is employed to assess the effectiveness of critical components like the LLP-based ODD detector. This emphasis on measurable safety, formalized through standardized procedures, isn’t simply about compliance; it’s a fundamental step towards building public trust and enabling the widespread adoption of self-driving technology by demonstrating a commitment to minimizing risk and maximizing reliability.
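Both figures can be turned into small, testable pieces of logic. The sketch below is an illustration under stated assumptions, not the paper's implementation: the drift monitor sums absolute drift increments over a sliding 30 second window, and the distance estimate assumes adverse events follow a Poisson process and that none are observed during validation. Class and function names are hypothetical.

```python
import math
from collections import deque


class DriftMonitor:
    """Flags when cumulative positional drift in a sliding window exceeds a limit."""

    def __init__(self, limit_m: float = 3.0, window_s: float = 30.0):
        self.limit_m = limit_m
        self.window_s = window_s
        self._samples = deque()  # (timestamp_s, absolute drift increment in m)
        self._total_m = 0.0

    def update(self, timestamp_s: float, drift_increment_m: float) -> bool:
        """Add one sample; return True if an alert should be raised."""
        increment = abs(drift_increment_m)
        self._samples.append((timestamp_s, increment))
        self._total_m += increment
        # Drop samples that have left the window (timestamp <= now - window).
        while self._samples and self._samples[0][0] <= timestamp_s - self.window_s:
            _, old = self._samples.popleft()
            self._total_m -= old
        return self._total_m > self.limit_m


def event_free_km_required(target_rate_per_km: float, confidence: float = 0.95) -> float:
    """Event-free distance needed to support rate <= target at the given confidence.

    Assumes a Poisson event process and zero observed events:
    exp(-rate * km) <= 1 - confidence.
    """
    return -math.log(1.0 - confidence) / target_rate_per_km


monitor = DriftMonitor()
alerts = [monitor.update(t, 0.75) for t in range(0, 30, 5)]
print(alerts)
print(f"{event_free_km_required(1e-6):.0f} km")
```

Under these assumptions, roughly three million event-free kilometers would be needed to support the 1 × 10⁻⁶ per kilometer target at 95% confidence, which helps explain why validation usually combines road testing with simulation and analysis.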

The pursuit of safety in automated systems, as detailed in this analysis of SOTIF and ISO 26262, reveals a fundamental truth about complex creations. Even components deemed ‘QM’ are not immune to hazard: their functional insufficiencies, rather than outright failures, present unique risks. This echoes Arthur C. Clarke’s third law: “Any sufficiently advanced technology is indistinguishable from magic.” The ‘magic’ of AI, while promising, demands rigorous evaluation, not because it’s inherently unreliable, but because its behavior, like that of any system, changes over time, and its intended functionality must be continually reassessed to ensure graceful aging, a principle crucial to maintaining safety as AI matures within automotive applications.

The Long View

The insistence on extending safety frameworks – initially conceived for deterministic systems – to encompass the stochastic nature of AI is not a triumph, but a recognition of persistent inadequacy. The categorization of AI components as ‘QM’ offers a comforting illusion of control, a deferral of genuine analysis. This work highlights the fallacy of that position; quality management addresses how something is built, not what it might become under novel conditions. Every abstraction carries the weight of the past, and the very act of defining ‘intended functionality’ within a static framework limits the ability to anticipate emergent hazards.

Future efforts must move beyond simply layering SOTIF and ISO/PAS 8800 onto existing structures. A truly resilient approach requires embracing the inherent uncertainty of these systems, and developing methods for continuous, adaptive hazard analysis. The focus should shift from preventing failure to managing degradation – acknowledging that all systems decay, and the goal is not immortality, but graceful aging.

Ultimately, the pursuit of ‘safe AI’ is a temporary reprieve. The more pressing question isn’t how to eliminate risk, but how to build systems capable of absorbing and adapting to it. Only slow change preserves resilience, and the long-term viability of AI-based automation depends not on preventing the unexpected, but on preparing for it.

Original article: https://arxiv.org/pdf/2602.05157.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-07 16:32