Author: Denis Avetisyan

Deploying artificial intelligence in finance demands more than predictive power: it requires balancing performance, regulatory compliance, and genuine understanding.

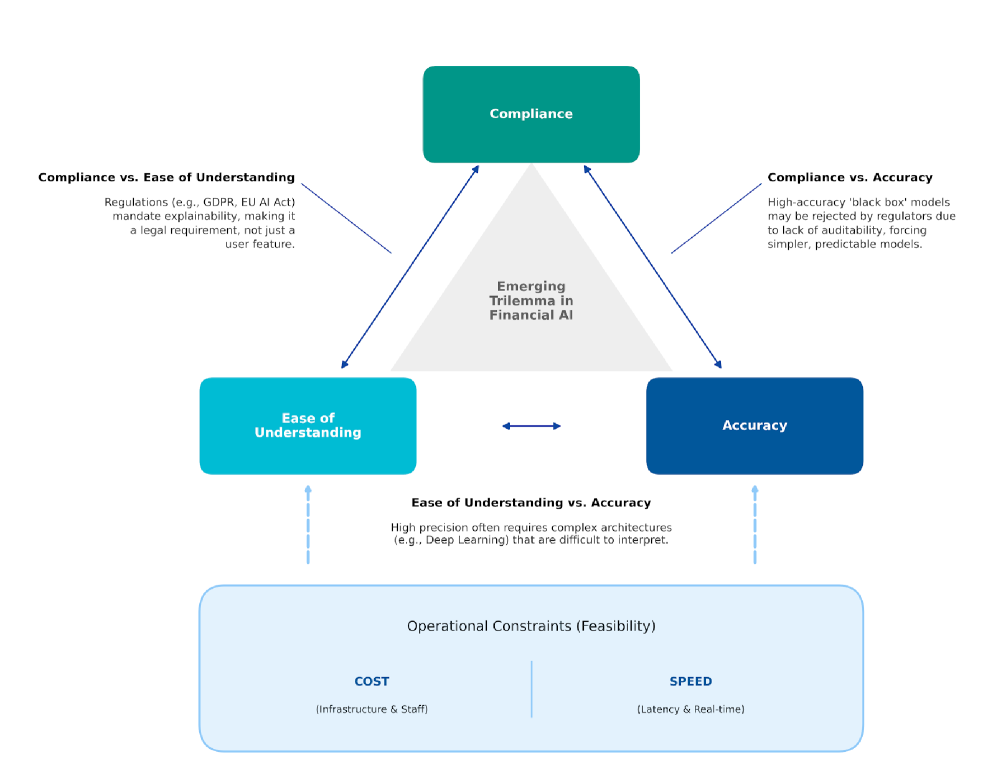

This review argues that successful financial AI implementation hinges on navigating a trilemma in which accuracy and compliance are baseline requirements and explainability is the key to operational success.

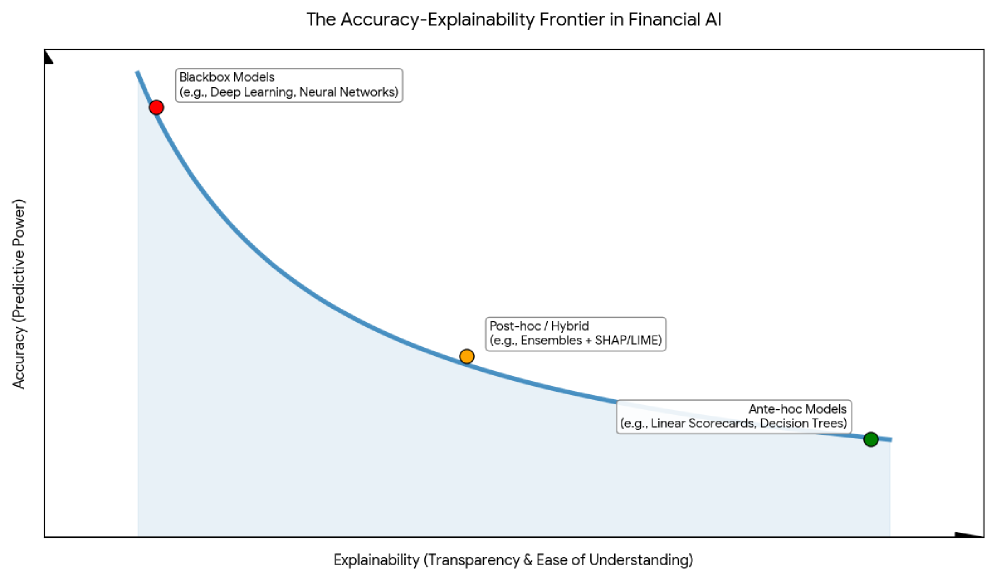

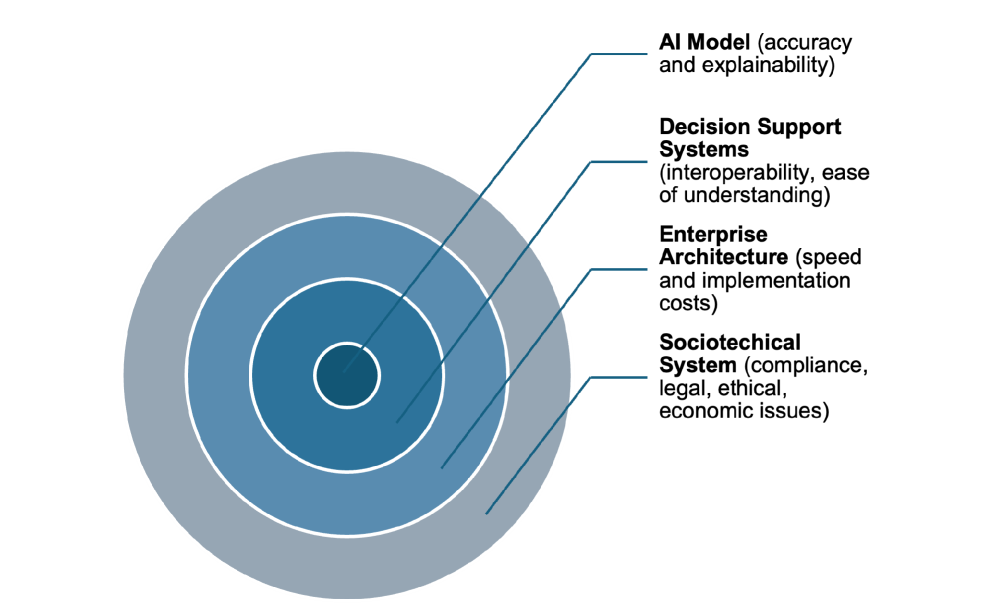

Despite growing demand for transparency in algorithmic decision-making, framing explainability as a simple trade-off with model accuracy overlooks the complex realities of financial applications. This paper, ‘Trade-offs in Financial AI: Explainability in a Trilemma with Accuracy and Compliance’, argues that successful AI deployment in finance hinges on navigating a ‘trilemma’ in which accuracy and regulatory compliance function as non-negotiable prerequisites. Through interviews with finance professionals, we find that operational factors such as cost and speed shape feasibility, while genuine understanding, rather than superficial transparency, determines trust and practical adoption. Ultimately, how can financial institutions move beyond viewing explainability as a constraint and instead leverage it as a driver of responsible innovation?

The Inevitable Reshaping of Financial Decision-Making

Artificial intelligence is fundamentally reshaping financial decision-making processes, moving beyond simple automation to offer predictive analytics and personalized strategies previously unattainable. This transformation extends across numerous sectors, from algorithmic trading and fraud detection to credit risk assessment and customer service. The speed and scale at which AI algorithms can process complex datasets – including market trends, economic indicators, and individual consumer behavior – promise significant gains in efficiency and innovation. By identifying patterns and correlations often missed by human analysts, these systems can optimize investment portfolios, streamline loan approvals, and enhance risk management protocols. Moreover, AI-driven tools are enabling the development of entirely new financial products and services, such as robo-advisors and automated insurance claims processing, thereby increasing accessibility and reducing costs for both institutions and consumers.

The increasing deployment of artificial intelligence in finance introduces substantial risks stemming from the opacity of many ‘black box’ models. These complex algorithms, while potentially delivering superior predictive power, often lack transparency in their decision-making processes, creating challenges for ensuring accuracy and identifying potential biases. This lack of interpretability raises concerns about fairness, as discriminatory outcomes may occur without readily apparent justification, and complicates efforts to meet stringent regulatory requirements designed to protect consumers and maintain market stability. Without the ability to thoroughly audit and understand how these models arrive at their conclusions, financial institutions face escalating legal and reputational risks, hindering the widespread and responsible adoption of AI in critical financial applications.

The escalating volume and intricacy of modern financial data often leave traditional analytical methods unable to discern subtle patterns or anticipate emerging risks. This limitation pushes institutions toward more complex models, which in turn exposes the ‘financial XAI trilemma’: the need to achieve high predictive accuracy, maintain strict regulatory compliance, and ensure explainability at the same time. Unlike simpler models, many advanced AI systems operate as ‘black boxes’, offering predictions without clear rationales, which hinders both human oversight and the fulfillment of increasingly stringent financial regulations. Consequently, the successful adoption of artificial intelligence in finance hinges not only on its predictive power but also on the ability to demonstrate transparently how its predictions are reached, while satisfying the non-negotiable demands of accuracy and compliance that govern the industry.

The Rise of Machine Learning in Modern Financial Methods

Machine learning algorithms are increasingly used in financial risk assessment because they can process large volumes of data and recognize complex patterns. Traditional credit scoring models rely on a limited set of variables, whereas machine learning models can incorporate hundreds or thousands of features, yielding more nuanced and predictive risk profiles. In fraud detection, these algorithms learn from historical data to flag anomalous transactions in real time, and can catch patterns that fixed rule-based systems miss. Supervised learning techniques such as logistic regression, support vector machines, and decision trees are common for classification tasks, while unsupervised methods such as clustering can surface previously unknown fraud patterns. The speed of these automated assessments also enables faster loan approvals and quicker responses to potential fraud, improving operational efficiency and reducing financial losses.
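To make the classification step concrete, here is a minimal sketch of logistic regression, one of the supervised techniques named above, trained by batch gradient descent in plain Python on a tiny, made-up credit dataset. The two features (`debt_to_income` and `missed_payments`) and every number in the data are hypothetical illustrations, not data from the paper; a production system would rely on a tested library and far more features.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X: List[Sequence[float]], y: List[int],
                 lr: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss w.r.t. the linear score is (p - y).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def default_probability(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Predicted probability of default for one applicant."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical applicants: [debt_to_income, missed_payments]; label 1 = defaulted.
X = [[0.1, 0], [0.2, 0], [0.3, 1], [0.4, 0],
     [0.6, 2], [0.7, 1], [0.8, 3], [0.9, 2]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(X, y)
print("weights:", [round(v, 2) for v in w], "bias:", round(b, 2))
print("low-risk applicant:", round(default_probability(w, b, [0.15, 0]), 3))
print("high-risk applicant:", round(default_probability(w, b, [0.85, 3]), 3))
```

Because the fitted model is linear in its inputs, each weight can be read as the change in log-odds of default per unit change in that feature, which is one reason logistic regression remains popular where decisions must be explained.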

Alternative data sources, meaning information beyond conventional financial records, are also increasingly used to enhance financial analysis. They include geolocation data, social media activity, web-scraping results, satellite imagery, and sensor data. This broader scope can reveal non-linear relationships and predictive signals that traditional metrics such as credit scores or income statements do not capture. As a result, alternative data can improve risk assessment, enable more granular customer segmentation, and support novel investment strategies. Integrating these datasets, however, often requires specialized processing techniques and infrastructure to handle their volume, velocity, and variety.

Machine learning models used in financial applications require rigorous validation to establish that they perform consistently across diverse datasets and market conditions. Validation must go beyond simple accuracy metrics to include stress-testing against adverse scenarios and evaluation of performance across demographic groups. Inadequate validation can produce brittle models whose performance degrades sharply on previously unseen data, or models that perpetuate and amplify biases in the training data, leading to unfair or discriminatory outcomes in areas such as loan applications and credit risk assessment. Techniques such as cross-validation, backtesting, and independent validation datasets are crucial for identifying and mitigating these risks and for supporting the reliability and fairness of deployed models.
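Backtesting differs from ordinary shuffled cross-validation in that it must respect time order; otherwise a model can be trained on observations that postdate the ones it is tested on, leaking future information into the evaluation. The following is a minimal sketch of an expanding-window (walk-forward) split in plain Python. The function name and its parameters are illustrative assumptions; scikit-learn's `TimeSeriesSplit` offers a comparable, maintained implementation.

```python
from typing import Iterator, Tuple


def walk_forward_splits(n_samples: int, n_splits: int,
                        min_train: int) -> Iterator[Tuple[range, range]]:
    """Yield (train_idx, test_idx) pairs for an expanding-window backtest.

    Assumes rows are sorted chronologically. Each test window starts
    immediately after its training window, so no future rows are used
    for training (no look-ahead).
    """
    if n_splits < 1 or not 0 < min_train < n_samples:
        raise ValueError("invalid split configuration")
    test_size = (n_samples - min_train) // n_splits
    if test_size == 0:
        raise ValueError("not enough samples for the requested number of splits")
    for k in range(n_splits):
        train_end = min_train + k * test_size
        yield range(0, train_end), range(train_end, train_end + test_size)


# Example: 10 chronologically ordered observations, 3 backtest folds.
for train_idx, test_idx in walk_forward_splits(10, n_splits=3, min_train=4):
    print(f"train {train_idx.start}-{train_idx.stop - 1}, "
          f"test {test_idx.start}-{test_idx.stop - 1}")
# train 0-3, test 4-5
# train 0-5, test 6-7
# train 0-7, test 8-9
```

Each fold trains on everything before its test window; a rolling window of fixed length is a common alternative when older market data is thought to be less relevant.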

Demystifying the ‘Black Box’: The Imperative of Explainable AI

Explainable AI (XAI) encompasses methods and techniques designed to make machine learning models more transparent and interpretable. Complex models such as deep neural networks can function as ‘black boxes’ whose reasoning behind a prediction is opaque. XAI addresses this by providing tools to dissect the model’s decision-making process, so that users can understand how a prediction was made, not just that it was made. Common approaches highlight the most important features, decompose individual predictions into per-feature contributions, or approximate a complex model with a simpler, interpretable surrogate. The goal is to move beyond high accuracy alone toward understanding the logic, and any biases, embedded in the model.
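One widely used way to highlight important features is permutation importance: shuffle a single feature column, re-score the model, and treat the resulting drop in accuracy as that feature's importance. The sketch below only calls the model as a black box, so it is model-agnostic; the threshold `model` and the synthetic data are hypothetical stand-ins for an opaque production model and a held-out dataset.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: List[List[float]], y: List[int]) -> float:
    """Fraction of rows the model classifies correctly."""
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[int],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only column j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical opaque model: flags a transaction when feature 0 exceeds 0.5
# and ignores feature 1 entirely.
def model(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)


X = [[0.1, 5.0], [0.2, 1.0], [0.3, 4.0], [0.4, 2.0],
     [0.6, 3.0], [0.7, 6.0], [0.8, 0.0], [0.9, 7.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(permutation_importance(model, X, y))
```

A feature the model ignores scores exactly zero, while the feature driving its decisions shows a clear drop; in practice the scores are usually computed on held-out data rather than the training set.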

SHAP (SHapley Additive exPlanations) values apply the Shapley value from cooperative game theory to explain the output of any machine learning model. Each feature is treated as a player, and its SHAP value is the weighted average of its marginal contribution to the prediction over all possible subsets of the other features. The resulting value measures how much that feature moves the model’s output away from the base value, the average prediction over a reference dataset. Positive values indicate that the feature pushed the prediction up, negative values that it pushed it down, and the magnitude reflects the strength of that contribution. Because the SHAP values for all features sum to the difference between the model’s prediction for a specific instance and the base value, they provide a complete additive explanation of that prediction.
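The averaging described above can be written out directly. The sketch below computes exact Shapley values by enumerating every subset S of the other features and weighting each marginal contribution by |S|!(n - |S| - 1)!/n!. It assumes an interventional value function, in which features outside a coalition are filled in from a small background dataset, and a made-up scoring function `score`. The number of coalitions grows exponentially with the number of features, which is why the `shap` library relies on approximations such as KernelSHAP and model-specific algorithms such as TreeSHAP.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set, Tuple

Model = Callable[[Sequence[float]], float]


def coalition_value(model: Model, x: Sequence[float],
                    background: List[Sequence[float]], subset: Set[int]) -> float:
    """Average output when features in `subset` come from x and the rest
    come from each background row (an interventional value function)."""
    total = 0.0
    for row in background:
        z = [x[j] if j in subset else row[j] for j in range(len(x))]
        total += model(z)
    return total / len(background)


def exact_shapley(model: Model, x: Sequence[float],
                  background: List[Sequence[float]]) -> Tuple[float, List[float]]:
    """Return (base_value, phi) so that base_value + sum(phi) == model(x)."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                gain = (coalition_value(model, x, background, s | {i})
                        - coalition_value(model, x, background, s))
                phi[i] += weight * gain
    base_value = coalition_value(model, x, background, set())
    return base_value, phi


# Hypothetical score with an interaction between features 0 and 2.
def score(z: Sequence[float]) -> float:
    return 0.5 * z[0] - 0.2 * z[1] + 0.3 * z[0] * z[2]


background = [[0.0, 0.0, 1.0], [1.0, 2.0, 0.0]]
applicant = [1.0, 1.0, 1.0]
base, phi = exact_shapley(score, applicant, background)
print("base:", base, "phi:", phi, "sum:", base + sum(phi), "f(x):", score(applicant))
```

For a linear model under this value function, each SHAP value reduces to the weight times the gap between the instance's feature value and the background mean, which makes a handy sanity check.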

XAI is increasingly vital for compliance with regulations such as the GDPR and industry-specific guidelines that mandate transparency in automated decision-making. Beyond legal requirements, XAI helps demonstrate algorithmic fairness by making it possible to identify and mitigate bias in model predictions. This transparency builds trust with stakeholders, including customers, auditors, and internal teams, who need to understand why a model arrived at a specific outcome. Within the ‘financial XAI trilemma’, the usability and defensibility of an AI system in financial applications depend directly on how easily its reasoning can be understood; XAI is therefore not merely a desirable feature but a core component of successful and responsible AI deployment in regulated industries.
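As one concrete example of checking for bias, the sketch below compares approval rates across groups and reports the ratio of the lowest rate to the highest. The group labels and counts are invented, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an illustrative screening heuristic rather than a legal test for credit decisions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of approved applications per group."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: a / t for group, (a, t) in counts.items()}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any approvals")
    return min(rates.values()) / highest


# Hypothetical decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3), "flag for review" if ratio < 0.8 else "ok")
```

A low ratio does not by itself prove discrimination, and a ratio above the threshold does not prove fairness; it is a screening signal that attribution methods such as SHAP can then help investigate.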

Toward a Future of Responsible Innovation in Finance

A transformative wave is reshaping the financial landscape, fueled by the convergence of machine learning, alternative data sources, and explainable AI (XAI). Traditional financial modeling, often reliant on limited historical data, is giving way to systems that ingest and analyze a far broader range of information – from social media sentiment to satellite imagery – to identify patterns and predict market trends. However, the true innovation lies not simply in what these systems analyze, but in how. Explainable AI is crucial, moving beyond ‘black box’ algorithms to provide clear, understandable rationales for financial decisions. This increased transparency is not merely a matter of compliance, but a fundamental shift towards building trust and accountability within a sector increasingly reliant on complex automated processes, ultimately fostering a more responsible and sustainable financial ecosystem.

The increasing integration of artificial intelligence into financial systems is prompting a corresponding evolution in regulatory frameworks worldwide. Regulators are no longer solely focused on the outcomes of financial decisions, but are now intensely scrutinizing the processes by which those decisions are made – specifically, the ‘black box’ nature of many AI algorithms. This shift demands greater transparency, requiring institutions to demonstrate not only that their AI systems are accurate and compliant, but also that they are understandable and auditable. Consequently, financial institutions are facing pressure to implement robust model risk management frameworks, enhanced data governance practices, and explainable AI (XAI) techniques to satisfy evolving regulatory expectations and foster public trust in AI-driven financial services. The goal is to proactively address potential biases, ensure fairness, and maintain market stability in an era of increasingly complex algorithmic trading and automated financial decision-making.

Building a resilient financial future depends on proactively addressing the ethical dimensions of artificial intelligence and implementing rigorous validation procedures. AI systems in finance face the ‘financial XAI trilemma’: high predictive accuracy and adherence to complex regulatory standards are baseline requirements, and interpretability for stakeholders then determines whether a model can actually be used. Navigating this requires a shift toward Explainable AI (XAI), in which models are not simply ‘black boxes’ but provide clear rationales for their decisions. Robust validation goes beyond performance metrics to include fairness assessments that mitigate bias, stress-testing under adverse conditions, and continuous monitoring to ensure long-term reliability. Prioritizing these elements is not merely a matter of risk management; it is foundational to building trust in AI-driven financial systems and fostering a sustainable ecosystem in which innovation and responsibility coexist.
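For the continuous-monitoring step, one metric commonly used in credit-risk practice is the Population Stability Index (PSI), which compares the distribution of a model input or score in production against the distribution seen at validation time: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the expected and actual bin proportions. The sketch below assumes the values have already been binned into matching buckets; the small epsilon guarding empty bins and the bucket counts are illustrative assumptions, not details from the paper.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as counts or proportions."""
    if len(expected) != len(actual):
        raise ValueError("bin counts must match")
    e_total, a_total = sum(expected), sum(actual)
    psi = 0.0
    for e, a in zip(expected, actual):
        # Normalise to proportions; eps avoids log(0) for empty bins.
        e_p = max(e / e_total, eps)
        a_p = max(a / a_total, eps)
        psi += (a_p - e_p) * math.log(a_p / e_p)
    return psi


# Hypothetical score-band counts at validation time vs. this month.
validation = [100, 200, 400, 200, 100]
production = [60, 150, 380, 260, 150]
psi = population_stability_index(validation, production)
print(round(psi, 4))
```

Each term is non-negative, so PSI is zero only when the binned distributions match; a rising value signals drift worth investigating rather than proof that the model is broken, and alert thresholds such as the often-quoted 0.1 and 0.25 are conventions rather than standards.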

The pursuit of artificial intelligence in finance demands a precision mirroring that of mathematical proof. This article highlights a critical trilemma of accuracy, compliance, and explainability in which all three are essential, rather than a binary choice between two. A remark attributed to Alan Turing holds that “Sometimes people who are untutored are more creative than someone who has a lot of training.” This sentiment resonates with the need for models that are not only accurate and compliant but also understandable within their operational context. A technically flawless yet opaque algorithm offers no practical advantage; its logic must be demonstrably sound, akin to a theorem, to gain acceptance and function within the stringent requirements of financial regulation. The emphasis is not solely on whether it works but on why it works, reflecting a fundamentally rigorous approach to problem-solving.

What Remains Invariant?

The framing of explainability as merely a trade-off with accuracy feels… quaint. This work rightly shifts the discourse to a trilemma – accuracy and compliance not as optional concessions, but as foundational requirements. Yet, even this reframing skirts the truly fundamental question. Let N approach infinity – what remains invariant? Operational constraints, regulatory pressures, and the inherent complexity of financial instruments are not transient problems to be ‘solved’ with better algorithms. They are the system.

Future research must abandon the pursuit of ‘perfect’ explainability, a chimera fueled by the desire for intuitive understanding. Instead, the focus should be on provable properties of these systems. Can one mathematically demonstrate that a model, while opaque to human inspection, adheres to pre-defined regulatory boundaries under all foreseeable conditions? This is not about making AI ‘friendly’; it’s about establishing verifiable guarantees.

The ease with which a model’s outputs can be understood is then relegated to its proper place: a secondary engineering concern, influencing adoption rates but irrelevant to the core question of systemic risk. The true measure of progress will not be algorithms that ‘explain themselves,’ but systems that can be formally proven to be safe, compliant, and robust – even if nobody truly understands why.

Original article: https://arxiv.org/pdf/2602.01368.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-03 13:13