Author: Denis Avetisyan

Researchers have developed a novel approach to forecasting individual actions by integrating psychological traits with the power of large language models.

Fine-tuning language models with structured psychometric data significantly improves the accuracy of high-fidelity behavioral prediction at the individual level.

Accurately modeling human decision-making remains a persistent challenge for artificial intelligence, particularly when predicting individual behavior in complex scenarios. This is addressed in ‘Decoding the Human Factor: High Fidelity Behavioral Prediction for Strategic Foresight’, which introduces the Large Behavioral Model (LBM), a fine-tuned large language model conditioned on detailed psychometric profiles to enhance behavioral prediction. The LBM demonstrates that fine-tuning and increasing trait dimensionality significantly improve performance over prompting-based approaches and foundational models. Could this scalable approach unlock new capabilities in areas like strategic foresight, negotiation analysis, and cognitive security by offering a more nuanced understanding of the human element in complex systems?

Deconstructing Prediction: The Limits of Averaged Behavior

Conventional behavioral models often falter when forecasting individual responses in multifaceted situations. They typically average behavior across large groups and assume homogeneity within them, which overlooks the substantial variability in how people act. People do not simply react to stimuli: their responses emerge from an interplay of stable personality traits, immediate contextual cues, and prior experience. A person's characteristic level of impulsivity, or the social pressure of the moment, can shift a decision in ways a group average cannot capture. Predictions built on generalized trends are therefore unreliable for specific individuals in real-world settings, where slight variations in circumstance can dramatically alter outcomes, and this hinders work in fields that demand precise foresight.

The core limitation, then, is moving beyond group averages to forecast what a single individual will do in a given situation. That requires predictive systems that capture the interplay between a person's trait profile (personality, values, and beliefs) and the contextual variables at play. Rather than simply categorizing individuals, such a system would assess how a person's predispositions are expressed or suppressed by the immediate environment, yielding a more granular and accurate picture of likely behavior.

The capacity to anticipate individual behavior holds transformative potential across diverse fields, extending far beyond theoretical interest. In strategic decision-making, for instance, accurately forecasting the actions of competitors, allies, or even adversaries becomes paramount for optimizing outcomes and mitigating risks. Similarly, personalized interventions in healthcare, education, and public policy hinge on the ability to predict how a specific individual will respond to a given stimulus or treatment. This precision allows for tailored approaches, maximizing effectiveness and minimizing unintended consequences – from designing targeted therapies for mental health to crafting educational programs that cater to individual learning styles. Ultimately, a robust system for behavioral prediction promises not only a deeper understanding of human nature but also the tools to navigate complex social systems and improve individual well-being through proactive, informed action.

The Behavioral Foundation: Building a Predictive Engine

The Large Behavioral Model (LBM) is built on a Behavioral Foundation Model, initially trained on extensive datasets of human decision-making. This pre-training phase exposes the model to a broad range of behavioral patterns, enabling it to establish a foundational understanding of human choices. The scale of the pre-training data is critical: it lets the model generalize and form robust internal representations of behavioral tendencies before task-specific adaptation. Compared with training a model from scratch, this approach offers an advantage in both data efficiency and overall performance.

The Behavioral Foundation Model is then refined with Supervised Fine-Tuning (SFT) and LoRA. SFT adjusts the model using labeled examples from a proprietary dataset, optimizing it for specific behavioral prediction tasks. LoRA (Low-Rank Adaptation) makes this adaptation parameter-efficient: the pre-trained weights are kept frozen and only a small set of added low-rank matrices is trained. This reduces computational cost and helps preserve the pre-trained model's knowledge, limiting catastrophic forgetting, while the model adapts to the proprietary dataset. Together, the two allow efficient, targeted customization of the foundation model and improve its ability to generalize to new behavioral data.
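To make the LoRA idea concrete, here is a minimal, framework-free sketch in Python. It illustrates the general technique, not the paper's implementation: the layer size, the rank, the `alpha` scaling factor, and the class name `LoRALinear` are assumptions, and the gradient updates to `A` and `B` are omitted. The frozen weight `W` is never modified; the layer's output is `W x + (alpha / rank) * B (A x)`.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Illustrative LoRA layer (hypothetical name): frozen W plus a trainable low-rank update."""

    def __init__(self, weight, rank, alpha=16.0, seed=0):
        self.weight = weight  # frozen pre-trained weights, shape d_out x d_in
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A (rank x d_in) starts with small random values and B (d_out x rank) starts at
        # zero, so before any training the layer reproduces the pre-trained output exactly.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)               # frozen path
        update = matvec(self.B, matvec(self.A, x))  # low-rank path, the only trainable part
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        # Only A and B would receive gradient updates during fine-tuning.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def frozen_params(self):
        return len(self.weight) * len(self.weight[0])


if __name__ == "__main__":
    d = 512  # assumed layer width, for illustration only
    W = [[0.001 * (i - j) for j in range(d)] for i in range(d)]
    layer = LoRALinear(W, rank=8)
    print(layer.frozen_params(), layer.trainable_params())  # 262144 8192
```

For a 512 x 512 layer at rank 8, the adapter trains 8,192 parameters against 262,144 frozen ones, about 3% of the layer. Production work would normally rely on a library such as Hugging Face's PEFT rather than hand-rolled code like this.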

The Large Behavioral Model (LBM) incorporates a Structured Trait Representation to inform its predictions. This representation utilizes High-Dimensional Trait Profiles, which are derived from the established Big Five Personality Traits – Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. These traits are not assessed as simple scores, but are expanded into a high-dimensional vector space, allowing for a more nuanced and detailed representation of individual behavioral characteristics. This approach enables the model to condition its outputs on a comprehensive understanding of personality, moving beyond basic trait categorization to capture subtle variations in behavioral tendencies.
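The article does not specify how the trait profiles are encoded, so the following is only a sketch of what a structured trait representation might look like: named scores on an assumed 0-to-1 scale, emitted in a fixed schema order so that every profile maps to a vector of the same shape. The three dimensions added beyond the Big Five (`risk_tolerance`, `impulsivity`, `need_for_cognition`) are hypothetical placeholders, not the paper's traits.

```python
from dataclasses import dataclass

BIG_FIVE = ("openness", "conscientiousness", "extraversion", "agreeableness", "neuroticism")
# Hypothetical extra dimensions, standing in for whatever finer-grained traits a real profile uses.
EXPANDED_SCHEMA = BIG_FIVE + ("risk_tolerance", "impulsivity", "need_for_cognition")


@dataclass
class TraitProfile:
    """Named trait scores on an assumed 0-1 scale."""
    scores: dict[str, float]

    def __post_init__(self):
        # Reject scores outside the assumed scale early, before they reach a model.
        for name, value in self.scores.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} is outside [0, 1]")

    def to_vector(self, schema):
        """Return scores in schema order; a trait missing from the profile raises KeyError."""
        return [self.scores[name] for name in schema]


profile = TraitProfile({
    "openness": 0.72, "conscientiousness": 0.41, "extraversion": 0.30,
    "agreeableness": 0.65, "neuroticism": 0.58,
    "risk_tolerance": 0.80, "impulsivity": 0.35, "need_for_cognition": 0.67,
})
print(len(profile.to_vector(BIG_FIVE)), len(profile.to_vector(EXPANDED_SCHEMA)))  # 5 8
```

The fixed ordering is the design choice that matters here: it lets profiles of different dimensionality be compared and passed to a model consistently.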

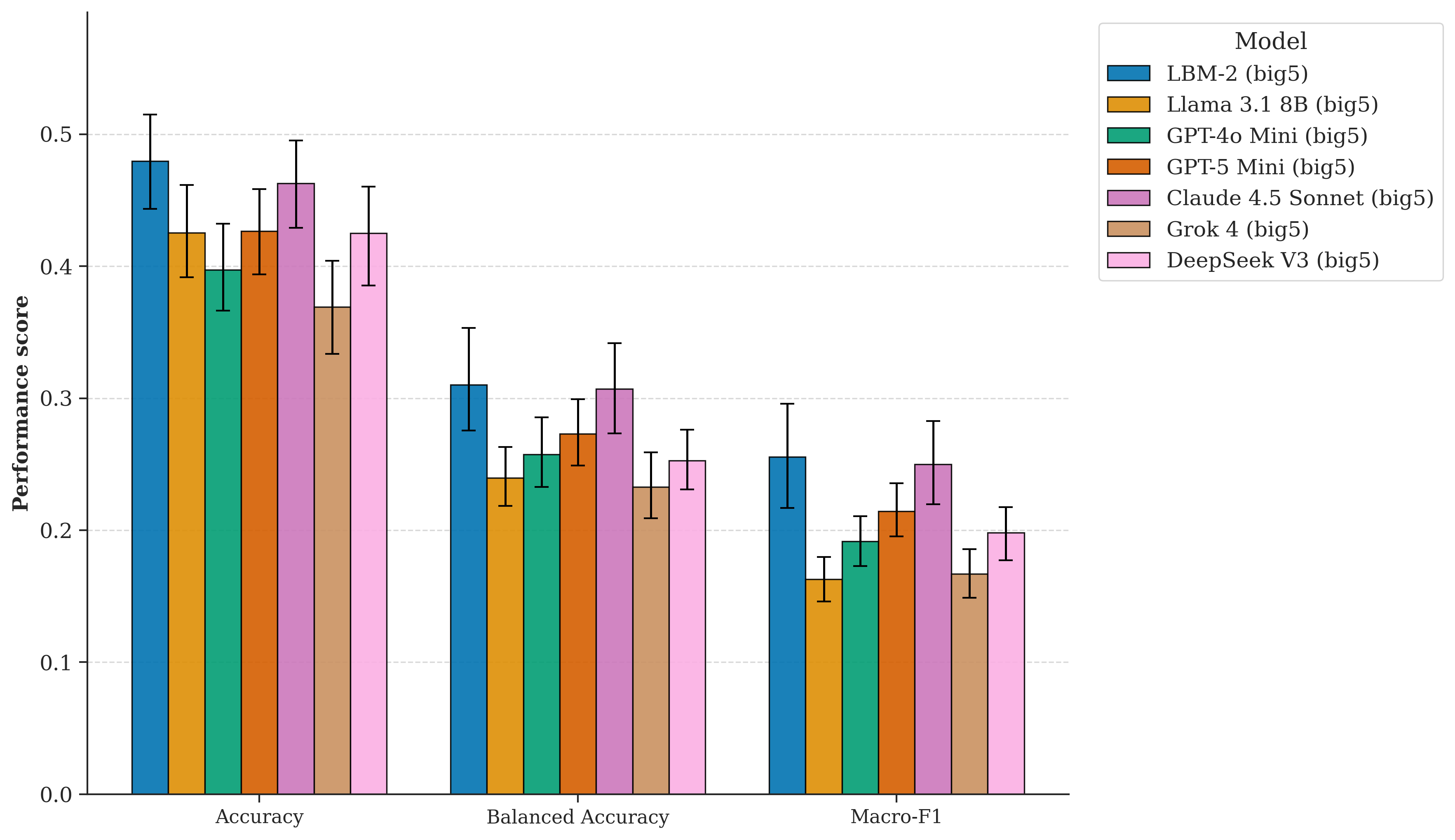

After supervised fine-tuning and LoRA on the proprietary dataset, the Large Behavioral Model improves accuracy by 16% over the pre-trained base model, reaching an overall accuracy of 0.48. Accuracy here is the share of behavioral outcomes the model predicts correctly under the test conditions defined for the proprietary dataset.

Unlocking Agency: Persona Prompting and Contextual Awareness

Persona prompting supplies a defined trait profile directly to the Large Language Model (LLM) as part of the initial prompt, which avoids the need for extensive fine-tuning or retrieval-augmented generation to give the model a specific personality. The profile, covering characteristics such as openness, conscientiousness, extraversion, agreeableness, and neuroticism, is passed to the LLM in a structured format and acts as a set of behavioral guidelines during generation. Conditioned on this information, the model adjusts the style, content focus, and overall character of its output, so that it does not simply state facts but delivers them as an individual with the specified attributes would.
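A sketch of how a trait profile might be rendered into a persona prompt for a behavioral prediction query. The prompt wording, the 0-to-1 scale, the numbered-option answer format, and the helper names `build_persona_prompt` and `parse_choice` are assumptions for illustration; the paper's actual template is not given here, and the LLM call itself is left out.

```python
import re
from typing import Optional


def build_persona_prompt(traits: dict[str, float], scenario: str, options: list[str]) -> str:
    """Render a trait profile and a decision scenario as one structured prompt (hypothetical format)."""
    lines = ["You are simulating one specific person with this trait profile "
             "(0 = very low, 1 = very high):"]
    for name, score in sorted(traits.items()):  # sorted for a stable, reproducible order
        lines.append(f"- {name}: {score:.2f}")
    lines += ["", f"Scenario: {scenario}", "", "Options:"]
    lines += [f"{i}. {option}" for i, option in enumerate(options, start=1)]
    lines += ["", "Reply with only the number of the option this person would most likely choose."]
    return "\n".join(lines)


def parse_choice(reply: str, n_options: int) -> Optional[int]:
    """Extract the first option number from a model reply; None if absent or out of range."""
    match = re.search(r"\b(\d+)\b", reply)
    if match and 1 <= int(match.group(1)) <= n_options:
        return int(match.group(1))
    return None


prompt = build_persona_prompt(
    {"openness": 0.72, "neuroticism": 0.58},
    "A rival firm proposes a joint venture on short notice.",
    ["Accept immediately", "Negotiate for better terms", "Decline"],
)
print(prompt)
```

Constraining the reply to an option number keeps the model's output machine-checkable, which is what makes metrics such as accuracy and Macro-F1 computable in the first place.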

Effective processing of complex Strategic Dilemmas by Large Language Models (LLMs) necessitates the utilization of long context windows. These extended input capacities allow the LLM to ingest and retain a significantly larger volume of relevant information pertaining to the dilemma, including background details, contributing factors, and potential consequences. Without sufficient context, the model risks generating responses based on incomplete data, leading to inaccurate or suboptimal strategic recommendations. The ability to consider all pertinent information within the context window is therefore critical for ensuring a comprehensive and well-informed analysis of the strategic challenge.
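Even a long context window is finite, so a system that feeds strategic dilemmas to an LLM still has to decide what to include. The sketch below shows one simple policy: keep the highest-priority sections that fit a token budget, then restore their original order. The section names, the priority scheme, and the whitespace word count used as a stand-in for a real tokenizer are all assumptions; nothing here describes how the paper assembled its inputs.

```python
from dataclasses import dataclass


@dataclass
class ContextSection:
    title: str
    text: str
    priority: int  # lower number = more important (hypothetical convention)


def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (a real system would use the model's tokenizer)."""
    return len(text.split())


def pack_context(sections: list[ContextSection], budget: int) -> tuple[str, list[str]]:
    """Greedily keep the most important sections that fit the budget, in original order.

    Returns the packed context and the titles of the sections that were dropped.
    """
    kept, used = set(), 0
    # sorted() is stable, so sections with equal priority keep their original order.
    for index, section in sorted(enumerate(sections), key=lambda pair: pair[1].priority):
        cost = approx_tokens(section.text)
        if used + cost <= budget:
            kept.add(index)
            used += cost
    packed = "\n\n".join(f"{s.title}:\n{s.text}" for i, s in enumerate(sections) if i in kept)
    dropped = [s.title for i, s in enumerate(sections) if i not in kept]
    return packed, dropped


sections = [
    ContextSection("Background", "Two firms compete for the same regional market.", 0),
    ContextSection("History", "They have clashed over pricing three times in the past decade, "
                              "each time ending in a costly price war.", 2),
    ContextSection("Stakes", "A failed negotiation could cost both firms major clients.", 1),
]
context, dropped = pack_context(sections, budget=20)
print(dropped)  # ['History']
```

A larger window simply raises the budget, so fewer sections are dropped; the sketch makes visible the trade-off the paragraph above describes.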

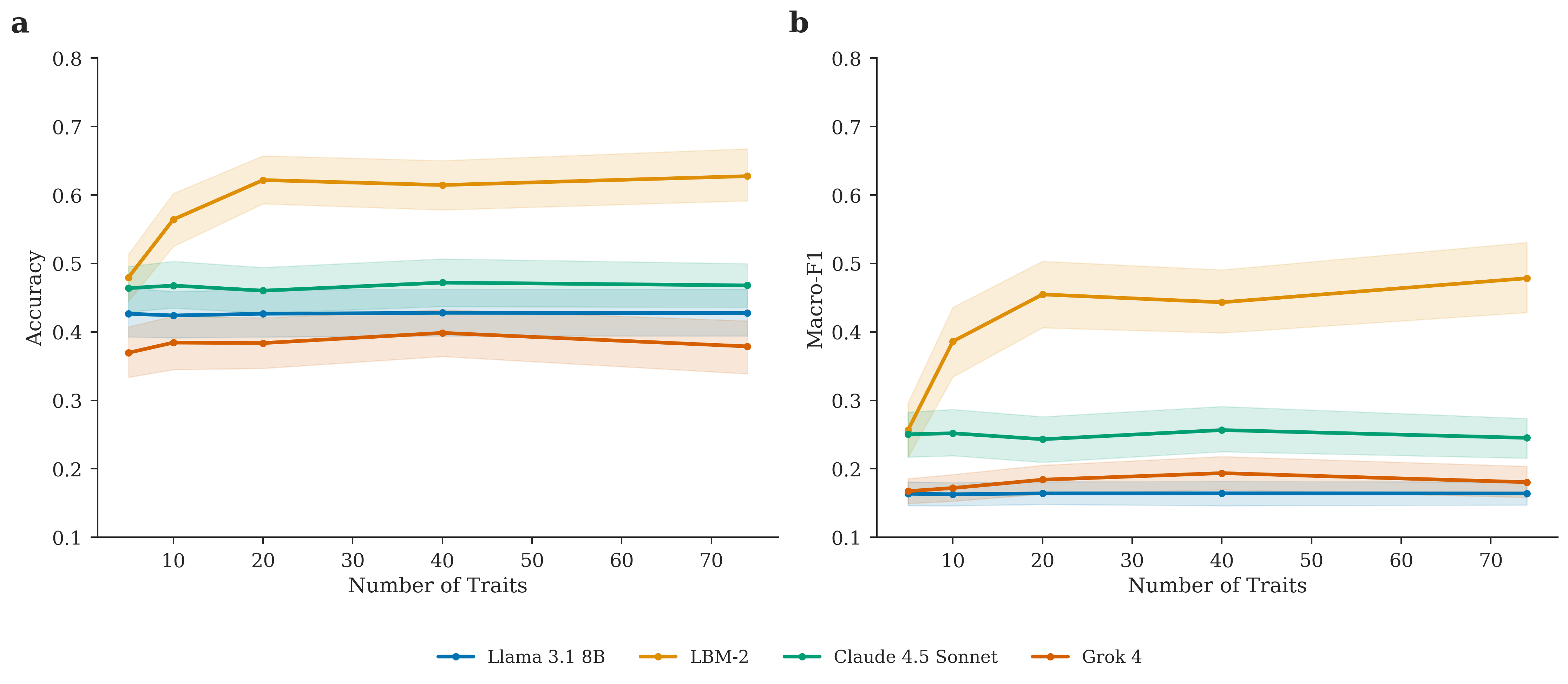

Performance evaluations show a substantial improvement when the persona is defined by a 20-trait profile rather than a lower-dimensional one: models using this dimensionality reached an accuracy of 0.62 and a Macro-F1 of 0.45. These figures indicate a quantifiable benefit from increasing the number of traits used to define the persona, suggesting that finer trait granularity improves the model's ability to predict contextually appropriate behavior.

Beyond Prediction: Validating Performance and Measuring Impact

The Large Behavioral Model (LBM) shows marked gains in predictive performance, assessed with Balanced Accuracy and Macro-F1. Unlike plain accuracy, both metrics weight every outcome class equally, so they are not inflated by a model that simply favors the majority class in an imbalanced dataset. Improvements on both therefore mean that the LBM predicts correctly more often and does so more consistently across all categories, including those with few instances. This suggests the LBM could offer real benefits in fields that require reliable foresight, such as strategic decision-making and the design of targeted interventions.
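The difference between plain accuracy and the two class-balanced metrics is easiest to see on a small imbalanced example. The functions below follow the standard definitions (balanced accuracy as the mean of per-class recall, Macro-F1 as the unweighted mean of per-class F1, as computed by scikit-learn's `balanced_accuracy_score` and `f1_score(average="macro")`); the `cooperate`/`defect` labels are a hypothetical outcome set, not the paper's.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so each true class counts equally regardless of its size."""
    recalls = []
    for c in sorted(set(y_true)):
        hits = [p == c for t, p in zip(y_true, y_pred) if t == c]
        recalls.append(sum(hits) / len(hits))
    return sum(recalls) / len(recalls)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, where F1 is the harmonic mean of precision and recall."""
    scores = []
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return sum(scores) / len(scores)


# Imbalanced toy data: a model that always predicts the majority outcome.
y_true = ["cooperate"] * 8 + ["defect"] * 2
y_pred = ["cooperate"] * 10
print(f"{accuracy(y_true, y_pred):.2f} "
      f"{balanced_accuracy(y_true, y_pred):.2f} "
      f"{macro_f1(y_true, y_pred):.2f}")  # 0.80 0.50 0.44
```

Plain accuracy rewards the majority-class guesser with 0.80, while balanced accuracy falls to 0.50 (chance level for two classes) and Macro-F1 to 0.44. That is why the class-balanced metrics are the more demanding yardstick.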

With Supervised Fine-Tuning (SFT), the LBM reaches a Balanced Accuracy of 0.31, a 23% improvement over the foundational, untuned model. Because Balanced Accuracy averages recall over classes, this gain reflects better prediction across all classes, not just the majority. It highlights how effectively SFT calibrates the LBM for prediction, positioning it as a potentially useful tool in areas that demand precise and reliable forecasting.

The LBM also reaches a Macro-F1 score of 0.26, a 63% improvement over the foundational model. Macro-F1 computes the F1 score (the harmonic mean of precision and recall) separately for each class and averages the results without weighting by class size, so a higher value indicates more reliable prediction across classes, particularly when some outcomes are rare. The gain suggests the LBM identifies behavioral patterns more reliably than the base model, rather than merely tracking dominant trends.
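The article reports gains as percentages without stating the baseline scores. If those percentages are relative gains (score = baseline × (1 + gain)), the baselines they imply can be recovered as below. This interpretation is an assumption, although the Macro-F1 figure at least cannot be an absolute gain in percentage points, since 0.63 exceeds the final score of 0.26. The printed values are arithmetic consequences of the reported numbers, not results stated in the paper.

```python
def implied_baseline(score: float, relative_gain_pct: float) -> float:
    """Baseline implied if `score` is `relative_gain_pct` percent above it (relative-gain assumption)."""
    return score / (1 + relative_gain_pct / 100)


# (final score, reported gain in percent), as stated in the article.
REPORTED = {
    "accuracy": (0.48, 16),
    "balanced accuracy": (0.31, 23),
    "macro-F1": (0.26, 63),
}

for metric, (score, gain) in REPORTED.items():
    print(f"{metric}: {score:.2f} after fine-tuning, about {implied_baseline(score, gain):.2f} before")
# accuracy: 0.48 after fine-tuning, about 0.41 before
# balanced accuracy: 0.31 after fine-tuning, about 0.25 before
# macro-F1: 0.26 after fine-tuning, about 0.16 before
```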

These gains suggest the Large Behavioral Model (LBM) could reshape approaches in several fields. Strategic forecasting, traditionally reliant on complex statistical analysis and expert intuition, stands to benefit from the LBM's ability to discern underlying patterns and project outcomes more precisely. The results also point to individualized interventions: by modeling behavior at the level of a person's trait profile, the LBM could support personalized strategies in healthcare, education, and behavioral science, tailored to each individual's dispositions. Such improvements would mark a shift toward a more nuanced and effective understanding of complex systems and human behavior.

The Road Ahead: Scaling, Generalization, and Dynamic Modeling

Continued development hinges on significantly broadening the scope of the Proprietary Dataset, a crucial step towards building a more robust and universally applicable model. Current efforts are directed at incorporating a greater diversity of real-world scenarios, moving beyond the initially tested conditions to include a wider array of contextual factors influencing behavior. Simultaneously, the dataset is being expanded to represent a more inclusive range of demographic groups, addressing potential biases and ensuring equitable performance across different populations. This expansion isn’t merely about increasing the volume of data; it’s about cultivating a dataset that accurately reflects the complexity and heterogeneity of human experience, ultimately bolstering the model’s predictive capabilities and generalizability to previously unseen individuals and situations.

The LBM’s adaptability hinges on overcoming the limitations of data-intensive machine learning models; therefore, future development prioritizes few-shot and transfer learning techniques. These approaches aim to enable the model to accurately perform new tasks with minimal training examples, drastically reducing the need for extensive, labeled datasets. Few-shot learning will allow the LBM to quickly adapt to novel situations by leveraging prior knowledge, while transfer learning will facilitate the application of insights gained from one behavioral domain to another. This combined strategy promises a significantly more versatile and broadly applicable LBM, capable of predicting human behavior across a wider range of contexts and populations, even when faced with limited data for specific scenarios.

The capacity to incorporate live behavioral data streams represents a significant advancement for the LBM framework, moving beyond static prediction towards dynamic, responsive modeling. By continuously analyzing incoming data – such as eye movements, physiological signals, or even digital interactions – the model can refine its predictions in real-time, adapting to an individual’s evolving state and immediate context. This capability unlocks the potential for truly personalized interventions, tailoring recommendations or support precisely when and how it’s most effective. Rather than simply forecasting likely outcomes, the LBM could become an active component within a system, adjusting strategies based on observed behavior and maximizing the impact of any applied intervention – a crucial step towards proactive and adaptive behavioral science.
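The article does not say how live data would be folded into predictions. One standard way to turn a static prediction into a belief that updates with each new observation is Beta-Bernoulli updating, sketched below as a swapped-in illustration, not the LBM's method. It assumes the stream has already been reduced to binary observations of whether the person took a given action; mapping raw signals such as eye movements or physiological readings to such events is a separate problem not addressed here. The class name and the `strength` parameter, which sets how many observations the static prediction is worth, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BehaviorBelief:
    """Beta(alpha, beta) belief that a person takes a given action (hypothetical helper)."""
    alpha: float
    beta: float

    @classmethod
    def from_prediction(cls, p: float, strength: float = 10.0) -> "BehaviorBelief":
        # Treat the static model's probability p as `strength` pseudo-observations.
        return cls(alpha=p * strength, beta=(1.0 - p) * strength)

    def update(self, took_action: bool) -> None:
        # Conjugate update: each observed event adds one count to the matching side.
        if took_action:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        """Current estimate of the probability that the person takes the action."""
        return self.alpha / (self.alpha + self.beta)


belief = BehaviorBelief.from_prediction(0.3)  # static prediction: 30% chance
for observed in [True, True, True, False, True]:  # hypothetical streamed events
    belief.update(observed)
print(f"{belief.mean:.2f}")  # 0.47
```

A larger `strength` makes the estimate trust the static prediction for longer; a smaller one lets observed behavior dominate sooner.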

The Large Behavioral Model (LBM) addresses key limitations of current behavioral prediction systems and offers a scalable framework potentially applicable to fields ranging from public health and urban planning to marketing and personalized education. By identifying behavioral patterns in complex data, the LBM forecasts what individuals might do and begins to illuminate why, creating opportunities for proactive intervention and better resource allocation. Its learned representations could also support simulating different scenarios and assessing potential outcomes, turning behavioral understanding into a predictive and preventative science. Ultimately, the LBM's potential lies in transforming raw behavioral data into actionable insights, deepening our understanding of human motivation and enabling more effective strategies across many domains.


The pursuit of predicting human behavior, as detailed in this exploration of Large Behavioral Models, inherently necessitates a willingness to dismantle conventional approaches. The study’s focus on fine-tuning and trait dimensionality isn’t merely about optimization; it’s about reverse-engineering the complexities of individual differences. As Donald Davies observed, “A bug is the system confessing its design sins.” Similarly, inaccuracies in behavioral prediction reveal fundamental gaps in our understanding of the underlying ‘design’ – the interplay of psychometric traits and their influence on strategic actions. The LBM, in its iterative refinement, acknowledges these ‘sins’ and strives for a more honest, predictive model of human intention.

Beyond the Algorithm: Charting Unseen Territories

The pursuit of predictive behavioral models invariably reveals the inadequacies of the models themselves. By establishing a foundation for trait-informed large language models, this work does not so much solve the problem of human prediction as relocate its most pressing questions. The improvements observed through fine-tuning and increased trait dimensionality suggest that the shape of trait space (how these characteristics interrelate) is as crucial as the traits themselves. Future iterations must abandon the assumption of neat compartmentalization and explore the chaotic interplay of psychometric factors.

Every exploit starts with a question, not with intent. The current architecture, while demonstrably effective, remains susceptible to the inherent noise of human action. The model predicts tendencies accurately, but true prediction requires anticipating the deviations: the irrational, the impulsive, the uniquely human responses to unforeseen circumstances. A deeper investigation into the model's failure cases, rather than its successes, will likely prove more illuminating.

The ultimate horizon isn't simply better prediction, but a systemic understanding of the limits of prediction itself. Can a model, however sophisticated, truly account for the emergent properties of consciousness? Or are some behaviors, by their very nature, destined to remain beyond algorithmic capture? The exploration of this boundary, the point where prediction collapses into irreducible complexity, represents the next frontier.

Original article: https://arxiv.org/pdf/2602.17222.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-22 11:34